AI Center Modules

The Coralogix AI Observability platform offers a variety of tools for tracking, troubleshooting, and assessing the performance of your LLM-powered applications.

AI Center Overview

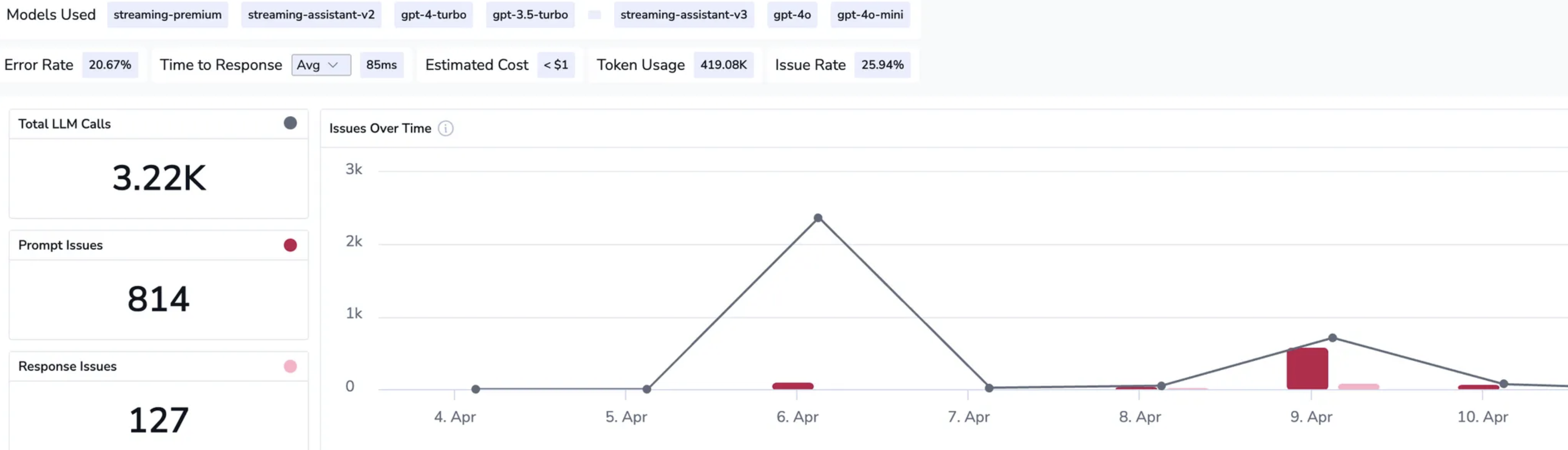

The AI Center Overview consolidates observability metrics from all your AI applications in Coralogix, covering cost, performance, errors, security, and usage trends. The dashboard charts error rates, response times, token consumption, and detected issues over time, so teams can monitor and optimize their AI applications from a single, centralized view of application health.

Application Catalog

The Application Catalog lists every AI application in the platform alongside its key observability metrics: error rates, security and safety issues, token usage, costs, and response times. Users can compare applications, analyze trends over time, and sort by key performance indicators, which supports monitoring, troubleshooting, and cost optimization across all of their AI applications.
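
To make the idea of comparing applications by key performance indicators concrete, here is a minimal Python sketch. It is purely illustrative and does not use any Coralogix API: the `AppMetrics` record, the `rank_apps` helper, the field names, and the sample figures are all hypothetical stand-ins for the kind of per-application data the catalog displays.

```python
from dataclasses import dataclass


@dataclass
class AppMetrics:
    """Hypothetical per-application summary (not a Coralogix data model)."""
    name: str
    calls: int
    errors: int
    total_tokens: int
    cost_usd: float
    avg_response_ms: float

    @property
    def error_rate(self) -> float:
        # Fraction of calls that failed; 0.0 when there were no calls.
        return self.errors / self.calls if self.calls else 0.0


def rank_apps(apps, key, descending=True):
    """Return the applications sorted by the named metric."""
    return sorted(apps, key=lambda app: getattr(app, key), reverse=descending)


# Made-up sample data, for illustration only.
apps = [
    AppMetrics("support-bot", 1000, 25, 480_000, 12.40, 820.0),
    AppMetrics("doc-search", 400, 2, 150_000, 3.10, 410.0),
    AppMetrics("code-helper", 250, 20, 900_000, 27.75, 1530.0),
]

# Highest error rate first, then fastest average response first.
worst_error_rate = rank_apps(apps, "error_rate")
fastest = rank_apps(apps, "avg_response_ms", descending=False)

for app in worst_error_rate:
    print(f"{app.name}: error rate {app.error_rate:.1%}, cost ${app.cost_usd:.2f}")
```

Sorting by a different field name, such as `cost_usd` or `total_tokens`, mirrors switching the sort column in a catalog-style view.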

Application Overview

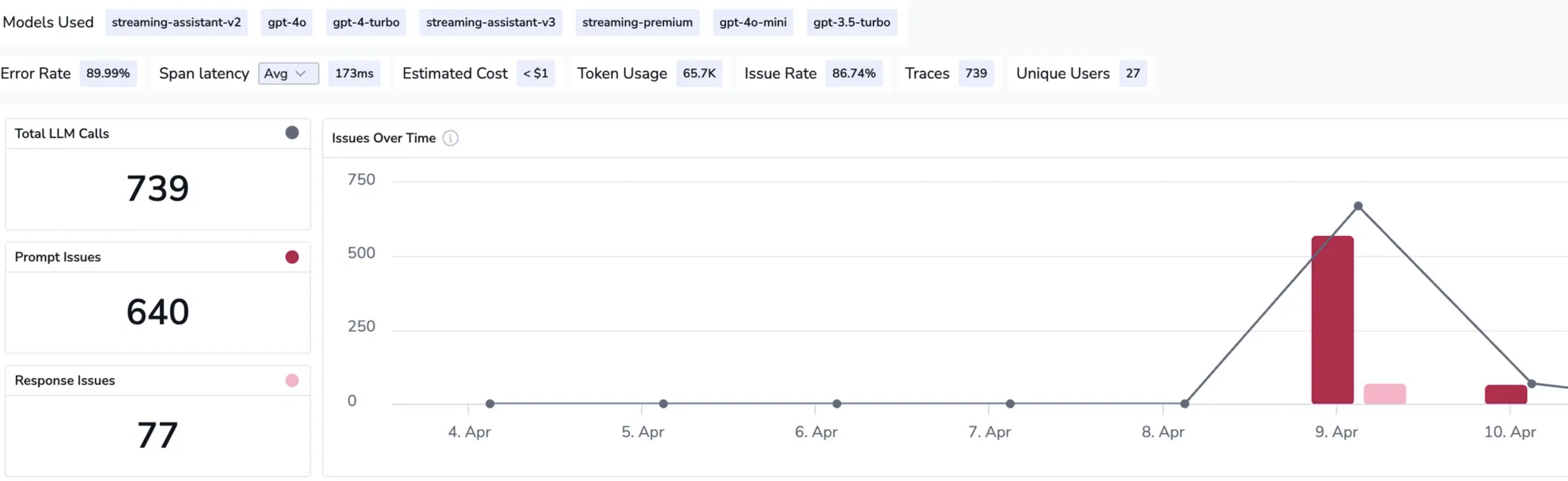

The Application Overview provides observability insights at the application level, letting users track performance, costs, errors, quality and security issues, latency, and tool usage. The dashboard is organized into sections for high-priority metrics, security and quality assessments, latency, errors, costs, and tool performance, giving a comprehensive view of application health.

LLM Calls

The LLM Calls view gives users a structured, detailed record of all LLM-related interactions within an application. Users can inspect traces and spans, analyze detected security and quality issues, debug errors, monitor latency impacts, and review invoked tools.

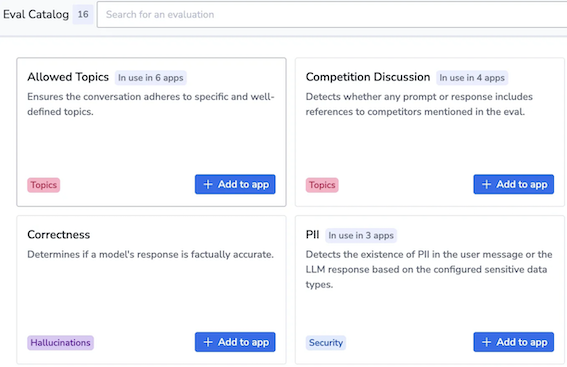

Eval Catalog

Evaluations (evals) are metric-based tools that assess aspects of LLM-based applications such as security, quality, and performance. Through the Eval Catalog, users can select and configure predefined evals to monitor specific behaviors and issues for each agent. This enables users to analyze their AI models, gain actionable insights, and address potential issues to improve reliability and performance.

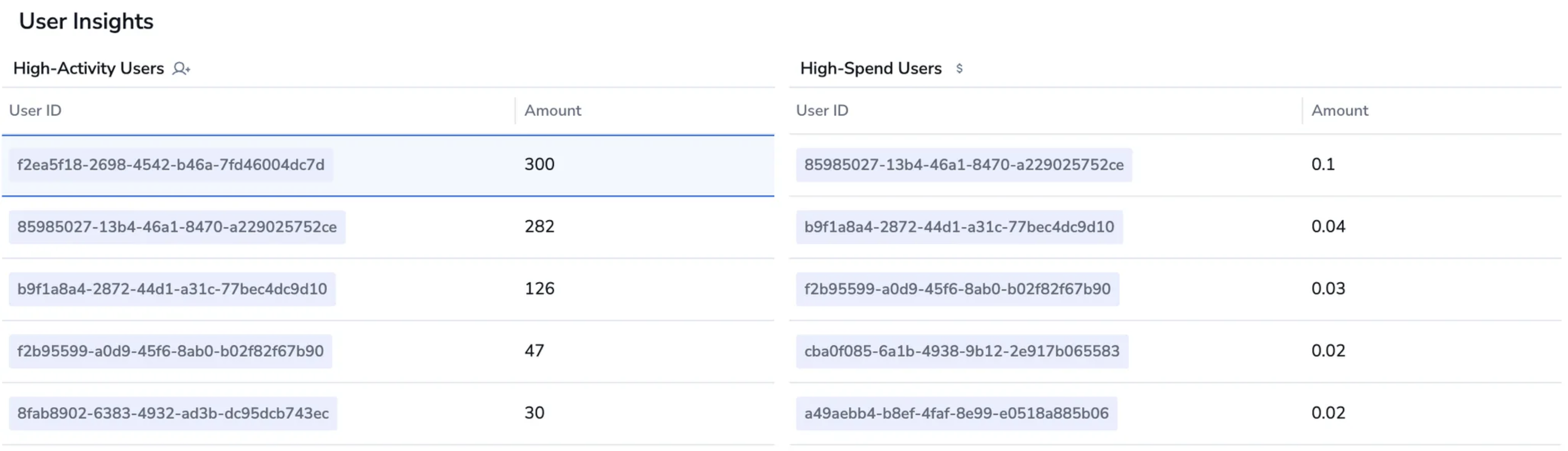

AI-SPM

AI-SPM (AI Security Posture Management) provides CISOs and security teams with a comprehensive view of AI usage across their organization, helping them identify potential risks and enforce security best practices. The dashboard showcases key security metrics, such as detected security issues, insights on risky users, and an overall AI Security Posture Score. With AI-SPM, security teams are empowered to proactively address threats, ensure compliance, and safeguard AI-driven environments.