Application Overview

The Application Overview dashboard offers essential observability insights at the application level, enabling users to monitor performance, costs, errors, security and quality issues, latency, and tool usage.

Accessing the Application Overview dashboard

- In the Coralogix UI, navigate to AI Center > Application Catalog.

- Scroll down to the application grid and click an application's row to open its Application Overview page.

- Use the time picker to select the time range for the displayed metrics.

- Scroll to the relevant section of the Application Overview page.

Summary

The Summary section consolidates key performance information for the selected AI app, with counters that aggregate data from the app's spans. A bar chart gives an at-a-glance overview of prompt and response data.

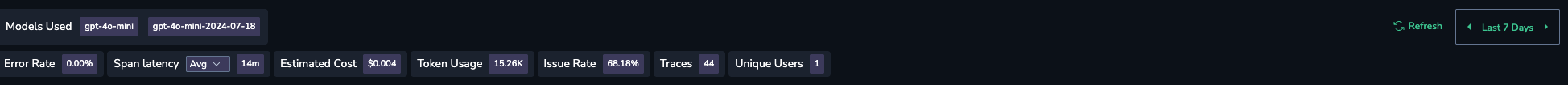

Counters

The counters offer a comprehensive snapshot of key performance, usage, and cost metrics.

- Models Used – A list of models used in the processed spans.

- Error Rate – The percentage of traces with errors. Alongside the current value, this counter shows the percentage change from the previous time period, highlighting the metric's trend.

- Span Latency – The latency of spans within the application. You can view the average or the P75, P95, or P99 percentile.

- Estimated Cost – Cost calculation based on token usage and model pricing.

- Token Usage – The total number of tokens processed in the application.

- Issue Rate – The percentage of LLM calls flagged with issues. Alongside the current value, this counter shows the percentage change from the previous time period, highlighting the metric's trend.

- Traces – The total number of traces processed in the application.

- Unique Users – The number of unique users who made requests.

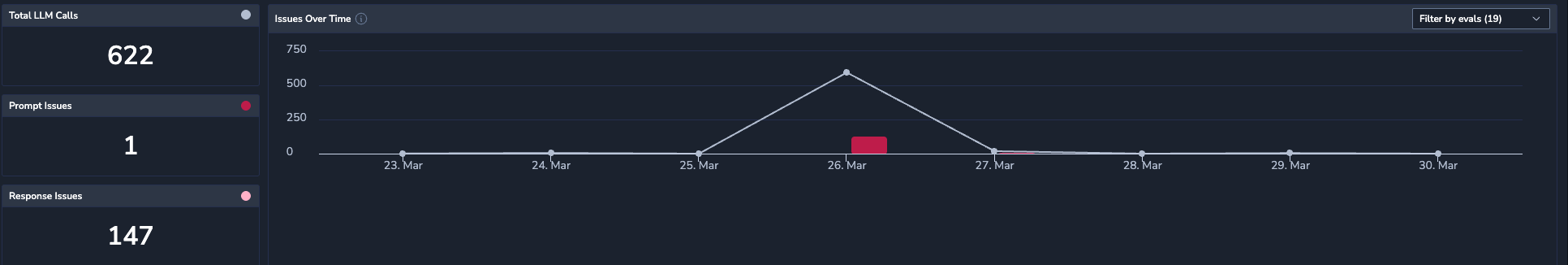
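To make the counter definitions concrete, here is a minimal sketch that computes several of them from a list of spans. The `Span` record and its fields are hypothetical, not Coralogix's schema, and the sketch assumes "percentage change" means relative change against the previous period's value.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    # Hypothetical span record; field names are illustrative, not Coralogix's schema.
    trace_id: str
    user_id: str
    model: str
    tokens: int
    has_error: bool


def error_rate(spans: list) -> float:
    """Percentage of distinct traces that contain at least one errored span."""
    traces = {s.trace_id for s in spans}
    errored = {s.trace_id for s in spans if s.has_error}
    return 100.0 * len(errored) / len(traces) if traces else 0.0


def percent_change(current: float, previous: float) -> Optional[float]:
    """Relative change versus the previous period (an assumption); None without a baseline."""
    if previous == 0:
        return None
    return 100.0 * (current - previous) / previous


def summary_counters(spans: list) -> dict:
    """A few of the Summary counters, computed over a single time window."""
    return {
        "models_used": sorted({s.model for s in spans}),
        "error_rate": error_rate(spans),
        "token_usage": sum(s.tokens for s in spans),
        "traces": len({s.trace_id for s in spans}),
        "unique_users": len({s.user_id for s in spans}),
    }


current = [
    Span("t1", "u1", "model-a", 100, False),
    Span("t1", "u1", "model-a", 50, True),
    Span("t2", "u2", "model-b", 30, False),
]
print(summary_counters(current))
print(percent_change(error_rate(current), previous=25.0))
```

Note that the error rate counts traces, not spans: trace `t1` has one failed span out of two, but it counts as one errored trace.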

LLM calls

Investigate trends in LLM call (prompt and response) issues.

- Total LLM Calls – The total count of LLM calls (prompts and responses) in the application.

- Prompt Issues – The number of prompts that contain at least one issue. Each prompt is counted once, regardless of how many issues it contains; for example, a prompt with three different issues counts as one prompt with issues.

- Response Issues – The number of responses that contain at least one issue, counted the same way as prompts.

- Issues Over Time – The issue trend over time. Use the dropdown at the top right-hand corner of the graph to filter the issue data by eval, and hover over the graph to view the data distribution at a specific point in time.
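The counting rule for prompt and response issues can be sketched as follows: deduplicate by call and part before counting, so multiple issues on one prompt still count once. The `(call_id, part, eval_name)` tuple shape and the eval names are hypothetical; the optional `eval_filter` mimics the graph's eval dropdown.

```python
from collections import Counter
from typing import Optional


def count_calls_with_issues(issues, eval_filter: Optional[str] = None):
    """Count distinct prompts and responses that have at least one issue.

    issues: iterable of hypothetical (call_id, part, eval_name) tuples, where
    part is "prompt" or "response". A part flagged by several evals counts once.
    eval_filter: optionally restrict counting to a single eval.
    """
    distinct = {
        (call_id, part)
        for call_id, part, eval_name in issues
        if eval_filter is None or eval_name == eval_filter
    }
    counts = Counter(part for _, part in distinct)
    return counts["prompt"], counts["response"]


issues = [
    ("call-1", "prompt", "toxicity"),
    ("call-1", "prompt", "pii"),
    ("call-1", "prompt", "prompt_injection"),
    ("call-2", "response", "hallucination"),
]
print(count_calls_with_issues(issues))              # (1, 1)
print(count_calls_with_issues(issues, "toxicity"))  # (1, 0)
```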

Issues

Visualize security and quality issues related to the evals assigned to the application. An issue is a prompt/response pair whose evaluation score surpasses the pre-defined threshold, indicating a problem. Pairs whose scores stay at or below the threshold are not counted as issues.

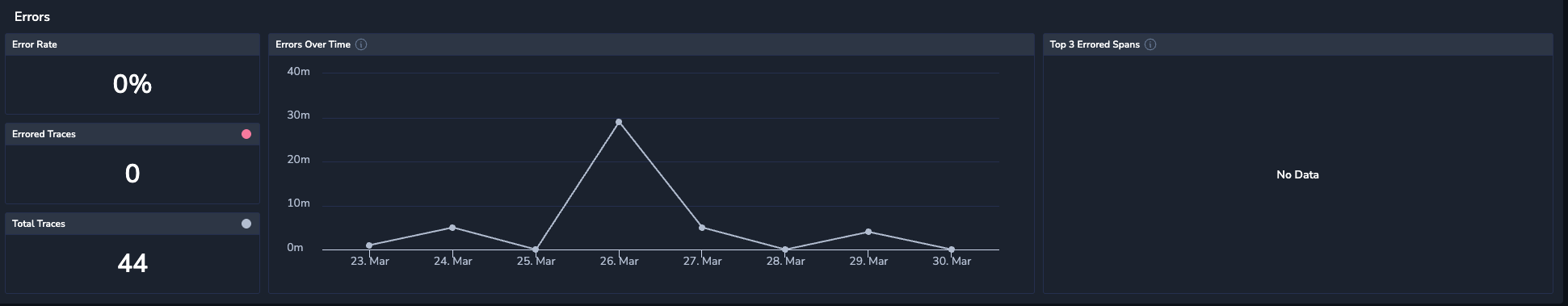
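The issue definition reduces to a threshold comparison per eval. This is a minimal sketch, assuming each eval yields a score where higher means more problematic and that "surpasses" means strictly greater than; `EvalResult` and the eval names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    # Hypothetical record for one eval applied to a prompt/response pair.
    eval_name: str
    score: float      # assumed: a higher score indicates a more likely problem
    threshold: float  # the pre-defined threshold for this eval


def flagged_evals(results) -> list:
    """Evals whose score surpasses (strictly exceeds, by assumption) their threshold."""
    return [r.eval_name for r in results if r.score > r.threshold]


def is_issue(results) -> bool:
    """A prompt/response pair is an issue if any assigned eval flags it."""
    return bool(flagged_evals(results))


pair = [EvalResult("toxicity", 0.9, 0.7), EvalResult("pii", 0.2, 0.5)]
print(flagged_evals(pair), is_issue(pair))
```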

Errors

Monitor errors in your app's traces, including error counts and trends over time.

- Error Rate – Errored traces as a percentage of the total number of traces.

- Errored Traces – The total number of traces with errors.

- Total Traces – The total number of traces.

- Errors Over Time – The error trends over the selected time period.

- Top 3 Errored Spans – The 3 spans that have the highest number of errors.
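The Errored Traces count and the Top 3 Errored Spans list can be sketched with a set and a `Counter`. The `Span` record is hypothetical, and grouping spans by name is an assumption about how the dashboard identifies "the same" span.

```python
from collections import Counter
from typing import NamedTuple


class Span(NamedTuple):
    # Hypothetical span record; not Coralogix's actual schema.
    trace_id: str
    name: str
    has_error: bool


def errored_trace_count(spans) -> int:
    """Number of distinct traces containing at least one errored span."""
    return len({s.trace_id for s in spans if s.has_error})


def top_errored_spans(spans, n: int = 3):
    """Up to n (span name, error count) pairs, highest error count first."""
    counts = Counter(s.name for s in spans if s.has_error)
    return counts.most_common(n)


spans = (
    [Span(f"t{i}", "retrieve_docs", True) for i in range(4)]
    + [Span(f"t{i}", "call_llm", True) for i in range(3)]
    + [Span("t0", "search_tool", True), Span("t5", "search_tool", True)]
    + [Span("t6", "format_answer", True), Span("t7", "format_answer", False)]
)
print(top_errored_spans(spans))
print(errored_trace_count(spans))
```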

Latency

Investigate latency data to assess the delay between when a user initiates a request and when the LLM responds, covering both the application and the models it uses.

- Span Latency – The average span time for this application compared to the average span time of other applications within the organization.

- Top 3 Slowest Spans (average) – The three spans with the longest average durations.

- Latency Over Time – Span duration trends (average, P75, P90, P95).

- Latency by Model – Average, P75, P95, and P99 span durations for each model used in the application.

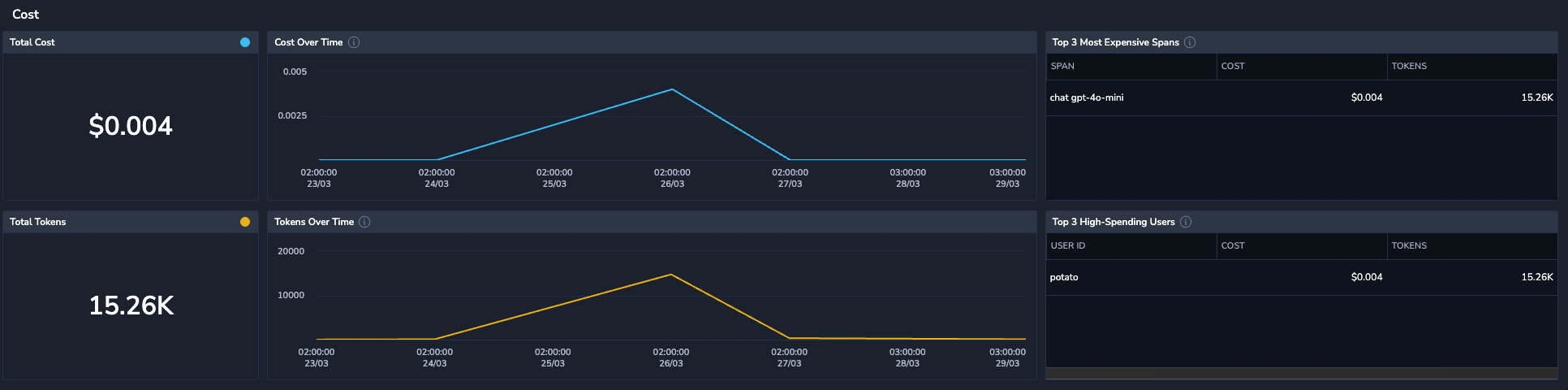
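The Latency by Model view groups span durations by model and summarizes each group. This sketch uses the nearest-rank percentile method; the interpolation method the dashboard actually uses is not documented here, so results may differ slightly on small samples. The `(model, duration_ms)` input shape is hypothetical.

```python
import math
from collections import defaultdict
from statistics import mean


def percentile(values, p: float):
    """Nearest-rank percentile: smallest value with at least p% of values <= it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def latency_by_model(spans) -> dict:
    """spans: iterable of (model, duration_ms) pairs -> {model: {avg, p75, p95, p99}}."""
    by_model = defaultdict(list)
    for model, duration in spans:
        by_model[model].append(duration)
    return {
        model: {
            "avg": mean(durations),
            "p75": percentile(durations, 75),
            "p95": percentile(durations, 95),
            "p99": percentile(durations, 99),
        }
        for model, durations in by_model.items()
    }


spans = [("model-a", ms) for ms in range(1, 101)] + [("model-b", 200), ("model-b", 400)]
print(latency_by_model(spans))
```

With only two samples for `model-b`, every percentile above the median collapses to the slowest span, which is why high percentiles are most meaningful for models with many calls.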

Cost

Analyze costs across spans to identify which spans tend to be more expensive than others. All amounts are estimates: each is calculated by multiplying the token count by the model's cost per token and does not account for discounts or special pricing plans.
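The estimate described above is a per-model multiply and sum. This sketch assumes a single flat per-token price per model; the model names and prices are hypothetical placeholders, and many providers in practice price input and output tokens differently.

```python
# Hypothetical per-token prices in USD, for illustration only; real prices
# come from each model provider and change over time.
PRICE_PER_TOKEN = {
    "model-a": 0.000002,
    "model-b": 0.00001,
}


def estimate_cost(usage) -> float:
    """Estimated cost: token count x per-token price, summed over models.

    usage: iterable of (model, token_count) pairs. Discounts and special
    plans are ignored, matching the estimate described above.
    """
    return sum(tokens * PRICE_PER_TOKEN[model] for model, tokens in usage)


usage = [("model-a", 1_000), ("model-b", 500)]
print(round(estimate_cost(usage), 6))
```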

- Total Cost – The overall expenditure, accompanied by a graph illustrating cost trends over time.

- Total Tokens – The cumulative token usage, with a corresponding graph tracking token consumption over time.

- Top 3 Most Expensive Spans – The 3 spans with the highest costs, ranked primarily by cost and secondarily by token usage.

- Top 3 High-Spending Users – The top 3 users based on spending, ranked by cost first, followed by token usage.

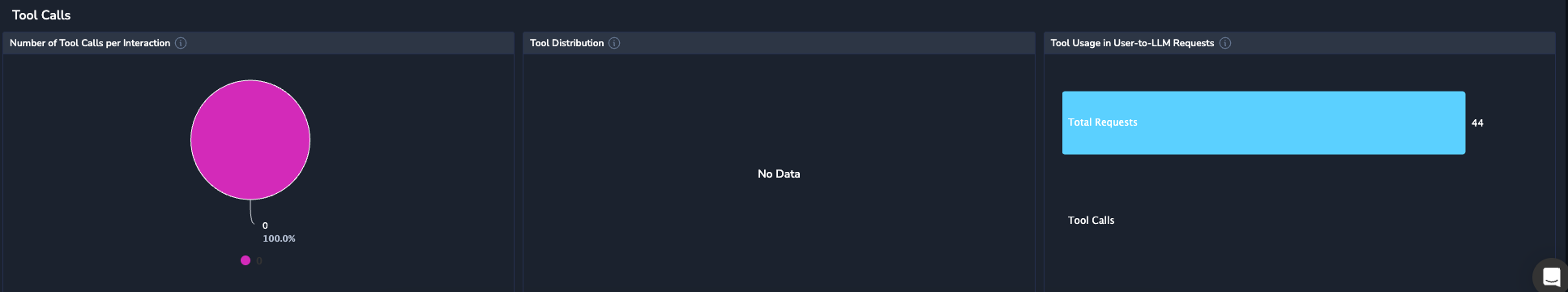
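Both top-3 lists rank by cost first and token usage second, which maps onto a sort with a tuple key. This is a minimal sketch that assumes ties in cost are broken in favor of higher token usage; `CostEntry` is a hypothetical record that could represent either a span or a user.

```python
from typing import NamedTuple


class CostEntry(NamedTuple):
    # Hypothetical aggregate for one span (or one user).
    name: str
    cost: float
    tokens: int


def top_by_cost(entries, n: int = 3) -> list:
    """Rank by cost (descending), then by token usage (descending, assumed)."""
    return sorted(entries, key=lambda e: (e.cost, e.tokens), reverse=True)[:n]


entries = [
    CostEntry("summarize", 0.5, 100),
    CostEntry("rerank", 0.5, 300),
    CostEntry("generate", 0.9, 50),
    CostEntry("embed", 0.1, 1000),
]
print([e.name for e in top_by_cost(entries)])
```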

Tool calls

Visualize metrics for interactions in which the application's model sends requests to external tools, services, or systems as part of generating a response.

- Number of Tool Calls per Interaction – The total number of tool calls, broken down into successful and failed attempts.

- Tool Distribution – Distribution of tool usage, illustrating the frequency of each tool's utilization.

- Total Usage in User-to-LLM Requests – The number of user-to-LLM interactions (from the moment a user sends a message to receiving the LLM's response) that triggered tool usage.
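The three tool-call metrics can be sketched from a flat list of tool calls plus the set of interactions in the selected period. The `ToolCall` record, the tool names, and the interaction IDs are hypothetical; a tool call is assumed to belong to exactly one user-to-LLM interaction.

```python
from collections import Counter
from typing import NamedTuple


class ToolCall(NamedTuple):
    # Hypothetical record of one tool invocation.
    interaction_id: str
    tool: str
    succeeded: bool


def tool_metrics(calls: list, interaction_ids: list) -> dict:
    """Successful/failed counts, per-tool distribution, and interactions using tools."""
    succeeded = sum(1 for c in calls if c.succeeded)
    all_interactions = set(interaction_ids)
    with_tools = {c.interaction_id for c in calls} & all_interactions
    return {
        "succeeded": succeeded,
        "failed": len(calls) - succeeded,
        "distribution": dict(Counter(c.tool for c in calls)),
        "interactions_with_tools": len(with_tools),
        "total_interactions": len(all_interactions),
    }


calls = [
    ToolCall("i1", "search", True),
    ToolCall("i1", "calculator", False),
    ToolCall("i2", "search", True),
]
print(tool_metrics(calls, ["i1", "i2", "i3"]))
```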