Monitoring CPU Consumption

Continuous Profiling enables deep, function-level analysis of CPU usage across your services, allowing you to detect inefficiencies, uncover bottlenecks, and optimize runtime behavior. This page explains how to use the Profiles UI to visualize CPU consumption, explore aggregated stack traces, and correlate performance trends with infrastructure or code-level changes.

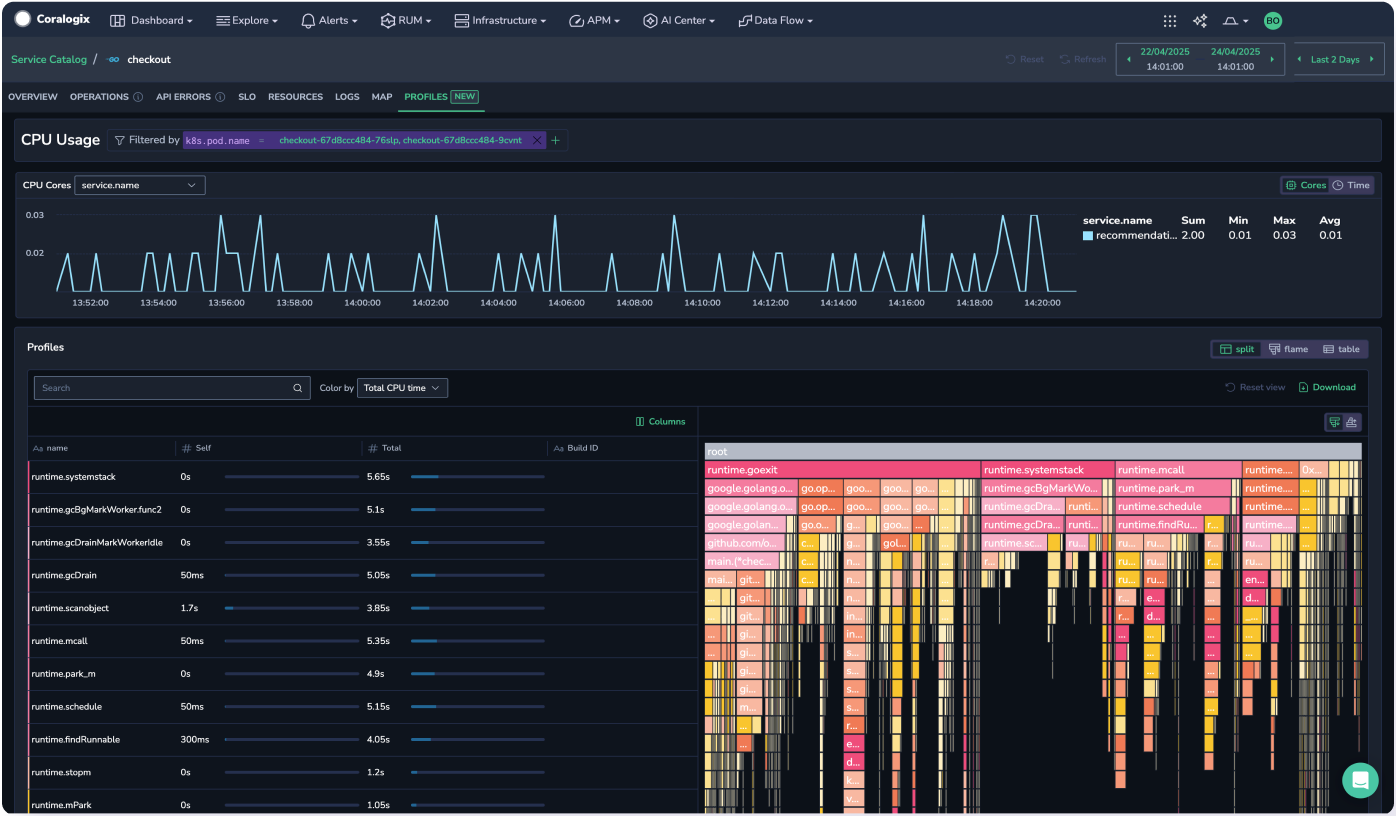

The Profiles UI presents:

- High-level CPU usage trends – Helps detect performance spikes, inefficiencies, and correlations with deployments or system events.

- Function-level CPU breakdown – Identifies which functions consume the most CPU time, allowing for targeted optimizations.

- Stack trace visualization & analysis – Visualizes execution flow to pinpoint bottlenecks and inefficient code paths.

Accessing profiles

To investigate resource usage and performance bottlenecks, navigate to APM > Service Drilldown > PROFILES. This section provides insights into CPU utilization across services at the function level.

Customize the UI to fit your needs

Filter by dimensions

If you’ve set up APM dimensions, filter by these labels to slice and dice the data as needed.

Filter CPU usage

Filter usage by pod, node or other OTel labels to display data that is relevant to your specific needs.

Visualize high-level CPU trends over time

Visualize CPU consumption over time for a particular service, helping you quickly detect performance trends.

- Y-axis (CPU time): Measures CPU time, the total processing time used by the service. Spikes may indicate heavy computations, inefficient queries, or memory management issues.

- X-axis (time): Helps correlate CPU trends with deployments, traffic changes, or system events.

When you spot an anomaly, zoom in on it: narrow the time range with the UI time picker, or click or highlight the point of interest to refocus the graph.

Grouping profile attributes

Our group-by labels feature lets you sum profiling metrics across processes by grouping them by specific labels, such as host_id, os_type, pod, or envr. This is ideal for large infrastructures: select a group-by label to view its aggregated metrics over time and quickly analyze resource usage across multiple processes or pods.
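To illustrate what "summing across processes by label" means, here is a minimal sketch that aggregates per-process CPU samples into one series per label value. The sample data, the time buckets, and the `group_by_label` helper are hypothetical and only model the idea; they are not the Coralogix API.

```python
from collections import defaultdict

# Hypothetical per-process samples: (time_bucket, labels, cpu_milliseconds).
# Label names mirror the examples above; the values are made up for illustration.
samples = [
    (0, {"pod": "checkout-1", "host_id": "h1"}, 1200),
    (0, {"pod": "checkout-2", "host_id": "h1"}, 800),
    (0, {"pod": "search-1", "host_id": "h2"}, 2000),
    (1, {"pod": "checkout-1", "host_id": "h1"}, 1500),
    (1, {"pod": "search-1", "host_id": "h2"}, 1000),
]


def group_by_label(samples, label):
    """Sum CPU milliseconds per (time bucket, label value)."""
    series = defaultdict(int)
    for bucket, labels, cpu_ms in samples:
        # Processes missing the label are collected under a placeholder value.
        key = (bucket, labels.get(label, "<unset>"))
        series[key] += cpu_ms
    return dict(series)


print(group_by_label(samples, "host_id"))
```

Grouping by host_id merges the two checkout pods on host h1 into a single series, which is how one line in the graph can stand for many processes.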

Toggle between Time and Core views

Toggle between the Time and Core views. The former presents the total processing time, while the latter helps diagnose whether your application is effectively utilizing multiple CPU cores or if certain threads are causing contention.
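A common way to express CPU time in terms of cores is to divide the CPU time consumed in an interval by the wall-clock length of that interval. The sketch below assumes the Core view uses this kind of normalization; the `average_cores` helper is hypothetical and only illustrates the arithmetic.

```python
def average_cores(cpu_seconds: float, interval_seconds: float) -> float:
    """Average number of CPU cores busy during an interval.

    Assumption: cores are derived as CPU time divided by wall-clock time.
    This is an illustration, not the product's exact formula.
    """
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return cpu_seconds / interval_seconds


# 120 CPU-seconds consumed during a 60-second window means about 2 cores busy on average.
print(average_cores(120.0, 60.0))
```

If a service runs many worker threads but averages close to one core, the threads may be waiting on each other (for example, on a shared lock) rather than running in parallel.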

If CPU time is increasing steadily, your service may have inefficiencies that need optimization. On the other hand, sudden spikes or dips may signal an issue such as inefficient garbage collection, unexpected traffic loads, or code regressions. Once you’ve detected when and how CPU consumption changes, use the stack trace visualization to dig deeper and find which functions are driving high CPU usage.

Pinpoint functions with highest CPU consumption

The Profiles Grid presents a structured breakdown of CPU usage at the function level. Complementing the CPU consumption graph, it reveals which specific functions are responsible for high CPU consumption. Functions are presented along with their self-CPU time and total CPU time.

Self CPU time ("Self"): The amount of CPU time spent exclusively in that function (not including calls to other functions). Sort by Self CPU time to find the functions consuming the most processing power.

Total CPU time ("Total"): The total CPU time spent in that function, including time spent in any functions it calls. A high Total CPU time combined with a low Self CPU time suggests that the function calls expensive operations.

Focus on functions with both high Self and Total CPU time to reduce overall load.
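The difference between Self and Total can be reproduced from raw stack samples. In this minimal sketch, each sample is a hypothetical stack listed from root to leaf, and every sample is assumed to represent the same amount of CPU time; only the leaf frame earns Self time, while every function on the stack earns Total time.

```python
from collections import Counter

# Hypothetical samples, root first; the last frame was running on the CPU.
samples = [
    ("main", "handle_request", "parse_json"),
    ("main", "handle_request", "parse_json"),
    ("main", "handle_request", "query_db"),
    ("main", "handle_request"),
    ("main", "gc"),
]


def self_and_total(samples):
    self_time = Counter()
    total_time = Counter()
    for stack in samples:
        # Self: only the leaf frame was executing when the sample was taken.
        self_time[stack[-1]] += 1
        # Total: each distinct function on the stack is credited once per sample,
        # so recursive calls are not double-counted.
        for func in set(stack):
            total_time[func] += 1
    return self_time, total_time


self_time, total_time = self_and_total(samples)
for func in sorted(total_time, key=total_time.get, reverse=True):
    print(f"{func:15} self={self_time[func]} total={total_time[func]}")
```

Here main has the highest Total but zero Self time, because it only calls other functions, while parse_json has the highest Self time and is the more direct optimization target.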

Drill down with profiled stack traces

A flame graph is a visual representation of profiled stack traces in which the width of each function block indicates its resource consumption. This makes it easy to identify performance bottlenecks in a program.

Icicle graphs, a variant of flame graphs, provide an alternative visualization approach for performance analysis. Unlike flame graphs, which present the call stack with the root at the bottom and branches extending upward, icicle graphs invert this structure—placing the root at the top and leaves at the bottom.

Flame graph components

Frames

- Each rectangle in the stack, known as a frame, represents a function or method call. A method is a function that belongs to a class and operates on an object.

- The width of a frame represents CPU consumption: wider frames indicate higher resource usage than narrower ones, aggregated across all profiled samples. This width includes both the time spent directly in the method (self-time) and the time spent in its child frames.

- For icicle graphs, frames are stacked from top to bottom in call order, so each function appears directly below the function that called it. Flame graphs use the opposite orientation and stack frames from bottom to top.

- The topmost frame, known as the “root frame,” represents the combined resource usage of all its child frames. It can be compared to a pie chart, where the root frame is the entire pie, and each stack trace forms a segment.

- The bottom frame of each stack, known as the “leaf frame,” represents the last method called in that stack. The leaf frame only represents its self-time because it has no child frames.

- The horizontal position of sibling frames does not indicate execution order. Frames are sorted alphabetically from left to right, so side-by-side methods may have run in parallel or in any sequence.
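To make these width rules concrete, here is a minimal sketch of how sampled stacks could be merged into the tree behind an icicle graph. It assumes every sample carries equal weight and uses made-up function names; the `Frame`, `build_tree`, and `render` names are hypothetical, and this is not Coralogix's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    name: str
    width: int = 0  # samples in this frame, including all child frames
    children: dict = field(default_factory=dict)

    def self_width(self) -> int:
        # Self time is whatever is left after subtracting the children's widths.
        return self.width - sum(child.width for child in self.children.values())


def build_tree(samples):
    root = Frame("root")
    for stack in samples:
        root.width += 1  # the root frame covers every sample (the whole "pie")
        node = root
        for name in stack:  # walk from caller to callee
            node = node.children.setdefault(name, Frame(name))
            node.width += 1
    return root


def render(node, depth=0):
    # Indentation stands in for stack depth (icicle layout: root on top).
    print("  " * depth + f"{node.name} width={node.width} self={node.self_width()}")
    for name in sorted(node.children):  # siblings are ordered alphabetically, not by time
        render(node.children[name], depth + 1)


samples = [
    ("main", "handle_request", "parse_json"),
    ("main", "handle_request", "query_db"),
    ("main", "gc"),
    ("main", "handle_request", "parse_json"),
]
render(build_tree(samples))
```

Because siblings are printed in alphabetical order, gc appears before handle_request even though the samples say nothing about which ran first.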

X-axis

- The x-axis represents total CPU usage, not the passage of time over which profiles were collected. Functions are sorted alphabetically.

Y-axis

- The y-axis represents the stack depth, or the number of active function calls. The frame at the bottom of each stack is the function that was running when the sample was taken, while the frames above it show the call hierarchy that led to it. A function directly above another is its parent (caller).

Customizing your visualization

Sorting stack traces

You can also customize the icicle graph to sort the stack traces by function, by their cumulative value, or by a different value.

Hide binaries

Hiding a binary lets you view all data in the icicle graph except the frames from a particular binary. This is especially useful for Python workloads that call out to native extensions: viewing only the interpreted frames would leave out the native work, so instead you can hide just the Python runtime and keep both the Python and the native-extension frames.
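One way to model the effect of hiding a binary is to drop the frames that originate from it while keeping the rest of each stack in order. In this minimal sketch, each frame is a hypothetical (function, origin) pair, where origin is the binary or, for interpreted frames, the script. The `hide_binary` helper and all names are illustrative assumptions, not the product's behavior or API.

```python
# Hypothetical stack, root first: (function, origin). Names are illustrative only.
stack = [
    ("main", "app.py"),
    ("_PyEval_EvalFrameDefault", "libpython3.11.so"),
    ("compute", "app.py"),
    ("_PyEval_EvalFrameDefault", "libpython3.11.so"),
    ("dgemm_kernel", "libopenblas.so"),
]


def hide_binary(stack, hidden):
    """Remove frames from the hidden binary, preserving the order of the rest."""
    return [frame for frame in stack if frame[1] != hidden]


print(hide_binary(stack, "libpython3.11.so"))
```

Hiding the interpreter leaves the Python functions (main, compute) connected directly to the native extension frame (dgemm_kernel), which is the view the paragraph above describes.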

Copy values

By right-clicking anywhere on the icicle graph, you can access the copy button, which allows you to copy all the values associated with a node.

Method name convention

Coralogix Continuous Profiling follows the standard naming conventions used in stack traces for each programming language when displaying method or function names. For instance, Ruby and Python stack traces typically show the file name rather than the class name. As a result, the profiler uses the file name in the profile output for these languages.

In contrast, Java stack traces include the class name, so the profiler uses the class name in Java profiles. For example, you might encounter a method name like Database.queryData(Database$Connection).

Here's how to interpret the format:

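- Database.queryData refers to the method queryData in the Database class.

- Database$Connection is the type of the argument passed to the method. In Java, $ separates a nested class from its enclosing class, so this is the Connection class nested inside Database.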

Method names may be displayed in different formats, as shown in the table below:

| Representation | Description |
|---|---|
| Database.queryData(Database$Connection) | The method name without line numbers, as shown in the UI. |
| Database.queryData(Database$Connection):L#58 | The method name along with the line number, as shown in the UI. |
| queryData | The method name as it appears in the source code (e.g., in Database.java). |

All of these variations refer to the queryData method.
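If you export method names and want to match them against source code, you can normalize the three representations from the table. The sketch below assumes a grammar of `[Class.]method[(args)][:L#line]`, inferred only from the examples above rather than from an official specification; the `parse_method_name` helper and `MethodName` type are hypothetical.

```python
import re
from typing import NamedTuple, Optional


class MethodName(NamedTuple):
    cls: Optional[str]
    method: str
    args: Optional[str]
    line: Optional[int]


# Assumed grammar, inferred from the table: [Class.]method[(args)][:L#line]
_PATTERN = re.compile(
    r"^(?:(?P<cls>[\w$]+(?:\.[\w$]+)*)\.)?"  # optional, possibly package-qualified class
    r"(?P<method>[\w$<>]+)"                  # method name
    r"(?:\((?P<args>[^)]*)\))?"              # optional argument types
    r"(?::L#(?P<line>\d+))?$"                # optional line number
)


def parse_method_name(text: str) -> MethodName:
    match = _PATTERN.match(text)
    if match is None:
        raise ValueError(f"unrecognized method name: {text!r}")
    line = match.group("line")
    return MethodName(
        match.group("cls"),
        match.group("method"),
        match.group("args"),
        int(line) if line else None,
    )


for name in (
    "Database.queryData(Database$Connection)",
    "Database.queryData(Database$Connection):L#58",
    "queryData",
):
    print(parse_method_name(name))
```

All three strings parse to the same method, queryData; only the class, argument, and line-number details differ.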