
Previously, we talked about the huge amount of time that software developers spend on maintenance. In this post, we offer some advice on how to tackle this problem, save time, and improve customer satisfaction.

It turns out that you spend approximately 75% of your time debugging your code, fixing production problems, and trying to understand what the hell went wrong this time. So how, you ask, can you drastically cut this time sink? The typical answers, such as ‘write more unit tests’, ‘do better code reviews’, ‘stick to code conventions’ or simply ‘hire better developers’, are obvious, and I doubt I can tell you anything about them that you don’t already know. What we’d like to explore in this post is how you can shrink that 75% substantially with minimal effort on your side. The short answer is Log Analytics. The long answer follows.

You have a deadline, and no one will give you the time needed to write the quality code you’d like to produce. At the same time, no one will accept buggy code that crashes in production. This is exactly the fine balance you need to strike between delivery and quality, a never-ending story. Among the familiar methods for delivering quality code on time, one has remained essentially unchanged for almost 30 years: log entries in your code. If you have a working production system, chances are it emits thousands of log entries each second, and when you encounter a problem you browse through these logs to figure out what went wrong. This raises two questions: do you write quality log entries that actually help you solve your bugs? And how can you understand the cause of a problem from your logs without wasting hours on text searches and graph analysis?

Regarding the first question, you know you are writing quality logs when you can understand the story of what your system does from your log records alone. This is not the case if you only log exceptions or problematic edge cases: when a problem occurs, you have no idea why it happened. You just know it did. On the other hand, you don’t want to clutter your log files with irrelevant information, for example by logging every call to a method that runs every 2 milliseconds. Writing quality logs does not take much effort, but you do need to follow a few basic rules of thumb:

- Write real sentences in the message text, so you can later understand what you meant to log.
- Use metadata that helps you filter entries and understand what each one is about (severity, category, class name, method name, etc.).
- Don’t be lazy: write enough log entries to tell the story of your system.
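As an illustration of these rules, here is a minimal sketch using Python's standard `logging` module that emits each entry as one JSON line, so the metadata can be filtered later. The JSON field names, the `billing` category, the hypothetical `PaymentService` class and its `class_name` field are assumptions made for the example. `logging` fills in the severity, logger name and calling function itself, but it has no built-in class-name attribute, so that one is passed through `extra`. High-frequency detail is logged at DEBUG so it stays out of the output at the INFO level.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON object so its fields can be filtered."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "severity": record.levelname,                  # e.g. INFO, ERROR
            "category": record.name,                       # logger name doubles as category
            "class": getattr(record, "class_name", None),  # supplied via `extra` (assumption)
            "method": record.funcName,                     # calling function, set by logging
            "message": record.getMessage(),                # real sentence with args filled in
        }
        return json.dumps(entry)


def make_logger(category: str, stream: io.TextIOBase) -> logging.Logger:
    """Create a logger for one category that writes JSON lines to `stream`."""
    logger = logging.getLogger(category)
    logger.setLevel(logging.INFO)   # DEBUG chatter is dropped at this level
    logger.handlers.clear()         # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False        # keep entries out of the root logger
    return logger


class PaymentService:
    """Hypothetical service used only to demonstrate the rules of thumb."""

    def __init__(self, logger: logging.Logger) -> None:
        self.log = logger
        self.meta = {"class_name": type(self).__name__}

    def charge(self, order_id: str, amount_cents: int) -> None:
        # A real sentence plus metadata: readable on its own and filterable later.
        self.log.info("Charging order %s for %d cents", order_id, amount_cents, extra=self.meta)
        # Per-call detail goes to DEBUG, so it does not clutter INFO-level output.
        self.log.debug("Validating card for order %s", order_id, extra=self.meta)


buffer = io.StringIO()
service = PaymentService(make_logger("billing", buffer))
service.charge("A-17", 1299)
print(buffer.getvalue(), end="")
```

Running it prints a single line, `{"severity": "INFO", "category": "billing", "class": "PaymentService", "method": "charge", "message": "Charging order A-17 for 1299 cents"}`; the DEBUG entry is suppressed by the logger's level.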

These habits will go a long way when you try to solve real problems in the field.

Regarding the second question, how to avoid bleeding your eyes out while reading your logs: even if you write super-informative logs, more often than not you are buried in them. Production systems generate anywhere between 3 and 50 GB of logs per day, which can mean around 80M log records per day. Try to find the cause of a problem in that mass of information and you are lost.
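To put these figures in perspective, here is a back-of-the-envelope calculation. It assumes decimal gigabytes (10^9 bytes) and records spread evenly over 24 hours, which real traffic never is; the function name is ours, chosen for illustration only.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def log_volume_stats(bytes_per_day: float, records_per_day: float) -> tuple[float, float]:
    """Return (average bytes per record, average records per second)."""
    return bytes_per_day / records_per_day, records_per_day / SECONDS_PER_DAY


RECORDS_PER_DAY = 80_000_000
for gigabytes in (3, 50):
    avg_bytes, per_second = log_volume_stats(gigabytes * 10**9, RECORDS_PER_DAY)
    print(f"{gigabytes} GB/day: {avg_bytes:.1f} bytes/record, {per_second:.0f} records/s")
```

At 80M records per day, that averages out to roughly 926 records per second, with an implied average record size between about 38 and 625 bytes; peak rates will exceed this average.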

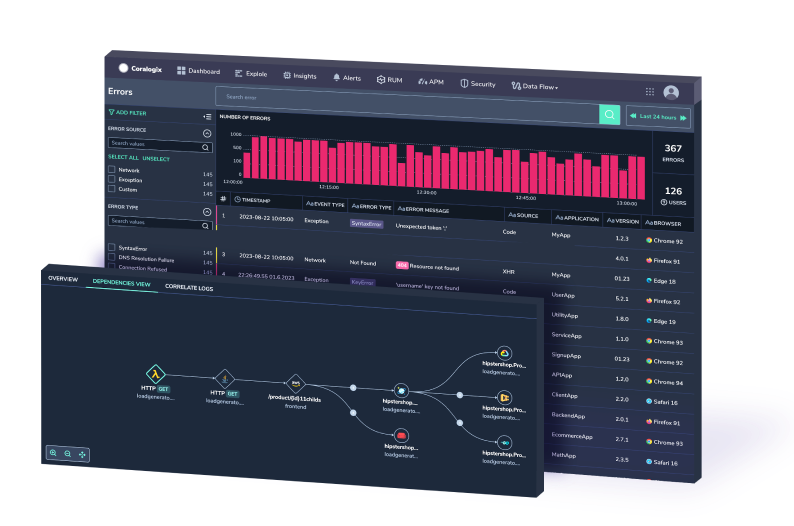

To tackle this ever-growing problem, many Log Analytics companies are rising to the challenge and trying to make your (truly Big Data) logs searchable and accessible. These companies provide tools to search your data for specific keywords, define conditions for receiving alerts on suspicious cases, keep all your log data in a single central repository, and display cool graphs of different trends.
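The keyword search and alerting features described above boil down to queries like the following toy sketch, which runs in memory over a handful of records. Real platforms index the data and expose their own query and alert-rule languages; `LogRecord`, `search`, `should_alert`, the threshold and the time window are hypothetical names and values chosen for illustration, and the sample records are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class LogRecord:
    timestamp: datetime
    severity: str
    message: str


def search(records: list[LogRecord], keyword: str) -> list[LogRecord]:
    """Case-insensitive keyword search over message text (a linear scan, no index)."""
    needle = keyword.lower()
    return [r for r in records if needle in r.message.lower()]


def should_alert(records: list[LogRecord], severity: str, threshold: int,
                 window: timedelta, now: datetime) -> bool:
    """Fire when at least `threshold` records of `severity` fall within `window` before `now`."""
    start = now - window
    recent = [r for r in records if r.severity == severity and start <= r.timestamp <= now]
    return len(recent) >= threshold


t0 = datetime(2024, 1, 1, 12, 0, 0)
records = [
    LogRecord(t0, "INFO", "User 42 logged in"),
    LogRecord(t0 + timedelta(seconds=10), "ERROR", "Database timeout on query orders"),
    LogRecord(t0 + timedelta(seconds=20), "ERROR", "Database timeout on query users"),
    LogRecord(t0 + timedelta(seconds=30), "ERROR", "Database timeout on query orders"),
]

# Three records mention "timeout", and three ERRORs in the last minute trip the alert.
print(len(search(records, "timeout")))
print(should_alert(records, "ERROR", threshold=3, window=timedelta(minutes=1),
                   now=t0 + timedelta(seconds=40)))
```

Narrowing the window to 15 seconds leaves only one ERROR in range, so the same rule would stay quiet; choosing the threshold and window is exactly the tuning these tools leave to you.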

The next challenge for Log Analytics companies, beyond the features they provide today, is to actually make sense of your log entries and automatically find the smoking gun that caused your problem. Looking even further ahead, these companies would love to offer actionable solutions to the problems you encounter and let their algorithms do the frustrating work you do today.
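Automatic root-cause analysis goes far beyond what a short example can show, but one simple building block is grouping similar messages so that a flood of near-identical errors collapses into a single pattern. The sketch below is a naive frequency heuristic, not any vendor's method: it masks numbers and hex identifiers with a regular expression and counts the resulting templates among ERROR records. The masking rule, the function names and the sample records are assumptions for illustration.

```python
import re
from collections import Counter

# Numbers and hex ids (e.g. 3000, 0x1f3a) are treated as the variable parts of a message.
_VARIABLE = re.compile(r"\b(?:0x[0-9a-fA-F]+|\d+)\b")


def template_of(message: str) -> str:
    """Mask variable parts so messages produced by the same log statement share a key."""
    return _VARIABLE.sub("<*>", message)


def top_error_templates(records: list[tuple[str, str]], n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent (template, count) pairs among ERROR records."""
    counts = Counter(template_of(msg) for severity, msg in records if severity == "ERROR")
    return counts.most_common(n)


records = [
    ("ERROR", "Timeout after 3000 ms on shard 7"),
    ("ERROR", "Timeout after 3000 ms on shard 2"),
    ("INFO", "Request 991 served"),
    ("ERROR", "Null reference in handler 0x1f3a"),
    ("ERROR", "Timeout after 5000 ms on shard 4"),
]

for template, count in top_error_templates(records):
    print(count, template)
```

Here the three timeouts collapse into one template that dominates the error count. That is only a hint: deciding whether it is the actual cause would still require comparing against normal behavior, which is the harder problem this paragraph describes.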

With growing attention to, and advances in, Big Data analytics, we expect to see a growing number of Log Analytics platforms moving in that direction over the next few years.