By now, DevOps teams are hopefully aware that, among the many modern analytics methods available to them, an advanced log monitoring tool provides the most complete view into how a product is functioning. Use cases for log files among DevOps teams are broad, ranging from the expected, like production monitoring and development debugging, to the less obvious, like business analytics.

But when faced with the dizzying list of third-party logging tools on the market, what key features should DevOps look for before making a choice? And is it possible to find a single platform that combines all the essential features for today’s SDLC?

The Must-Haves When Choosing a Logging and Monitoring Tool

Features you might assume are standard among logging tools are not universally offered, and the most recent developments in log management, particularly those driven by machine learning, might pleasantly surprise you. This list of features is a good starting point for narrowing down the large market selection and choosing the best logging and enterprise monitoring tools for your needs:

Range and Scalability – As a product’s user base expands, the range of log sources that DevOps need to monitor grows as well. A logging tool should collect logs from each system component, application-side or server-side, and provide access to them in one centralized location. Not all logging platforms are capable of maintaining speed as the number of logs they process grows, so keep a critical eye on ingestion and query speed under realistic volumes when trying out different solutions.

Advanced Aggregation – It’s easy to be overwhelmed by all the data that a logging tool collects. Good aggregation features should allow DevOps to group their logs together according to a variety of shared characteristics, including log origin (devices, servers, databases, applications), feature usage, user action, error type, etc. As logs are generated they can be automatically analyzed to determine which of the existing groups they fit into, and then categorized accordingly.
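As a rough illustration of grouping by shared characteristics, the sketch below buckets log records by any combination of fields. The record layout and the field names (`origin`, `error_type`) are assumptions made for this example, not the schema of any particular logging product.

```python
from collections import defaultdict

# Hypothetical log records; the field names are illustrative only.
logs = [
    {"origin": "server", "error_type": "timeout", "message": "upstream timed out"},
    {"origin": "database", "error_type": "timeout", "message": "query exceeded 30s"},
    {"origin": "server", "error_type": "auth", "message": "invalid token"},
    {"origin": "server", "error_type": "timeout", "message": "upstream timed out"},
]


def aggregate(records, keys):
    """Group records by the tuple of values found under `keys`."""
    groups = defaultdict(list)
    for record in records:
        # Records missing a field fall into an explicit "unknown" bucket.
        group_key = tuple(record.get(k, "unknown") for k in keys)
        groups[group_key].append(record)
    return dict(groups)


by_origin_and_error = aggregate(logs, ["origin", "error_type"])
for key, members in by_origin_and_error.items():
    print(key, len(members))
```

Passing a different list of keys, such as `["origin"]` alone, regroups the same records without changing how they are stored.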

Intelligent Pattern Recognition – Advancements in machine learning allow contemporary logging and monitoring tools to quickly (within two weeks, in Coralogix’s case) learn the standard log syntaxes within a given system. This gives the platform a sense of what the logs look like when components of the system are running optimally, and saves DevOps time that would be spent programming the platform with standard log patterns.

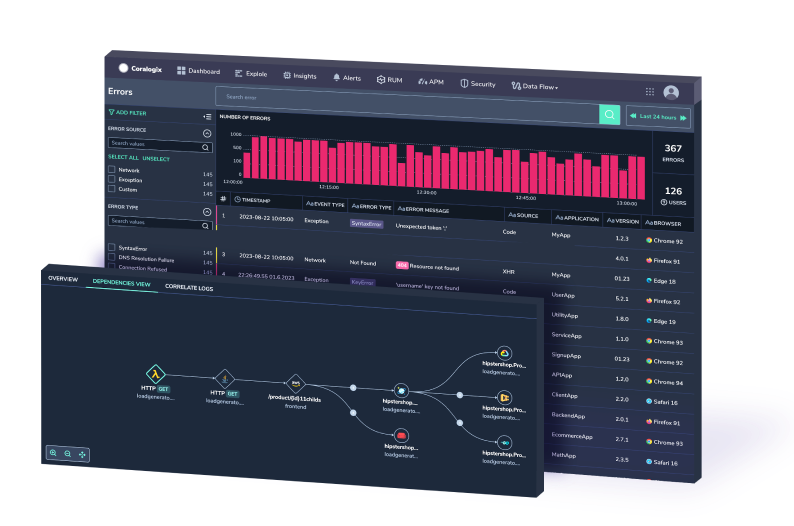
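To make the idea of learning log syntaxes more concrete, here is a deliberately simple sketch that reduces messages to templates by masking the parts that vary. It is a regex-based stand-in for illustration only, not a description of how Coralogix or any other platform implements its machine learning, and the sample messages are invented.

```python
import re
from collections import Counter


def to_template(message):
    """Replace variable-looking tokens with placeholders to expose the log's shape."""
    message = re.sub(r"\b\d{1,3}(?:\.\d{1,3}){3}\b", "<IP>", message)
    message = re.sub(r"\b0x[0-9a-fA-F]+\b", "<HEX>", message)
    message = re.sub(r"\b\d+\b", "<NUM>", message)
    return message


# Invented sample messages for the example.
messages = [
    "User 1042 logged in from 10.0.0.7",
    "User 77 logged in from 192.168.1.20",
    "Cache miss for key 0x3fa9",
    "User 5 logged in from 10.0.0.9",
]

# Messages that differ only in their variable parts collapse into one template.
template_counts = Counter(to_template(m) for m in messages)
for template, count in template_counts.most_common():
    print(count, template)
```

Counting how often each template appears over time gives a baseline of what normal traffic looks like, which is the starting point for spotting deviations.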

Automatic Anomaly Flagging – The days of static reporting are over. Once a platform has learned log patterns, it can generate alerts notifying DevOps of deviations from those patterns, including possible bugs, security vulnerabilities, sudden changes in user behavior, and more.
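A minimal sketch of this kind of flagging, assuming the platform keeps a per-pattern history of counts for past intervals: anything never seen before, or far above its usual volume, raises an alert. The function name, the threshold of three standard deviations, and the sample data are all assumptions for illustration. Real tools use more sophisticated models and would also watch for drops, which this sketch ignores.

```python
from statistics import mean, pstdev


def flag_anomalies(baseline, current, threshold=3.0):
    """Return (pattern, reason) pairs for patterns that deviate from the baseline.

    baseline: dict mapping pattern -> list of counts per past interval
    current:  dict mapping pattern -> count in the latest interval
    """
    alerts = []
    for pattern, count in current.items():
        history = baseline.get(pattern)
        if not history:
            alerts.append((pattern, "new pattern"))
            continue
        # Floor the spread at 1.0 so a perfectly flat history does not
        # turn every tiny fluctuation into an alert.
        limit = mean(history) + threshold * max(pstdev(history), 1.0)
        if count > limit:
            alerts.append((pattern, "volume spike"))
    return alerts


# Invented counts for the example.
baseline = {
    "User <NUM> logged in": [100, 110, 95, 105],
    "Payment failed": [2, 3, 1, 2],
}
current = {
    "User <NUM> logged in": 102,
    "Payment failed": 40,
    "Segfault in worker <NUM>": 1,
}

for pattern, reason in flag_anomalies(baseline, current):
    print(f"ALERT ({reason}): {pattern}")
```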

Deep Storage – In order to discover the history behind certain product behaviors, or to really get a clear sense of evolving trends in your business, you’ll need to dig deep. Extended storage times for old log files may also be necessary for compliance, whether with externally mandated laws and practices or with your organization’s internal standards.

Search Functionality – In the event that a bug is reported by users, rather than automatically identified by your logging tool, you need a high-performing search function to track the issue down among the heap of log files. Note that not all logging platforms provide context for a search result (what occurred before and after the issue), or extensive filtering options to weed out false leads.
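The value of context around a search hit can be shown with a few lines of code. The sketch below returns each matching line together with its neighbors, in the spirit of `grep -B`/`-A`. The function name and the sample log lines are hypothetical.

```python
def search_with_context(lines, term, before=2, after=2):
    """Return (index, context_lines) for every line containing `term`."""
    results = []
    for i, line in enumerate(lines):
        if term in line:
            # Clamp the window so hits near either end do not go out of range.
            start = max(0, i - before)
            end = min(len(lines), i + after + 1)
            results.append((i, lines[start:end]))
    return results


# Invented log lines for the example.
log_lines = [
    "12:00:01 INFO request received",
    "12:00:01 INFO loading user profile",
    "12:00:02 WARN cache unavailable, falling back to database",
    "12:00:05 ERROR profile lookup failed",
    "12:00:05 INFO returning HTTP 500",
    "12:00:09 INFO request received",
]

for index, context in search_with_context(log_lines, "ERROR", before=1, after=1):
    print(f"match at line {index}:")
    for entry in context:
        print("   ", entry)
```

Here the warning just before the error points at the likely cause, which a bare list of matching lines would have hidden.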

Minimal Footprint – What’s the point of using logs to monitor signs of potential resource abuse within your environment if the log management platform you’re using contributes to that abuse? When you test run a logging tool, it’s crucial to be mindful of the impact it has on your system’s overall performance.

Keep It Consolidated

There’s nothing cohesive, practical, or fiscally sound about using a conveyor belt of different enterprise tools. Time is wasted by constantly readjusting to the workflow of each, and money is wasted by virtue of adding 5-10 line items to a budget rather than one.

The checklist in this article is lengthy, but surprisingly attainable within a single logging platform. If a DevOps team finds itself piecing together multiple logging and monitoring tools in order to satisfy its full range of needs across development, testing, and production, it might be time to move to a more holistic solution.