
Log scaling should be top of mind for organizations seeking to future-proof their log monitoring solutions. Logging requirements grow with use, particularly if logs are not maintained or utilized effectively. There are barriers to successful log scaling, and in this post we’ll discuss four of them: storage volume problems, increased load on the ELK stack, the amount of ‘noise’ generated by a growing ELK stack, and the pains of managing burgeoning clusters of nodes.

Capacity is one of the biggest considerations when looking at scaling your ELK stack. Regardless of whether you are looking at expanding your logging solution, or just seeking to get more out of your existing solution, storage requirements are something to keep in mind.

If you want to scale your logging solution, you will increasingly lean on your infrastructure engineers to grow your storage, improve availability and provision backups – all of which are vital to support your ambitions for growth. This will only draw their attention away from their primary functions, which include supporting the systems and products that generate these logs! You may find yourself needing to hire or train staff specifically to support your logging aspirations – this is something to avoid. Log output volumes can be unpredictable, and your storage solution should be elastic enough to support ELK stack scaling.
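As a rough illustration of why storage grows faster than raw log volume, the arithmetic can be sketched as below. The function name, the 10% indexing overhead, the single replica and the 20% free-disk headroom are all illustrative assumptions rather than figures from this post or from Elastic; substitute measurements from your own cluster.

```python
def estimate_storage_gb(daily_ingest_gb, retention_days, replicas=1,
                        index_overhead=1.1, headroom=0.2):
    """Rough disk estimate (GB) for retained log indices.

    All defaults are hypothetical placeholders:
      replicas       - replica copies kept per primary shard
      index_overhead - multiplier for on-disk index size vs. raw logs
      headroom       - fraction of disk deliberately left free
    """
    if daily_ingest_gb < 0 or retention_days < 0 or replicas < 0:
        raise ValueError("volumes, retention and replicas must be non-negative")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in the range [0, 1)")

    primary_gb = daily_ingest_gb * retention_days * index_overhead
    with_replicas_gb = primary_gb * (1 + replicas)
    # Divide by the usable fraction so the free-space headroom is preserved.
    return with_replicas_gb / (1 - headroom)


# Example: 50 GB/day kept for 30 days with one replica.
needed = estimate_storage_gb(50, 30)
print(round(needed))  # roughly 4125 GB for these assumed inputs
```

Doubling either the daily volume or the retention period doubles the result, which is why unpredictable log output makes fixed-size storage hard to plan.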

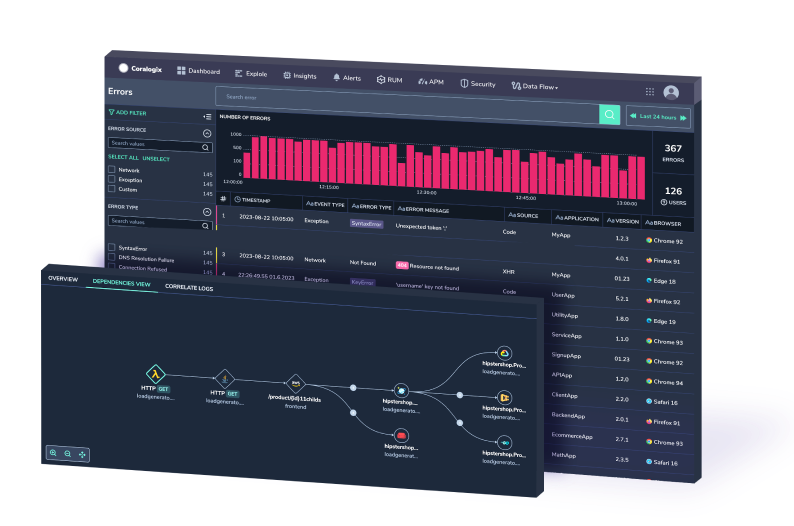

If you’re reading this then it is likely you are looking at log scaling to support some advanced analytics, potentially using machine learning (ML). For this to be effective, you need the log files to be in a highly available storage class. To optimize your storage provisioning for future ELK stack requirements, look at Coralogix’s ELK stack SaaS offering. Coralogix’s expertise when it comes to logging means that you can focus on what you want to do with your advanced logging, and we will take care of the future proofing.

So you want to scale your log solution? Well, you need to prepare for the vast volume of logs that a scaled ELK stack is going to bring with it. If you aren’t sure exactly how you plan to glean additional insights from your bigger and better ELK stack deployment, then that extra volume most certainly isn’t of much use.

In order to run effective machine learning algorithms over your log outputs, you need to be able to define what is useful and what is not. If you have yet to define what counts as a “useful” log output, the costs associated with getting your unwieldy mass of logs into a workable database will quickly escalate. Coralogix’s TCO calculator (https://coralogix.com/tutorials/optimize-log-management-costs/) will give you the ability to take stock of what is useful now, and help you to understand what outputs will be useful in the future, making sure that your scaled log solution gives you the insights you need.

Optimizing the performance of each of the constituent parts of the ELK stack is a great way of fulfilling future logging-related goals. It isn’t quite as easy as just “turning up” these functions – you need to make sure your ELK stack can handle the load first.

You can adjust Logstash to do more parsing, but this increases the ingestion load. You need to ensure that you have allocated sufficient CPU capacity for the ingestion boxes, but a common Logstash problem is that it will hoover up most of your processing capacity unless properly configured. Factor in your ingestion queue and any back pressure caused by persistent queues. Both of these issues are not only complex to resolve, but will also hamper your log scaling endeavours.
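To make the back-pressure point concrete, here is a minimal sketch of how quickly a bounded ingestion queue fills when events arrive faster than they are parsed. The function name and the example rates are hypothetical; it models a queue as simple arithmetic and does not call Logstash itself.

```python
def seconds_until_queue_full(queue_capacity_mb, ingest_mb_per_s, drain_mb_per_s):
    """Return the seconds until a bounded queue fills, or None if it never does.

    ingest_mb_per_s - rate at which inputs write events into the queue
    drain_mb_per_s  - rate at which the parsing stage consumes them
    """
    if queue_capacity_mb <= 0:
        raise ValueError("queue capacity must be positive")
    backlog_rate = ingest_mb_per_s - drain_mb_per_s
    if backlog_rate <= 0:
        # The parsing stage keeps up, so the queue does not grow.
        return None
    return queue_capacity_mb / backlog_rate


# Assumed example: a 1024 MB queue, 12 MB/s arriving, 8 MB/s parsed.
print(seconds_until_queue_full(1024, 12, 8))  # 256.0
```

Once the queue is full, the inputs are throttled, so heavier parsing without extra CPU simply moves the bottleneck upstream to whatever is shipping the logs.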

The load on Elasticsearch when scaled can vary greatly depending on how you choose to host it. With Elasticsearch scaling on-prem, failure to properly address and configure the I/O queue depth will grind your cluster to a total standstill. Of bigger importance are the JVM’s compressed object pointers – if your heap approaches the 32GB limit beyond which they can no longer be used (although Elastic still isn’t certain that the limit isn’t even lower, elastic.co/blog/a-heap-of-trouble) then performance will deteriorate. Both of these Elasticsearch concerns compound with the extent of the ELK stack scaling you are attempting, so perhaps you should delegate this to Coralogix’s fully managed ELK stack solution.
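The heap limit above can be turned into a simple sizing rule of thumb. The sketch below assumes the commonly cited guidance of giving the heap no more than half of a node’s RAM, and uses 31GB as a deliberately conservative ceiling; both the ceiling value and the function name are assumptions, and the true cutoff on your JVM may be lower, as the Elastic post linked above discusses.

```python
# Assumption: a conservative ceiling chosen to stay under the ~32 GB cutoff.
COMPRESSED_OOPS_CEILING_GB = 31


def suggest_heap_gb(node_ram_gb, ceiling_gb=COMPRESSED_OOPS_CEILING_GB):
    """Suggest a JVM heap size (whole GB) for an Elasticsearch node.

    Takes half of the node's RAM, leaving the rest for the operating
    system's filesystem cache, then caps the result at ceiling_gb.
    """
    if node_ram_gb <= 0:
        raise ValueError("node RAM must be positive")
    half_ram_gb = int(node_ram_gb) // 2
    return max(1, min(half_ram_gb, ceiling_gb))


for ram in (16, 64, 128):
    print(ram, "GB RAM ->", suggest_heap_gb(ram), "GB heap")
```

Note that under this rule a 128GB node gets the same heap as a 64GB node, which is one reason scaling Elasticsearch tends to mean adding nodes rather than buying ever larger ones.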

Whilst forewarned is forearmed when it comes to these log scaling problems, you’re only well prepared if you have fully defined how you plan to scale your ELK stack solution. The considerations for a small logging architecture scaling upward are numerous and nuanced: a single discrete parsing tier or multiple parsing tiers, and queue-mediated ingestion or multiple event stores, are just a few of the decisions you have to make when deciding to scale. Coralogix have experience in bringing logging scalability to some of the industry’s biggest and most complex architectures. This means that whatever future proofing issues may present themselves, Coralogix will have seen them before.

The last tenet of log scaling to be mindful of is the implication of having larger clusters with bigger or more nodes. This brings with it a litany of issues guaranteed to cause you headaches galore if you lack some serious expertise.

Every time you add a node to a cluster, you need to ensure that your network settings are (and remain) correct, particularly when running Logstash or Beats such as Filebeat on separate ELK and client servers. You need to ensure that the firewalls on both sides are correctly configured, and this becomes an enterprise-sized headache with significant log scaling and cluster augmentation. An additional networking trap is the maintenance of Elasticsearch’s central configuration file. The larger the ELK stack deployment, the greater the potential for mishap in this file, where the untrained or unaware will get lost in a mess of ports and hosts. At best, you have the possibility of networking errors and a malfunctioning cluster, but at worst, you will have an unprotected entrypoint into your network.
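One way to catch firewall and port mistakes early is a scripted reachability check run from each client server after a node is added. The sketch below uses only Python’s standard `socket` module; the helper names are hypothetical, and any hostnames you pass in are your own. The ports usually involved are 9200 (Elasticsearch HTTP), 9300 (Elasticsearch transport) and 5044 (the conventional Beats input on Logstash), but your deployment may use different ones.

```python
import socket


def port_is_reachable(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures alike.
        return False


def check_endpoints(endpoints, timeout=2.0):
    """Map each (host, port) pair to whether it accepted a connection."""
    return {(host, port): port_is_reachable(host, port, timeout)
            for host, port in endpoints}
```

Calling `check_endpoints([("es-node-1.internal", 9200), ("logstash-1.internal", 5044)])`, with placeholder hostnames replaced by your own, returns a dictionary you can log or alert on. A successful connection only shows that the port is open; it says nothing about whether the service behind it is secured, so it complements rather than replaces a review of the configuration file.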

Adding more nodes, if done correctly, will fundamentally make your ELK stack more powerful. This isn’t as simple as it sounds, as every node needs to be balanced to run at peak performance. Whilst Elasticsearch will try to balance shard allocation for you, this is a “one size fits all” configuration, and may not get the most out of your cluster. Shard allocation can be manually defined, but this is an ongoing process that changes with new indices, new clusters and any network changes.
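Before tuning allocation by hand, it helps to measure how uneven the current layout actually is. The following is a minimal sketch that works on a plain shard-to-node mapping; the function name and the sample data are hypothetical, and in practice the mapping would come from your cluster’s own shard listing.

```python
from collections import Counter


def shard_spread(shard_to_node, nodes):
    """Count shards per node and report the gap between busiest and idlest.

    shard_to_node - dict mapping a shard identifier to the node holding it
    nodes         - every node in the cluster, including ones with no shards
    """
    counts = Counter({node: 0 for node in nodes})
    counts.update(shard_to_node.values())
    spread = max(counts.values()) - min(counts.values()) if counts else 0
    return dict(counts), spread


# Hypothetical layout: node-c was just added and holds nothing yet.
layout = {"logs-0": "node-a", "logs-1": "node-a", "logs-2": "node-b"}
counts, spread = shard_spread(layout, ["node-a", "node-b", "node-c"])
print(counts, spread)
```

Shard counts alone are a crude measure, since shards differ in size and query load, but a large spread right after adding a node is a quick signal that the new capacity is not yet being used.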

Coralogix are experts when it comes to log scaling and future proofing ELK stack deployments, dealing with shards, nodes, clusters and all of the associated headaches on a daily basis. The entire Coralogix product suite is designed to make log scaling, both up and down, headache free and fully managed.