Reducing Latency in AI Model Monitoring: Strategies and Tools

In today’s AI-driven landscape, speed isn’t just a luxury—it’s a necessity.

When AI models respond slowly, the consequences cascade beyond mere inconvenience. User experiences deteriorate, infrastructure costs mount, and operational reliability falters. This is crucial for organizations deploying GenAI systems with complex language models or multi-step AI agents.

Latency—the time between receiving input and delivering output—is a crucial metric for your AI system. Each millisecond of delay compounds across your user base, potentially transforming an innovative solution into a frustrating experience.

In this article, we explore practical strategies for reducing latency in AI model monitoring without compromising accuracy or reliability. We then examine optimizations across data pipelines, model architecture, resource allocation, and network configuration to uncover how these techniques collectively minimize response times. Finally, we highlight how the Coralogix AI Center integrates a robust observability platform, empowering teams to detect and resolve performance bottlenecks before they impact end users.

Whether you’re struggling with sluggish response times or proactively optimizing your AI infrastructure, the techniques outlined here will help you deliver responsive, reliable AI experiences for your users.

TL;DR

- Latency in AI systems occurs across multiple stages (data ingestion, inference, post-processing), significantly impacting user experience, system throughput, and operational costs.

- Optimizing data pipelines through real-time streaming, dedicated inference servers, and microservice architecture creates the foundation for low-latency AI operations.

- Model compression techniques like pruning, quantization, and knowledge distillation reduce computational demands without sacrificing accuracy.

- Intelligent resource management (autoscaling, hardware acceleration) and network optimization (faster protocols, proximity deployment) further minimize response times.

- Coralogix AI Center’s unified platform provides real-time visibility, proactive alerts, and span-level tracing to identify latency bottlenecks before they impact users.

Understanding Latency in AI Model Monitoring

Latency in AI model monitoring represents the critical time delay between input reception and output delivery. This delay spans multiple stages—data ingestion, feature transformation, inference, and post-processing—each potentially becoming a performance bottleneck.

These stages introduce compounding complexity that can significantly amplify response times for systems using LLMs or multi-step AI agents. Real-world performance often diverges dramatically from benchmarks when these systems face production workloads.

When latency is too high, model deployment and system performance suffer in several ways:

- Extended Response Time: AI agents may become slow or non-responsive, frustrating users who expect near-real-time interactions.

- Infrastructure Strain: High latency can create queue build-ups, requiring additional compute or memory resources to handle concurrent requests.

- Deployment Complexity: To mitigate latency, teams might deploy more model instances or specialized hardware (e.g., GPUs or TPUs). While these measures help, they increase operational overhead and require careful autoscaling policies.

- Reduced Throughput: When each request takes longer to process, the system can handle fewer queries per second. This constraint can derail business objectives such as real-time recommendations or instant analytics since slower response rates directly impact overall service capacity.

- Unstable Performance: Spikes in latency often cascade into other parts of the pipeline, triggering timeouts or increased error rates, further degrading system reliability.

Proactively monitoring and managing latency through optimized pipelines, model refinement, and robust observability tools enables teams to deploy AI systems that maintain consistent performance even as demand scales.

4 Strategies for Reducing Latency

Reducing latency in AI model monitoring is critical for delivering real-time insights, especially in complex GenAI systems. Organizations can improve responsiveness without sacrificing accuracy by carefully optimizing data pipelines, compressing and refining models, making the most of available hardware, and tightening network performance.

1. Efficient Resource Utilization

Efficient resource utilization ensures low-latency performance even under fluctuating workloads. While pipeline and model optimizations reduce latency, these gains can vanish if hardware is overburdened. Autoscaling, load balancing, and hardware-specific optimizations help maintain responsiveness.

- Autoscaling & Load Balancing: Autoscaling dynamically adds or removes model-serving nodes (CPU/GPU) when usage hits certain thresholds. Load balancing distributes incoming requests, preventing any single instance from being overloaded. Together, they keep latency stable, even during traffic spikes.

- Specialized Hardware: GPUs, TPUs, and AI accelerators (e.g., NVIDIA TensorRT, Google TPUs) significantly speed up deep learning tasks. They handle matrix operations in parallel, making them vital for meeting strict latency targets in real-time AI environments.

- Memory & I/O Management: Adequate RAM, streamlined disk I/O, and co-locating data with compute resources help avoid loading delays. Data-transfer overhead decreases when models and data reside on the same machine or region, ensuring faster inference.

2. Optimizing Data Pipelines

An efficient data pipeline is the first step toward lowering overall latency. Moving from batch processing to real-time data streaming—via tools like Apache Kafka or AWS Kinesis—enables continuous updates and immediate model responses.

- Dedicated Inference Servers: Employ specialized servers (e.g., NVIDIA Triton, TensorFlow Serving) to handle high-throughput, low-latency workloads.

- Microservice Architecture: Break down AI workflows (data preprocessing, inference, post-processing) into smaller services to reduce bottlenecks.

- High-Quality Inputs: Consistent and validated input data simplifies on-the-fly transformations, cutting back on processing overhead.

Using gRPC or other binary protocols lowers communication overhead further, ensuring that data moves swiftly from one service to another.

3. Model Compression and Optimization

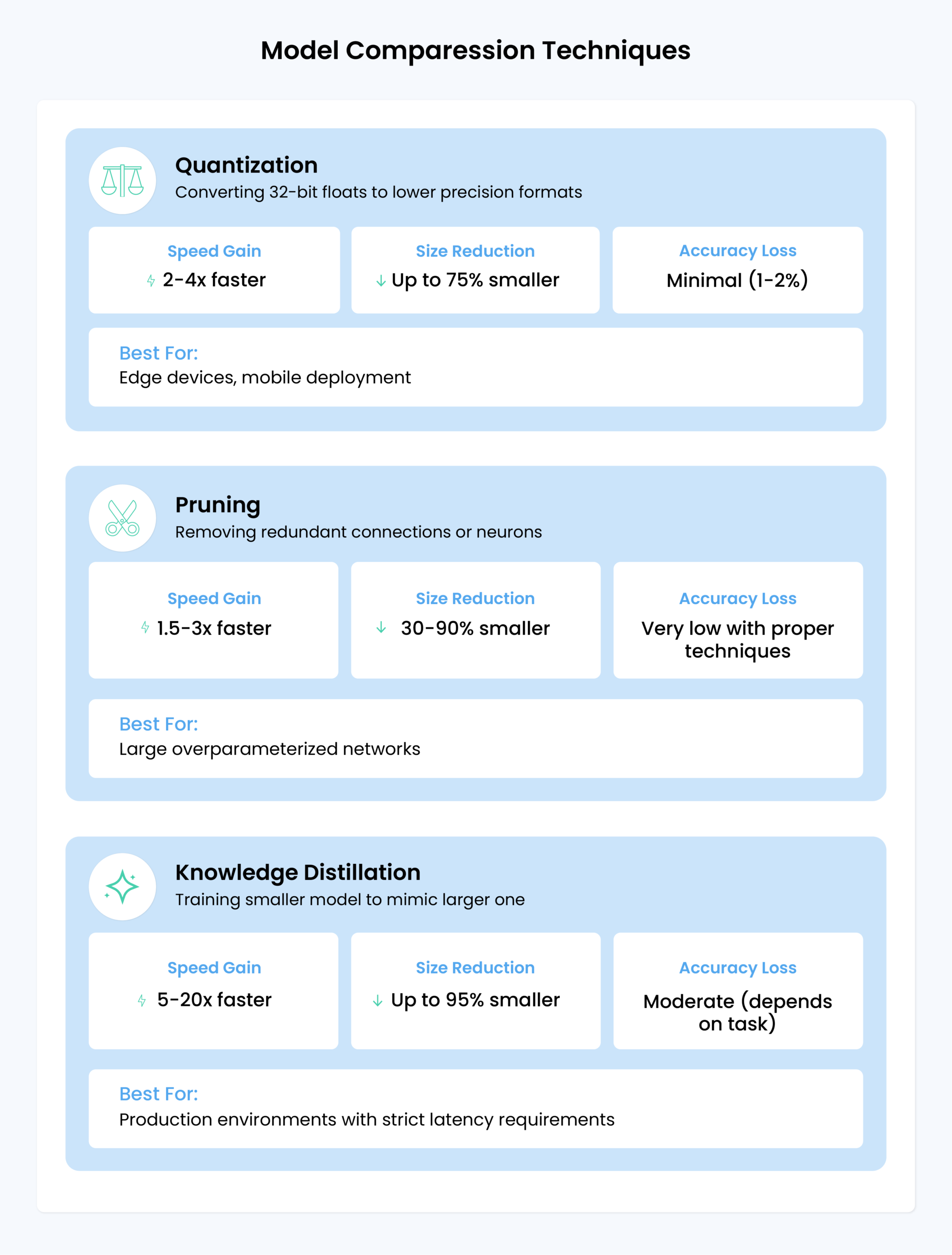

Large, complex models often boost accuracy but can slow down inference. Model compression methods address this trade-off effectively:

- Pruning: Remove low-impact connections to reduce computational load without heavily impacting accuracy.

- Quantization: Convert floating-point weights to lower-precision formats (e.g., 8-bit), accelerating math operations on supported hardware.

- Knowledge Distillation: Train a smaller “student” model to replicate the outputs of a larger “teacher” model, offering faster performance with minimal accuracy loss.

Additionally, carefully streamlining model architectures—fewer or optimized layers—helps avoid unnecessary computations. AI frameworks like PyTorch and TensorFlow include built-in tools for pruning and quantization, simplifying these workflows.

4. Network Latency Reduction

Network delays can comprise a significant portion of total response time in distributed or cloud-based AI systems. Minimizing unnecessary round-trips is key:

- Optimize API Calls: Combine requests and compress payloads for faster transfer.

- Use Faster Protocols: gRPC (over HTTP/2) reduces data serialization overhead compared to REST, enabling persistent connections and parallel multiplexing.

- Leverage Proximity: Deploy model servers closer to end-users or data sources, potentially across multiple regions, to shorten travel distance.

CDNs and edge computing can further trim latency, while private links or dedicated cloud connections help ensure consistent throughput. Continuous monitoring and distributed tracing then pinpoint bottlenecks, enabling swift adjustments or traffic redistribution.

Challenges and Considerations

Reducing latency in AI model monitoring is not without trade-offs. Teams must balance speed against accuracy, cost, throughput, and other operational realities. Below are the primary challenges and key factors to consider when optimizing for low latency in AI systems.

Trade-Off Between Latency and Accuracy

Optimizing a model for speed can sometimes compromise its quality or predictive power.

- Compression vs. Quality: Techniques like pruning and quantization reduce inference time but risk degrading performance if pushed too far.

- Model Size vs. Responsiveness: Smaller language models generally respond faster but may provide less fluent or accurate outputs.

- Best Practice: Pair each latency-focused change (e.g., model compression) with validation tests to ensure it meets acceptable quality thresholds.

Throughput vs. Individual Latency

In multi-user environments, there’s a tension between maximizing total throughput (requests per second) and keeping response time low for each user.

- Concurrency: Running multiple inferences on one GPU speeds up overall processing but can slow individual responses.

- Batching: Grouping requests before processing boosts hardware utilization but may introduce wait times for the first request in the batch.

- Instance Scaling: Spinning up more instances or GPUs can help maintain both throughput and per-request latency targets, though at added infrastructure cost.

| Concurrency Setting | Impact on Throughput | Impact on Individual Latency |

| Low Concurrency | Lower overall throughput | Faster single-request responses |

| Moderate Concurrency | Balanced approach | Acceptable user-facing response times in most cases |

| High Concurrency | Maximizes utilization and total requests served | May raise each request’s response time slightly |

Table: Impact of Different Concurrency Settings on System Performance

Monitoring Overhead

Observability adds some system overhead, so capturing too much detail may slow response times.

- Excessive Instrumentation: Detailed logging or full-trace captures for every request can create extra processing or network load.

- Selective Sampling: Monitoring only a subset of requests (or capturing partial traces) often balances visibility and speed.

- Pipeline Latency: High-latency monitoring pipelines delay alerts and reactive measures. Scalable, low-latency observability tools can mitigate this.

Complexity of Debugging Latency Issues

Latency bottlenecks may stem from model architecture, upstream data stores, network constraints, or external API dependencies.

- Root Cause Identification: Without comprehensive metrics and distributed tracing, teams can struggle to pinpoint which subsystem is the culprit.

- Holistic Observability: Monitoring every step (data ingestion, inference, output) allows quick correlation of concurrent events.

- Correlated Metrics: Linking spikes in input data size or memory usage to increased inference times helps identify the precise cause of slowdowns.

Balancing Latency with Other Metrics

While organizations want fast AI responses, factors like cost, memory usage, and operational complexity also matter.

- Cost Implications: Using high-end GPUs or deploying many regional instances lowers latency but increases infrastructure expenses.

- Scalability: Maintaining sub-second responses for a global user base can require robust scaling strategies, adding complexity.

- Business-Driven Thresholds: Define service-level agreements (SLAs) or acceptable p95 latencies so teams invest resources up to the point that delivers tangible value.

By planning around these challenges—carefully tuning models, managing concurrency, and adopting a smart observability approach—teams can maintain fast AI response times without sacrificing accuracy or inflating costs.

Coralogix’s AI Center for Reducing Latency in AI Model Monitoring

Coralogix’s AI Center offers a unified platform that integrates AI observability into its current platform. Its AI Center centralizes real-time visibility, proactive alerts, and root cause analysis, ensuring teams can swiftly identify and resolve performance bottlenecks.

Real-Time Visibility and Continuous Tracking

A key strength of the AI Center is its ability to instantly capture requests, responses, and performance data. This continuous approach provides a live view of how AI models handle incoming workloads, revealing latency spikes in real-time.

When an AI service begins to slow due to increased user load or inefficient data pipelines, engineers can spot the issue in dashboards, highlighting rising response times and workload imbalances.

Proactive Alerts

Latency can easily escalate from a minor inconvenience to a critical outage. Coralogix AI Center issues live notifications whenever latency levels cross defined thresholds to prevent such scenarios. These warnings appear in real time on dynamic dashboards, enabling teams to act quickly rather than waiting for user complaints.

Key benefits of proactive alerts include:

- Early Detection: Identifies performance dips the moment they arise, reducing the risk of cascading failures.

- Focused Escalation: Routes alerts to the right team members, ensuring swift investigation and targeted mitigation.

- Customizable Rules: Allows users to tailor alert thresholds to reflect the unique requirements of each model or application environment.

Span-Level Tracing for Root Cause Analysis

Diagnosing latency can be difficult in multi-service architectures. The Coralogix AI Center’s span-level tracing pinpoints where and when slowdowns occur, whether in data ingestion, model inference, or downstream services. By mapping out each call and its duration, teams can quickly determine which component is causing delays.

- Detailed Timeline: Reveals the duration of every step in the request-response cycle.

- Contextual Metrics: Compares current performance with historical data to identify emerging patterns.

Tailored Dashboards and Specialized Evaluators

Different projects have different latency objectives. The AI Center allows teams to organize models into a project catalog and apply specialized AI evaluators, such as checks for prompt injection or excessive resource consumption. This helps address potential latency risks, like security flaws or highly inefficient queries.

Organizing services under dashboards keeps the workflow uncluttered. Engineers see exactly the metrics that matter—response times, error rates, latency rates—without sifting through irrelevant data. The combination of curated views and real-time analytics ensures that problem areas stand out when they appear.

A Comprehensive Approach to Latency Management

Coralogix AI Center’s Center combines continuous monitoring, proactive alerting, and detailed tracing within a single solution. The outcome is a more resilient AI ecosystem where latency is quickly identified and mitigated, freeing teams to focus on innovation rather than reaction.

Conclusion: Building Low-Latency AI Systems That Deliver

Optimizing latency in AI model monitoring isn’t simply a technical challenge—it’s a critical business imperative. Organizations deploying GenAI applications face a clear reality: every millisecond matters. Slow responses frustrate users, inflate infrastructure costs, and undermine the value proposition AI promises to deliver.

Effective latency management demands coordinated improvements across data pipelines, model optimization, resource utilization, and network configuration. Combined with comprehensive observability practices, teams can quickly pinpoint bottlenecks and implement targeted improvements.

Coralogix’s AI Center integrates complete AI observability into a unified platform, allowing teams to identify and resolve performance issues before they impact users rapidly. Real-time alerts address emerging problems before they escalate, while advanced analytics track optimization impacts against meaningful business metrics.

The goal isn’t zero latency—finding the optimal balance that delivers exceptional user experiences while meeting SLAs and maintaining cost efficiency. Organizations that master this balance will set the standard for AI services that consistently perform at scale.

FAQ

What causes high latency in AI model monitoring?

How much latency is acceptable for AI applications?

How can I reduce latency without sacrificing model accuracy?

What tools help monitor and reduce AI model latency?

How does latency affect the business value of AI applications?

References

- Luca Stamatescu et al., “The LLM Latency Guidebook: Optimizing Response Times for GenAI Applications,” Microsoft Tech Community – AI Azure Services Blog techcommunity.microsoft.com

- Cris Daniluk, “Optimizing Inference Latency with AWS Bedrock: Key Announcements from re: Invent 2024,” Rhythmic Technologies Blog rhythmictech.com

- Aerospike (Steve Tuohy), “Reducing Latency and Costs in Real-Time AI Applications,” Aerospike Blog aerospike.com

- “Top 5 Strategies for Optimizing Data Pipelines in Your AI Tech Platform,” Data Science Society Blog datasciencesociety.net

- Armon Dadgar, “AI Infrastructure’s Biggest Challenges – The Data Pipeline is the New Secret Sauce,” Heavybit heavybit.com

- Microsoft Azure, “Networking recommendations for AI workloads on Azure (IaaS),” Microsoft Learn Docs learn.microsoft.com

- Greg Loo, “LLM Inference Performance Engineering: Best Practices,” Databricks Engineering Blog, Oct. 2024 databricks.com

“Best Practices for Monitoring AI Models in Production,” Toxigon (AI Monitoring) Blog toxigon.com