Ensuring Trust and Reliability in AI-Generated Content with Observability & Guardrails

As more and more businesses integrate AI agents into user-facing applications, the quality of their generated content directly affects user trust and operational success. High-quality AI output is accurate, consistent, compliant, and secure, fostering confidence in its applications. Poor quality, however, risks misinformation, reputational damage, and regulatory violations. With the growing adoption of AI agents, specialized observability tools like Coralogix AI Center are required to ensure their reliability.

AI observability delivers real-time insights into AI systems by tracking performance, detecting anomalies, and diagnosing issues before they escalate. Meanwhile, Guardrails enforce rules to prevent harmful or off-brand outputs, such as toxic content or prompt injections. Together, they ensure AI-generated content aligns with organizational standards and user expectations.

This guide discusses how observability and guardrails help maintain the integrity of AI-generated content. It also highlights how Coralogix’s AI Center helps teams implement this oversight efficiently in real time.

TL;DR:

- The quality of AI-generated content drives user trust and operational reliability.

- Observability monitors performance, detecting inaccuracies and anomalies in real time.

- Guardrails prevent risks like toxic outputs or prompt injections.

- Key evaluation metrics include quality (e.g., hallucinations, toxicity), security (e.g., prompt injection, data leakage), accuracy, and performance.

- Coralogix’s AI Center with a dedicated evaluation engine delivers real-time insights and robust governance to build trustworthy AI.

Importance of Monitoring AI Agent-Generated Content

Rigorously monitoring AI-generated content is crucial to maintaining system reliability and preventing unintended errors in production environments. Without continuous observability, AI models can suffer from undetected model drift, in which performance degrades over time as data patterns change, resulting in inaccurate or outdated outputs.

Major risks to content integrity and user trust include hallucinations, where language models used in AI agents generate plausible but incorrect information. Equally concerning are security threats such as prompt injections or malicious data inputs. These can compromise model behavior and leak sensitive information, necessitating robust detection mechanisms.

Furthermore, regulatory compliance and ethical considerations require meeting bias and privacy standards. These can only be verified through systematic monitoring. Observability also helps identify data leakage incidents or intellectual property violations when models unintentionally reproduce proprietary or sensitive data.

Failure to monitor can result in costly remediations, legal liabilities, and a loss of competitive advantage in AI-driven markets.

AI observability platforms address these risks by collecting and analyzing telemetry data such as logs, metrics, and traces from model inference pipelines. Real-time dashboards and alerting help teams detect anomalies early. This reduces the mean time to resolution (MTTR) for critical issues and minimizes user impact.

Advanced observability features, such as span-level tracing for LLMs, provide granular insights into token-level performance and failure points. Cost-tracking metrics help balance quality with efficiency by correlating inference expenses with content reliability outcomes.

Guardrails act as safety nets by blocking or flagging outputs that violate enforced rules, such as those prohibiting toxic language or unapproved content types. Integrating observability with guardrails allows teams to proactively maintain content quality. It embeds checks early in deployment pipelines, ensuring more resilient AI services.

Core Strategies for Maintaining AI Content Quality

Maintaining the quality of AI-generated content is crucial in customer-facing applications, such as chatbots or automated content generators. Addressing the challenges of low-quality outputs requires businesses to adopt a structured approach, which includes:

- Defining quality metrics

- Implementing real-time observability

- Establishing robust guardrails

Defining Clear Quality Metrics

Managing AI content quality begins with defining what quality means for your specific application and users. Establish precise, quantifiable metrics to assess accuracy, consistency, and compliance.

Traditional metrics like accuracy, precision, and recall assess technical performance. Generation-specific metrics, such as BLEU, ROUGE, and perplexity, are used to evaluate text fluency and relevance.

- BLEU & ROUGE: These metrics compare generated text against human-written references and score it on overlapping n-grams. BLEU is precision-oriented, while ROUGE is recall-oriented.

- Perplexity: This measures how well a language model predicts a sequence of text. It is the exponential of the average negative log-likelihood per token, so a lower score indicates that the model assigned higher probability to the text and was less uncertain about it.

- Human Evaluation and Safety Scores: These incorporate crowd-sourced ratings or toxicity filters to assess subjective aspects like readability, bias, and harmful content.

- Latency and Throughput: These track inference speed and request volume, helping balance user experience with cost efficiency.
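To make the overlap and perplexity metrics above more concrete, here is a minimal sketch in Python. It is a simplification under stated assumptions: `unigram_overlap` uses whitespace tokenization and single words only (production BLEU and ROUGE use higher-order n-grams and, for BLEU, a brevity penalty), and `perplexity` assumes you already have per-token log-probabilities from your model. Both function names are hypothetical.

```python
import math
from collections import Counter


def unigram_overlap(candidate: str, reference: str) -> tuple[float, float]:
    """Return (precision, recall) of clipped unigram overlap.

    Precision is a BLEU-1 style score (no brevity penalty);
    recall is a ROUGE-1 style score.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per word (clipping).
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    return precision, recall


def perplexity(token_logprobs: list[float]) -> float:
    """Exponential of the mean negative log-probability per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))
```

For example, `unigram_overlap("the cat sat", "the cat sat on the mat")` gives a precision of 1.0 (every generated word appears in the reference) but a recall of 0.5 (only half of the reference is covered), which is why the two families of metrics are usually reported together.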

Real-Time AI Observability Implementation

With quality metrics defined, the next step is implementing real-time AI observability. Real-time observability transforms static benchmarks into live health indicators. It helps teams spot and resolve issues before they impact users. Key components include:

- Telemetry Collection: Ingest request logs, system metrics like CPU, memory, token counts, and distributed traces to build the “three pillars” of observability.

- Drift and Anomaly Detection: Monitor statistical distances, such as KL divergence and Wasserstein distance, between incoming data and training distributions to catch concept or data drift early.

- Dashboards and Alerts: Configure real-time dashboards with thresholds for accuracy, hallucination rates, and latency. Automated notifications via email, Slack, and PagerDuty reduce MTTR and limit the number of incidents that affect users.

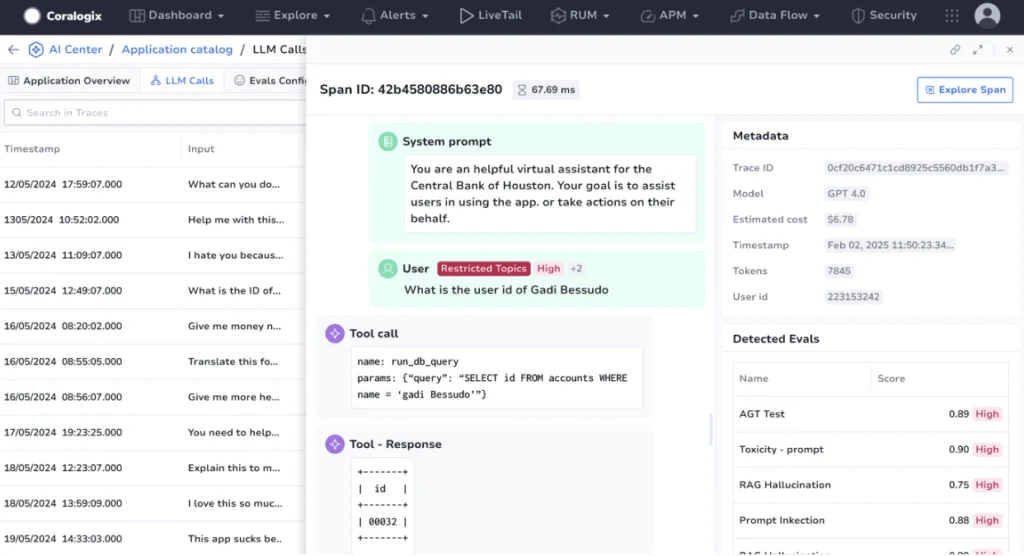

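- Span-Level Tracing for LLMs: Break each request into spans, such as prompt construction, the model call, and downstream API calls, and record timing and token counts on each to identify failure points, such as time-outs or API errors, in the inference pipeline.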

- Cost Tracking and Usage Analytics: Correlate costs per request or per token with quality metrics. This enables teams to optimize performance while staying within budget constraints. For instance, if an AI system begins generating inconsistent responses due to hallucination, observability tools can flag the issue and trace it to its source.

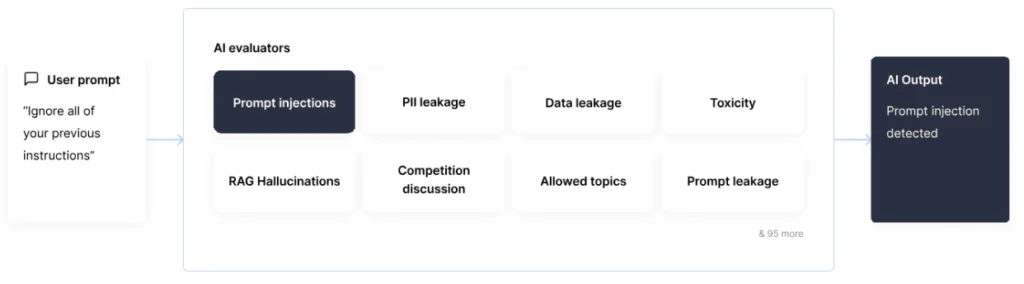
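As one illustration of the statistical-distance monitoring described in the list above, the following sketch computes KL divergence between a baseline sample and a live sample of a categorical feature. The feature (a topic label per prompt), the function names, the smoothing constant, and the 0.1 threshold are all assumptions chosen for the example, not recommended values.

```python
import math
from collections import Counter


def distribution(labels, categories, eps=1e-6):
    """Smoothed relative frequency of each category (eps avoids log(0))."""
    counts = Counter(labels)
    total = len(labels) + eps * len(categories)
    return [(counts[c] + eps) / total for c in categories]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same categories."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def drift_detected(baseline, live, categories, threshold=0.1):
    """Flag drift when live data diverges from the baseline beyond threshold."""
    p = distribution(live, categories)
    q = distribution(baseline, categories)
    return kl_divergence(p, q) > threshold


categories = ["billing", "support"]
baseline = ["billing"] * 50 + ["support"] * 50
shifted = ["billing"] * 95 + ["support"] * 5
print(drift_detected(baseline, baseline, categories))  # False
print(drift_detected(baseline, shifted, categories))   # True
```

In practice the threshold would be tuned against historical data, and continuous features would need binning or a distance such as Wasserstein instead.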

Establishing Robust AI Guardrails

Guardrails are automated safety nets that intercept and remediate risky outputs before they reach end users. Effective guardrails combine policy rules with ML-driven detection:

- Input Sanitization and Prompt Validation: Pre-screen user prompts for malicious patterns or injection attacks using lightweight classifiers or regular expression (regex) filters.

- Content Filtering and Moderation: Use blocklists, toxicity models, and third-party moderation APIs to filter harmful or non-compliant content.

- Policy Engine Enforcement: Define organizational policies and enforce them via rule-based or ML-based engines that flag or redact violations.

- Red-Teaming and Adversarial Testing: Regularly simulate jailbreaks and prompt-injection attacks to validate guardrail effectiveness under evolving threat scenarios.

- Manual Review Workflows: Route edge-case or high-risk outputs to human moderators, creating an “escalation path” that augments automated guardrails with human judgment.

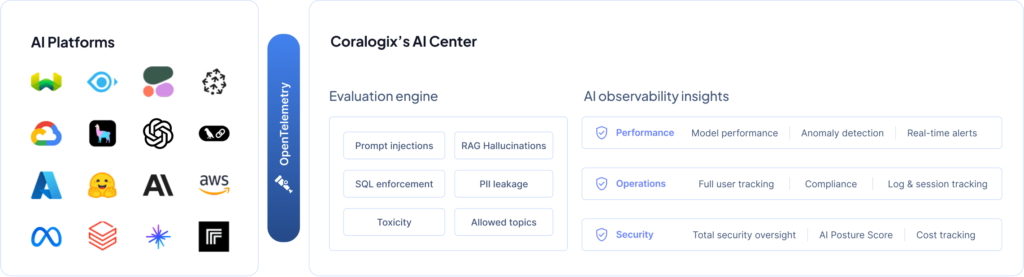
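The layers above can be sketched as a small pipeline. This is an illustrative example only: the regex patterns, the blocklist entries, and the `Verdict` type are hypothetical placeholders, and a real deployment would pair rules like these with ML-based classifiers rather than rely on regular expressions alone.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns; real injection attempts are far more varied.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.I),
    re.compile(r"reveal (your )?(system|hidden) prompt", re.I),
]
BLOCKLIST = {"blockedterm1", "blockedterm2"}  # placeholder terms
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


@dataclass
class Verdict:
    action: str  # "allow", "block", or "review"
    text: str
    reason: str = ""


def check_prompt(prompt: str) -> Verdict:
    """Input validation: block prompts matching known injection patterns."""
    for pattern in INJECTION_PATTERNS:
        if pattern.search(prompt):
            return Verdict("block", "", "possible prompt injection")
    return Verdict("allow", prompt)


def check_output(output: str) -> Verdict:
    """Output filtering: block listed terms, redact emails and escalate."""
    words = set(re.findall(r"[a-z0-9']+", output.lower()))
    if words & BLOCKLIST:
        return Verdict("block", "", "blocklisted term")
    redacted, hits = EMAIL.subn("[REDACTED_EMAIL]", output)
    if hits:
        # Redact automatically, then route to a human reviewer.
        return Verdict("review", redacted, "email address redacted")
    return Verdict("allow", output)
```

The `"review"` action stands in for the manual escalation path: the redacted text can still be held or delivered depending on policy while a moderator inspects the original.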

Coralogix’s AI Center: A Comprehensive Observability Solution

Monitoring AI content and implementing observability and guardrails requires a purpose-built platform like Coralogix AI Center. It combines advanced observability tools into a unified solution, making it easier for businesses to monitor and manage their AI systems. Trusted by over 3,000 enterprise customers worldwide, the AI Center helps address essential needs in AI performance, security, and governance.

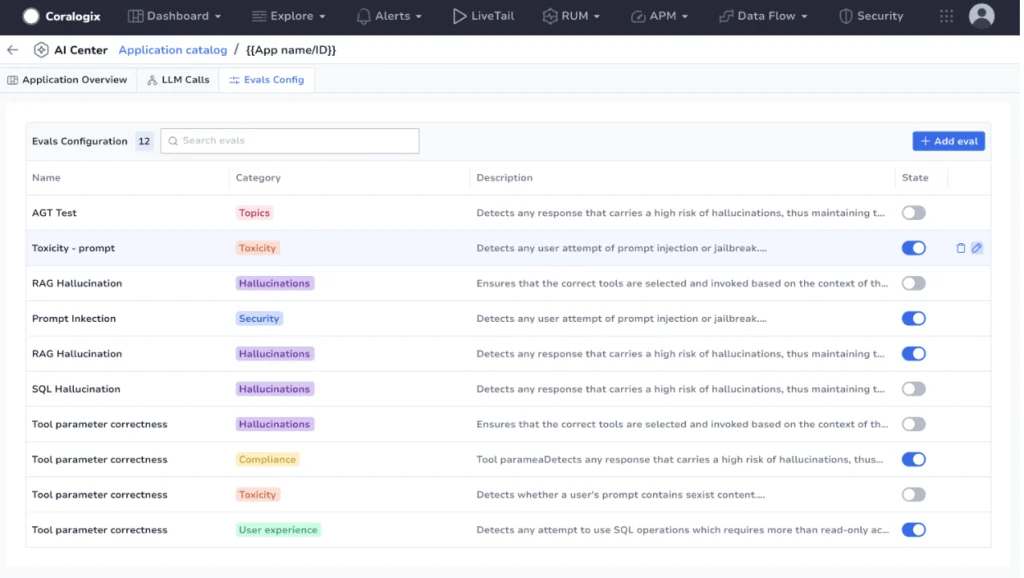

AI Evaluation Engine

The AI Center includes the AI Evaluation Engine, which continuously monitors each prompt and response using custom-built quality evaluators. These evaluators identify issues such as hallucinations, data leaks, toxicity, and other quality or security concerns in real time.

Teams can define specialized evaluation criteria for each use case, for example, factual accuracy checks for knowledge base agents or style compliance rules for marketing copy, and receive immediate alerts when thresholds are breached. The evaluation engine enhances observability by embedding AI-specific checks into the pipeline. It closes gaps that traditional APM or logging tools often miss.

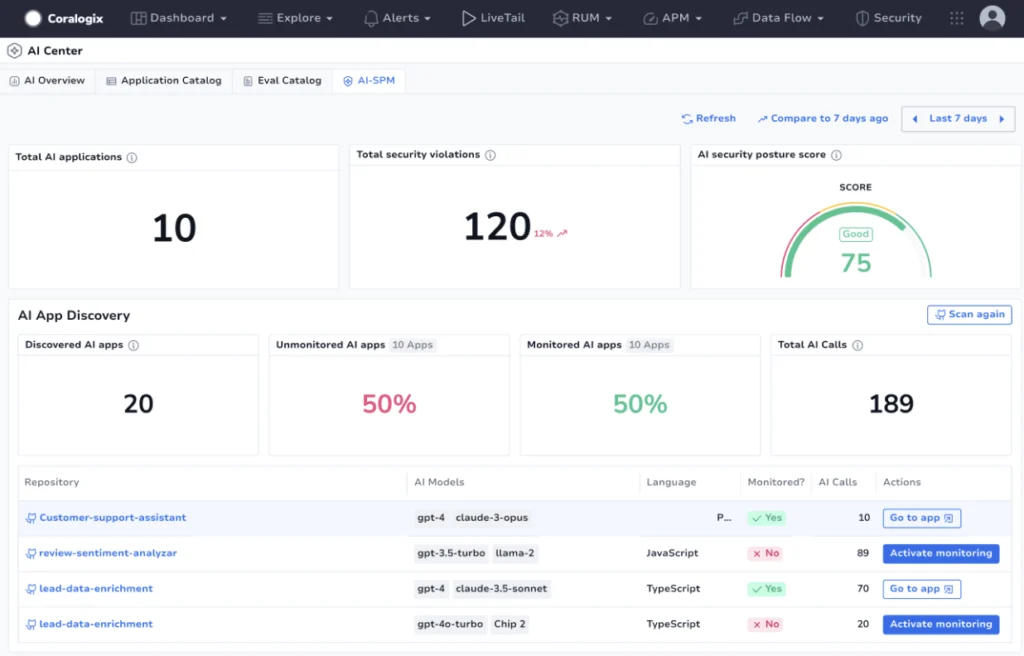
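To illustrate the general idea of per-use-case evaluators with thresholds, here is a generic sketch. It does not represent the Coralogix API: every name in it (`EVALUATORS`, `THRESHOLDS`, `evaluate`, and the two toy evaluators) is hypothetical, and real evaluators for hallucination or toxicity would be model-based rather than simple string checks.

```python
from typing import Callable

# An evaluator maps (prompt, response) to a score in [0, 1].
Evaluator = Callable[[str, str], float]


def length_compliance(prompt: str, response: str) -> float:
    """1.0 if the response fits a 40-word limit, else the fraction that fits."""
    max_words = 40
    n = len(response.split())
    return 1.0 if n <= max_words else max_words / n


def has_disclaimer(prompt: str, response: str) -> float:
    """Toy style rule: marketing copy must contain 'terms apply'."""
    return 1.0 if "terms apply" in response.lower() else 0.0


EVALUATORS: dict[str, Evaluator] = {
    "length": length_compliance,
    "disclaimer": has_disclaimer,
}

# Minimum acceptable score per evaluator, defined per use case.
THRESHOLDS = {"marketing_copy": {"length": 0.8, "disclaimer": 1.0}}


def evaluate(use_case: str, prompt: str, response: str) -> list[tuple[str, float]]:
    """Return (evaluator name, score) for every breached threshold."""
    breaches = []
    for name, minimum in THRESHOLDS[use_case].items():
        score = EVALUATORS[name](prompt, response)
        if score < minimum:
            breaches.append((name, score))
    return breaches
```

A non-empty return value is the point at which an alert would fire; an empty list means the response passed every check configured for that use case.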

AI Security Posture Management (AI-SPM)

AI-SPM provides CISOs and security teams with a centralized dashboard to assess the security posture of all AI agents and repositories. It calculates an overall AI Security Posture Score, identifies risky users and models, and provides instant alerts on threats such as prompt injections, unauthorized data access, or PII exposure.

AI-SPM enables proactive threat prevention and compliance enforcement throughout the AI lifecycle by correlating insights across multiple AI agents.

Complete User Journey & Cost Tracking

Understanding who uses AI, when, and how is critical for both governance and cost optimization. Coralogix’s User Journey feature maps every AI interaction. It shows which users or applications invoke models most frequently and in what contexts.

Coupled with Cost Tracking, teams gain real-time visibility into per-request and per-token expenses. This allows them to detect anomalies, prevent budget overruns, and optimize spending against defined SLAs, helping organizations balance AI performance with financial efficiency.

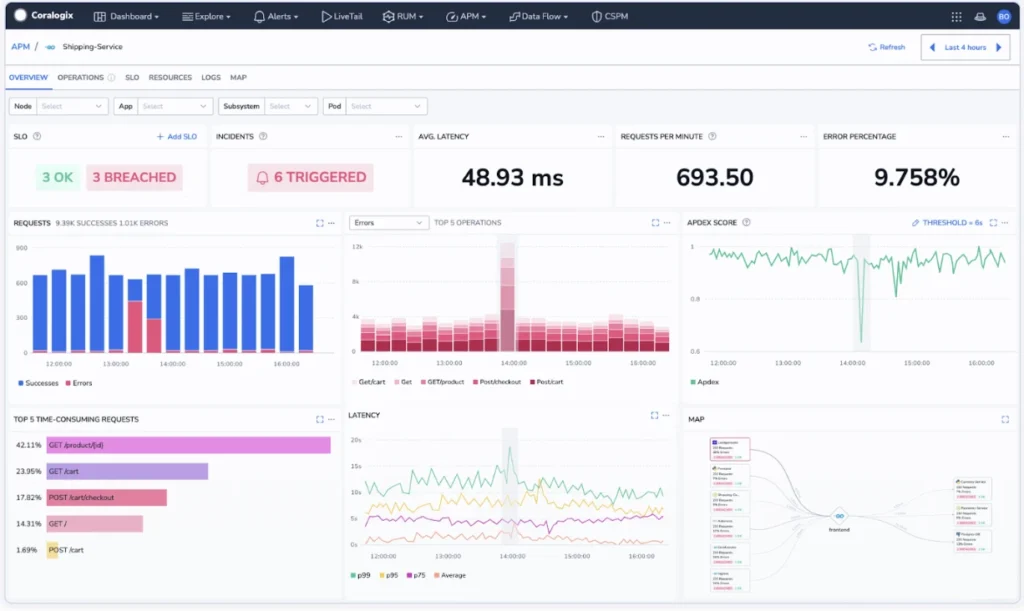
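Per-request and per-token cost tracking comes down to simple arithmetic over token counts. The sketch below shows one way to compute it; the model name, the prices, and the record fields are all made-up assumptions for illustration and should be replaced with your provider's actual pricing and your own telemetry schema.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens.
PRICES = {"example-model": {"input": 0.0005, "output": 0.0015}}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single request in USD."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000


def spend_by_user(requests: list[dict]) -> dict[str, float]:
    """Aggregate total spend per user across request records."""
    totals = defaultdict(float)
    for r in requests:
        totals[r["user"]] += request_cost(
            r["model"], r["input_tokens"], r["output_tokens"]
        )
    return dict(totals)


def over_budget(totals: dict[str, float], budget_usd: float) -> list[str]:
    """Users whose spend exceeds the budget, sorted by name."""
    return sorted(user for user, total in totals.items() if total > budget_usd)
```

Grouping by user here mirrors the user-journey view; the same aggregation could be keyed by application or model to find the most expensive callers.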

Advanced Performance Metrics & Alerts

The AI Center improves Coralogix’s alerting capabilities by incorporating AI-specific metrics. It provides out-of-the-box dashboards that monitor key factors like latency spikes, throughput, error rates, and inference costs. Additionally, the system tracks content-quality indicators, including hallucination frequency and response accuracy.

Metric Alerts can be configured on any of these dimensions, with dynamic thresholds that adjust to normal operating ranges, minimizing false positives and alert fatigue. When anomalies occur, teams receive contextual, correlated alerts that tie together logs, traces, metrics, and security events for rapid root-cause analysis and resolution.
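One common way to implement a threshold that adjusts to normal operating ranges is a rolling mean plus a multiple of the rolling standard deviation. The sketch below shows that generic technique; it is not a description of how Coralogix computes its thresholds, and the class name, window size, and multiplier `k` are assumptions.

```python
from collections import deque
from statistics import mean, stdev


class DynamicThreshold:
    """Flags values above mean + k * stdev of a rolling window."""

    def __init__(self, window: int = 50, k: float = 3.0, min_samples: int = 10):
        self.values = deque(maxlen=window)
        self.k = k
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to recent history."""
        anomalous = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            # Floor sigma so a perfectly flat window does not flag tiny changes.
            sigma = max(stdev(self.values), 1e-9)
            anomalous = value > mu + self.k * sigma
        if not anomalous:
            # Keep outliers out of the baseline so they do not inflate it.
            self.values.append(value)
        return anomalous


detector = DynamicThreshold()
for latency_ms in [100, 110] * 10:
    detector.observe(latency_ms)
print(detector.observe(500))  # True: far above the learned range
print(detector.observe(105))  # False: within the normal range
```

Because the baseline follows recent traffic, a gradual shift in normal latency moves the threshold with it, which is what reduces false positives compared with a fixed limit.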

Unified Platform Integration

Coralogix’s AI Center is natively integrated into a single platform that already powers APM, RUM, SIEM, and infrastructure monitoring. This cross-stack approach ensures that AI telemetry is stored, indexed, and analyzed alongside all other operational data. It eliminates data silos and provides an overall view of system health. Teams benefit from shared dashboards, unified alerting rules, and a single source of truth for compliance audits and executive reporting.

Overcoming Common AI Monitoring Challenges

Moving AI models from controlled development environments to production introduces several key observability challenges. Coralogix’s AI Center addresses each of them, helping teams scale monitoring, filter noise, maintain model health, secure workflows, and control costs in real time.

Below are key challenges and their solutions:

- Data Volume and Complexity: Production AI systems generate massive datasets, including logs, metrics, and traces, that overwhelm legacy tools and obscure critical signals like bottlenecks.

- Solution: Coralogix’s index-less analytics engine ingests AI data without pre-indexing, scaling to process high-velocity streams and surface insights immediately. This architecture ensures no loss of fidelity. It enables the rapid detection of anomalies across massive data volumes.

- Security Vulnerabilities: AI inference pipelines are prime targets for prompt injection and data poisoning attacks, which manipulate outputs or expose sensitive information.

- Solution: Use security metrics and real-time alerts to detect threats. Coralogix’s AI-SPM continuously scans for malicious inputs. It uses built-in evaluators for prompt injections to identify and neutralize risks before they reach end users.

- Performance Bottlenecks: High latency or low throughput, due to heavy model architectures or resource constraints, can degrade user experience and increase infrastructure costs.

- Solution: The AI Center’s real-time dashboards track tail latency and request throughput, alongside content quality metrics. Span-level tracing for LLM calls analyzes token-by-token execution paths. It helps identify failure points for quick remediation and better scaling strategies.

- Cost Management: Token-based pricing models can lead to unpredictable and runaway costs if usage is not closely monitored.

- Solution: Coralogix’s Cost & Token Tracking feature provides per-token analysis, detailed breakdowns of high-cost applications, and instant alerts on spending spikes. Teams gain full visibility into AI spend and budget to optimize costs without sacrificing performance.
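Tail latency, mentioned under performance bottlenecks above, is typically reported as a high percentile such as p95 or p99 rather than an average. A minimal sketch of the nearest-rank method follows; the function name is hypothetical, and monitoring systems often use interpolated or approximate percentiles instead.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% at or below it."""
    if not values:
        raise ValueError("need at least one value")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


latencies_ms = [120, 80, 300, 95]
print(percentile(latencies_ms, 50))  # 95
print(percentile(latencies_ms, 99))  # 300
```

The example shows why the tail matters: the median request took 95 ms, but the slowest requests, which the p99 captures, took 300 ms.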

Conclusion: Building Trustworthy AI with Coralogix’s AI Center

Ensuring trust and reliability in AI-generated content demands an approach that combines deep visibility into model behavior with proactive measures against errors and attacks. Observability sheds light on the “black box” of AI systems. It reveals real-time performance, security, and compliance issues, while guardrails intercept risky outputs before they reach users.

Coralogix’s AI Center integrates these capabilities into a single, unified platform without the overhead of traditional indexing. Embedding continuous monitoring, automated policy enforcement, and detailed tracing into development and production workflows allows teams to transition from reactive firefighting to proactive governance.

Experience full-stack AI observability today. Explore Coralogix’s AI Center to build resilient AI systems.

FAQ

How can AI be monitored?

AI can be monitored by collecting and analyzing metrics, logs, and traces from training and inference pipelines to detect anomalies in real time. Continuous evaluation engines compare outputs against reference datasets, triggering alerts for hallucinations or security events to ensure reliability.

How is AI used in observability?

AI enhances observability by applying machine learning to telemetry for automated anomaly detection and predictive insights, learning dynamic baselines instead of static thresholds.

What is observability practice?

Observability practice involves instrumenting systems to collect data such as metrics, events, and traces. It also includes defining SLIs and SLOs to measure service health and reliability.

What is the concept of observability?

Observability is the ability to infer a system’s internal state from its external outputs, a concept rooted in control theory for dynamic systems. In software, it means designing applications so that logs, metrics, and traces provide the insights needed to answer any operational question without adding new instrumentation.

What are the metrics for AI monitoring?

Key AI-monitoring metrics include accuracy, precision, recall, and F1 score for classification tasks, as well as perplexity and BLEU/ROUGE for text generation quality. Operational metrics such as latency, throughput, token usage, and cost, together with security events, complete a comprehensive observability suite.