Scaling AI Observability for Large-Scale GenAI Systems

As organizations deploy increasingly complex Generative AI (GenAI) models, AI observability has risen to the forefront of technical priorities. Traditional monitoring solutions were never designed for today’s distributed, data-hungry AI workflows. Minor failures can impact large-scale systems like recommendation agents, fraud detection applications, or language models.

Computational resource bottlenecks or unnoticed generative content failures (e.g., hallucinations) can cause performance degradation, compliance issues, and even reputational harm.

Recent surveys indicate that over 80% of enterprises struggle with the deluge of telemetry data across siloed tools and sources, exposing them to blind spots and late detection of critical failures.

Yet robust observability can mitigate these risks. Unlike basic monitoring, observability takes a holistic approach to system health by capturing a wide range of signals—metrics, logs, traces, and events—and correlating them to reveal what is happening and why it is happening.

This article explores why scaled AI observability is critical for large GenAI deployments, how it differs from traditional monitoring, and which practices and technologies enable success. We define AI observability clearly, then examine its key challenges—from massive data volumes to dynamic model behavior—and progress to recommended strategies.

Throughout, the goal is to outline a path toward an observability strategy that keeps complex AI systems performing reliably and transparently at scale.

TL;DR

- AI observability provides end-to-end visibility into complex AI systems’ model performance, data pipelines, and infrastructure.

- End-to-end pipeline management and AI-powered monitoring tools are essential for real-time detection and root cause analysis.

- Best practices include tracking key model metrics, thorough logging, and integrating observability into the CI/CD process.

- Future innovations (like generative AI for log analysis and retrieval augmentation) promise more proactive, intelligent observability.

AI Observability: Definition and Importance

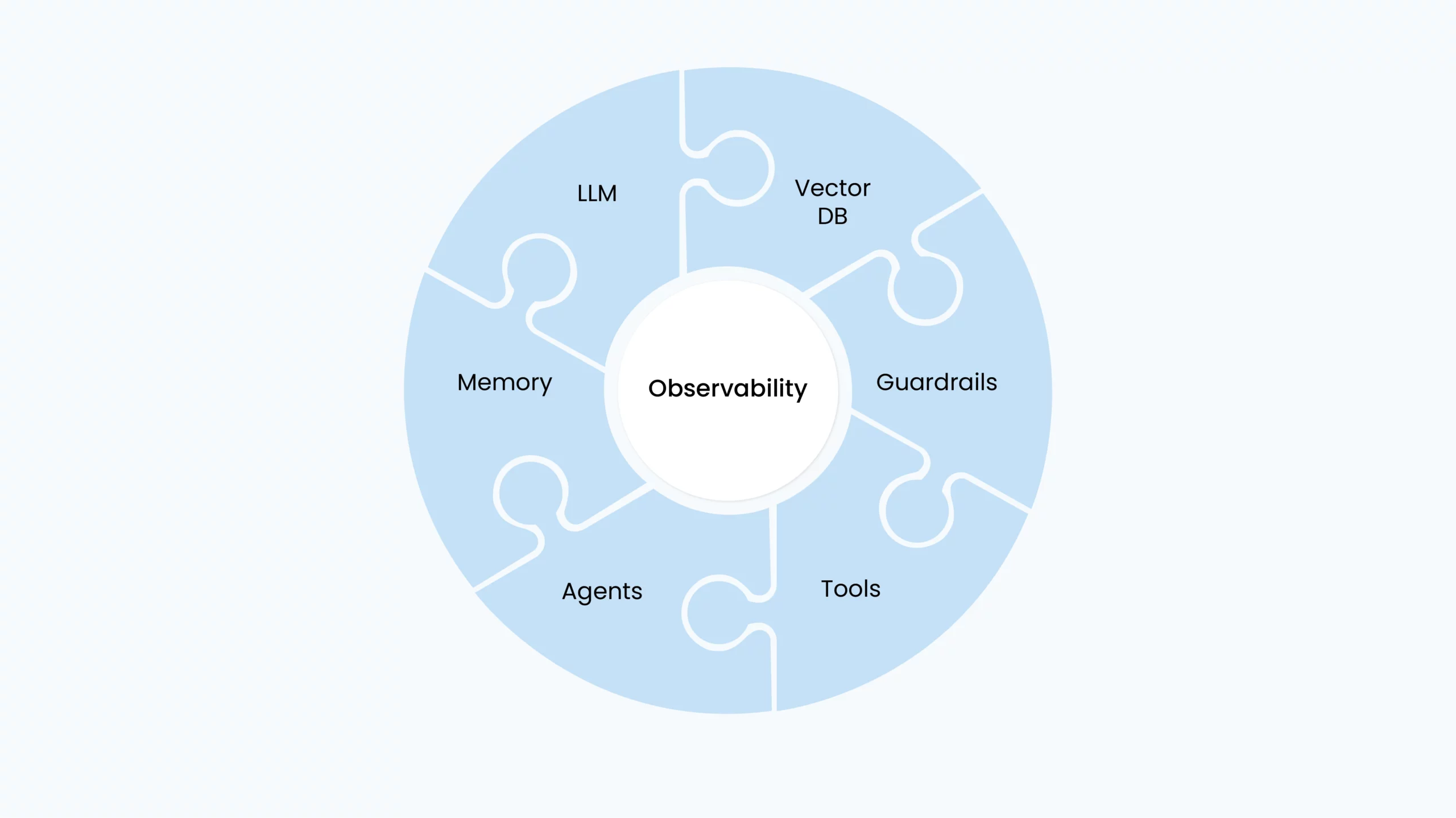

AI observability means achieving end-to-end visibility into an AI system’s internal workings by analyzing external outputs—think logs, traces, metrics, and real-time events. While traditional monitoring flags an error count or latency spike, AI observability goes further by helping pinpoint why the system behaved that way.

This approach tracks the health of data pipelines, model inputs and outputs, the underlying infrastructure, and the code that glues it all together.

For instance, when a GenAI agent operates in production—especially in 2025, where adoption of autonomous, goal-driven agents is rising—it encounters diverse user inputs (e.g., prompts, retrievals from vector databases) and potentially generates continuously evolving responses.

In smaller pilot projects, simply watching CPU usage or collecting error logs might suffice; however, organizations need more granular insights in large-scale environments serving millions of users. For instance, a slight but persistent latency issue can degrade an application’s performance without triggering an obvious “error.”

Today, we need AI observability tools to monitor these subtle changes by contrasting current prompts, vector retrievals, and generative outputs with established usage patterns, thereby detecting issues before they escalate.

AI observability evolves monitoring from simple detection to deep diagnostic capability. It turns black-box systems into transparent ones, enabling teams to respond to anomalies quickly, retain user trust, and optimize performance. As GenAI applications scale, this comprehensive insight is indispensable to maintaining reliability and meeting the heightened expectations of modern AI-driven services.

Observability builds stakeholder trust and meets compliance requirements in regulated industries such as finance and healthcare. Agent explainability and traceability become essential when algorithms significantly impact customers’ lives or health.

Challenges in Scaling AI Observability

Although AI observability is essential, scaling it across large GenAI systems is far from straightforward.

Data Quality and Volume in RAG-Based Systems

Large-scale AI produces massive volumes of data—from high-frequency user prompts and retrievals in vector databases to logs from dozens of microservices orchestrating generative requests. Storing and analyzing billions of events in real time can overwhelm traditional monitoring approaches, leading to data overload.

Moreover, ensuring relevance and consistency in the data fed to an AI model can be difficult. A small corruption in embeddings or outdated references in a vector database can cascade into hallucinations or off-topic responses. These issues are harder to detect at scale because the signals get buried in the noise.

Maintaining consistent schemas, validating real-time data feeds, and verifying retrieved documents are crucial for preventing misaligned outputs. Without robust observability, issues in prompt content or stale embeddings might go unnoticed, effectively “poisoning” production models and harming user experiences.

Infrastructure and Performance Issues

The infrastructure hosting AI workloads—GPUs, CPUs, storage, and network—can become a bottleneck. A minor GPU memory leak might go unnoticed on a small scale but cause huge slowdowns when large GenAI workloads make thousands of requests.

Monitoring infrastructure at this level of detail requires a robust telemetry strategy that collects metrics, traces, and logs from multiple layers. Yet, collecting high-frequency data on token usage or vector DB queries can be costly in storage and computing.

Organizations often face a trade-off: gathering enough observability data to troubleshoot effectively (e.g., spotting surges in prompt latency) without incurring prohibitive costs or performance overhead.

Prompt-Level Observability and Security

GenAI systems are highly vulnerable to malicious or accidental misuse of prompts. A cleverly crafted prompt can inject unwanted instructions or elicit sensitive information, while an improperly formatted prompt might yield off-topic or biased outputs.

Observing real-time prompts, user behaviors, and model responses is essential for detecting anomalies such as prompt injection attempts or brand-damaging content generation. Granular telemetry at this level lets teams quickly pinpoint the source of suspicious or non-compliant outputs and prevents small issues from scaling into major security or PR incidents.

These factors highlight the complexity of maintaining situational awareness for large GenAI agents. With massive data flows, dynamic model behavior, and intricate infrastructure dependencies, it’s easy to miss early warning signs. As systems scale, these blind spots exacerbate the risk of user-impacting incidents and hamper the organization’s ability to optimize costs and performance.

3 Strategies for Effective AI Observability

Overcoming the hurdles of large-scale AI observability calls for technological solutions and a cultural shift in how GenAI agents are built and maintained. Below are key strategies:

- End-to-End Pipeline Observability for GenAI

Ensuring reliable GenAI outputs starts with monitoring data flow—from prompt ingestion to final inference responses. At each stage, teams must validate and log essential details:

- Prompt Validation & Context Tracking: Observe for malformed or potentially malicious prompts, unusual token usage, or schema anomalies in any contextual data added to the prompt. Early detection of irregularities prevents prompt injection and preserves output quality.

- Content Integrity Checks: If your GenAI application retrieves documents or knowledge bases for context, validate those sources for unexpected changes (e.g., unauthorized updates, broken links). This ensures the model’s responses are always built on trusted, correct information.

Traceable Data Lineage: Record which project, prompt set, or external resource contributed to a given output. By logging this lineage, you can quickly pinpoint the source of errors—whether it’s a faulty piece of reference data or an outdated prompt template.

- Utilizing AI-Driven Observability Platforms

As complexity grows, manually defining thresholds and combing through logs becomes infeasible. Modern AI-driven observability tools use machine learning to identify issues and correlate events across the stack.

For instance, Coralogix’s AI Center provides real-time visibility into suspicious prompt injections, cost anomalies, and latency spikes. By leveraging span-level tracing and live alerts, teams quickly identify the root cause of performance or security issues across the entire GenAI workflow.

The AI Center unifies crucial observability data (logs, metrics, and traces) and applies proactive analytics to empower faster, more accurate troubleshooting.

Moreover, predictive analytics can forecast potential failures by analyzing historical trends. This reduces false positives and helps teams act before performance degrades.

- Implementing Best Practices

- Monitor Key Model Metrics: Track accuracy, latency, throughput, bias, and other KPIs in real time.

- Monitor Prompt and Usage Anomalies: Monitor unusual shifts in user prompts, token usage, or content quality. Rapid alerts on suspicious prompt injection attempts or sudden surges in usage patterns help teams intervene before performance or security is compromised.

- Track Infrastructure Health: Integrate system metrics (CPU usage, GPU load, memory, etc.) with model metrics, enabling engineers to correlate model performance issues with resource constraints.

- Comprehensive Logging & Tracing: Log every prediction with context (model version, feature values, etc.). Where feasible, trace the entire path from data ingestion to final output for robust root cause analysis.

- Observability by Design: Embed instrumentation directly into AI code and CI/CD pipelines, ensuring every new model or service is automatically included in dashboards and alerts.

Adopting these strategies builds a foundation for scaling observability. By handling data pipelines holistically, leveraging AI-powered analytics, and applying best practices, teams can track the myriad signals emitted by AI systems without getting overwhelmed.

This robust setup is the key to operating AI solutions reliably in production, enabling faster debugging, higher accuracy, and improved user satisfaction.

Future Directions and Innovations

AI observability is evolving alongside the rapid growth of machine learning. Several promising directions are poised to make observability even more intelligent, proactive, and holistic:

Generative AI and Predictive Observability

Large language models (LLMs) and other generative AI techniques are set to revolutionize how observability tools gather and analyze data. Already, solutions are emerging that use advanced algorithms to cluster error logs, summarize anomalies, and even answer natural-language queries about system behavior.

For instance, an engineer could ask, “Why did Model A’s accuracy drop last Tuesday?” and receive a synthesized explanation that ties together relevant logs, metrics, and traces.

Predictive capabilities will likewise improve: advanced ML embedded into observability platforms can forecast potential outages or performance issues hours before they become critical, allowing proactive mitigation.

Retrieval-Augmented Generation Troubleshooting

Another trend is retrieval augmentation, where observability systems reference knowledge bases and past incident data to guide current resolutions. The platform can suggest potential fixes or highlight relevant runbooks by matching today’s anomaly patterns with archived records of similar incidents.

This approach significantly accelerates root cause analysis. Instead of starting from scratch, engineers receive immediate context on what worked—or didn’t—when a similar issue arose. This can be especially valuable for large enterprises with extensive incident histories and varied team expertise.

Holistic and Responsible Observability

As AI increasingly shapes critical decisions, observability will expand to track technical performance and ethical and compliance metrics. This includes monitoring bias and fairness, privacy boundaries, and even the environmental impact of large-scale compute usage.

Future observability dashboards could unify resource utilization with model outcomes and fairness indicators, giving organizations a complete picture of how their AI systems affect users and the planet.

Open standards like OpenTelemetry will further encourage interoperability, enabling data to flow between different observability tools so teams can leverage best-of-breed solutions without losing a centralized view.

AI observability is on a path to becoming smarter, more predictive, and more ethically informed. By harnessing advanced analytics, retrieval-based insights, and broader coverage of responsible AI concerns, next-generation observability solutions will help organizations maintain robust, efficient, and trustworthy ML services at scale.

Key Takeaways

Scaling AI observability goes beyond simply upgrading your dashboards or collecting more logs. It demands an end-to-end approach integrating data checks, model performance monitoring, anomaly detection, and infrastructure insights into a coherent fabric. Organizations that master this discipline can deploy AI confidently.

The journey typically starts by embedding observability into data pipelines, model training, and production serving. Automated checks ensure every stage is monitored for anomalies, while modern AI-driven platforms reduce the toil of analyzing mountains of telemetry.

Best practices—such as tracking key model metrics, comprehensive logging, and real-time alerting—keep teams agile enough to respond to issues in minutes, not days.

Ultimately, observability is the backbone of effective AI operations. It transforms the black box of AI into something measurable, explainable, and improvable. Investing in a robust observability strategy is non-negotiable for any enterprise scaling up its AI initiatives.

FAQ

What is AI observability, and how does it differ from standard monitoring?

How do organizations handle massive data volumes and ensure data quality for large-scale GenAI workloads?

hich best practices help maintain high infrastructure performance in production AI?

What emerging trends and tools are shaping the future of AI observability?

References

- AI in observability: Advancing system monitoring and performance

New Relic Blog. - State of Observability: Surveys Show 84% of Companies Struggle

Datamation. - AI-Driven Observability in Large Systems – AI Resources

Modular.com. - AI Observability: Key Components, Challenges & Best Practices

Coralogix. - Chapter 12 – Observability | AI in Production Guide

Azure GitHub. - ML Observability Overview | Machine Learning Observability Resources

Arize. - ML Monitoring vs. ML Observability: Mastering Model Deployment

Encord. - System@Scale: AI Observability | At Scale Conferences

- Observability Predictions for 2025: Trends Shaping the Future of Observability

- Metadata-based retrieval for resolution recommendation in AIOps for ESEC/FSE 2022 IBM Research.