What is a Reasoning Engine?

TL;DR

- Reasoning engines are systems that process data, apply logic, and draw conclusions.

- They come in various types: rule-based, semantic, probabilistic, and machine learning-based.

- While offering benefits like enhanced decision-making and efficiency, they face challenges in implementation.

- Their continued evolution promises significant advancements across industries.

What is a Reasoning Engine?

Imagine a digital brain that can sift through vast amounts of information, apply logical rules, and draw conclusions faster than any human could. This is the essence of a reasoning engine, a powerful tool for processing and interpreting data.

The concept of reasoning engines, integral to expert systems, emerged as part of the broader development of artificial intelligence and automated reasoning in the mid-20th century.

One of the earliest and most influential works in automated reasoning is the “Logic Theorist” program developed by Allen Newell, Cliff Shaw, and Herbert A. Simon in 1956. This program was designed to mimic human reasoning in proving mathematical theorems and is often considered a foundational work in artificial intelligence and automated reasoning.

Expert systems, which heavily rely on reasoning engines, became prominent in the 1970s. These systems use an inference engine to apply rules from a knowledge base to solve problems and make decisions, mimicking the problem-solving abilities of human experts.

With recent advancements in AI research, reasoning engines are now being combined with machine learning models, including large language models (LLMs), creating hybrid systems that combine the strengths of both approaches to tackle even more complex problems.

Let’s uncover how reasoning engines work, the different types of reasoning engines, and how they have evolved in today’s AI era.

How a Reasoning Engine Works

At its core, a reasoning engine applies logical inference to input data based on a predefined set of rules. This process involves evaluating conditions and deriving conclusions or actions from them.

An analogy makes this concrete:

Real-World Analogy: Traffic Control System

Consider a traffic control system as an analogy for understanding a reasoning engine. In this system, the traffic lights change according to timing rules (rules) that respond to sensor data (input data) about vehicle presence and traffic flow.

The control system (inference engine) processes this data, applies the rules, and decides when to change the lights to optimize traffic flow. Similarly, a reasoning engine processes input data, applies logical rules, and derives conclusions or actions.

Core Components of a Reasoning Engine

The core components of a reasoning engine include:

- Knowledge Base: A structured repository of facts and rules about the domain of interest. For example, in a medical diagnosis system, this would include symptoms, diseases, and their relationships.

- Inference Engine: The “brain” of the system, responsible for applying logical rules to the knowledge base. It uses forward chaining (data-driven reasoning) or backward chaining (goal-driven reasoning).

- Working Memory: A temporary storage for the current problem state, including the facts being considered and intermediate conclusions.

- User Interface: Allows users to input data, query the system, and receive results. In modern systems, this might be a graphical interface or an API for integration with other software.

Data Flow in a Reasoning Engine

The data flow in a reasoning engine involves several key stages:

- Input Processing: The reasoning engine receives input data from various sources. This data is then stored in the working memory for further processing.

- Rule Matching: The inference engine compares the input data against the rules in the knowledge base to identify which rules are applicable.

- Conflict Resolution: When multiple rules are applicable, the inference engine must resolve conflicts to determine which rule to apply. This can involve prioritizing rules based on specificity, recency, or other criteria.

- Action Execution: The corresponding action is executed once a rule is selected. This could involve updating the working memory, generating new data, or triggering an external process.
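Together, these four stages form what is often called the match-resolve-act cycle. Below is a minimal Python sketch of that cycle. It assumes facts are plain strings held in a set that serves as working memory, and it resolves conflicts with a numeric priority, which is only one of several possible strategies. The rule names and traffic facts are hypothetical and were chosen to echo the traffic-control analogy.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, List, Set

@dataclass
class Rule:
    name: str
    conditions: FrozenSet[str]          # facts that must all be in working memory
    action: Callable[[Set[str]], None]  # side effect on working memory
    priority: int = 0                   # used for conflict resolution

def run(rules: List[Rule], memory: Set[str], max_cycles: int = 100) -> List[str]:
    fired: List[str] = []
    for _ in range(max_cycles):
        # Rule matching: every rule whose conditions hold and that has not fired yet
        agenda = [r for r in rules if r.conditions <= memory and r.name not in fired]
        if not agenda:
            break
        # Conflict resolution: highest priority wins; ties go to the earlier rule
        chosen = max(agenda, key=lambda r: r.priority)
        # Action execution: may add facts that enable other rules in the next cycle
        chosen.action(memory)
        fired.append(chosen.name)
    return fired

rules = [
    Rule("extend_green", frozenset({"long_queue_north"}), lambda m: m.add("extend_green_north"), 1),
    Rule("emergency_override", frozenset({"emergency_vehicle"}), lambda m: m.add("all_red"), 10),
    Rule("log_override", frozenset({"all_red"}), lambda m: m.add("override_logged"), 0),
]
memory = {"long_queue_north", "emergency_vehicle"}  # input processing: sensor facts
fired = run(rules, memory)
print(fired)  # ['emergency_override', 'extend_green', 'log_override']
```

Allowing each rule to fire at most once is a deliberately simple way to prevent endless loops. Real engines usually combine several conflict-resolution criteria, such as the specificity and recency mentioned above.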

Types of Reasoning Engines

Reasoning engines are integral to AI systems, allowing them to derive logical conclusions from data.

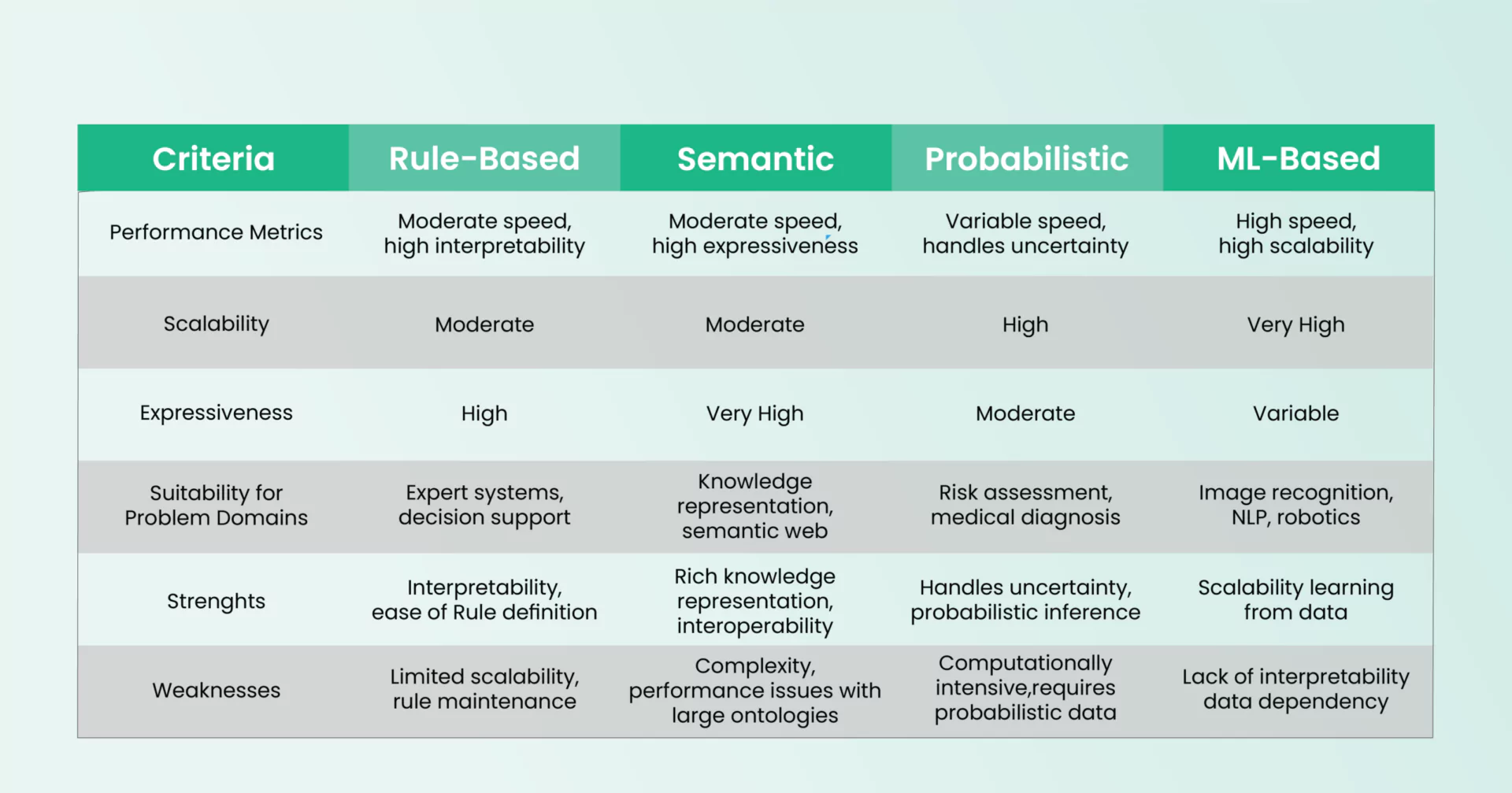

Reasoning engines can be categorized based on two primary factors:

- Logic Used: Rule-based, semantic, probabilistic, machine learning-based

- Inference Method: Forward chaining, backward chaining, probabilistic inference

Understanding the different types of reasoning engines helps you choose the right engine for a given task, balancing performance, accuracy, and scalability in applications ranging from healthcare to semantic web technologies.

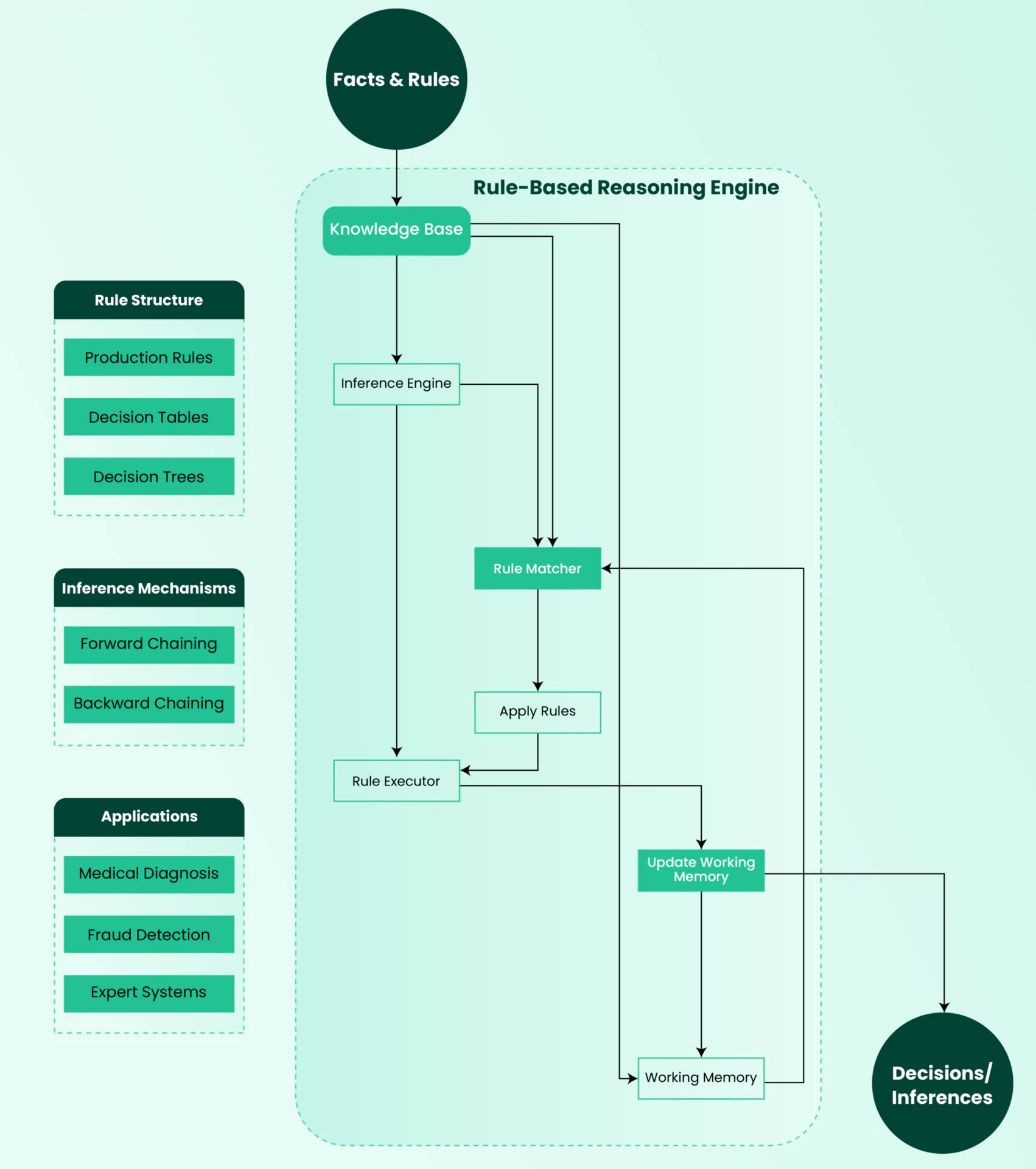

Rule-Based Reasoning Engines

Rule-based reasoning engines use predefined rules to make decisions or infer new information. These systems are built on the foundation of formal logic and are well-suited for domains where expert knowledge can be explicitly encoded.

Key Components:

- Knowledge Base: A collection of facts and rules

- Inference Engine: The mechanism that applies rules to facts

- Working Memory: Temporary storage for facts and intermediate conclusions

Rule Structure and Representation

Rules in these systems typically follow an “if-then” structure:

IF <condition(s)> THEN <action(s) or conclusion(s)>

Example:

```
IF   (patient_temperature > 38.0°C) AND
     (patient_has_cough = true)
THEN suggest_diagnosis("possible flu")
     recommend_action("rest and hydration")
```
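The same rule can be written as an ordinary Python function. This is a minimal sketch that assumes the patient's data arrives as a dictionary. The keys `temperature_c` and `has_cough` and the returned dictionary are hypothetical names and do not come from any particular rule engine.

```python
from typing import Optional

def flu_rule(patient: dict) -> Optional[dict]:
    # IF temperature > 38.0 °C AND a cough is present ...
    if patient.get("temperature_c", 0.0) > 38.0 and patient.get("has_cough", False):
        # ... THEN suggest a diagnosis and recommend an action
        return {"diagnosis": "possible flu", "action": "rest and hydration"}
    return None  # conditions not met: the rule does not fire

print(flu_rule({"temperature_c": 38.6, "has_cough": True}))
print(flu_rule({"temperature_c": 37.2, "has_cough": True}))  # None
```

A hard-coded function like this works for a single rule. An inference engine, however, stores conditions and conclusions as data, so it can match, chain, and prioritize many rules generically.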

Rules can be represented in various formats, including:

- Production rules

- Decision tables

- Decision trees

Inference Mechanisms

Rule-based systems primarily use two types of inference mechanisms:

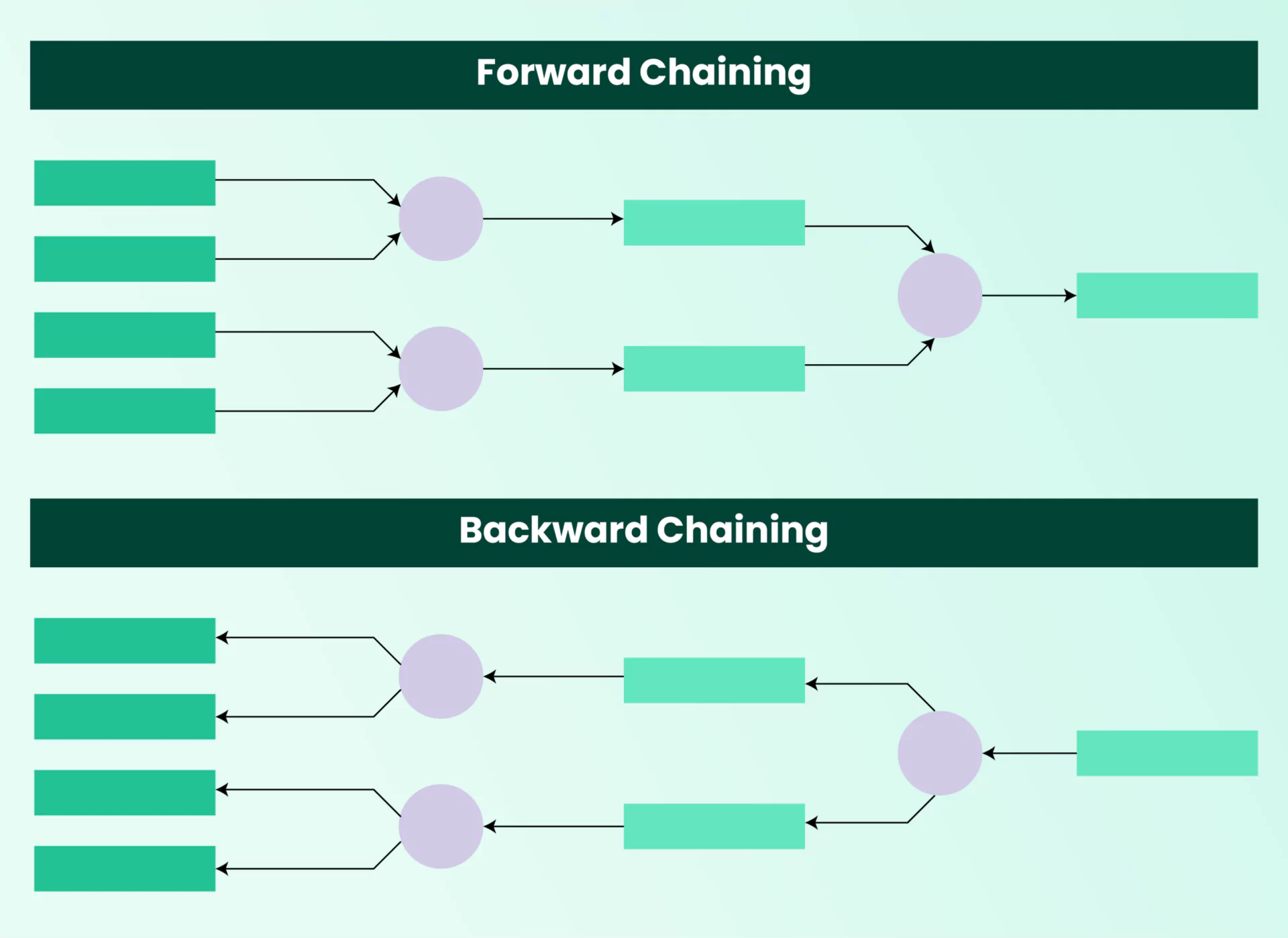

Forward Chaining (Data-Driven Reasoning)

- Starts with known facts

- Applies rules to infer new facts

- Continues until a goal is reached or no more rules can be applied

Process:

- Match facts against rule conditions

- Select a rule to execute (conflict resolution)

- Execute the rule, potentially adding new facts

- Repeat until a termination condition is met
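The forward-chaining process takes only a few lines of Python. The sketch below assumes each rule is a `(premises, conclusion)` pair over string facts, and it resolves conflicts simply by taking rules in listing order. The medical facts are illustrative.

```python
from typing import FrozenSet, List, Optional, Set, Tuple

# A rule is a (premises, conclusion) pair over string facts (an assumption for this sketch)
Rule = Tuple[FrozenSet[str], str]

def forward_chain(facts: Set[str], rules: List[Rule], goal: Optional[str] = None) -> Set[str]:
    known = set(facts)  # working memory, copied so the caller's set is untouched
    changed = True
    while changed:  # repeat until a full pass derives nothing new
        changed = False
        for premises, conclusion in rules:  # conflict resolution: listing order
            if premises <= known and conclusion not in known:  # match
                known.add(conclusion)  # execute: assert the new fact
                changed = True
                if goal is not None and goal in known:
                    return known  # termination: goal reached
    return known  # termination: no rule can add anything new

rules = [
    (frozenset({"fever", "cough"}), "possible_flu"),
    (frozenset({"possible_flu"}), "recommend_rest"),
    (frozenset({"rash"}), "possible_measles"),
]
print(sorted(forward_chain({"fever", "cough"}, rules)))
# ['cough', 'fever', 'possible_flu', 'recommend_rest']
```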

Backward Chaining (Goal-Driven Reasoning)

- Starts with a goal (hypothesis)

- Works backward to find facts that support the goal

- Efficient for proving or disproving specific hypotheses

Process:

- Start with the goal

- Find rules that can produce this goal

- Check if the conditions for these rules are met

- If not, set these conditions as new subgoals

- Repeat until all required facts are found or the goal is disproven
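Backward chaining maps naturally onto recursion, because each unproven premise becomes a subgoal. The Python sketch below stores rules as a dictionary that maps each conclusion to the alternative premise sets that can produce it, which is a hypothetical format. In this sketch, "disproven" means "not provable from the known facts". This is the closed-world assumption that many rule engines adopt.

```python
from typing import Dict, FrozenSet, List, Set

# Maps each conclusion to the alternative premise sets that can produce it (assumed format)
Rules = Dict[str, List[FrozenSet[str]]]

def backward_chain(goal: str, facts: Set[str], rules: Rules,
                   visiting: FrozenSet[str] = frozenset()) -> bool:
    if goal in facts:
        return True  # the goal is already a known fact
    if goal in visiting:
        return False  # guard against cyclic rules
    for premises in rules.get(goal, []):  # rules that can produce this goal
        # Each premise becomes a subgoal, proved recursively
        if all(backward_chain(p, facts, rules, visiting | {goal}) for p in premises):
            return True
    return False  # no rule succeeds: the goal cannot be proved from these facts

rules = {
    "recommend_rest": [frozenset({"possible_flu"})],
    "possible_flu": [frozenset({"fever", "cough"}), frozenset({"fever", "body_aches"})],
}
print(backward_chain("recommend_rest", {"fever", "body_aches"}, rules))  # True
print(backward_chain("recommend_rest", {"cough"}, rules))                # False
```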

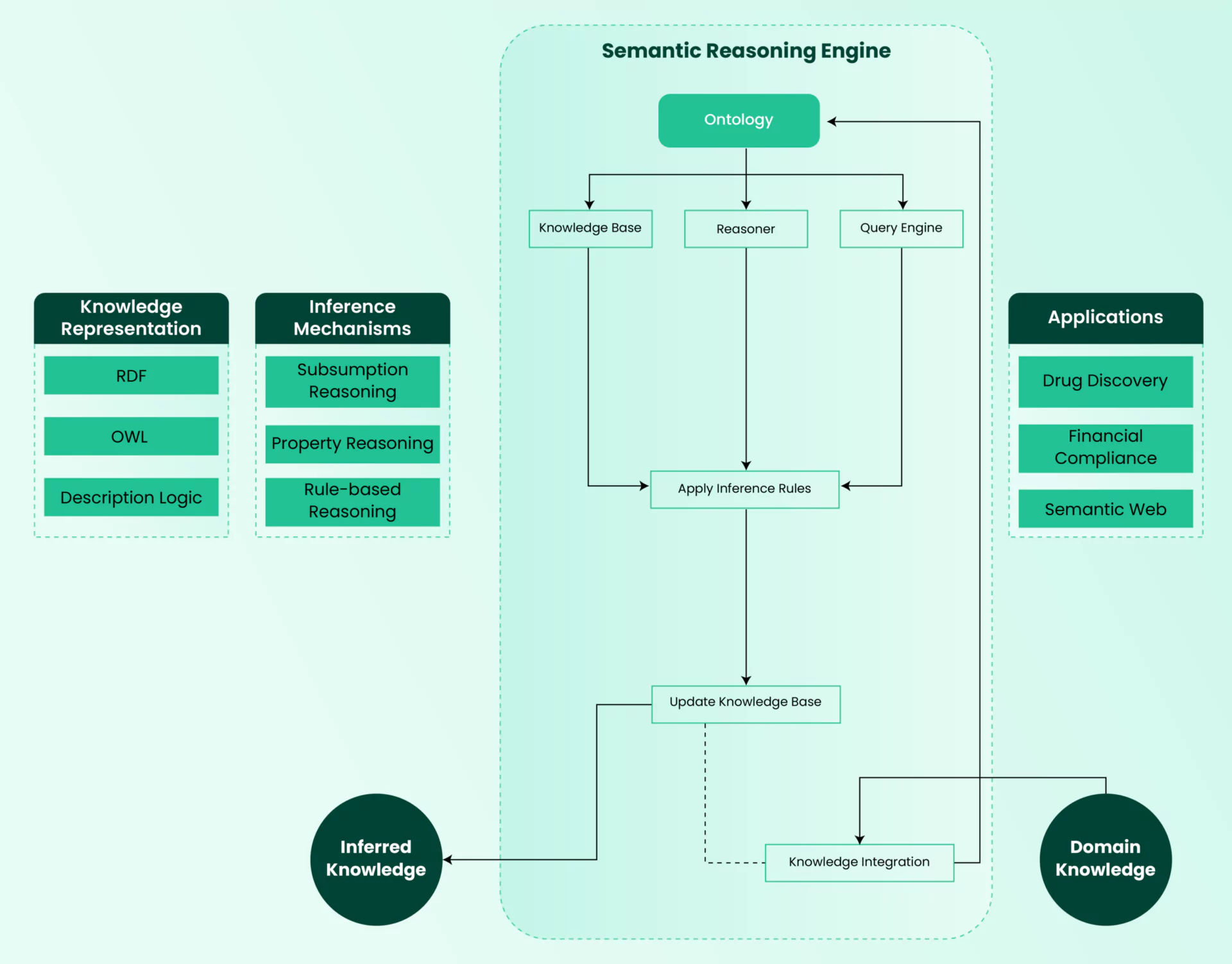

Semantic Reasoning Engines

Semantic reasoning engines use formal representations of concepts, relationships, and axioms to infer new knowledge.

These systems are built on the foundation of knowledge representation and reasoning (KRR) and are well-suited for domains requiring complex knowledge integration and inference.

Key Components:

- Ontology: A formal representation of domain knowledge, including concepts, relationships, and axioms

- Knowledge Base: A collection of facts (assertions) based on the ontology

- Reasoner: The mechanism that applies logical inference rules to the knowledge base

- Query Engine: Facilitates information retrieval and complex queries over the knowledge base

Knowledge Representation

Semantic knowledge is typically represented using standards and formalisms such as:

- Resource Description Framework (RDF)

- Web Ontology Language (OWL)

- Description Logic (DL)

Example (OWL, RDF/XML syntax):

```xml
<owl:Class rdf:about="#Mammal"/>

<owl:Class rdf:about="#Dog">
  <rdfs:subClassOf rdf:resource="#Mammal"/>
</owl:Class>

<owl:NamedIndividual rdf:about="#Fido">
  <rdf:type rdf:resource="#Dog"/>
</owl:NamedIndividual>
```

This example defines a class hierarchy (Dog is a subclass of Mammal) and an individual (Fido is an instance of Dog).
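The following toy Python sketch shows what a reasoner derives from axioms like these, using `(subject, predicate, object)` triples. It handles only two entailments, subclass transitivity and type propagation. To show chaining, it adds a hypothetical `Mammal subClassOf Animal` axiom. The sketch illustrates *what* subsumption reasoning concludes, not *how* production OWL reasoners compute it.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)

def classify(kb: Set[Triple]) -> Set[Triple]:
    """Toy subsumption: close subClassOf under transitivity and propagate types upward."""
    inferred = set(kb)
    while True:
        new: Set[Triple] = set()
        for a, p, b in inferred:
            for c, q, d in inferred:
                if q == "subClassOf" and b == c:
                    if p == "subClassOf":
                        new.add((a, "subClassOf", d))  # A ⊑ B and B ⊑ D entail A ⊑ D
                    elif p == "type":
                        new.add((a, "type", d))  # x : B and B ⊑ D entail x : D
        if new <= inferred:
            return inferred  # fixpoint reached: nothing new can be derived
        inferred |= new

kb = {
    ("Dog", "subClassOf", "Mammal"),
    ("Mammal", "subClassOf", "Animal"),  # hypothetical extra axiom for illustration
    ("Fido", "type", "Dog"),
}
result = classify(kb)
print(sorted(o for s, p, o in result if s == "Fido" and p == "type"))  # ['Animal', 'Dog', 'Mammal']
```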

Inference Mechanisms

Semantic reasoning engines employ various inference mechanisms:

Subsumption Reasoning:

- Determines class hierarchies and instance memberships

- It is crucial for ontology classification and consistency checking

Property Reasoning:

- Infers relationships between individuals based on property characteristics (e.g., transitivity, symmetry)

Rule-based Reasoning:

- Applies user-defined rules, often written in a rule language such as SWRL (Semantic Web Rule Language), to infer new facts

Process:

- Load ontology and instance data

- Apply reasoning rules

- Infer new knowledge

- Update knowledge base

- Repeat as necessary or triggered by new data
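The load, apply, infer, and update loop above amounts to computing a fixpoint. The following Python sketch assumes each property has been declared transitive or symmetric, as `owl:TransitiveProperty` and `owl:SymmetricProperty` declarations would do in OWL. The `partOf` and `adjacentTo` properties are hypothetical.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]  # (subject, property, object)

def saturate(kb: Set[Triple], transitive: Set[str], symmetric: Set[str]) -> Set[Triple]:
    kb = set(kb)  # load the data (copied so the caller's set is untouched)
    while True:
        new: Set[Triple] = set()
        for s, p, o in kb:  # apply reasoning rules
            if p in symmetric:
                new.add((o, p, s))  # p(s, o) entails p(o, s)
            if p in transitive:
                for s2, p2, o2 in kb:
                    if p2 == p and s2 == o:
                        new.add((s, p, o2))  # p(s, o) and p(o, o2) entail p(s, o2)
        new -= kb  # infer: keep only genuinely new knowledge
        if not new:
            return kb  # nothing more to infer until new data arrives
        kb |= new  # update the knowledge base and repeat

kb = {
    ("piston", "partOf", "engine"),
    ("engine", "partOf", "car"),
    ("kitchen", "adjacentTo", "hall"),
}
closed = saturate(kb, transitive={"partOf"}, symmetric={"adjacentTo"})
print(sorted(closed - kb))  # [('hall', 'adjacentTo', 'kitchen'), ('piston', 'partOf', 'car')]
```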

Popular reasoners such as HermiT (hypertableau-based) and Pellet (tableau-based) implement optimized versions of these algorithms.

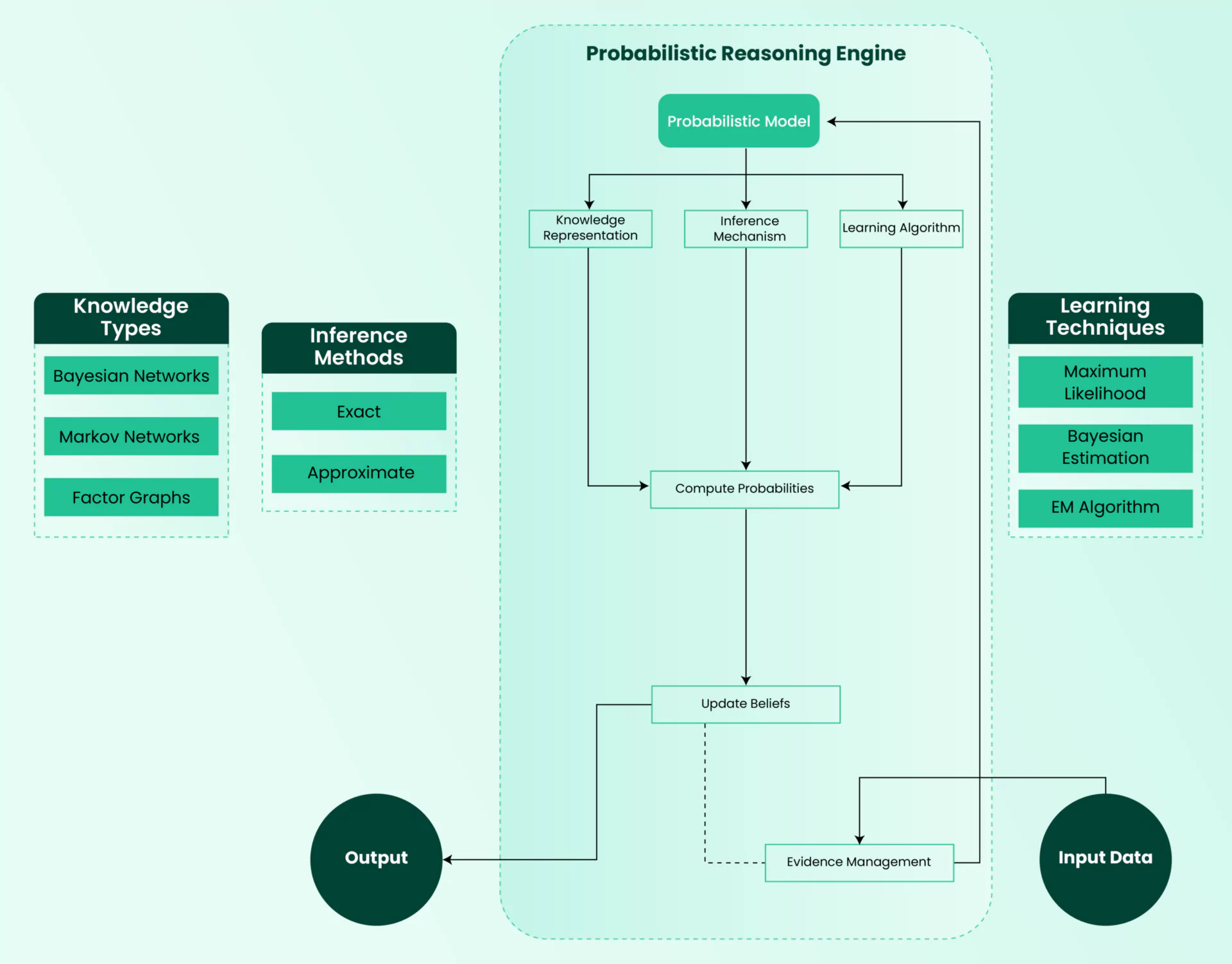

Probabilistic Reasoning Engines

Probabilistic reasoning engines use probability theory and statistical models to reason about uncertain knowledge and make inferences.

These systems are well-suited for domains involving uncertainty, incomplete information, or where reasoning needs to account for the likelihood of different outcomes.

Key Components:

- Evidence Management: Handles the incorporation of observed data into the model.

- Probabilistic Model: A representation of the domain using random variables and their probabilistic relationships.

- Inference Algorithm: The mechanism that computes probabilities of interest given evidence.

- Learning Algorithm: Methods for estimating model parameters from data.

Knowledge Representation

Probabilistic knowledge is typically represented using graphical models such as:

- Bayesian Networks (Directed Acyclic Graphs)

- Markov Networks (Undirected Graphs)

- Factor Graphs

Example (Bayesian Network for medical diagnosis):

```
         Smoking
         /     \
    Cancer   Bronchitis
         \     /
          Cough
```

This example represents causal relationships between smoking, cancer, bronchitis, and cough, where each node is a random variable with associated conditional probability tables.
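Inference on this network can be sketched by brute-force enumeration. The sketch assumes the edges Smoking → Cancer, Smoking → Bronchitis, Cancer → Cough, and Bronchitis → Cough. The conditional probability values are made up for illustration. The code computes P(Smoking | Cough = true) by summing the joint distribution over the hidden variables and then normalizing.

```python
from itertools import product

# Hypothetical CPTs, each giving P(variable = True | parents)
P_S = 0.3
P_C_given_S = {True: 0.05, False: 0.01}
P_B_given_S = {True: 0.40, False: 0.10}
P_K_given_CB = {(True, True): 0.95, (True, False): 0.80,
                (False, True): 0.70, (False, False): 0.10}

def bern(p_true: float, value: bool) -> float:
    return p_true if value else 1.0 - p_true

def joint(s: bool, c: bool, b: bool, k: bool) -> float:
    # Factorization along the DAG: P(S) P(C|S) P(B|S) P(K|C,B)
    return (bern(P_S, s) * bern(P_C_given_S[s], c)
            * bern(P_B_given_S[s], b) * bern(P_K_given_CB[(c, b)], k))

def p_smoking_given_cough() -> float:
    # Sum out the hidden variables C and B with K fixed to True, then normalize over S
    unnorm = {s: sum(joint(s, c, b, True) for c, b in product([True, False], repeat=2))
              for s in (True, False)}
    return unnorm[True] / (unnorm[True] + unnorm[False])

print(round(p_smoking_given_cough(), 3))  # 0.485
```

Observing a cough raises the probability of smoking from the prior 0.3 to about 0.485. Enumeration grows exponentially with the number of hidden variables, which is why the algorithms below exist.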

Inference Mechanisms

Probabilistic reasoning engines employ various inference mechanisms:

Exact Inference:

- Variable Elimination

- Junction Tree Algorithm

- Conditioning

Approximate Inference:

- Monte Carlo Methods (e.g., Gibbs Sampling)

- Variational Inference

- Loopy Belief Propagation

Process:

- Define the probabilistic model

- Incorporate evidence (observed data)

- Select an inference algorithm based on model structure and query

- Compute probabilities of interest

- Update beliefs based on new evidence

Reasoning Algorithms

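One of the fundamental algorithms for exact probabilistic inference is the Variable Elimination algorithm:

- Enter the evidence by restricting the factors to the observed values

- Choose an elimination ordering for the variables that are neither queried nor observed

- For each variable in that order:
  - Multiply all factors containing the variable
  - Sum out the variable from the resulting factor

- Multiply the remaining factors and normalize to obtain the posterior distribution of the query variable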
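The following compact Python sketch implements these steps. It assumes binary variables and stores each factor as a dictionary keyed by tuples of values. The example reuses the smoking network's structure (Smoking → Cancer, Smoking → Bronchitis, Cancer and Bronchitis → Cough) with made-up probabilities. The evidence Cough = true enters as an indicator factor, which is equivalent to restricting the factors to the observed value.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

Factor = Tuple[Tuple[str, ...], Dict[Tuple[bool, ...], float]]  # (variables, table)

def cpt(var: str, parents: Sequence[str], p_true: Dict[Tuple[bool, ...], float]) -> Factor:
    # Build P(var | parents) from P(var = True | parent values)
    table: Dict[Tuple[bool, ...], float] = {}
    for pa in product([True, False], repeat=len(parents)):
        table[pa + (True,)] = p_true[pa]
        table[pa + (False,)] = 1.0 - p_true[pa]
    return tuple(parents) + (var,), table

def multiply(f: Factor, g: Factor) -> Factor:
    (fv, ft), (gv, gt) = f, g
    vars_ = fv + tuple(v for v in gv if v not in fv)
    table: Dict[Tuple[bool, ...], float] = {}
    for values in product([True, False], repeat=len(vars_)):
        a = dict(zip(vars_, values))
        table[values] = ft[tuple(a[v] for v in fv)] * gt[tuple(a[v] for v in gv)]
    return vars_, table

def sum_out(f: Factor, var: str) -> Factor:
    fv, ft = f
    i = fv.index(var)
    table: Dict[Tuple[bool, ...], float] = {}
    for values, p in ft.items():
        key = values[:i] + values[i + 1:]
        table[key] = table.get(key, 0.0) + p
    return fv[:i] + fv[i + 1:], table

def variable_elimination(factors: List[Factor], order: List[str]) -> Factor:
    for var in order:
        related = [f for f in factors if var in f[0]]
        if not related:
            continue
        factors = [f for f in factors if var not in f[0]]
        combined = related[0]
        for f in related[1:]:
            combined = multiply(combined, f)     # a. multiply factors containing var
        factors.append(sum_out(combined, var))  # b. sum var out
    result = factors[0]
    for f in factors[1:]:
        result = multiply(result, f)  # multiply the remaining factors
    total = sum(result[1].values())
    return result[0], {k: v / total for k, v in result[1].items()}  # normalize

factors = [
    cpt("S", [], {(): 0.3}),
    cpt("C", ["S"], {(True,): 0.05, (False,): 0.01}),
    cpt("B", ["S"], {(True,): 0.40, (False,): 0.10}),
    cpt("K", ["C", "B"], {(True, True): 0.95, (True, False): 0.80,
                          (False, True): 0.70, (False, False): 0.10}),
    (("K",), {(True,): 1.0, (False,): 0.0}),  # evidence: Cough observed
]
variables, posterior = variable_elimination(factors, order=["K", "C", "B"])
print(variables, round(posterior[(True,)], 3))  # ('S',) 0.485
```

The elimination order affects only cost, not the result. On larger networks, a poor order can create very large intermediate factors.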

More advanced algorithms like Expectation Propagation or Hamiltonian Monte Carlo are used for complex models or high-dimensional problems.

Learning from Data

Probabilistic reasoning engines often incorporate learning mechanisms to estimate model parameters from data:

- Maximum Likelihood Estimation

- Bayesian Parameter Estimation

- Expectation-Maximization Algorithm

- Structure Learning (for discovering model structure)

These learning methods enable the models to adapt to new data and improve their predictive accuracy.
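As an example of the first two methods, the sketch below estimates one conditional probability table, P(Bronchitis | Smoking), by counting over complete records. With `alpha = 0` it returns the maximum likelihood estimate. With `alpha = 1` it applies Laplace smoothing, which equals the posterior mean under a uniform Beta(1, 1) prior and is therefore a simple case of Bayesian parameter estimation. The records are made up for illustration.

```python
from collections import Counter
from typing import Dict, List

def estimate_cpt(records: List[Dict[str, bool]], child: str, parent: str,
                 alpha: float = 0.0) -> Dict[bool, float]:
    """Estimate P(child = True | parent) for each parent value from complete data."""
    counts = Counter((r[parent], r[child]) for r in records)
    table: Dict[bool, float] = {}
    for pv in (True, False):
        n_true = counts[(pv, True)]
        n_total = n_true + counts[(pv, False)]
        if n_total + 2 * alpha == 0:
            raise ValueError(f"no data for {parent}={pv}; use alpha > 0")
        # alpha = 0: maximum likelihood (relative frequency)
        # alpha = 1: Laplace smoothing, the posterior mean under a uniform Beta(1, 1) prior
        table[pv] = (n_true + alpha) / (n_total + 2 * alpha)
    return table

# Made-up records: 4 smokers (2 with bronchitis), 6 non-smokers (1 with bronchitis)
records = ([{"S": True, "B": True}] * 2 + [{"S": True, "B": False}] * 2
           + [{"S": False, "B": True}] * 1 + [{"S": False, "B": False}] * 5)
print(estimate_cpt(records, child="B", parent="S"))           # {True: 0.5, False: 0.16666666666666666}
print(estimate_cpt(records, child="B", parent="S", alpha=1))  # {True: 0.5, False: 0.25}
```

Expectation-Maximization extends the same counting idea to incomplete data. It replaces the hard counts with expected counts computed by inference.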

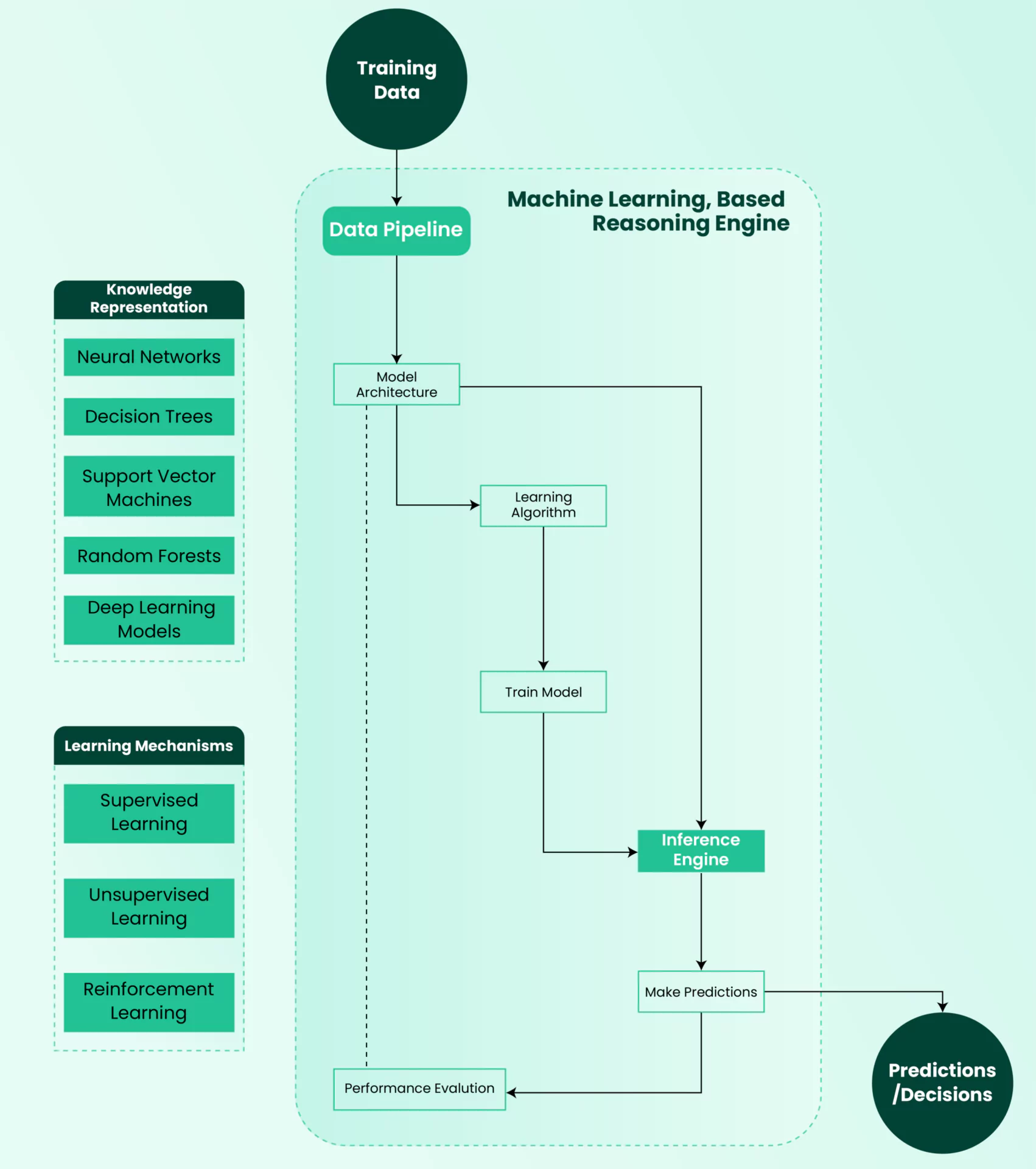

Machine Learning-Based Reasoning Engines

Machine Learning-Based Reasoning Engines use data-driven approaches to learn patterns, make predictions, and perform reasoning tasks. These systems are well equipped for domains with large amounts of data, complex patterns, or where explicit rule formulation is challenging.

Key Components:

- Data Pipeline: Used for data ingestion, preprocessing, and feature engineering.

- Model Architecture: Machine learning model (e.g., neural network, decision tree).

- Learning Algorithm: Methods for optimizing model parameters during training.

- Inference Engine: Mechanism for applying the trained model to new data.

- Performance Evaluation: Metrics and methods for assessing model accuracy and generalization.

Knowledge Representation

Machine learning models represent knowledge in various forms, depending on the type of model:

- Neural Networks: Weighted connections between neurons

- Decision Trees: Hierarchical structure of decision rules

- Support Vector Machines: Hyperplanes in high-dimensional space

- Random Forests: Ensemble of decision trees

- Deep Learning Models: Hierarchical feature representations

Example (Simple Neural Network):

```
 Input layer        Hidden layer         Output layer

   [x1] ---\         /--- [h1] ---\
   [x2] ----- (w) ------- [h2] ----- (w) ---- [y1]
   [x3] ---/         \--- [h3] ---/     \---- [y2]
```

This example represents a simple feedforward neural network with input features (x), hidden neurons (h), output predictions (y), and weighted connections (w).
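The forward computation of such a network can be written in plain Python. The weights below are arbitrary values chosen for illustration, and both layers are assumed to use sigmoid activations. A real model would learn its weights and would typically rely on a numerical library.

```python
import math
from typing import List

Matrix = List[List[float]]

def dense(x: List[float], W: Matrix, b: List[float]) -> List[float]:
    # Fully connected layer: z_j = sum_i W[j][i] * x[i] + b[j]
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]

def sigmoid(z: List[float]) -> List[float]:
    return [1.0 / (1.0 + math.exp(-v)) for v in z]

def forward(x: List[float], W1: Matrix, b1: List[float],
            W2: Matrix, b2: List[float]) -> List[float]:
    h = sigmoid(dense(x, W1, b1))     # hidden activations h1..h3
    return sigmoid(dense(h, W2, b2))  # outputs y1, y2

# Arbitrary illustrative weights: W1 is 3x3 (inputs -> hidden), W2 is 2x3 (hidden -> outputs)
W1 = [[0.2, -0.4, 0.1], [0.5, 0.3, -0.2], [-0.3, 0.8, 0.6]]
b1 = [0.0, 0.1, -0.1]
W2 = [[0.7, -0.5, 0.2], [-0.6, 0.4, 0.9]]
b2 = [0.0, 0.0]
y = forward([1.0, 0.5, -1.0], W1, b1, W2, b2)
print([round(v, 3) for v in y])
```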

Learning Mechanisms

Machine learning-based reasoning engines employ various learning approaches:

Supervised Learning:

- Uses labeled training data

- Examples: Classification, Regression

Unsupervised Learning:

- Discovers patterns in unlabeled data

- Examples: Clustering, Dimensionality Reduction

Reinforcement Learning:

- Learns through interaction with an environment

- Examples: Game playing, Robotics

Process:

- Prepare and preprocess data

- Choose the appropriate model architecture

- Train model using a learning algorithm

- Validate model on held-out data

- Fine-tune hyperparameters

- Deploy model for inference on new data

Reasoning Algorithms

A fundamental algorithm in machine learning-based reasoning is the backpropagation algorithm for training neural networks:

- Forward pass: Compute predictions given input

- Compute loss between predictions and true labels

- Backward pass: Compute gradients of the loss with respect to the model parameters

- Update parameters using an optimization algorithm (e.g., Stochastic Gradient Descent)

- Repeat these steps until convergence or for a fixed number of epochs
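These steps are easiest to see in the smallest possible case: a single sigmoid neuron trained with binary cross-entropy loss. Here the backward pass reduces to one application of the chain rule, where dL/dz = p - y. This is a minimal pure-Python sketch that assumes per-example stochastic gradient descent and uses the logical OR function as hypothetical training data. Deeper networks repeat the same chain-rule step layer by layer.

```python
import math
import random
from typing import List, Tuple

Example = Tuple[List[float], float]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data: List[Example], lr: float = 0.5, epochs: int = 2000, seed: int = 0):
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not reorder the caller's list
    w = [rng.uniform(-0.1, 0.1) for _ in data[0][0]]
    b = 0.0
    losses: List[float] = []
    for _ in range(epochs):
        rng.shuffle(data)
        total = 0.0
        for x, y in data:
            # Forward pass
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            p = min(max(p, 1e-12), 1 - 1e-12)  # keep log() finite
            # Loss: binary cross-entropy
            total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
            # Backward pass: chain rule gives dL/dz = p - y and dL/dw_i = (p - y) * x_i
            dz = p - y
            # Update: stochastic gradient descent step
            w = [wi - lr * dz * xi for wi, xi in zip(w, x)]
            b -= lr * dz
        losses.append(total / len(data))  # average loss over the epoch
    return w, b, losses

def predict(w: List[float], b: float, x: List[float]) -> float:
    return 1.0 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0.0

OR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
w, b, losses = train_neuron(OR_DATA)
print(round(losses[0], 3), round(losses[-1], 3))
print([predict(w, b, x) for x, _ in OR_DATA])
```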

Adaptive optimizers such as Adam and RMSprop are commonly used instead of plain SGD to train deep learning models.

Interpretability and Explainability

A key challenge in machine learning-based reasoning is interpretability. Several approaches address this:

- SHAP (SHapley Additive exPlanations) values

- LIME (Local Interpretable Model-agnostic Explanations)

- Attention mechanisms in neural networks

- Decision tree extraction from black-box models

These methods provide insights into how the model arrives at its decisions, which is crucial for many applications.
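The sketch below shows what a Shapley-value explanation computes by evaluating exact Shapley values over every coalition of features. It assumes that "absent" features are replaced by a single baseline value, which is one of several possible conventions. The SHAP library, by contrast, approximates these values efficiently and typically averages over a background dataset. This code does not use the library's API. The model `f` is a hypothetical function with an interaction between two features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set

def shapley_values(f: Callable[[List[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    n = len(x)

    def value(coalition: Set[int]) -> float:
        # Features in the coalition take their real values; the rest use the baseline
        return f([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi: List[float] = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition s
                contribution += weight * (value(s | {i}) - value(s))
        phi.append(contribution)
    return phi

def f(z: List[float]) -> float:  # hypothetical model: one main effect, one interaction
    return 2 * z[0] + 3 * z[1] * z[2]

phi = shapley_values(f, x=[1.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0])
print([round(v, 6) for v in phi])  # [2.0, 1.5, 1.5]
```

The values sum to f(x) - f(baseline) = 5. This efficiency property is what makes the explanation "additive". The interaction term's contribution of 3 is split evenly between features 1 and 2. Exact enumeration grows exponentially with the number of features, which is why practical tools approximate it.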


How to Make LLMs Function as Effective Reasoning Engines

Large Language Models (LLMs) have enabled sophisticated language understanding and generation. However, specific methodologies can further enhance their potential as reasoning engines. Here, we explore four strategies to augment the reasoning abilities of LLMs.

Chain-of-Thought (CoT)

Chain-of-thought (CoT) is a prompting technique that encourages LLMs to articulate their reasoning process step-by-step, enhancing their problem-solving capabilities. Instead of generating a direct answer, the model is prompted to break down the problem into smaller, manageable parts, providing intermediate reasoning steps.

Key Benefits

- Transparency: By making the reasoning process explicit, CoT increases the interpretability of the model’s decisions.

- Error Reduction: Intermediate steps make errors easier to spot and correct before the model reaches a conclusion.

- Complex Problem Solving: CoT is effective for tasks requiring multi-step reasoning, such as mathematical problem-solving and logical inference.

Example

For a math problem like “What is the sum of the first 10 natural numbers?”, CoT would guide the model to first identify the sequence of numbers and then calculate the sum step by step.
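Assuming "natural numbers" here means 1 through 10, the intermediate steps that a chain-of-thought answer might write out look like this:

```latex
\begin{aligned}
1 + 2 + \cdots + 10 &= (1 + 10) + (2 + 9) + (3 + 8) + (4 + 7) + (5 + 6) \\
                    &= 5 \times 11 \\
                    &= 55
\end{aligned}
```

Each line can be checked independently, which is where the transparency and error-spotting benefits listed above come from.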

Reasoning and Acting (ReAct)

ReAct synergizes reasoning and acting by interleaving reasoning traces with task-specific actions. This approach allows LLMs to think through problems and interact with external sources to gather additional information, update plans, and handle exceptions.

Key Benefits

- Dynamic Interaction: ReAct enables models to interact with external databases or APIs to fetch real-time data.

- Improved Accuracy: By continuously updating its action plan based on new information, the model can reduce common pitfalls such as hallucination and error propagation.

- Enhanced Trustworthiness: The interleaved reasoning and action traces provide a clear rationale for the model’s decisions, increasing human trust.

Example

For a fact verification task, ReAct would enable the model to query a Wikipedia API for up-to-date information and ground its answer in the retrieved evidence.
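The interleaving of thoughts, actions, and observations can be expressed as a simple loop. In this Python sketch, a scripted `policy` function stands in for the LLM and a dictionary stands in for the Wikipedia API. Both, along with the function names, are hypothetical placeholders and do not represent any library's interface.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str]  # (thought, action name, action argument)

def react(question: str,
          policy: Callable[[str, List[str]], Step],
          tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> Tuple[str, List[str]]:
    trace: List[str] = []
    for _ in range(max_steps):
        thought, action, arg = policy(question, trace)  # reason about the next step
        trace += [f"Thought: {thought}", f"Action: {action}[{arg}]"]
        if action == "finish":
            return arg, trace
        observation = tools[action](arg)  # act, then feed the result back
        trace.append(f"Observation: {observation}")
    return "NOT ENOUGH INFO", trace  # step budget exhausted

# Hypothetical stand-ins for the Wikipedia API and the LLM
WIKI = {"Eiffel Tower": "The Eiffel Tower is a wrought-iron lattice tower in Paris, France."}

def search(title: str) -> str:
    return WIKI.get(title, "No article found.")

def scripted_policy(question: str, trace: List[str]) -> Step:
    observations = [t for t in trace if t.startswith("Observation:")]
    if not observations:
        return ("I need evidence about the Eiffel Tower.", "search", "Eiffel Tower")
    verdict = "SUPPORTS" if "Paris" in observations[-1] else "REFUTES"
    return ("The article states where the tower is.", "finish", verdict)

answer, trace = react("Claim: The Eiffel Tower is in Paris.", scripted_policy, {"search": search})
print(answer)  # SUPPORTS
print("\n".join(trace))
```

The trace of thoughts, actions, and observations is the artifact that supports the trustworthiness benefit above, because a reviewer can see which evidence the verdict rests on.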

Tree of Thoughts (ToT)

Tree of Thoughts (ToT) extends the CoT approach by enabling the exploration of multiple reasoning paths. This method allows LLMs to consider various potential solutions, evaluate them, and choose the most promising one.

Key Benefits

- Strategic Lookahead: ToT allows models to plan ahead by evaluating different branches of reasoning, similar to game-tree search in game theory.

- Flexibility: The model can backtrack and reconsider previous decisions, making it robust against initial errors.

- Enhanced Problem Solving: ToT is useful for tasks requiring strategic planning and exploration, such as puzzle-solving and creative writing.

Example

In solving the Game of 24, ToT would enable the model to explore various arithmetic operations and sequences, significantly improving its success rate compared to linear reasoning approaches.
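The tree search behind this approach can be sketched in Python. ToT would prompt an LLM to propose candidate steps and evaluate partial states. This sketch instead enumerates every way of combining two remaining numbers, searches depth first, and backtracks when a branch cannot reach 24. `Fraction` keeps the arithmetic exact, so results involving division are compared correctly.

```python
from fractions import Fraction
from typing import List, Optional, Tuple

Item = Tuple[Fraction, str]  # (exact value, expression that produced it)

def solve24(nums: List[int], target: int = 24) -> Optional[str]:
    def search(items: List[Item]) -> Optional[str]:
        if len(items) == 1:  # leaf: one number left
            return items[0][1] if items[0][0] == target else None
        for i in range(len(items)):
            for j in range(len(items)):
                if i == j:
                    continue
                (a, ea), (b, eb) = items[i], items[j]
                rest = [items[k] for k in range(len(items)) if k not in (i, j)]
                # Each arithmetic step is a child "thought" of the current state
                children = [(a + b, f"({ea} + {eb})"), (a - b, f"({ea} - {eb})"),
                            (a * b, f"({ea} * {eb})")]
                if b != 0:
                    children.append((a / b, f"({ea} / {eb})"))
                for value, expr in children:
                    found = search(rest + [(value, expr)])
                    if found:
                        return found  # a solution was found along this branch
                    # otherwise backtrack and try the next child
        return None

    return search([(Fraction(n), str(n)) for n in nums])

print(solve24([4, 9, 10, 13]))
print(solve24([1, 1, 1, 1]))  # None
```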

Reasoning via Planning (RAP)

Reasoning via Planning (RAP) integrates tree-search algorithms with LLMs to guide multi-step reasoning. This method leverages a learned value function to evaluate different reasoning paths, optimizing decision-making.

Key Benefits

- Long-Horizon Planning: RAP is effective for tasks requiring extensive planning and foresight, addressing limitations of shallow search depths.

- Adaptability: The approach can be tailored to various tasks and model sizes, making it versatile across different applications.

- Iterative Improvement: RAP can guide LLMs during inference and training, continuously enhancing their reasoning capabilities.

Example

In strategic security applications, RAP can infer optimal strategies by evaluating multiple potential actions and their outcomes, similar to AlphaZero algorithms in game theory.


Benefits of Using a Reasoning Engine

Reasoning engines are sophisticated systems that emulate human decision-making processes, offering substantial benefits across various domains.

Enhanced Decision-Making

Reasoning engines significantly improve decision-making capabilities by leveraging advanced algorithms and vast datasets. These engines can process complex information and provide well-informed recommendations, which are crucial in healthcare, finance, and strategic planning.

For example, in pharmaceutical quality assurance, a reasoning engine can integrate web mining techniques with case-based reasoning to enhance decision-making processes, ensure compliance with regulations, and maintain product quality.

Improved Efficiency

Efficiency is a critical advantage of reasoning engines, as they can automate and streamline complex tasks that would otherwise require significant human effort. By processing information rapidly and accurately, these engines reduce the time and resources needed for various operations.

Research on quantum inference engines explores using quantum principles to handle intricate data and probabilistic models more efficiently than classical systems, with potential gains in scalability and accuracy.

Predictive Analysis

Reasoning engines excel at predictive analysis, utilizing historical data and advanced algorithms to forecast future trends and outcomes. This capability is invaluable in risk management, market analysis, and strategic planning.

In healthcare, reasoning engines using Chi-Square Case-Based Reasoning (χ² CBR) models have been applied to predict mortality risk in life-threatening conditions, aiding early intervention and better patient management.

While traditional reasoning engines offer these benefits, modern AI applications face unique challenges in reliability and safety. Coralogix addresses these challenges in AI applications by implementing evaluators designed specifically for security and reliability.

Challenges in Implementing Reasoning Engines

Implementing reasoning engines in real-world scenarios presents several significant challenges that engineers and researchers must address:

Data Quality and Consistency

The effectiveness of reasoning engines heavily relies on the quality and consistency of the data they operate on. Poor data quality can lead to incorrect inferences and unreliable decision-making. Challenges in this area include:

- Dealing with incomplete or inconsistent data

- Handling noise and errors in input data

- Ensuring data integrity across different sources and formats

Integration with Existing Systems

Integrating reasoning engines into existing IT infrastructures can be complex and resource-intensive. Key challenges include:

- Ensuring compatibility with legacy systems

- Managing data flow between reasoning engines and other components

- Maintaining performance and responsiveness of integrated systems

Scalability Issues

As the volume and complexity of data grow, reasoning engines must be able to scale effectively. Key challenges include:

- Handling large-scale data processing

- Optimizing performance for real-time or near-real-time reasoning

- Balancing computational resources and reasoning capabilities

Ethical and Legal Considerations

The use of reasoning engines, especially in sensitive domains, raises important ethical and legal questions:

- Ensuring fairness and avoiding bias in decision-making processes

- Maintaining transparency and explainability of reasoning processes

- Complying with data protection and privacy regulations

- Addressing liability issues in automated decision-making systems

Conclusion

Reasoning engines represent a powerful frontier in artificial intelligence, offering sophisticated decision-making capabilities across many domains. From rule-based systems to machine learning-based approaches, they are changing how we process and interpret data.

While they offer enhanced decision-making, improved efficiency, and predictive analysis, implementing them poses challenges related to data quality, system integration, scalability, and ethical considerations.

As the field of AI advances, integrating traditional reasoning engines with modern AI techniques becomes increasingly important. Coralogix is leading the integration by developing an evaluator engine that enhances the reliability and security of AI applications, particularly those utilizing Large Language Models.

As we look to the future, the evolution of reasoning engines in areas like explainable AI and hybrid approaches promises to bridge the gap between raw data and actionable insights, driving innovation across industries.

