Agent vs Assistant: The key distinction between Olly and the competition

The market is saturated with agents and assistants, making it difficult to tell them apart. However, the difference between these two approaches is significant. They offer radically distinct levels of impact, reflecting major differences in both their technical complexity and the quality of their inferences. Let’s figure out the distinction.

What is an assistant?

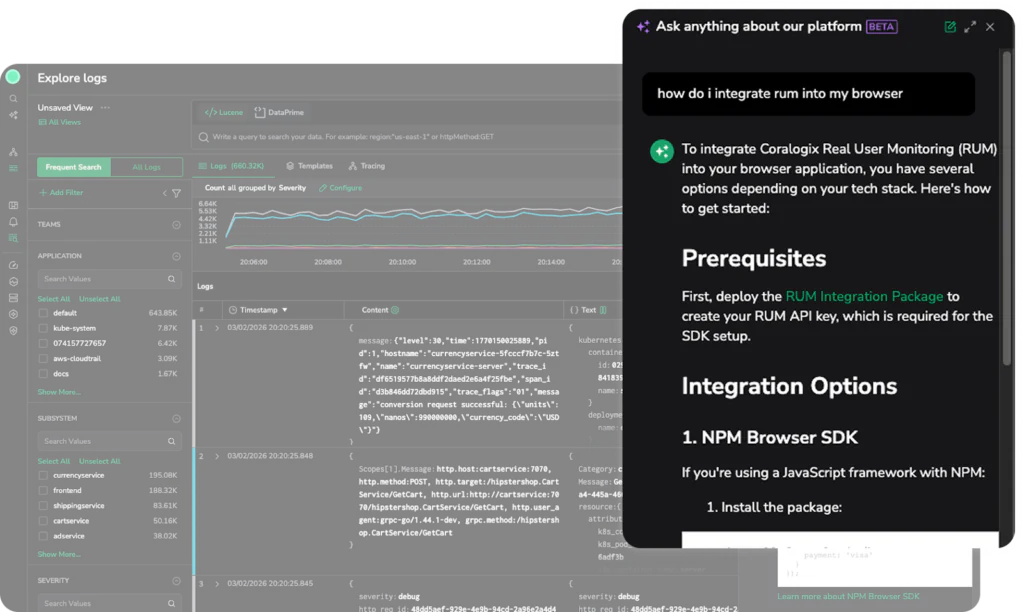

“AI assistant” is a general term used in different contexts, but it typically refers to a large language model (LLM) that has been prompted towards a certain use case. Assistants are excellent for refining complex information that is already organised. For example, in the Coralogix platform, Cora is an assistant that answers questions about the platform and how it works.

When should you use an assistant?

Assistants (such as our in-app natural language query) excel at workflow augmentation. They work best when they’re improving an existing tool or platform. This is why they’re almost always seen as additions, built within a user interface. Users see good productivity improvements (around 10-20%), but only when applied within the realm of the product.

When shouldn’t you use an assistant?

For unbounded or unclear requests, assistants tend to get stuck and hallucination rates spike. They lack the tooling needed to work through a complex problem step by step, and so fill the gaps with whatever they can get from their context databases. This often results in a subpar experience for the user. The same is true if you rely on an assistant alone. Assistants work well as workflow augmentation tools, but not as entire workflows in and of themselves.

This means that for higher-level, unbounded, or highly ambiguous questions that require reasoning and autonomy, assistants often fall short. It is for this reason that Olly is not an assistant. It’s an agent.

What is an AI Agent?

AI agents are designed to handle much bigger, more complex tasks. They are architecturally and technologically more sophisticated than traditional AI assistants. This sophistication is what allows them to bring a vast array of tools to bear on a problem space.

Crucially, AI agents are able to make decisions autonomously. They have a suite of tools at their command that they can bring to the table when breaking up a complex problem. This ability, to take complex problems, investigate and triage them, and ultimately derive a meaningful response, is the hallmark of an agentic system.

An agentic architecture: Olly and what it brings to problems

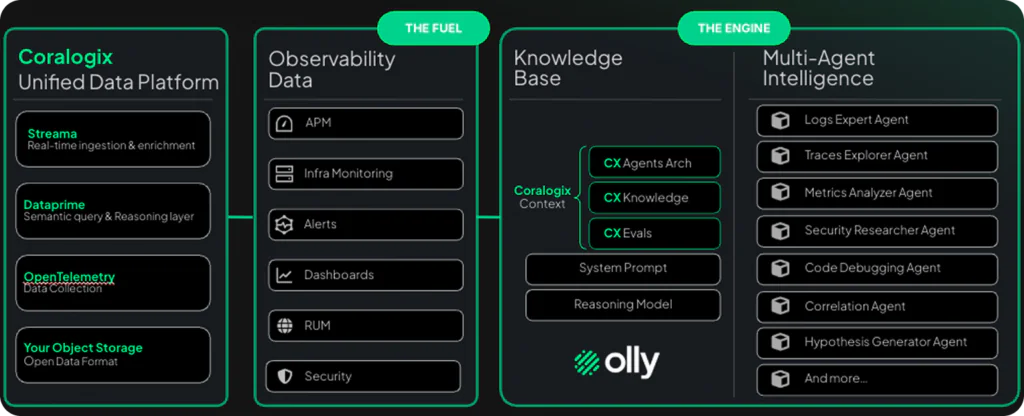

Olly’s power lies in its sophisticated multi-agent architecture, purpose-built to tackle the unbounded nature of operational and business challenges. Rather than relying on a single agent to reason its way through complexity, Olly deploys a coordinated fleet of specialized agents, each engineered to excel within its own domain.

This ensemble includes agents dedicated to log analysis, trace exploration, metrics interpretation, security research, code debugging, correlation analysis, and hypothesis generation, with the capability to extend far beyond. At the orchestration layer, Olly synthesizes insights across these specialized agents, enabling them to work in concert to break down intricate problems that would leave traditional assistants foundering.

When you pose a high-level question to Olly, the system doesn’t simply retrieve and refine existing information. Instead, it reasons about which agents and tools are most relevant to your inquiry, sequences their execution intelligently, and weaves together their findings into actionable intelligence, supported by the evidence in the telemetry.
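To make the select, sequence, and synthesize loop concrete, here is a minimal sketch of an orchestration layer. It is an illustration only, not Olly's implementation: the `Agent`, `Finding`, `select_agents`, and `orchestrate` names are hypothetical, and simple keyword overlap stands in for the model-driven reasoning a real agentic system would use to decide which agents are relevant.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    agent: str    # which specialized agent produced this
    summary: str  # what it found


@dataclass
class Agent:
    name: str
    keywords: set[str]             # hypothetical relevance signal
    run: Callable[[str], Finding]  # the agent's investigation step


def select_agents(question: str, agents: list[Agent]) -> list[Agent]:
    """Keep agents whose keywords overlap the question, most relevant first."""
    words = set(question.lower().split())
    scored = [(len(agent.keywords & words), agent) for agent in agents]
    relevant = [pair for pair in scored if pair[0] > 0]
    # sort() is stable, so ties keep their original registration order.
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [agent for _, agent in relevant]


def orchestrate(question: str, agents: list[Agent]) -> list[Finding]:
    """Run the selected agents in order and collect their findings."""
    return [agent.run(question) for agent in select_agents(question, agents)]


def make_agent(name: str, keywords: set[str]) -> Agent:
    """Build a stub agent that only reports that it ran (assumption for demo)."""
    return Agent(name, keywords, lambda q: Finding(name, f"{name} examined: {q}"))


if __name__ == "__main__":
    fleet = [
        make_agent("logs", {"errors", "exceptions"}),
        make_agent("traces", {"slow", "checkout", "latency"}),
        make_agent("security", {"breach", "cve"}),
    ]
    for finding in orchestrate("why is checkout slow and throwing errors", fleet):
        print(f"[{finding.agent}] {finding.summary}")
```

In this sketch the traces agent runs first because it matches two words in the question, the logs agent runs second, and the security agent is skipped entirely. A production orchestrator would also feed each agent's findings into the next step rather than running them independently.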

This architectural approach is grounded in a knowledge base enriched by Coralogix’s observability data, powered by advanced reasoning models, and orchestrated through a carefully designed system prompt. It means Olly can handle the messiness of real-world operational problems without needing you to specify exactly how it should investigate them. Add to that Olly’s ability to develop context over time, meaning it remembers previous queries and prompts. It’s the difference between asking a question and watching an expert team work on your behalf.

What does this look like in action?

When prompting an assistant, we often ask bounded questions like the example above: “How do I install RUM into my browser?” This doesn’t tax the assistant too heavily, and gives it simple semantic tags with which it can find the answer in its context database. None of this is necessary for Olly, because Olly is designed to reason through a problem. Let’s go step by step.

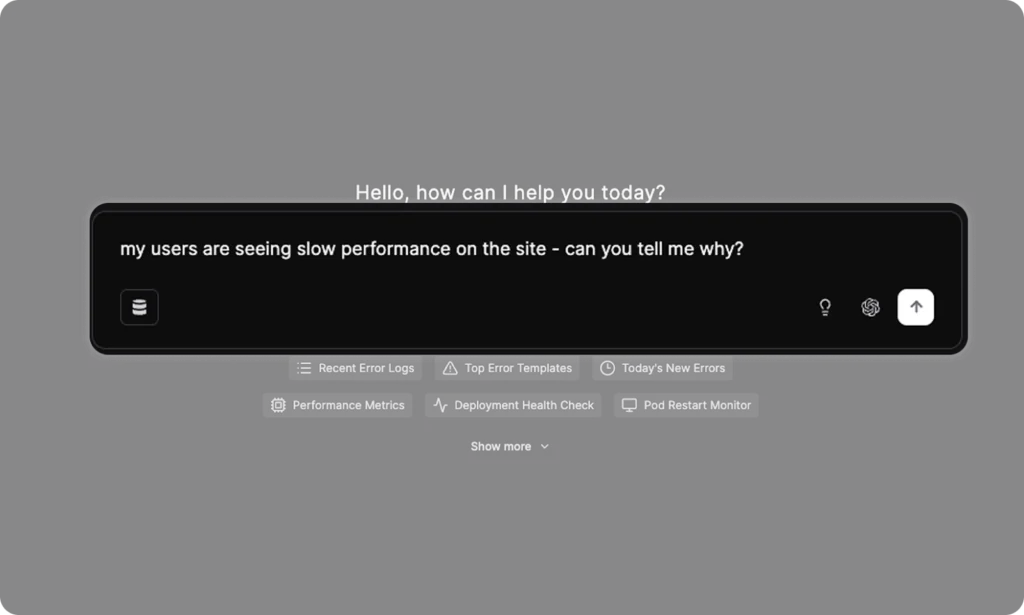

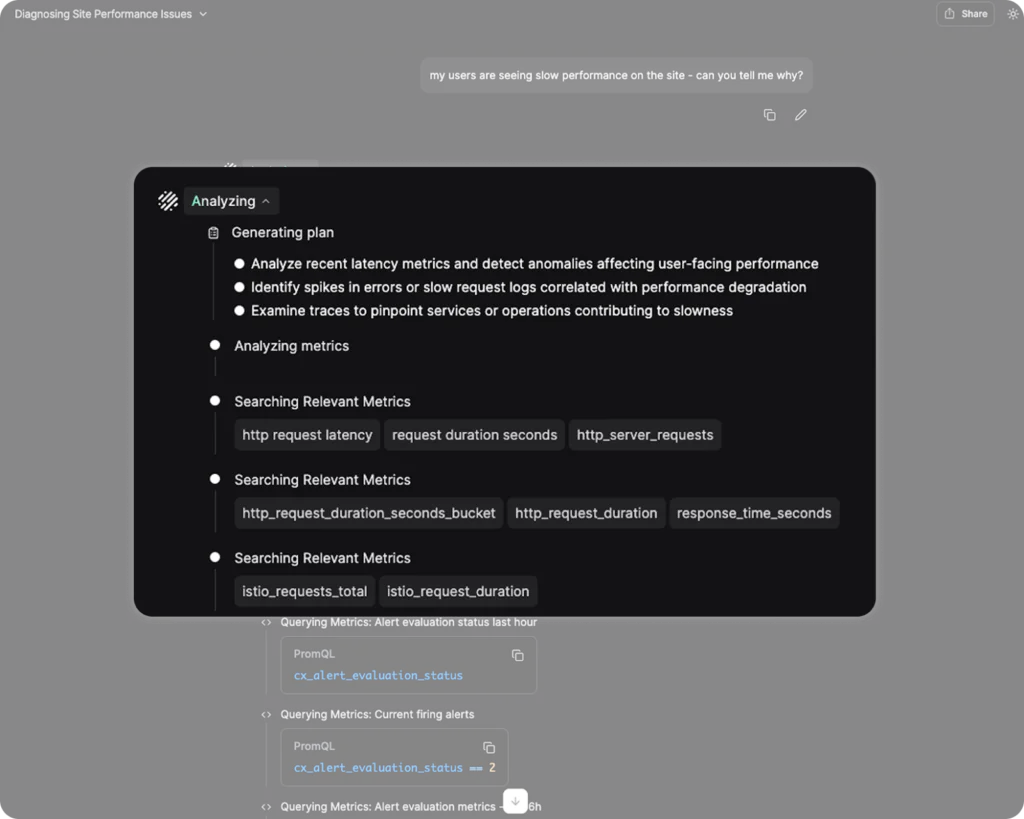

We’re sharing with Olly the production issue we have, the same way we would ask a colleague to help investigate it. Note that we have not provided any technical detail. Olly is designed to start from a simple description and expand into all relevant telemetry to better understand a problem. It does this by building a plan and leveraging the agents at its disposal to solve the problem.

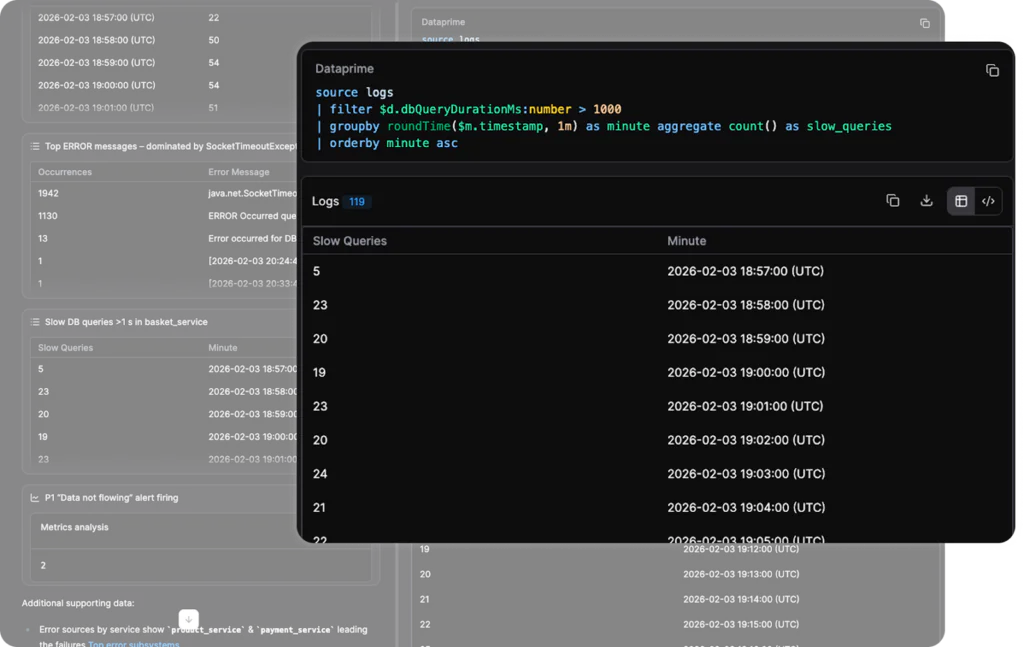

By leveraging its agents, Olly quickly narrows down the problem. In this example, Olly has no metrics to work with, so it brings spans and logs into the equation. It homes in on the core of the problem by running DataPrime queries, each becoming more sophisticated as its understanding of the problem space improves.

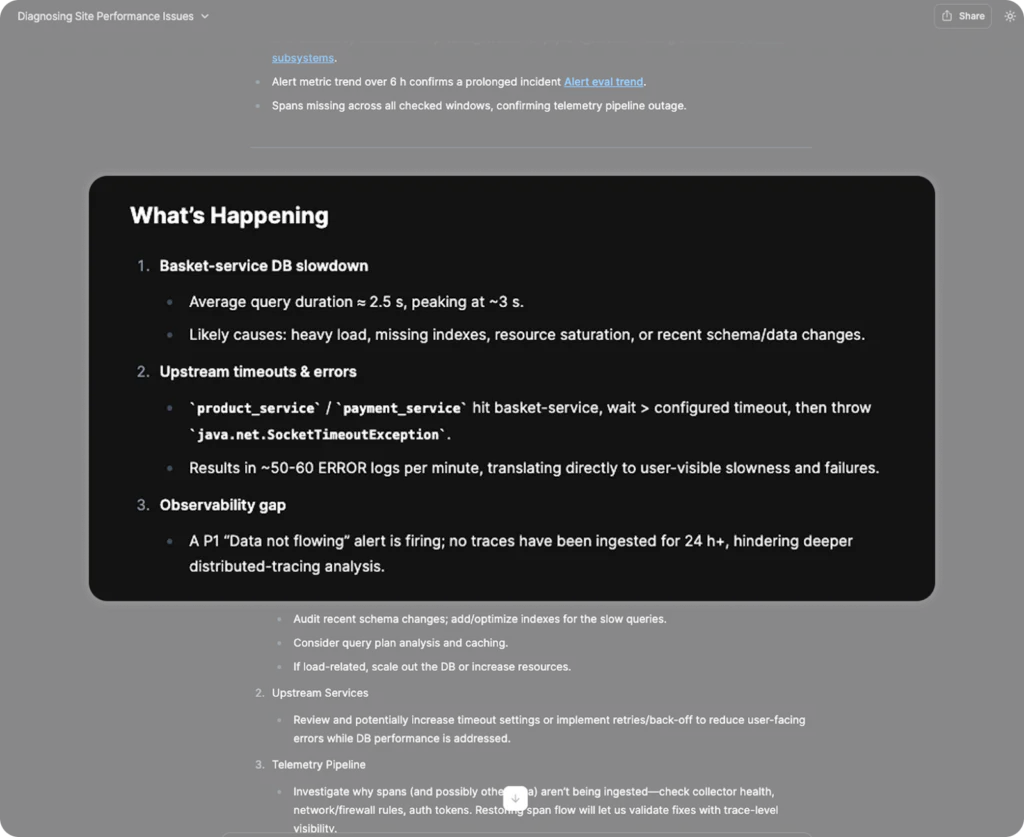

After it has gathered the evidence, Olly is able to test its hypotheses and understand if it has discovered correlation or causality. In this case, Olly identifies the precise root cause of the problem, with the database at the bottom of a microservices architecture causing the slowdown that users are experiencing.

Why is an agent crucial for modern observability?

In Olly’s final analysis, it has identified multiple impact sites, all stemming from the same root cause, but it also finds something else. A separate alarm has triggered, the “Data not flowing” alarm, pointing to a gap in telemetry. An assistant does not have the tools necessary to investigate adjacent problems, but the root cause of an issue is often multifaceted.

Agents are able to bring the necessary collection of tools and specialized models, combined with a sophisticated hypothesis engine and orchestration layer, not only to answer the question that the user has asked, but also to identify adjacent problems that may have an effect. This is the nature of good analysis: to remain open-minded and think laterally about a problem.

This isn’t about workflow augmentation – it’s a new way

Assistants are often built into existing user interfaces to improve and augment existing workflows. This is a powerful way to leverage LLMs, but Olly is not a workflow augmentation tool. It is an entirely new path through the system, a way of joining together dots that a human operator would struggle to find, and doing so in minutes rather than hours or days. Agents excel at building new workflows, and Olly is among the best in the industry.

Olly is growing – check it out

With new customers every day and hours of effort turning into minutes, Olly is bringing an entirely new workflow to organizations, and with it, insane efficiencies, improved MTTR, and more. Try the Olly free trial today and explore how Olly, powered by the Coralogix data layer, can bring your observability to the next level.