Mapping Statistics – What You Need to Know

When your Elasticsearch cluster ingests data, it needs to understand how that data is structured. It does this through a process called mapping, which defines the type of each field in a given document, for example a number or a string of text. But how do you know whether the mapping process is healthy? And why do you need to monitor it? This is where mapping statistics come in.

Mapping statistics give you an overall view of the mapping process, including the number of fields that have been mapped and the mapping exceptions and errors that have occurred. These statistics are essential to keeping your cluster working as well as possible.

What is mapping?

Different data types can be queried in different ways, and Elasticsearch has a myriad of optimizations under the hood for the data types that it stores. Elasticsearch records each field together with its data type so that when you query that field, it knows how to handle it. Fields get their mappings in one of two ways.

1. Dynamic Mapping to automatically create Mapping Fields

When Elasticsearch ingests a document with fields that it has not seen before, it will attempt to automatically detect the type of each new field and add it to the mapping as soon as the document is indexed. This is great when you’re just starting out with the data, because you can explore and learn about the information you have in your cluster without defining anything up front.
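To make the detection step more concrete, here is a rough Python sketch of the default rules dynamic mapping applies to incoming JSON values. It is a simplified stand-in, not Elasticsearch's implementation: the function names are hypothetical, and the date check is a loose approximation of the default date detection, which is configurable on a real cluster.

```python
import re

# Loose approximation of an ISO-style date; an assumption for illustration only.
_ISO_DATE = re.compile(
    r"\d{4}-\d{2}-\d{2}(T\d{2}:\d{2}:\d{2}(\.\d+)?(Z|[+-]\d{2}:\d{2})?)?"
)


def guess_dynamic_type(value):
    """Approximate the field type dynamic mapping would pick for a JSON value."""
    if isinstance(value, bool):  # check before int: bool is a subclass of int
        return "boolean"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "float"
    if isinstance(value, dict):
        return "object"
    if isinstance(value, list):
        # Arrays take the type of their first non-null element.
        first = next((v for v in value if v is not None), None)
        return guess_dynamic_type(first)
    if isinstance(value, str):
        return "date" if _ISO_DATE.fullmatch(value) else "text"
    return None  # null values do not add a field


def guess_mapping(doc):
    """Guess a type for every top-level field, skipping fields with no type."""
    mapping = {}
    for name, value in doc.items():
        field_type = guess_dynamic_type(value)
        if field_type is not None:
            mapping[name] = field_type
    return mapping


if __name__ == "__main__":
    print(guess_mapping({"user": "alice", "age": 30, "signup": "2024-01-15"}))
```

Note that on a real cluster a dynamically mapped string also gets a keyword sub-field by default, which this sketch leaves out for brevity.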

If you need to customize the dynamic mapping process, you can do this with dynamic templates. These templates tell Elasticsearch to apply mappings for specific fields that have been dynamically added. This can be useful when you’ve got lots of new fields, and some simple rules will ensure they are mapped correctly.
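As an illustration, a dynamic template is just part of the mapping body you send when creating an index. The sketch below builds such a body as a Python dictionary. The template names and the "ip_*" naming convention are made up for this example; the keys (dynamic_templates, match_mapping_type, match, mapping) are the ones Elasticsearch uses.

```python
import json


def build_dynamic_template_body():
    """Build an index-creation body with two illustrative dynamic templates."""
    return {
        "mappings": {
            "dynamic_templates": [
                {
                    # Hypothetical rule: string fields named ip_* become ip fields.
                    "ip_fields": {
                        "match_mapping_type": "string",
                        "match": "ip_*",
                        "mapping": {"type": "ip"},
                    }
                },
                {
                    # Hypothetical rule: every other new string becomes a keyword.
                    "strings_as_keywords": {
                        "match_mapping_type": "string",
                        "mapping": {"type": "keyword"},
                    }
                },
            ]
        }
    }


if __name__ == "__main__":
    # This JSON could be sent as the body of a create-index request.
    print(json.dumps(build_dynamic_template_body(), indent=2))
```

Templates are checked in order and the first match wins, which is why the more specific rule comes first here.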

2. Explicit Mapping to specify the correct Elasticsearch datatype

When the default behavior for mapping is not what you want, you can be more surgical with the mapping process. Explicit mapping allows you to define the mapping for each field yourself. This is especially useful when you need to state things like the format of a date string.
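For example, an explicit mapping that pins down a date format might look like the following sketch. The field names (created_at, status_code, message) are hypothetical and only there to show the shape of the request body.

```python
import json


def build_explicit_mapping_body():
    """Build an index-creation body with explicitly typed fields."""
    return {
        "mappings": {
            "properties": {
                # Dates arrive as strings such as "2024-01-15 10:30:00".
                "created_at": {"type": "date", "format": "yyyy-MM-dd HH:mm:ss"},
                "status_code": {"type": "integer"},
                "message": {"type": "text"},
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_explicit_mapping_body(), indent=2))
```

Without the explicit format, a date written this way would not match the default date detection and would most likely end up mapped as plain text.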

Mapping Explosions and Errors

As with all things, there is a limit to these features. Firstly, when the structure of your data changes frequently and new field names keep appearing, dynamic mapping can add far too many fields to the index. This is known as a mapping explosion. Secondly, if a field changes its type, this is going to cause errors because Elasticsearch has already mapped the field as one type and is now being asked to ingest it as another. So how do you manage and fix these issues?

The Mapping Fields Limit

Out of the box, Elasticsearch allows 1000 fields in the mappings of each index. This limit, controlled by the index.mapping.total_fields.limit setting, is in place to guard against mapping explosions and to keep your index at a manageable size. With too many mapped fields, you use more memory and slow down your queries. The mapping statistics available in the Elasticsearch API can tell you how close you are to this limit, and you can reconfigure Elasticsearch to allow for more fields. Note that increasing this limit can have unintended side effects. Proceed with caution!
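One way to see how close an index is to the limit is to count the fields in the response of the get mapping API. The sketch below does this for a mapping that has already been fetched and parsed into a dictionary. The helper names and the sample mapping are hypothetical, and the count is an approximation: Elasticsearch also counts things like field aliases and runtime fields, which are ignored here.

```python
DEFAULT_TOTAL_FIELDS_LIMIT = 1000  # default of index.mapping.total_fields.limit


def count_mapped_fields(properties):
    """Recursively count fields, object sub-fields, and multi-fields."""
    total = 0
    for field in properties.values():
        total += 1                                    # the field itself
        total += len(field.get("fields", {}))         # multi-fields, e.g. .keyword
        total += count_mapped_fields(field.get("properties", {}))  # nested objects
    return total


def field_limit_usage(mapping_response, index, limit=DEFAULT_TOTAL_FIELDS_LIMIT):
    """Return (fields_used, fraction_of_limit) for one index."""
    properties = mapping_response[index]["mappings"].get("properties", {})
    used = count_mapped_fields(properties)
    return used, used / limit


# Body for an update-settings request that raises the limit (use with caution).
RAISE_LIMIT_BODY = {"index.mapping.total_fields.limit": 2000}

if __name__ == "__main__":
    # Hand-written sample shaped like a get mapping response.
    sample = {
        "logs": {
            "mappings": {
                "properties": {
                    "message": {"type": "text"},
                    "user": {
                        "properties": {
                            "name": {
                                "type": "text",
                                "fields": {"keyword": {"type": "keyword"}},
                            },
                            "age": {"type": "long"},
                        }
                    },
                }
            }
        }
    }
    used, fraction = field_limit_usage(sample, "logs")
    print(f"{used} fields mapped, {fraction:.1%} of the limit")
```

A check like this could feed an alert that fires well before the limit is reached, for instance at 80% usage.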

Mapping Exceptions Count

Mapping exceptions occur when Elasticsearch is not able to map a given field. This most commonly happens when a field has changed from one type to another (for example, a numeric value to a string). Once the field has changed, the cluster will raise an error every single time it ingests a document carrying that field in its new type, and the rejected documents will be missing from your query results. We’ve written all about mapping exceptions to help you better understand how to prevent this from happening in the future. Mapping exceptions are a fundamental part of your mapping statistics that will let you know as soon as something is wrong with a document. With this level of visibility, you’ll be able to trace the source and either fix the information or reindex your documents as needed.
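If you index through the bulk API, one simple way to build a mapping exceptions count yourself is to tally the per-item errors in each bulk response. The sketch below assumes a response already parsed into a dictionary. The function name and sample data are hypothetical, and the set of error type names is an assumption you should adjust for your Elasticsearch version, since the names have changed between releases.

```python
from collections import Counter

# Assumed error type names for mapping failures; adjust for your version.
MAPPING_ERROR_TYPES = {"mapper_parsing_exception", "document_parsing_exception"}


def count_mapping_exceptions(bulk_response):
    """Count mapping-related item failures per index in a bulk response."""
    counts = Counter()
    for item in bulk_response.get("items", []):
        # Each item has a single key naming the action (index, create, ...).
        for result in item.values():
            error = result.get("error")
            if error and error.get("type") in MAPPING_ERROR_TYPES:
                counts[result.get("_index", "unknown")] += 1
    return counts


if __name__ == "__main__":
    # Hand-written sample shaped like a bulk API response.
    sample = {
        "errors": True,
        "items": [
            {"index": {"_index": "logs", "status": 201}},
            {
                "index": {
                    "_index": "logs",
                    "status": 400,
                    "error": {
                        "type": "mapper_parsing_exception",
                        "reason": "failed to parse field [response_time] of type [long]",
                    },
                }
            },
        ],
    }
    print(dict(count_mapping_exceptions(sample)))
```

The per-index counts make it easier to trace which data source started sending a field in the wrong type.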

Summary

Your mapping statistics are an essential ingredient in your Elasticsearch monitoring. Engineering and DevOps teams will almost always focus on the obvious metrics, like CPU, memory, and network. Alerts on your mapping statistics, however, can mark the difference between a perfectly functioning cluster and a broken system.

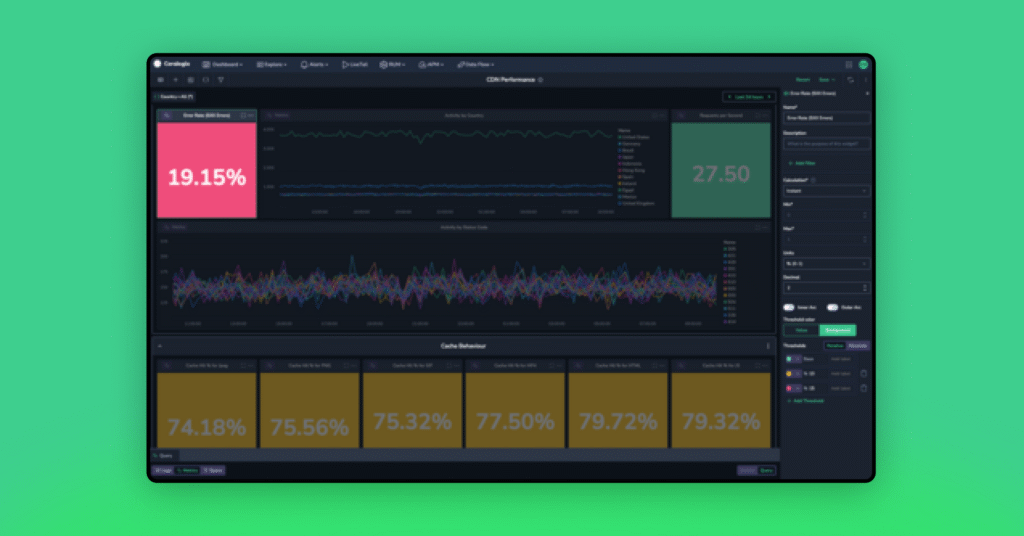

Optimizing your Elasticsearch cluster is a complex task that can be very time-consuming. If these kinds of operational concerns are a distraction from the core goals of your business, you may wish to outsource your logging and metrics to a SaaS solution. A great solution is Coralogix, with machine learning, complex alerting, and much more that will help you scale your technology as you scale your ambitions.