The Coralogix Operator: A Tale of ZIO and Kubernetes

As our customers scale and utilize Coralogix for more teams and use cases, we decided to make their lives easier and allow them to set up their Coralogix account using declarative, infrastructure-as-code techniques.

In addition to setting up Log Parsing Rules and Alerts through the Coralogix user interface and REST API, Coralogix users can now manage them with modern, cloud-native infrastructure provisioning platforms.

One part of this is managing Coralogix through Kubernetes custom resources. These custom resources behave just like any other Kubernetes resource. Their main benefit is that they can be packaged and deployed alongside the services running in the cluster (through a Helm chart, for example), and their definitions can be managed with source code management tools. This also means that changes to Coralogix rules and alerts can go through the same code review process as application changes. This is a big win on all fronts: better auditing, better change management through pull requests and reviews, easier rollbacks, and more.

Intro

To demonstrate this functionality, let’s define an example alert. We start by defining it in a custom AlertSet resource:

```yaml
apiVersion: "coralogix.com/v1"
kind: AlertSet
metadata:
  name: test-alertset-1
spec:
  alerts:
    - name: test-alert-2
      description: "Testing the alerts operator"
      isActive: false
      severity: WARNING
      filters:
        filterType: TEXT
        severities:
          - ERROR
          - CRITICAL
        metadata:
          applications:
            - production
          subsystems:
            - my-app
            - my-service
        text: "authentication failed"
      condition:
        type: MORE_THAN
        parameters:
          threshold: 120
          timeframe: 10Min
          groupBy: host
      notifications:
        emails:
          - "[email protected]"
          - "[email protected]"
        integrations: []
```

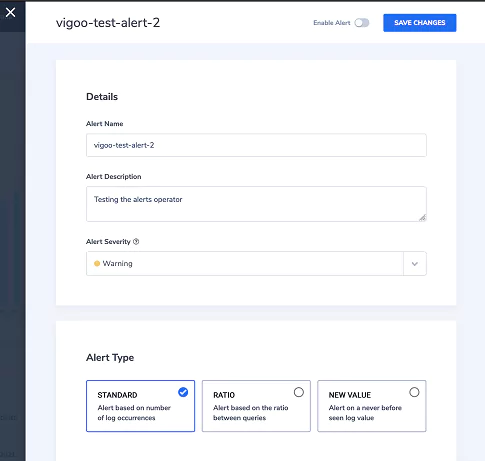

An AlertSet resource can describe several alerts that will be created in the Coralogix account associated with the Kubernetes cluster; this example defines a single one. The structure of the resource mirrors the Coralogix alert definition interface.

Once the resource definition is saved in a file, it can be applied and persisted to Kubernetes using kubectl:

```shell
kubectl apply -f test-alertset-1.yaml
```

The new alert immediately appears in the Coralogix user interface.

How is this possible with Kubernetes? The Operator pattern can be used to extend the cluster with custom behavior. An operator is a containerized application running in the cluster that uses the Kubernetes API to react to particular events. Once installed and deployed in a cluster, the operator will automatically register its custom resources, allowing users to immediately start working with the Coralogix resources without any additional setup.

In the above use case, the operator would watch the event stream of Coralogix-specific custom resources and apply any addition/modification/deletion through the Coralogix API to the user’s account.
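The reaction described above can be sketched without any Kubernetes or Coralogix dependency. This is a minimal illustration, not the operator's code: `WatchEvent`, `Alert`, `InMemoryAlertApi`, and `reconcile` are hypothetical names, and an in-memory map stands in for the Coralogix API.

```scala
// Hypothetical watch events, one per kind of change the operator reacts to
sealed trait WatchEvent[+T]
final case class Added[T](item: T) extends WatchEvent[T]
final case class Modified[T](item: T) extends WatchEvent[T]
final case class Deleted[T](item: T) extends WatchEvent[T]

// Hypothetical, simplified alert definition keyed by name
final case class Alert(name: String, severity: String, isActive: Boolean)

// Hypothetical stand-in for the Coralogix alerts API: a mutable map replaces remote calls
final class InMemoryAlertApi {
  private val alerts = scala.collection.mutable.Map.empty[String, Alert]
  def upsert(alert: Alert): Unit = alerts.update(alert.name, alert)
  def delete(name: String): Unit = { alerts.remove(name); () }
  def snapshot: Map[String, Alert] = alerts.toMap
}

// Applies one event from the custom resource's event stream to the (simulated) account
def reconcile(api: InMemoryAlertApi)(event: WatchEvent[Alert]): Unit =
  event match {
    case Added(alert)    => api.upsert(alert)
    case Modified(alert) => api.upsert(alert)
    case Deleted(alert)  => api.delete(alert.name)
  }

val api = new InMemoryAlertApi
val events: List[WatchEvent[Alert]] = List(
  Added(Alert("test-alert-2", "WARNING", isActive = false)),
  Modified(Alert("test-alert-2", "CRITICAL", isActive = true)),
  Added(Alert("other-alert", "INFO", isActive = true)),
  Deleted(Alert("other-alert", "INFO", isActive = true))
)
events.foreach(reconcile(api))
```

Treating Added and Modified the same way (an upsert) keeps the handler idempotent, which helps if a watch delivers an event for an object the account already knows about, for example after a reconnect. A real operator would also have to handle API failures, which this sketch ignores.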

Operators can be written in any language. While other operator frameworks and libraries exist, especially for Go, we wanted to use Scala and ZIO to implement the Coralogix Operator in order to enjoy Scala’s expressive type system and the efficiency and developer productivity proven by many of our existing Scala projects.

Birth of a New Library

So, faced with the task of writing a Kubernetes Operator with ZIO, we investigated the optimal way to communicate with the Kubernetes API.

After evaluating several existing Java and Scala Kubernetes client libraries, we found that none of them checked all of our boxes. Each had its own flaws: non-idiomatic data models, the need to wrap everything manually in effects, lack of support for custom resources, and so forth. We felt we could do better and decided to create a new library: an idiomatic, ZIO-native library for working with the Kubernetes API.

This new library is developed in parallel with the Coralogix Operator, which already supports numerous features and runs in production. Both are fully open source. The library itself will soon be officially released.

Meet zio-k8s

Let’s have a closer look at our new library, called zio-k8s. It consists of several sub-projects:

- The client library (zio-k8s-client), written using a mix of generated and hand-written code. The hand-written part contains a generic Kubernetes client implementation built on sttp. On top of that, generated data models and ZIO modules provide access to the full Kubernetes API;

- Support for optics libraries (zio-k8s-client-quicklens, zio-k8s-client-monocle), allowing for easy modification and interrogation of the generated data model;

- The custom resource definition (CRD) support (zio-k8s-crd), which is an sbt plugin providing the same data model and client code generator functionality for custom resource types;

- And finally, the operator library (zio-k8s-operator), which provides higher-level constructs built on top of the client library to help implement operators.

We have some more ideas for additional higher-level libraries coming soon, so stay tuned. Meanwhile, let’s dive into the different layers.

Client

As mentioned above, the client library is a mix of generated code and a core generic Kubernetes client. To get a feel for it, let's take a look at an example:

The following code will reimplement the example from the Kubernetes documentation for running Jobs in the cluster.

First, we need to import a couple of the Kubernetes data models:

```scala
import com.coralogix.zio.k8s.model.batch.v1.{Job, JobSpec}
import com.coralogix.zio.k8s.model.core.v1.{Container, PodSpec, PodTemplateSpec}
import com.coralogix.zio.k8s.model.pkg.apis.meta.v1.ObjectMeta
```

and also the ZIO module for working with Job resources:

```scala
import com.coralogix.zio.k8s.client.batch.v1.jobs
import com.coralogix.zio.k8s.client.batch.v1.jobs.Jobs
```

Now we will create a helper function that builds up the Job resource for calculating the digits of PI:

```scala
def job(digits: Int): Job =
  Job(
    metadata = ObjectMeta(generateName = "demo-job"),
    spec = JobSpec(
      backoffLimit = 4,
      template = PodTemplateSpec(
        spec = PodSpec(
          restartPolicy = "Never",
          containers = Vector(
            Container(
              name = "pi",
              image = "perl",
              command = Vector("perl", "-Mbignum=bpi", "-wle", s"print bpi($digits)")
            )
          )
        )
      )
    )
  )
```

As we can see, the Kubernetes data models are simple case classes whose names and fields match the Kubernetes names exactly, so translating YAML examples into their fully typed Scala representation is straightforward.

One feature worth mentioning is the use of a custom optional type instead of Option in these data models. It provides an implicit conversion from T to Optional[T], which significantly reduces boilerplate in cases like the one above while limiting the scope of this conversion to the Kubernetes models. In practice, the custom optional type works just like the built-in Option, and converting between the two is straightforward.
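A minimal sketch of such a type shows why it reduces boilerplate. It is a simplified assumption rather than zio-k8s's actual definition: `Optional`, `Present`, `Absent`, `fromValue`, and the toy `ObjectMeta` are all defined here for illustration. Because the implicit conversion lives in `Optional`'s companion object, the compiler only finds it where an `Optional` is the expected type, which keeps the conversion scoped to the models.

```scala
import scala.language.implicitConversions

// Hypothetical, simplified optional type in the spirit of the one described above
sealed trait Optional[+A] {
  def toOption: Option[A] = this match {
    case Optional.Present(value) => Some(value)
    case Optional.Absent         => None
  }
  def isDefined: Boolean = toOption.isDefined
}

object Optional {
  final case class Present[+A](value: A) extends Optional[A]
  case object Absent extends Optional[Nothing]

  // Defined in the companion object, so it is part of the implicit scope only
  // when the target type is an Optional (for example, a model field)
  implicit def fromValue[A](value: A): Optional[A] = Present(value)
}

// Toy model class whose optional fields default to Absent
final case class ObjectMeta(
  name: Optional[String] = Optional.Absent,
  generateName: Optional[String] = Optional.Absent
)

// No Some(...) wrapping needed: the String is converted to Optional[String]
val meta = ObjectMeta(generateName = "demo-job")
```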

Next, let’s create the ZIO program that will create a Job in the Kubernetes cluster:

```scala
val runJobs: ZIO[Jobs with Clock, K8sFailure, Unit] =
  for {
    job  <- jobs.create(job(2000), K8sNamespace.default)
    name <- job.getName
    _    <- waitForJobCompletion(name)
  } yield ()
```

The first thing to note is that each resource has its own ZIO layer. In this example, runJobs requires the Jobs module in its environment. This lets us access resources in a resourcename.method fashion (like jobs.create), and it also documents which resources a given effect needs access to.

The second interesting thing is getName. The Kubernetes data schema is full of optional values, and in practice we often want to assume that a given field is present. We have already seen that, when constructing resources, the custom optional type reduces the pain of this. The getter methods are the other side of it: they are simple effects that unpack an optional field and fail if it is not defined. They can be used wherever an optional field is actually required for the code to work correctly, as above, where we need to know the name of the newly created Job resource.
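The getter pattern can be illustrated with `Either` standing in for a ZIO effect. In this sketch the failure type `K8sFailure`, its `UndefinedField` case, and the toy `Job` model (which uses the standard `Option` for brevity) are hypothetical; only the idea of unpacking an optional field or failing comes from the text above.

```scala
// Hypothetical failure type standing in for the client's real error type
sealed trait K8sFailure
final case class UndefinedField(field: String) extends K8sFailure

// Toy model with optional fields
final case class Job(name: Option[String], namespace: Option[String]) {
  // Getters unpack an optional field or fail with a descriptive error;
  // Either stands in for the effect returned by the real generated getters
  def getName: Either[K8sFailure, String] =
    name.toRight(UndefinedField("metadata.name"))
  def getNamespace: Either[K8sFailure, String] =
    namespace.toRight(UndefinedField("metadata.namespace"))
}

// Getters compose in a for-comprehension, stopping at the first missing field
def qualifiedName(job: Job): Either[K8sFailure, String] =
  for {
    ns   <- job.getNamespace
    name <- job.getName
  } yield s"$ns/$name"
```

Because the getters short-circuit, the for-comprehension reports the first missing field and skips the rest, much as a failing effect stops the remainder of a ZIO program.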

To finish the example, let's see how waitForJobCompletion can be implemented:

```scala
def waitForJobCompletion(jobName: String): ZIO[Jobs with Clock, K8sFailure, Unit] = {
  val checkIfAlreadyCompleted =
    for {
      job        <- jobs.get(jobName, K8sNamespace.default)
      isCompleted = job.status.flatMap(_.completionTime).isDefined
      _          <- ZIO.never.unless(isCompleted)
    } yield ()

  val watchForCompletion =
    jobs
      .watchForever(Some(K8sNamespace.default))
      .collect {
        // Only look for MODIFIED events for the job given by name
        case Modified(item) if item.metadata.flatMap(_.name).contains(jobName) =>
          // We need its status only
          item.status
      }
      // Dropping updates until completionTime is defined
      .dropWhile {
        case None         => true
        case Some(status) => status.completionTime.isEmpty
      }
      // Run until the first such update
      .runHead
      .unit

  checkIfAlreadyCompleted race watchForCompletion
}
```

This is a good example of taking advantage of ZIO features when working with a ZIO-native Kubernetes client. We define two effects: one that gets the resource and checks whether it has already completed, and another that watches changes to Job resources to detect when the currently running Job completes. We then race the two checks, so that we detect the Job's completion whether its completionTime was already set or gets set later.

The watch functionality is built on ZIO Streams. For a given resource type, watchForever provides an infinite stream of TypedWatchEvents. With the stream combinators, we can simply express the logic to wait until the Job with the given name has been modified so that its completionTime is defined.
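The stream logic does not depend on Kubernetes itself. As an illustration only, the sketch below replays the same collect, dropWhile, and take-first steps over Scala 2.13's `LazyList` instead of a ZIO stream. `Job`, `JobStatus`, and the event classes are simplified hypothetical stand-ins for the generated models and `TypedWatchEvent`, and the race with the initial `get` is left out.

```scala
// Hypothetical, simplified stand-ins for the generated Job models
final case class JobStatus(completionTime: Option[String])
final case class Job(name: String, status: Option[JobStatus])

// Hypothetical, simplified watch events
sealed trait TypedWatchEvent[+T]
final case class Added[T](item: T) extends TypedWatchEvent[T]
final case class Modified[T](item: T) extends TypedWatchEvent[T]
final case class Deleted[T](item: T) extends TypedWatchEvent[T]

// Same pipeline as watchForCompletion, over a lazy sequence instead of a ZIO stream
def firstCompletion(events: LazyList[TypedWatchEvent[Job]], jobName: String): Option[JobStatus] =
  events
    .collect {
      // Only MODIFIED events for the job with the given name; keep just the status
      case Modified(item) if item.name == jobName => item.status
    }
    // Drop updates until completionTime is defined
    .dropWhile {
      case None         => true
      case Some(status) => status.completionTime.isEmpty
    }
    // Take the first remaining update, if any
    .headOption
    .flatten

val events: LazyList[TypedWatchEvent[Job]] = LazyList(
  Added(Job("pi", None)),
  Modified(Job("other", Some(JobStatus(Some("t1"))))),
  Modified(Job("pi", Some(JobStatus(None)))),
  Modified(Job("pi", Some(JobStatus(Some("t2")))))
)
val completed = firstCompletion(events, "pi")

// Because LazyList is lazy, the pipeline also terminates on an infinite sequence
// as soon as a completed update appears, much like runHead on an infinite stream
val endless: LazyList[TypedWatchEvent[Job]] =
  Modified(Job("pi", Some(JobStatus(Some("done"))))) #:: LazyList.continually(Modified(Job("pi", None)))
```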

Under the Hood

The code generator takes the OpenAPI definition of the Kubernetes API, processes it, and generates Scala source code by building ASTs with Scalameta. The data models are generated as case classes with the getter methods mentioned earlier, implicit JSON codecs, and support for resource metadata such as kind and apiVersion.

In addition, for each resource it generates a package with a type alias representing the set of ZIO layers required for accessing that resource. Let's see what it looks like for Pod resources:

```scala
type Pods = Has[NamespacedResource[Pod]]
  with Has[NamespacedResourceStatus[PodStatus, Pod]]
  with Has[NamespacedLogSubresource[Pod]]
  with Has[NamespacedEvictionSubresource[Pod]]
  with Has[NamespacedBindingSubresource[Pod]]
```

Each resource has either a NamespacedResource or a ClusterResource interface and, optionally, a set of subresource interfaces. The code generator also adds accessor functions to the package for each operation provided by these interfaces. This combination lets us use the syntax shown above to access concrete resources, such as jobs.create(), and also write polymorphic functions that work with any resource or subresource.
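To make the point about polymorphic functions concrete, here is a dependency-free sketch. `NamespacedResource` is reduced to a hypothetical two-method interface that returns `Either` with `String` errors instead of ZIO effects; it is not the generated interface. `InMemoryResource`, `getOrCreate`, `Pod`, and `ConfigMap` are likewise illustrative, and `Either.orElse` requires Scala 2.13 or later.

```scala
import scala.collection.mutable

// Hypothetical, cut-down resource interface
trait NamespacedResource[T] {
  def get(name: String, namespace: String): Either[String, T]
  def create(item: T, namespace: String): Either[String, T]
}

// In-memory implementation keyed by (namespace, name)
final class InMemoryResource[T](nameOf: T => String) extends NamespacedResource[T] {
  private val store = mutable.Map.empty[(String, String), T]

  def get(name: String, namespace: String): Either[String, T] =
    store.get((namespace, name)).toRight(s"$namespace/$name not found")

  def create(item: T, namespace: String): Either[String, T] = {
    val key = (namespace, nameOf(item))
    if (store.contains(key)) Left(s"${key._1}/${key._2} already exists")
    else { store.update(key, item); Right(item) }
  }
}

// Written once against the interface, usable with any resource type
def getOrCreate[T](resource: NamespacedResource[T], namespace: String, name: String)(default: => T): Either[String, T] =
  resource.get(name, namespace).orElse(resource.create(default, namespace))

final case class Pod(name: String)
final case class ConfigMap(name: String, data: Map[String, String])

val pods = new InMemoryResource[Pod](_.name)
val configMaps = new InMemoryResource[ConfigMap](_.name)

val pod1 = getOrCreate(pods, "default", "web")(Pod("web"))
val cm1 = getOrCreate(configMaps, "default", "settings")(ConfigMap("settings", Map("k" -> "v")))
// A second call finds the existing ConfigMap instead of creating a new one
val cm2 = getOrCreate(configMaps, "default", "settings")(ConfigMap("settings", Map("k" -> "changed")))
```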

Custom Resource Support

The zio-k8s-crd sbt plugin extends the client with support for custom resources. The idea is to put the Custom Resource Definition (CRD) YAML files into the project's repository and reference them from the build definition:

```scala
lazy val root = Project("demo", file("."))
  .settings(
    // ...
    externalCustomResourceDefinitions := Seq(
      file("crds/crd-coralogix-rule-group-set.yaml"),
      file("crds/crd-coralogix-loggers.yaml"),
      file("crds/crd-coralogix-alert-set.yaml")
    ),
  )
  .enablePlugins(K8sCustomResourceCodegenPlugin)
```

The plugin works very similarly to the code generation step of zio-k8s-client itself, generating model classes and client modules for each referenced CRD. The primary difference is that for custom resources the data models are generated with guardrail, a Scala code generator for OpenAPI. Guardrail supports a wide set of OpenAPI features, so it should be able to process arbitrary custom resource definitions.

Operator Framework

The zio-k8s-operator library builds on top of the client, providing higher-level constructs that help implement operators. One of these features registers a CRD: running the following effect during the operator's startup guarantees that the custom resource AlertSet, with the definition alertsets.customResourceDefinition (both generated by the zio-k8s-crd plugin), is registered within the Kubernetes cluster:

```scala
registerIfMissing[AlertSet](alertsets.customResourceDefinition)
```

Another useful feature for operators is guaranteeing that only a single instance runs at a time. There are several ways to implement this; the operator library currently provides one, based on creating a resource tied to the pod's lifetime. For the library's user, it is as simple as wrapping the ZIO program in a Leader.leaderForLife block, or using the ZManaged resource provided by Leader.lease around the application.
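The leader-for-life approach can be simulated in a few lines. The sketch assumes the lock is a named resource that only one client can create and that is garbage-collected together with the pod that owns it (as an owner reference would make Kubernetes do). `FakeCluster`, `runAsLeader`, and the lock and pod names are hypothetical, and unlike a real implementation, a losing candidate here gives up instead of waiting and retrying.

```scala
import scala.collection.concurrent.TrieMap

// Hypothetical stand-in for the cluster: lock resources keyed by name,
// each recording the pod that owns it
final class FakeCluster {
  private val locks = TrieMap.empty[String, String]

  // Atomic "create": succeeds only if no lock with this name exists yet,
  // mirroring the API server rejecting a duplicate create
  def tryCreateLock(lockName: String, ownerPod: String): Boolean =
    locks.putIfAbsent(lockName, ownerPod).isEmpty

  // Deleting a pod also removes the locks it owns (simulated garbage collection)
  def deletePod(pod: String): Unit =
    locks.filterInPlace { case (_, owner) => owner != pod }

  def lockOwner(lockName: String): Option[String] = locks.get(lockName)
}

// Runs `program` only if this pod manages to create the lock; the lock is
// never released explicitly, it lives as long as its owning pod
def runAsLeader[A](cluster: FakeCluster, lockName: String, pod: String)(program: => A): Option[A] =
  if (cluster.tryCreateLock(lockName, pod)) Some(program) else None

val cluster = new FakeCluster
val first  = runAsLeader(cluster, "coralogix-operator-lock", "operator-pod-a")("a is leader")
val second = runAsLeader(cluster, "coralogix-operator-lock", "operator-pod-b")("b is leader")
// When pod a goes away, its lock goes with it and pod b can take over
cluster.deletePod("operator-pod-a")
val third  = runAsLeader(cluster, "coralogix-operator-lock", "operator-pod-b")("b is leader")
```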

To define an operator for a given resource with this library, we simply define an event processor function of the following type:

```scala
type EventProcessor[R, E, T] =
  (OperatorContext, TypedWatchEvent[T]) => ZIO[R, OperatorFailure[E], Unit]
```

Then we create the operator with Operator.namespaced. To keep the primary operator logic separate from other concerns, we also define operator aspects: modifiers that wrap the operator and can provide things like logging and metrics. A logging aspect is provided by the library out of the box, with the following signature:

```scala
def logEvents[T: K8sObject, E]: Aspect[Logging, E, T]
```

It is based on zio-logging and can be simply applied to an event processor with the @@ operator:

```scala
def eventProcessor(): EventProcessor[
  Logging with alertsets.AlertSets with AlertServiceClient,
  CoralogixOperatorFailure,
  AlertSet
] = ???

Operator.namespaced(
  eventProcessor() @@ logEvents @@ metered(metrics)
)(Some(namespace), buffer)
```
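How aspects compose can be shown without ZIO. In this simplified sketch, which is not the library's code, `EventProcessor` wraps the processing function in a case class so that `@@` can be an ordinary method, `Either` replaces the ZIO effect, and `OperatorContext`, `WatchEvent`, `Aspect`, `logEvents`, and `counted` are hypothetical stand-ins defined here.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical, simplified stand-ins for the operator types
final case class OperatorContext(resourceType: String)

sealed trait WatchEvent[+T]
final case class Added[T](item: T) extends WatchEvent[T]
final case class Deleted[T](item: T) extends WatchEvent[T]

// Either[E, Unit] stands in for ZIO[R, OperatorFailure[E], Unit]
final case class EventProcessor[E, T](run: (OperatorContext, WatchEvent[T]) => Either[E, Unit]) {
  def @@(aspect: Aspect[E, T]): EventProcessor[E, T] = aspect(this)
}

// An aspect turns an event processor into another one with extra behavior around it
trait Aspect[E, T] {
  def apply(processor: EventProcessor[E, T]): EventProcessor[E, T]
}

def describe[T](event: WatchEvent[T]): String = event match {
  case Added(item)   => s"added $item"
  case Deleted(item) => s"deleted $item"
}

// Logging aspect: records every event before delegating to the wrapped processor
def logEvents[E, T](log: ListBuffer[String]): Aspect[E, T] = new Aspect[E, T] {
  def apply(processor: EventProcessor[E, T]): EventProcessor[E, T] =
    EventProcessor[E, T] { (ctx, event) =>
      log += s"${ctx.resourceType}: ${describe(event)}"
      processor.run(ctx, event)
    }
}

// Metrics-like aspect: counts successful and failed invocations
final class Counters { var succeeded = 0; var failed = 0 }

def counted[E, T](counters: Counters): Aspect[E, T] = new Aspect[E, T] {
  def apply(processor: EventProcessor[E, T]): EventProcessor[E, T] =
    EventProcessor[E, T] { (ctx, event) =>
      val result = processor.run(ctx, event)
      if (result.isRight) counters.succeeded += 1 else counters.failed += 1
      result
    }
}

// The core processor contains only the business logic
val alertProcessor: EventProcessor[String, String] = EventProcessor[String, String] {
  case (_, Added(_))             => Right(())
  case (_, Deleted("protected")) => Left("cannot delete protected alert")
  case (_, Deleted(_))           => Right(())
}

val log = ListBuffer.empty[String]
val counters = new Counters
val processor = alertProcessor @@ logEvents(log) @@ counted(counters)

val ctx = OperatorContext("AlertSet")
val events: List[WatchEvent[String]] = List(Added("a"), Deleted("protected"), Deleted("a"))
val results = events.map(event => processor.run(ctx, event))
```

Because `@@` is applied left to right, `counted` wraps `logEvents`, which in turn wraps the core processor, so the business logic stays unaware of both concerns.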

Summary

Coralogix users can already take advantage of tighter integration with their infrastructure and application provisioning processes by using the Coralogix Operator. Check out the tutorial on our website to get started.

As for zio-k8s, everything we've described in this post is already implemented and available under the friendly Apache 2.0 open-source license. Some work remains before an official 1.0 release, to make it as easy to use as possible and to cover most of the functionality, but it's very close. PRs and feedback are definitely welcome! And as mentioned, this is just the beginning; we have many ideas in store for making the Kubernetes APIs easily accessible and ergonomic for ZIO users. Stay tuned!

Both the library and the Coralogix Operator can be found on GitHub.