Using Log Data to Prevent Lambda Cold Starts

AWS Lambda enables you to run serverless functions in the AWS cloud, either by invoking them manually or by configuring trigger events. To ensure your Lambda functions are running smoothly, you can monitor metrics that measure performance, invocations, and concurrency. However, even with continuous monitoring, you will occasionally run into what’s termed a Lambda cold start. There are various ways to prevent AWS Lambda cold starts. This article explains how to achieve this with log data.

AWS Lambda Performance Monitoring

Monitoring your functions is necessary to verify that functions are being called correctly, that you are allocating the right amount of resources, and to locate bottlenecks or errors. In particular, you should monitor how Lambda interacts with other services, including calls and request times.

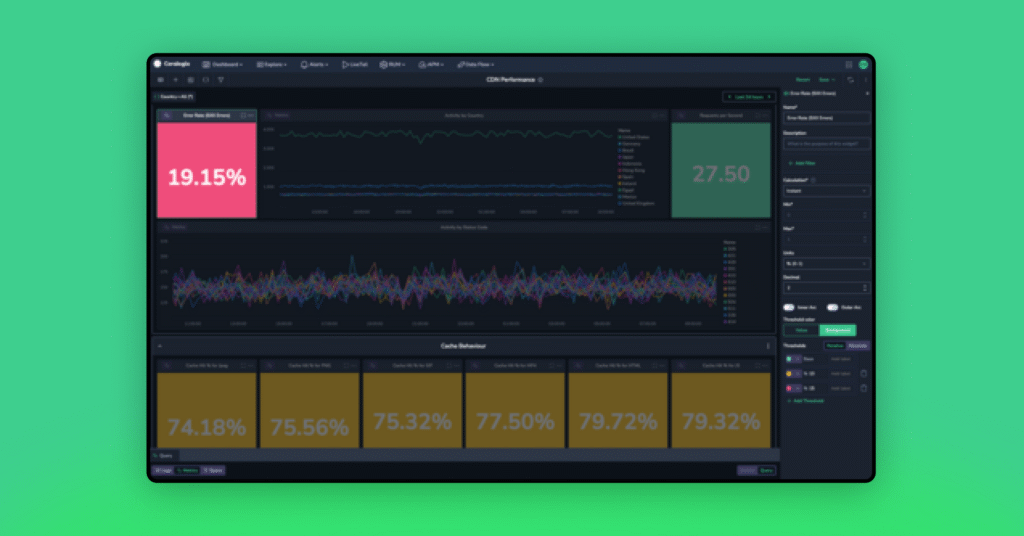

Fortunately, Lambda exposes metrics that you can access through the CloudWatch console or ingest with third-party log management tools like Coralogix. These metrics include:

- Invocation—includes invocations, function errors, delivery failures, and throttles. You can use these metrics to gauge the rate of issues during runtime and determine possible causes for poor performance.

- Performance—includes duration and iterator age. You can use these metrics to better understand the performance of single invocations, average performance, or maximum performance.

- Concurrency—includes concurrent executions on both provisioned and unreserved resources. You can use these metrics to determine the necessary capacity of simultaneous invocations and to measure whether you are efficiently provisioning resources.
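As a rough illustration of how the invocation metrics combine, the sketch below derives error and throttle rates from per-period sums. The `summarize` helper and the sample numbers are hypothetical; in practice the sums would come from the CloudWatch `Invocations`, `Errors`, and `Throttles` metrics.

```javascript
// Hypothetical helper: turns per-period metric sums into rates.
function summarize({ invocations, errors, throttles }) {
  // Throttled requests are rejected before they run, so they are counted
  // separately from Invocations.
  const attempts = invocations + throttles;
  return {
    errorRate: invocations === 0 ? 0 : errors / invocations,
    throttleRate: attempts === 0 ? 0 : throttles / attempts,
  };
}

// Made-up sample values for a single reporting period.
const stats = summarize({ invocations: 950, errors: 19, throttles: 50 });
console.log(stats);
```

A rising throttle rate alongside a flat error rate suggests a capacity limit rather than a code defect, which is the kind of distinction these metrics are meant to surface.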

What Is an AWS Lambda Cold Start?

A Lambda cold start occurs when a new container instance needs to be created for a function to run. Cold starts can happen if it’s the first time running the function, because the function hasn’t run recently, or because the function’s execution places a demand that exceeds the current available capacity of existing instances.

During a Lambda cold start, the function package is loaded into a container, the appropriate configurations are made, and the container is deployed. This process generally takes anywhere from 400 milliseconds to a few seconds, depending on your function language, memory allocation, and code size.

Once you have a function instance, AWS retains it for a period of time that it does not publish. If you call the function again within that period, the instance is already available and can run immediately, so that invocation avoids a cold start. However, if you exceed the amount of time AWS allows between invocations, your function instance is eliminated. When this happens, AWS must initialize a new instance before your function can run, resulting in a cold start.
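One common way to make this lifecycle visible in your logs is a module-level flag, since module scope runs once per function instance. The following is a minimal sketch under that assumption; the `isColdStart` variable and the log field names are illustrative, not part of any AWS API.

```javascript
// Module scope runs once per function instance, so this flag is true only
// for the first invocation served by a fresh instance.
let isColdStart = true;

const handler = async (event) => {
  const coldStart = isColdStart;
  isColdStart = false;

  // Structured log line; the field names here are illustrative.
  console.log(JSON.stringify({ message: 'invocation', coldStart }));

  return { coldStart };
};

exports.handler = handler;
```

Filtering your logs on `coldStart: true` then gives a direct count of how often new instances are being created.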

3 Ways to Prevent Lambda Cold Starts Using Log Data

When using Lambda, you pay for the compute time your function spends initializing and running. This means that every time a cold start occurs, you get charged for that non-productive initialization time. This isn’t a big deal if you only run functions occasionally, but once you run a function a few million times, the cost quickly adds up.

To ensure that your costs stay low and your performance stays high, you should try to minimize cold starts as much as possible. The following tips provide a fundamental basis for achieving this. Once you’ve implemented these tips, you can move on to more advanced Lambda cold starts optimization methods.

1. Instrumenting Your Lambda Functions and Measuring Performance

To measure performance, you need to deploy and invoke functions under the conditions you want to measure. You can then analyze the resulting metrics. This requires invoking your function multiple times, under many conditions, and aggregating the data. The easiest way to do this is through instrumentation.

Two instrumentation tools you should consider are Gatling and AWS X-Ray. Gatling enables you to repeatedly invoke your function and view the resulting metrics in a report with accompanying visualizations. You can also use it to view raw results.

X-Ray enables you to examine end-to-end request traces via the AWS Console. This data includes HTTP calls and AWS stack calls. X-Ray also provides a visualization of your initialization time and a map of your serverless application components that you can use to narrow down bottlenecks.

With the data collected from your instrumentation, you can identify how efficiently your functions are operating and make changes to increase performance. In particular, these metrics can help you identify when your applications are initiating cold starts, providing an accurate baseline for function provisioning requirements.
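The same signal is also available directly in log data. Lambda writes a `REPORT` line at the end of each invocation, and that line includes an `Init Duration` field only when the invocation required a cold start. The sketch below aggregates such lines; the helper names and the sample log lines are made up for illustration.

```javascript
// Parses one Lambda REPORT log line; returns null for any other line.
function parseReportLine(line) {
  if (!line.startsWith('REPORT')) return null;
  // Anchor on RequestId so "Billed Duration" and "Init Duration" are not matched.
  const duration = /RequestId: \S+\s+Duration: ([\d.]+) ms/.exec(line);
  const init = /Init Duration: ([\d.]+) ms/.exec(line);
  if (!duration) return null;
  return {
    durationMs: parseFloat(duration[1]),
    // Init Duration is present only on cold starts.
    initMs: init ? parseFloat(init[1]) : null,
  };
}

// Aggregates parsed lines into a simple cold start summary.
function coldStartStats(lines) {
  const reports = lines.map(parseReportLine).filter((r) => r !== null);
  const cold = reports.filter((r) => r.initMs !== null);
  const totalInit = cold.reduce((sum, r) => sum + r.initMs, 0);
  return {
    invocations: reports.length,
    coldStarts: cold.length,
    coldStartRate: reports.length === 0 ? 0 : cold.length / reports.length,
    avgInitMs: cold.length === 0 ? 0 : totalInit / cold.length,
  };
}

// Made-up sample log lines.
const sampleLines = [
  'START RequestId: a1 Version: $LATEST',
  'REPORT RequestId: a1\tDuration: 12.50 ms\tBilled Duration: 13 ms\tMemory Size: 128 MB\tMax Memory Used: 60 MB\tInit Duration: 210.40 ms',
  'REPORT RequestId: a2\tDuration: 3.10 ms\tBilled Duration: 4 ms\tMemory Size: 128 MB\tMax Memory Used: 60 MB',
];
console.log(coldStartStats(sampleLines));
```

Tracking the cold start rate and average initialization time over time gives you the baseline against which to judge any warming or provisioning change.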

2. Using CloudWatch Events to Reduce Frequency of Cold Starts

Outside of provisioning function instances, your best bet for reducing cold starts is to try to keep your functions warm. You can do this by periodically invoking the function and sending a request to a small handler function that contains warming logic.

An example handler function is:

    const warmer = require('lambda-warmer')

    exports.handler = async (event) => {
      if (await warmer(event)) return 'warmed'
      return 'I’m warm'
    }

To use this function, you also need to add a few lines to your event trigger configuration:

    myWarmer:
      name: myWarmer
      handler: myWarmer.handler
      events:
        - schedule:
            name: warmer-schedule
            rate: rate(10 minutes)
            enabled: true
            input:
              warmer: true
              concurrency: 1

The best way to schedule these warming invocations is with CloudWatch Events. You can attach a rate or cron schedule to a rule so that it pings your function every 5 to 15 minutes. However, a single scheduled ping keeps only one function instance warm.

If you need to keep concurrent instances warm, you need to adopt other tooling and use it to interface with CloudWatch Events. One option to consider is Lambda Warmer, a module for Node.js that enables you to warm multiple functions and define custom concurrency levels. It also integrates with the Serverless Framework and AWS SAM.
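To make the warming logic concrete, here is a dependency-free sketch of the check a handler might perform. It assumes the scheduled event carries a `warmer: true` field, as in the schedule input shown earlier; `isWarmingEvent` is a hypothetical helper and is not the Lambda Warmer implementation.

```javascript
// Hypothetical helper: treats any event with `warmer: true` as a warming ping.
function isWarmingEvent(event) {
  return Boolean(event && event.warmer === true);
}

const handler = async (event) => {
  if (isWarmingEvent(event)) {
    // Return early so warming pings skip the real work.
    return 'warmed';
  }
  // Placeholder for the function's real logic.
  return { statusCode: 200, body: 'handled real request' };
};

exports.handler = handler;
```

Returning early keeps warming invocations short, so they add as little billed time as possible.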

3. Keep Shared Data in Memory by Loading Outside the Main Event Handler Function

An additional strategy is to use log data to identify functions that load dependencies on every invocation. Loading dependencies inside the handler adds to the duration of each invocation. Instead, you can load them outside of your handler logic, in the function’s initialization code. Anything initialized outside of the handler runs once per container and stays in memory for as long as that container remains warm.

Using your log data as a guide, you can modify function packages to use this method. This eliminates the need to import dependencies with each invocation, which shortens runtime. Making this change can significantly boost your function performance when used in combination with the above method for warming. Although this strategy does not affect cold starts, it does enable you to boost overall performance.
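The pattern can be sketched as follows. `createClient` is a hypothetical stand-in for any expensive setup step (an SDK client, parsed configuration, a database connection), and `initCount` exists only to show how often that setup runs.

```javascript
// Counts how many times the expensive setup runs (for illustration only).
let initCount = 0;

// Hypothetical stand-in for an expensive dependency.
function createClient() {
  initCount += 1;
  return { query: (id) => `result for ${id}` };
}

// Created once per function instance, when the module is first loaded.
const client = createClient();

const handler = async (event) => {
  // Reuses the shared client instead of rebuilding it on every invocation.
  return client.query(event.id);
};

exports.handler = handler;
```

However many times a warm instance invokes `handler`, `createClient` runs only once, so its cost is paid at initialization rather than on every request.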

Conclusion

To reduce cold starts and their impact, you can instrument your Lambda functions and measure performance. You can also use CloudWatch Events to reduce the frequency of cold starts. Finally, you can keep shared data in memory by loading it outside the main event handler function. This serves to lower costs and improve efficiency, creating a more scalable and robust system.