Why a No-Index Observability Architecture is Essential

The Hidden Cost Crisis in Observability

When was the last time you asked about the architecture behind your observability provider? For most IT professionals, whether in development, operations, or security, it’s not a question that naturally comes up. Yet this architectural detail can be the difference between insight at scale and runaway costs.

People are drawn to the features, the shiny things that promise to unlock insight, speed up response times, and tighten security. You picture them improving your workflow and driving outcomes. But fast-forward a few quarters: logs and traces accumulate rapidly, and you start wondering where all this data is being stored. In indexed storage? In high-cost tiers?

The bills begin to rise. Then they spike. Suddenly your observability budget is out of control. Leadership raises red flags. You’re under pressure from finance. You may even find yourself doing what many organizations quietly resort to: turning off telemetry to cut costs.

And just like that, your observability platform, the very tool meant to give you visibility, starts creating blind spots instead. This scenario is far too common, as I emphasized in a recent talk at an AWS User Group in Malta.

The Data Tsunami at Your Network Edge

Let’s consider a common scenario involving a Content Delivery Network (CDN) and a Web Application Firewall (WAF).

These systems generate enormous volumes of data:

- High-traffic websites generate 1-10 GB of CDN logs per day per million visitors (Cloudflare State of the Web Report, 2024)

- WAF log entries are also larger: 2-20 KB each, compared to 0.5-2 KB for CDN log entries (AWS WAF Documentation)

- During active attacks, WAFs can block 20-40% of incoming traffic, creating massive log spikes (Akamai State of the Internet Report, 2024)

- Most organizations can only afford to retain 1-5% of their CDN/WAF logs with traditional observability solutions (Gartner Market Guide for Observability, 2024)

Meanwhile, 43% of breaches now begin at the web edge, precisely where this data originates (Verizon Data Breach Investigations Report, 2024).

Visibility Fails Where It’s Needed Most

This leads to our first major problem: The area requiring the most visibility, the network edge, is where traditional observability makes you go blind.

The Vicious Cycle of Traditional Observability

Every interaction with your CDN and WAF produces valuable telemetry:

- CDN logs detail cache status, client IPs, user agents, and more

- WAF logs record threats, rules matched, and attack signatures

- Edge logs show connection-level traffic and protocol behavior

According to Cloudflare Radar (2024), the average enterprise website faces over 2,000 bot attacks daily, with each attack generating hundreds of WAF log entries.

These high-volume logs spike during attacks and traffic surges, exactly when you need the most insight. But because traditional platforms index all this data, costs grow linearly (or worse) with volume.
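To make the linear-cost point concrete, here is a back-of-the-envelope sketch. It uses the upper end of the 1-10 GB per million visitors per day range cited above. The per-GB prices are hypothetical placeholders, not any vendor's actual rates. The model is deliberately simple: storage priced per GB, so the bill is a straight multiple of volume.

```python
# Back-of-the-envelope model: log volume, and therefore cost, scales with traffic.
# ASSUMPTION: these per-GB prices are hypothetical placeholders, not real vendor rates.
HYPOTHETICAL_PRICE_PER_GB = {
    "indexed": 2.00,
    "object_storage": 0.10,
}


def monthly_log_volume_gb(
    million_visitors_per_day: float, gb_per_million_visitors: float, days: int = 30
) -> float:
    """Log volume grows in direct proportion to traffic."""
    return million_visitors_per_day * gb_per_million_visitors * days


def monthly_cost(volume_gb: float, price_per_gb: float) -> float:
    """Volume-priced storage: cost is a straight multiple of volume."""
    return volume_gb * price_per_gb


if __name__ == "__main__":
    # Upper bound of the 1-10 GB per million visitors per day range cited in the text.
    for visitors in (1, 5, 20):
        volume = monthly_log_volume_gb(visitors, 10.0)
        costs = {tier: monthly_cost(volume, price) for tier, price in HYPOTHETICAL_PRICE_PER_GB.items()}
        print(
            f"{visitors}M visitors/day -> {volume:,.0f} GB/month, "
            + ", ".join(f"{tier}: ${cost:,.0f}" for tier, cost in costs.items())
        )
```

Doubling traffic doubles the bill in every tier. The only lever that changes the slope is the price per GB of the tier the data lands in.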

Why Edge Telemetry is Uniquely Challenging

Unlike application logs, which you control, CDN and WAF logs:

- Are generated by third parties

- Come in large, irregular volumes

- Must be retained for long periods

- Contain dense and critical information

The Tradeoff Triangle: Cost, Coverage, Performance

Traditional platforms leave you with three ways to manage cost, each flawed:

- Index everything: Powerful but extremely expensive

- Sample data: Saves money but introduces blind spots

- Shorten retention: Lowers cost but hinders investigations

The second core problem emerges: all of these are forms of compromise, and in security, compromise equals vulnerability.
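To see why sampling creates blind spots, assume each log line is kept independently with probability p. A low-volume attack that produces n log lines then vanishes from the sample entirely with probability (1 - p)^n. The sketch below evaluates that formula. The attack sizes are hypothetical, and the 1% and 5% rates echo the retention range cited earlier.

```python
def prob_attack_invisible(lines_in_attack: int, sample_rate: float) -> float:
    """Probability that uniform random sampling keeps none of an attack's log lines.

    Assumes each line is kept independently with probability `sample_rate`,
    so the chance that every one of them is dropped is (1 - sample_rate) ** lines.
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    if lines_in_attack < 0:
        raise ValueError("lines_in_attack must be non-negative")
    return (1.0 - sample_rate) ** lines_in_attack


if __name__ == "__main__":
    # Hypothetical low-and-slow attacks of various sizes, sampled at 1% and 5%.
    for lines in (10, 50, 100):
        for rate in (0.01, 0.05):
            p_miss = prob_attack_invisible(lines, rate)
            print(f"{lines:>4} lines at {rate:.0%} sampling: {p_miss:.1%} chance of seeing nothing")
```

At 1% sampling, an attack that leaves 10 log lines is missed about 90% of the time (0.99^10 ≈ 0.904). Even one that leaves 100 lines is missed roughly 37% of the time (0.99^100 ≈ 0.366).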

The Coralogix Solution: Breaking the Tradeoff

Coralogix rethinks observability from the ground up. Instead of forcing tradeoffs, it introduces three architectural innovations:

- TCO Optimizer – Smart routing of logs to three storage tiers

- Direct Archive Query – Instant querying of archived data with no rehydration

- Streama Technology – Real-time processing before storage, reducing volume and cost

At the Malta AWS User Group, I ran a live demo using hotel WiFi. Risky? Sure. But it worked flawlessly, highlighting that high performance doesn’t require an index.

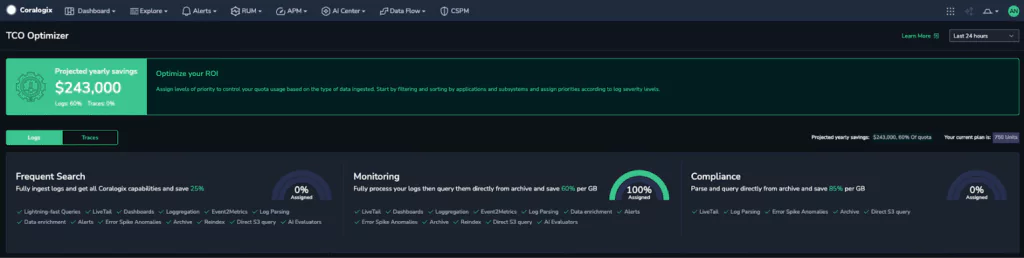

TCO Optimizer: $243K Annual Savings via Smarter Storage

This feature really got the Malta audience’s attention. I demonstrated how Coralogix’s TCO Optimizer routes all CDN and WAF logs to the Monitoring Tier, which is, under the hood, low-cost object storage.

Nothing in this demo is indexed. We are using a built-in cost-optimization tool within the Coralogix platform that lets you manage your data costs declaratively.

As shown in the screenshot, this real-world configuration projects annual savings of $243,000 by eliminating indexing entirely and using intelligent tiering:

- Frequent Search: For critical logs that require instant search

- Monitoring: For logs that power dashboards and alerts but don’t need millisecond-level search

- Compliance: For long-term retention that remains queryable
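Declarative tiering of this kind can be pictured as an ordered list of rules. Each rule matches on log attributes and names a target tier. The sketch below is a hypothetical model for illustration only. The `RoutingRule` type, the field names (`source`, `category`, `severity`), the first-match-wins semantics, and the default tier are all assumptions, not the TCO Optimizer's actual configuration format.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Mapping, Sequence

LogRecord = Mapping[str, object]


class Tier(Enum):
    FREQUENT_SEARCH = "frequent_search"  # critical logs that need instant search
    MONITORING = "monitoring"            # logs that power dashboards and alerts
    COMPLIANCE = "compliance"            # long-term retention, still queryable


@dataclass(frozen=True)
class RoutingRule:
    """Hypothetical declarative rule: a match condition plus a target tier."""
    name: str
    matches: Callable[[LogRecord], bool]
    tier: Tier


# Hypothetical policy mirroring the demo: all CDN and WAF logs go to Monitoring.
# Rules are evaluated in order, and the first match wins (an assumption).
POLICY: Sequence[RoutingRule] = (
    RoutingRule("edge-logs", lambda log: log.get("source") in ("cdn", "waf"), Tier.MONITORING),
    RoutingRule("audit-trail", lambda log: log.get("category") == "audit", Tier.COMPLIANCE),
    RoutingRule("critical-app", lambda log: log.get("severity") == "critical", Tier.FREQUENT_SEARCH),
)
DEFAULT_TIER = Tier.MONITORING  # assumption: unmatched logs also land in Monitoring


def route(log: LogRecord, policy: Sequence[RoutingRule] = POLICY) -> Tier:
    """Return the tier of the first matching rule, else the default tier."""
    for rule in policy:
        if rule.matches(log):
            return rule.tier
    return DEFAULT_TIER


if __name__ == "__main__":
    for sample in (
        {"source": "waf", "action": "block"},
        {"source": "app", "category": "audit"},
        {"source": "app", "severity": "critical"},
    ):
        print(sample, "->", route(sample).value)
```

Because the policy is data rather than code paths, moving a class of logs to a cheaper tier becomes a one-line policy change. That is the appeal of managing cost declaratively.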

In the demo, every single log from CDN and WAF sources, over 111 million entries, was processed and stored in the Monitoring Tier. All analytics, alerts, and dashboards were powered from this cost-effective tier, with no indexing required.

When I revealed this setup in Malta, the response was immediate. A CTO pulled me aside and admitted they’d been struggling with rising costs and aggressive data purging just to stay under budget.

Business outcome: Predictable, optimized costs with full data retention and zero indexing, eliminating budget surprises and blind spots.

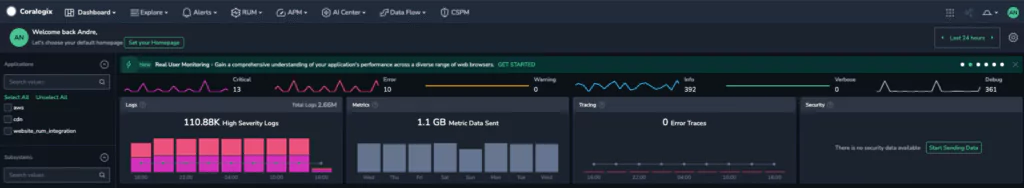

2.5 Million Daily Logs: Full Insight, No Sampling

In this demo, we process 2.5M+ logs per day from CDN and WAF sources. This data powers dashboards that track:

- Bot mitigation

- Account takeover attempts

- Suspicious login behaviors

An attendee in Malta asked: “Are you pre-filtering this data? Aggregating the logs in some way?”

I clarified that we’re not aggregating or discarding anything. We’re analyzing everything before storage, thanks to our Streama technology. This lets us handle massive log volumes without indexing while still supporting full analytics and real-time alerts.
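One way to picture "analyzing everything before storage" is a streaming pass that updates running metrics for every record as it arrives, then forwards the unmodified record to storage. This is a generic stream-processing sketch built on our own assumptions, not Coralogix's Streama implementation. The `InStreamAnalyzer` class, the `sink` callback, and the field names are hypothetical.

```python
from collections import Counter
from typing import Callable, Iterable, Mapping

LogRecord = Mapping[str, object]


class InStreamAnalyzer:
    """Hypothetical sketch: update metrics for every record, then forward it unchanged."""

    def __init__(self, sink: Callable[[LogRecord], None]) -> None:
        self.sink = sink  # hypothetical storage writer, e.g. an object-storage uploader
        self.total = 0
        self.status_counts: Counter = Counter()
        self.blocks_by_ip: Counter = Counter()

    def process(self, record: LogRecord) -> None:
        # Analysis happens here, before storage, on every record (no sampling).
        self.total += 1
        self.status_counts[record.get("status")] += 1
        if record.get("action") == "block":
            self.blocks_by_ip[record.get("client_ip")] += 1
        # Nothing is aggregated away or discarded: the raw record is stored as-is.
        self.sink(record)

    def process_all(self, records: Iterable[LogRecord]) -> None:
        for record in records:
            self.process(record)


if __name__ == "__main__":
    stored: list = []
    analyzer = InStreamAnalyzer(stored.append)
    analyzer.process_all([
        {"client_ip": "203.0.113.7", "status": 403, "action": "block"},
        {"client_ip": "203.0.113.7", "status": 403, "action": "block"},
        {"client_ip": "198.51.100.2", "status": 200, "action": "allow"},
    ])
    print(analyzer.total, dict(analyzer.blocks_by_ip), len(stored))
```

The metrics that drive dashboards and alerts exist as soon as the data arrives. The raw records still reach storage intact for later investigation.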

Outcome: Full security visibility. No sampling. No skipped insights.

Analyze Archived Logs Instantly – No Rehydration Needed

Most platforms make you rehydrate archived data before you can query it, which is slow, expensive, and cumbersome. Coralogix removes that step: you query archived data directly.
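To illustrate querying archives in place, the sketch below streams gzip-compressed, newline-delimited JSON files straight from an archive directory and filters them, without first loading them into an index. The file layout, naming pattern, and record format are assumptions for illustration. Coralogix's actual archive format and query engine are not shown here.

```python
import gzip
import json
import tempfile
from pathlib import Path
from typing import Callable, Iterator, Mapping


def query_archive(
    archive_dir: Path,
    predicate: Callable[[Mapping[str, object]], bool],
    pattern: str = "*.ndjson.gz",
) -> Iterator[dict]:
    """Stream matching records straight out of compressed archive files.

    There is no rehydration step: each file is decompressed on the fly and
    filtered line by line, without first copying anything into an index.
    """
    for path in sorted(archive_dir.glob(pattern)):
        with gzip.open(path, mode="rt", encoding="utf-8") as fh:
            for line in fh:
                if line.strip():
                    record = json.loads(line)
                    if predicate(record):
                        yield record


def write_archive_file(path: Path, records: list) -> None:
    """Demo helper: write records as gzip-compressed NDJSON."""
    with gzip.open(path, mode="wt", encoding="utf-8") as fh:
        for record in records:
            fh.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        archive = Path(tmp)
        write_archive_file(archive / "2024-06-01.ndjson.gz", [
            {"client_ip": "203.0.113.7", "status": 403},
            {"client_ip": "198.51.100.2", "status": 200},
        ])
        write_archive_file(archive / "2024-06-02.ndjson.gz", [
            {"client_ip": "203.0.113.7", "status": 403},
        ])
        hits = list(query_archive(archive, lambda r: r.get("status") == 403))
        print(len(hits), "matching records")
```

This sketch scans every file. A production engine would typically also skip files outside the queried time range rather than read them all.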

“You’re querying straight from the archive?” someone asked in Malta. “Exactly.”

This led to questions about how indices grow over time and need ongoing maintenance, all of which adds resources and cost as data volumes scale.

It’s the very reason Coralogix exists.

Even over weak WiFi, query performance was exceptional across three days’ worth of data.

Thanks to our architecture, teams can:

- Keep all telemetry in cost-effective storage

- Investigate security events without delays

- Maintain compliance without analytics bottlenecks

Outcome: Fast, thorough investigations, without rehydration costs or prep.

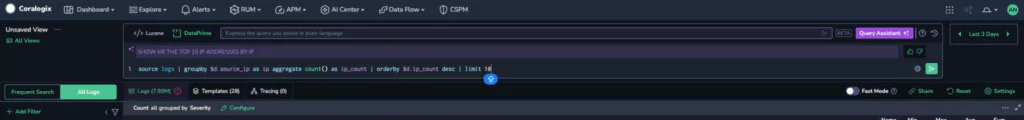

Explore Logs with Plain English – No SQL Required

Coralogix’s DataPrime interface lets you query using natural, readable syntax.

- Write queries in natural language

- Build insights without knowing query syntax

- Explore a week of logs in seconds

This feature received enthusiastic feedback in Malta, especially from leaders managing teams with mixed technical skills.

Outcome: More teammates can use observability data, increasing ROI and collaboration.

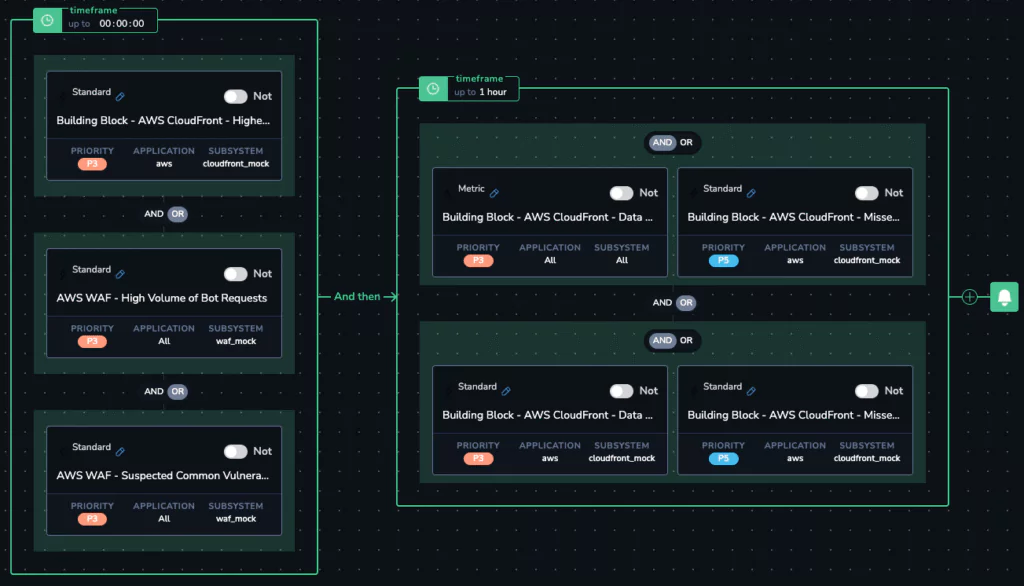

Complete Security Coverage Without Exceptions

Full data access opens up many opportunities. Take Flow Alerts, for example. Here’s how they enable teams to:

- Think like a security lead

- Build out realistic threat scenarios using:

  - Repeating threat patterns from the same IP

  - Geographic anomalies in traffic

  - Surges in 4xx/5xx errors

  - Unauthorized cache purges

  - High-risk bot attacks

These alerts, composed with AND/OR logic over defined time windows, run against 100% of your data, not just samples.
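Conceptually, such an alert combines simple per-window conditions with AND/OR logic. The sketch below models three of the scenarios above over a time window of parsed edge events. The `EdgeEvent` fields, condition names, thresholds, and the allowed-country set are hypothetical and do not reflect Coralogix's Flow Alerts configuration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Sequence


@dataclass(frozen=True)
class EdgeEvent:
    ts: datetime
    client_ip: str
    country: str
    status: int
    waf_action: str  # hypothetical field: "block" or "allow"


Condition = Callable[[Sequence[EdgeEvent]], bool]


def repeated_blocks_from_one_ip(threshold: int) -> Condition:
    """True if any single IP was blocked at least `threshold` times in the window."""
    def check(events: Sequence[EdgeEvent]) -> bool:
        blocks = Counter(e.client_ip for e in events if e.waf_action == "block")
        return any(n >= threshold for n in blocks.values())
    return check


def error_surge(min_ratio: float) -> Condition:
    """True if the share of 4xx/5xx responses in the window reaches `min_ratio`."""
    def check(events: Sequence[EdgeEvent]) -> bool:
        if not events:
            return False
        errors = sum(1 for e in events if 400 <= e.status < 600)
        return errors / len(events) >= min_ratio
    return check


def traffic_outside(allowed_countries: frozenset) -> Condition:
    """True if any request came from a country outside the expected set."""
    def check(events: Sequence[EdgeEvent]) -> bool:
        return any(e.country not in allowed_countries for e in events)
    return check


def all_of(*conditions: Condition) -> Condition:
    return lambda events: all(c(events) for c in conditions)


def any_of(*conditions: Condition) -> Condition:
    return lambda events: any(c(events) for c in conditions)


def evaluate(rule: Condition, events: Sequence[EdgeEvent], end: datetime, window: timedelta) -> bool:
    """Apply the rule to every event in (end - window, end]; nothing is sampled."""
    in_window = [e for e in events if end - window < e.ts <= end]
    return rule(in_window)


# Hypothetical rule: (repeated blocks from one IP AND an error surge) OR unexpected geography.
EXAMPLE_RULE = any_of(
    all_of(repeated_blocks_from_one_ip(3), error_surge(0.5)),
    traffic_outside(frozenset({"MT", "DE"})),
)
```

Each condition sees every event in the window. A burst that sampling might have thinned below the threshold still trips the alert.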

Outcome: Reduced risk, faster detection, and no blind spots.

The No-Index Advantage: Observability Without Compromise

Coralogix isn’t just a better tool. It’s a different architecture, one that eliminates the compromises of traditional observability:

- Complete data coverage, not samples

- Extended retention, not shortened windows

- Predictable costs, not unexpected overages

- Fast performance, not delayed rehydration

For CDN and WAF logs in particular, where volumes are huge and the stakes are high, the Coralogix architecture provides what others can’t: observability that actually scales.

Stop Compromising, Start Observing

You shouldn’t have to disable telemetry to control costs. You shouldn’t have to choose between insight and budget.

With Coralogix’s no-index architecture, you don’t have to.