Explainable AI: How it Works and Why You Can’t Do AI Without It

What Is Explainable AI (XAI)?

Explainable AI is the ability to understand the output of a model based on the data features, the algorithm used, and the environment of the model in question, in a human-comprehensible way. It makes it possible for humans to analyze and understand the results provided by ML models.

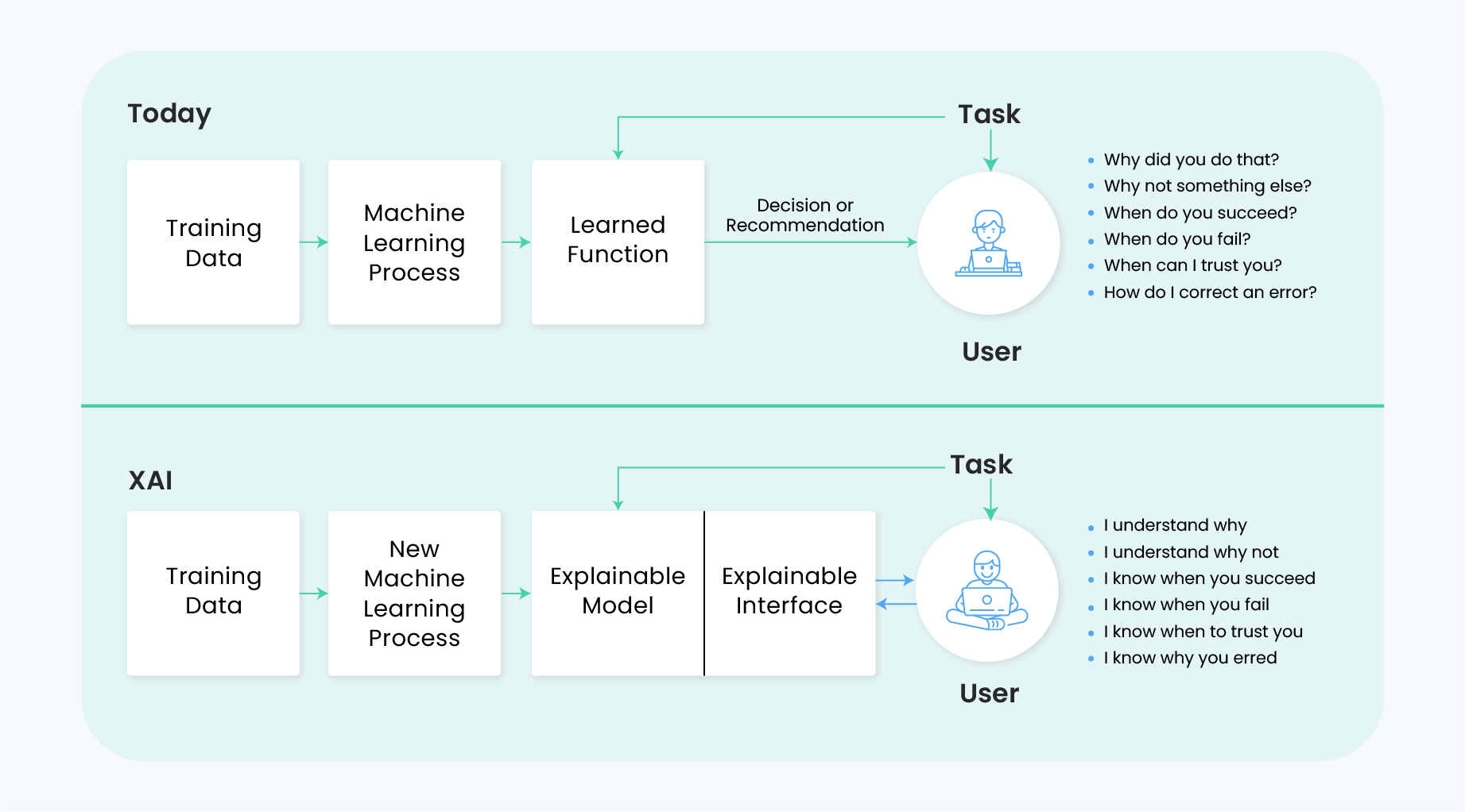

This illustration from the US agency DARPA shows the challenges addressed by XAI:

XAI is the solution to the problem of “black-box” models, which do not make it clear how they arrive at specific decisions. It is a set of methods and tools that allow humans to comprehend and trust the results of AI models and the output they create.

It’s important to point out that explainability isn’t just for machine learning engineers or data scientists – it’s for everyone. Any explanation of the model should be understandable for any stakeholder – regardless of whether they’re a data scientist, business owner, customer, or user. Therefore, it should be both simple and information-rich.

6 Ways Explainability Can Benefit Your Organization

- Trust: People generally trust things that they are familiar with or have pre-existing knowledge of. Therefore, if they don’t understand the inner workings of a model, they can’t trust it, especially in high-risk domains like healthcare or finance. It is impossible to trust a machine learning model without understanding how and why it makes its decisions and whether these decisions are justified.

- Regulations and Compliance: Regulations that protect consumers of technology increasingly require a strong level of explainability before a system can be offered to the general public. For instance, the European Union’s General Data Protection Regulation (Regulation (EU) 2016/679) describes, in Recital 71, a right “to obtain an explanation of the decision reached after such assessment and to challenge the decision” when a decision is based on automated processing. Also, data scientists, auditors, and business decision-makers alike must ensure their AI complies with company policies, industry standards, and government regulations.

- ML Fairness and Bias: Without model explainability, it is very hard to detect where unfairness or bias in a model’s predictions originates in the data, let alone correct it. Given how prevalent bias and vulnerabilities are in ML models, understanding how a model works should come before deploying it to production.

- Debugging: If you cannot identify which feature or which part of the algorithm is causing a faulty output, it is very hard to fix it. Hence, model explainability is essential when debugging a model during its development phase.

- Enhanced Control: When you understand how your models work, you can spot previously unknown vulnerabilities and flaws. That makes it easier to rapidly identify and correct mistakes in low-risk situations.

- Ease of Understanding and the Ability to Question: Understanding how the model’s features affect the model output helps you further question and improve the model.

Explainable AI Principles

The National Institute of Standards and Technology (NIST) is a US agency that is part of the Department of Commerce. NIST defines the following four principles of explainable AI:

- Explanation – an AI system must explain each output by providing reasoning, evidence, or support.

- Meaningful – any explanation an AI system provides must be meaningful to the human stakeholder assessing it. The explanation should make sense to users.

- Explanation accuracy – an AI system’s explanation must accurately reflect the process it used to arrive at the output.

- Knowledge limits – an AI system must operate only under the conditions for which it was designed. It must not provide output for cases that fall outside its training data and knowledge.

These principles aim to define the output expected from an explainable AI system. However, they do not specify how the system should reach this output.
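As one illustration of the knowledge limits principle, here is a minimal sketch of a wrapper that declines to answer when an input falls outside the range seen in training. The names `TRAINING_RANGE`, `predict_with_limits`, and `toy_model`, along with the bounds themselves, are hypothetical and chosen only for this example. A range check is just one possible way to approximate "the conditions for which the system was designed".

```python
# Hypothetical bounds of the data the model was trained on (assumed values).
TRAINING_RANGE = {"age": (18, 90), "income": (0.0, 500_000.0)}

def predict_with_limits(model, features):
    """Return (prediction, reason); prediction is None when the input is out of scope."""
    for name, (low, high) in TRAINING_RANGE.items():
        value = features.get(name)
        if value is None or not (low <= value <= high):
            return None, f"'{name}' is missing or outside the range seen in training"
    return model(features), "input is within the range seen in training"

def toy_model(features):
    # Stand-in for a real model; the formula is arbitrary.
    return features["income"] / 1000 - features["age"]

in_scope = predict_with_limits(toy_model, {"age": 40, "income": 60_000.0})
out_of_scope = predict_with_limits(toy_model, {"age": 130, "income": 60_000.0})

print(in_scope)
print(out_of_scope)
```

Returning a reason alongside the refusal also gives the caller something to show the user, which touches on the explanation principle.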

How Does Explainable AI Work?

XAI is categorized into the following three types:

- Explainable data – clarifying the data used to train the model, the type of data and content, the reasons it was chosen, how fairness was assessed, and whether it required any effort to remove bias.

- Explainable predictions – specifying all model features activated or used to reach a certain output.

- Explainable algorithms – revealing the individual layers the model consists of and explaining how they contribute to a prediction or an output.

Currently, explainable data is the only category that drives XAI for neural networks. Explainable algorithms and predictions are still undergoing research and development.

Here are the two main explainability approaches:

- Proxy modeling – involves using a different model, like a decision tree, to approximate another model. The result is an approximation, which can differ from the model’s results.

- Design for interpretability – involves designing models that are easy to explain. However, it can reduce the model’s overall accuracy or predictive power.
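The proxy modeling approach above can be sketched in a few lines. This example assumes a made-up `black_box` function that we can call but not inspect, probes it on a grid of inputs, and fits the simplest possible decision tree (a single-split "stump") to mimic its answers. All names and numbers are illustrative assumptions, not part of any particular library.

```python
# Hypothetical black-box model: we may call it, but we pretend we cannot read it.
def black_box(x1, x2):
    return 1 if (0.8 * x1 + 0.1 * x2 * x2) > 0.5 else 0

# Probe the black box on a grid of inputs and record its answers.
grid = [i / 10 for i in range(11)]  # 0.0, 0.1, ..., 1.0
samples = [(a, b) for a in grid for b in grid]
labels = [black_box(a, b) for a, b in samples]

def fit_stump(samples, labels):
    """Find the single-feature threshold rule that best mimics the labels."""
    best = None
    for feature in range(2):
        for threshold in grid:
            preds = [1 if s[feature] > threshold else 0 for s in samples]
            agreement = sum(p == y for p, y in zip(preds, labels)) / len(labels)
            if best is None or agreement > best[2]:
                best = (feature, threshold, agreement)
    return best

feature, threshold, fidelity = fit_stump(samples, labels)
print(f"Surrogate rule: predict 1 if x{feature + 1} > {threshold}")
print(f"Fidelity to the black box: {fidelity:.2%}")
```

The fidelity score measures how often the surrogate agrees with the original model. It stays below 100% here because a single split cannot capture the small effect of `x2`, which is exactly the approximation gap described above.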

XAI aims to enable users to understand the rationale behind a model’s decisions, but the techniques used to achieve explainability can severely limit the model. Common explainable techniques include Bayesian networks, decision trees, and sparse linear models.

Research is ongoing into ways to make conventional, unexplainable models more meaningful to users, for example by incorporating graph techniques such as knowledge graphs.

AI Explainability Challenges and Solutions

Here are the main challenges of XAI:

- Confidentiality – some algorithms are confidential, include a trade secret, or could pose a security risk if revealed. However, confidentiality makes it difficult to ensure an AI system does not learn a biased perspective due to the training data, goal function, or model.

- Complexity – in some cases, an AI algorithm is well understood by experts, but its high complexity makes it difficult to explain in layman’s terms. XAI can help solve this issue by creating alternative, simpler algorithms.

- Reasonableness – algorithms can sometimes use real data to make unreasonable or biased decisions, and XAI is expected to confirm that an AI system’s decisions are fair. However, gaps in the training data can hinder the ability to assess decision-making and to ensure an AI system does not acquire a biased world view.

- Fairness – in addition to understanding how an algorithm works, users must also learn the system’s moral or legal code. However, the perception of fairness is contextual and relies on the data fed to ML algorithms, making it difficult to explain these aspects of decision-making.

Here are two methods that can potentially help overcome the challenges of XAI in offering a meaningful explanation:

- Model-agnostic technique – this strategy applies to any algorithm or learning method and treats the internal workings of the model as an unknown black box. You can apply it to many model types, either to interpret general patterns across all data points (for example, using decision trees to approximate models) or to interpret individual data points.

- Model-specific technique – this strategy applies only to specific sets of algorithms and treats the inner workings of the model as a white box. You can apply it to those models to interpret general patterns across all data points or to interpret individual data points.
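To make the contrast concrete, the sketch below explains the same toy model in both ways. It assumes a hypothetical linear scoring model. The model-specific function reads the weights directly, while the model-agnostic function only calls `predict` and watches how the output moves. The feature names, weights, and the `delta` step are all illustrative assumptions.

```python
# Hypothetical linear scoring model; the weights are illustrative assumptions.
WEIGHTS = {"income": 0.6, "debt": -0.3, "age": 0.1}

def predict(row):
    return sum(WEIGHTS[name] * value for name, value in row.items())

def model_specific_importance():
    """White box: read the weights directly (only works for this model type)."""
    return {name: abs(w) for name, w in WEIGHTS.items()}

def model_agnostic_importance(predict_fn, row, delta=1.0):
    """Black box: nudge one input at a time and observe how the output moves."""
    base = predict_fn(row)
    effects = {}
    for name in row:
        nudged = dict(row, **{name: row[name] + delta})
        effects[name] = abs(predict_fn(nudged) - base)
    return effects

row = {"income": 2.0, "debt": 1.0, "age": 3.0}
specific = model_specific_importance()
agnostic = model_agnostic_importance(predict, row)

for name in WEIGHTS:
    print(name, round(specific[name], 3), round(agnostic[name], 3))
```

For a linear model the two views agree up to floating-point error. The agnostic function would keep working if `predict` were swapped for any other callable, whereas the specific one depends on the model exposing weights.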

Explainability Methods and Techniques

There are various explainability methods, such as SHAP, partial dependence plots, LIME, and ELI5. These can be categorized into three types: global explainability, local explainability, and cohort explainability.

Global Explainability

The global approach explains the model’s behavior holistically. Global explainability helps you know which features contribute most to the model’s overall predictions. During model training, it tells stakeholders which features the model used in making decisions. For example, the product teams looking at a recommendation model might want to know which features (relationships) motivate or engage customers most.
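One common way to get a global view is permutation importance: shuffle one feature across the whole dataset and measure how much the model’s error grows. The sketch below is a from-scratch illustration under stated assumptions. The `model` function, the feature names, and the synthetic data are invented for this example, and the targets are generated by the model itself so that the baseline error is zero.

```python
import random

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical recommendation-style model with three input features.
FEATURES = ["past_purchases", "session_length", "user_id_hash"]

def model(row):
    # The last feature is deliberately ignored by the model.
    return 3.0 * row[0] + 0.5 * row[1]

rows = [[rng.random() for _ in FEATURES] for _ in range(200)]
targets = [model(r) for r in rows]  # the model matches these targets exactly

def mse(data):
    return sum((model(r) - t) ** 2 for r, t in zip(data, targets)) / len(data)

def permutation_importance(column):
    """Error increase after shuffling one column across all rows."""
    shuffled = [r[column] for r in rows]
    rng.shuffle(shuffled)
    permuted = [r[:column] + [v] + r[column + 1:] for r, v in zip(rows, shuffled)]
    return mse(permuted) - mse(rows)

importance = {name: permutation_importance(i) for i, name in enumerate(FEATURES)}
ranking = sorted(importance, key=importance.get, reverse=True)
print(ranking)
```

A feature the model never uses gets an importance of exactly zero, because shuffling it cannot change any prediction. Real datasets add noise and correlated features, so results there need more careful interpretation.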

Local Explainability

Local interpretation helps you understand the model’s behavior in a local neighborhood, i.e., it explains, for an individual prediction, how each feature contributes to that prediction.

Local explainability helps in finding the root cause of a particular issue in production. It can also be used to help you discover which features are most impactful in making model decisions. This is important, especially in industries like finance and health where the individual features are almost as important as all features combined. For example, imagine your credit risk model rejected an applicant for a loan. With Local explainability, you can know why this decision was made and how to better advise the applicant. It also helps in understanding the suitability of the model for deployment.
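The loan example can be sketched as follows. This assumes a hypothetical linear credit-risk score and an assumed "typical applicant" baseline. For each feature, the code swaps the baseline value for the applicant’s value and records how the score changes. The weights, baseline, and threshold are invented for illustration, and this simple swap is only additive because the toy model is linear.

```python
# Hypothetical credit-risk model: a linear score with an approval threshold.
WEIGHTS = {"income": 0.5, "debt_ratio": -2.0, "late_payments": -0.8}
# Assumed "typical" applicant used as the reference point for explanations.
BASELINE = {"income": 4.0, "debt_ratio": 0.3, "late_payments": 1.0}
THRESHOLD = 0.0

def score(applicant):
    return sum(WEIGHTS[k] * applicant[k] for k in WEIGHTS)

def explain(applicant):
    """Score change when one baseline value is replaced by the applicant's value."""
    contributions = {}
    for name in WEIGHTS:
        swapped = dict(BASELINE, **{name: applicant[name]})
        contributions[name] = score(swapped) - score(BASELINE)
    return contributions

applicant = {"income": 3.0, "debt_ratio": 0.6, "late_payments": 3.0}
approved = score(applicant) >= THRESHOLD
contributions = explain(applicant)
main_reason = min(contributions, key=contributions.get)  # most negative contribution

print("approved:", approved)
print("main reason:", main_reason)
```

The most negative contribution points to the feature that hurt this applicant most, which is the kind of information needed to advise them. Libraries such as SHAP and LIME address the same question for non-linear models.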

Cohort Explainability

Somewhere between global and local explainability lies Cohort (or Segment) explainability. This explains how segments or slices of data contribute to the model’s prediction. During model validation, Cohort explainability helps explain the differences in how a model is predicting between a cohort where the model is performing well versus a cohort where the model is performing poorly. It also assists when trying to explain outliers as outliers occur in a local neighborhood or data slice.
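A minimal sketch of the cohort view, assuming a hypothetical linear model and a handful of invented rows tagged with a cohort label. It averages each feature’s contribution (here simply weight times value, an assumption that treats zero as the reference point) within each cohort, then reports the feature that drives predictions most in each one.

```python
from collections import defaultdict

# Hypothetical linear model and data; all names and values are illustrative.
WEIGHTS = {"tenure": 1.0, "basket_size": 0.5}

rows = [
    {"cohort": "new_user", "tenure": 0.1, "basket_size": 2.0},
    {"cohort": "new_user", "tenure": 0.2, "basket_size": 4.0},
    {"cohort": "returning", "tenure": 3.0, "basket_size": 2.0},
    {"cohort": "returning", "tenure": 5.0, "basket_size": 1.0},
]

def mean_contribution_by_cohort(rows):
    """Average per-feature contribution (weight * value) inside each cohort."""
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(int)
    for row in rows:
        counts[row["cohort"]] += 1
        for name, weight in WEIGHTS.items():
            sums[row["cohort"]][name] += weight * row[name]
    return {c: {n: s / counts[c] for n, s in feats.items()} for c, feats in sums.items()}

by_cohort = mean_contribution_by_cohort(rows)
top_driver = {c: max(feats, key=feats.get) for c, feats in by_cohort.items()}
print(top_driver)
```

In this toy data the two cohorts are driven by different features. Differences like this are what you would look for when comparing a cohort where the model performs well against one where it performs poorly.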

Note: Both Local and Cohort (Segment) explainability can be used to explain outliers.