Breaking down large, monolithic software, services, and applications into microservices has become a standard practice for developers. While this solves many issues, it also creates new ones: architectures composed of microservices bring their own unique challenges.

In this article, we are going to break down some of the most common of these challenges. More specifically, we are going to assess how observability-based solutions can overcome many of them.

Observability vs Monitoring

We don’t need to tell you that monitoring when working with microservices is crucial. This is obvious. Monitoring in any area of IT is the cornerstone of maintaining a healthy, usable system, software, or service.

A common misconception is that observability and monitoring are interchangeable terms. The difference is that while monitoring gives you a great picture of the health of your system, observability takes these findings and turns them into data you can act on.

Observability is where monitoring inevitably leads. A good monitoring practice will provide answers to your questions. Observability enables you to know what to ask next.

No App Is An Island

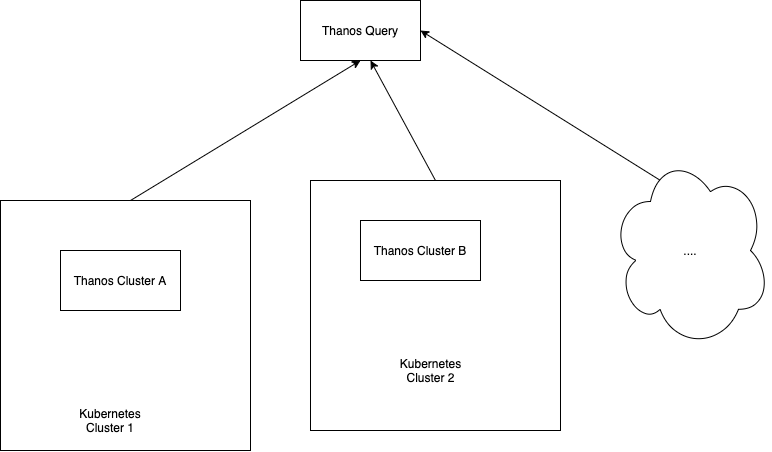

In a microservices architecture, developers can tweak and tinker with individual apps without worrying that a change will force a full redeploy. However, the larger the microservice architecture gets, the more issues this creates. When you have dozens of apps, worked on by as many developers, you end up running a service that relies on a multitude of different tools and coding languages.

A microservice architecture cannot function if the individual apps lack the ability to communicate effectively. For an app in the architecture to do its job, it will need to request data from other apps. It relies on smooth service-to-service interaction. This interaction can become a real hurdle when each app in the architecture was built with differing tools and code.

In a microservice-based architecture you can have thousands of components communicating with each other. Observability tools give developers, engineers, and architects the power to observe the way these services interact. This can be during specific phases of development or usage, or across the whole project lifecycle.

Of course, it is entirely possible to program communication logic into each app individually. With large architectures, though, this can be a nightmare. It is when microservice architectures reach significant size and complexity that our first observability solution comes into play: a service mesh.

Service Mesh

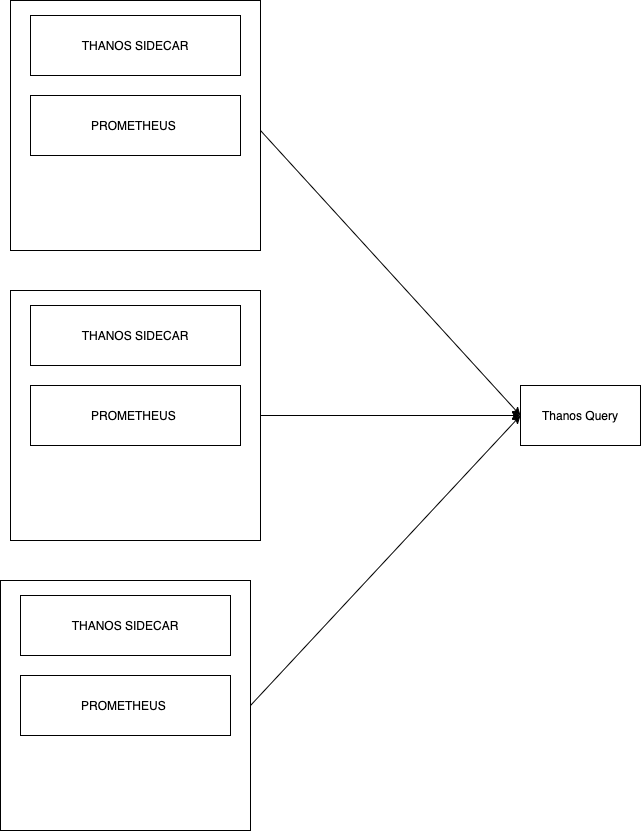

A service mesh builds inter-service communication into the infrastructure of your microservice architecture. It does this using a concept familiar to anybody with knowledge of networks: proxies.

What does a service mesh look like in your cluster?

Your service mesh takes form as an array of proxies within the architecture, commonly referred to as sidecars. Why? Because they run alongside each service instead of within them. Simple!

Rather than communicate directly, apps in your architecture relay information and data to their sidecar. The sidecar will then pass this to other sidecars, communicating using a common logic embedded into the architecture’s infrastructure.
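The relay described above can be sketched in a few lines of Python. This is a toy, in-process model rather than how Istio, Linkerd, or Consul are implemented: the `Sidecar` class, the `build_mesh` helper, and the service names are all hypothetical and exist only to show that apps talk to their own proxy while the proxies talk to each other.

```python
from typing import Callable, Dict, List, Tuple

Handler = Callable[[str], str]


class Sidecar:
    """Hypothetical proxy that sits next to one service."""

    def __init__(self, service: str, handler: Handler, mesh: Dict[str, "Sidecar"]):
        self.service = service
        self.handler = handler      # the app's own business logic
        self.mesh = mesh            # shared registry of every sidecar
        self.calls: List[Tuple[str, str]] = []  # (source, target) pairs seen

    def send(self, target: str, payload: str) -> str:
        """Outbound path: the app hands the request to its own sidecar."""
        self.calls.append((self.service, target))
        return self.mesh[target].receive(payload)

    def receive(self, payload: str) -> str:
        """Inbound path: the sidecar passes the request to its app."""
        return self.handler(payload)


def build_mesh(handlers: Dict[str, Handler]) -> Dict[str, Sidecar]:
    """Create one sidecar per service, all sharing the same registry."""
    mesh: Dict[str, Sidecar] = {}
    for name, handler in handlers.items():
        mesh[name] = Sidecar(name, handler, mesh)
    return mesh


mesh = build_mesh({
    "orders": lambda payload: f"order:{payload}",
    "inventory": lambda payload: f"stock:{payload}",
})
# The orders app never addresses inventory directly; its sidecar does.
reply = mesh["orders"].send("inventory", "sku-1")
```

Because every call passes through `send`, the sidecar is also a natural place to record who talked to whom, which is where the observability benefit comes from.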

What does it do for you?

Without a service mesh, every app in your architecture needs to have communication logic coded in manually. Service meshes remove (or at least severely diminish) the need for this. Also, a service mesh makes it a lot easier to diagnose communication errors. Instead of scouring through every service in your architecture to find which app contains the failed communication logic, you instead only have to find the weak point in your proxy mesh network.

A single thing to configure

Implementing new policies is also simplified. Once out there in the mesh, new policies can be applied throughout the architecture. This goes a long way to safeguarding yourself from scattergun changes to your apps throwing the wider system into disarray.

Commonly used service meshes include Istio, Linkerd, and Consul. Using any of these will minimize downtime (by diverting requests away from failed services), provide useful performance metrics for optimizing communication, and allow developers to keep their eye on adding value without getting bogged down in connecting services.
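To make "diverting requests away from failed services" concrete, here is a minimal sketch of the idea. It is an assumption-laden illustration, not the routing logic of any real mesh: `FailoverRouter`, the `max_failures` threshold, and the two stand-in instances are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Instance = Tuple[str, Callable[[str], str]]


class FailoverRouter:
    """Hypothetical router that skips instances that keep failing."""

    def __init__(self, instances: List[Instance], max_failures: int = 2):
        self.instances = list(instances)
        self.max_failures = max_failures
        self.failures: Dict[str, int] = {name: 0 for name, _ in self.instances}

    def healthy(self) -> List[Instance]:
        """Instances that have not yet reached the failure threshold."""
        return [(n, f) for n, f in self.instances
                if self.failures[n] < self.max_failures]

    def call(self, request: str) -> Tuple[str, str]:
        """Try healthy instances in order; return (instance name, response)."""
        for name, func in self.healthy():
            try:
                result = func(request)
            except ConnectionError:
                self.failures[name] += 1   # count the failure, try the next one
                continue
            self.failures[name] = 0        # a success resets the counter
            return name, result
        raise RuntimeError("no healthy instances available")


def broken(request: str) -> str:
    raise ConnectionError("instance a is down")


def working(request: str) -> str:
    return request.upper()


router = FailoverRouter([("a", broken), ("b", working)])
first = router.call("ping")    # a fails once, b answers
second = router.call("ping")   # a fails again and reaches the threshold
healthy_names = [name for name, _ in router.healthy()]
```

After the second call, instance `a` has hit the threshold and is no longer offered any traffic. A real mesh would also probe failed instances and bring them back into rotation, which this sketch leaves out.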

The Three Pillars Of Observability

It is generally accepted that there are three important pillars needed in any decent observability solution. These are metrics, logging, and traceability.

By adhering to these pillars, observability solutions can give you a clear picture of an individual app in an architecture or the infrastructure of the architecture itself.
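As a rough illustration of how the three pillars relate, the sketch below has a single request produce one of each: a counter (metric), a log line, and a timed span (trace). Everything here is a stand-in built on the Python standard library; the `handle_request` function, the `metrics` counter, and the `spans` list are hypothetical and do not represent any particular observability product.

```python
import logging
import time
import uuid
from collections import Counter
from typing import Dict, List, Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("checkout")

metrics: Counter = Counter()   # pillar 1: numeric measurements
spans: List[Dict] = []         # pillar 3: timed, correlated units of work


def handle_request(trace_id: Optional[str] = None) -> str:
    """Handle one request and emit a metric, a log line, and a span."""
    trace_id = trace_id or uuid.uuid4().hex
    start = time.perf_counter()

    # Pillar 2: a log line that carries the trace ID for later correlation.
    log.info("handling request trace_id=%s", trace_id)
    metrics["requests_total"] += 1

    spans.append({
        "trace_id": trace_id,
        "name": "handle_request",
        "duration_s": time.perf_counter() - start,
    })
    return trace_id


returned_id = handle_request("abc123")
```

The shared `trace_id` is what ties the three together: it lets you move from a spike in a metric to the logs and spans of the requests behind it.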

An important note is that this generates a lot of data. Harvesting and administrating this data is time-consuming if done manually. If this process isn’t automated it can become a bottleneck in the development or project lifecycle. The last thing anybody wants is a solution that creates more problems than it solves.

Fortunately, automation and Artificial Intelligence are saving thousands of man hours every day for developers, engineers, and anybody working in or with IT. Microservices are no exception to this revolution, so there are of course plenty of tools available to ensure tedious data wrangling doesn’t become part of your day-to-day life.

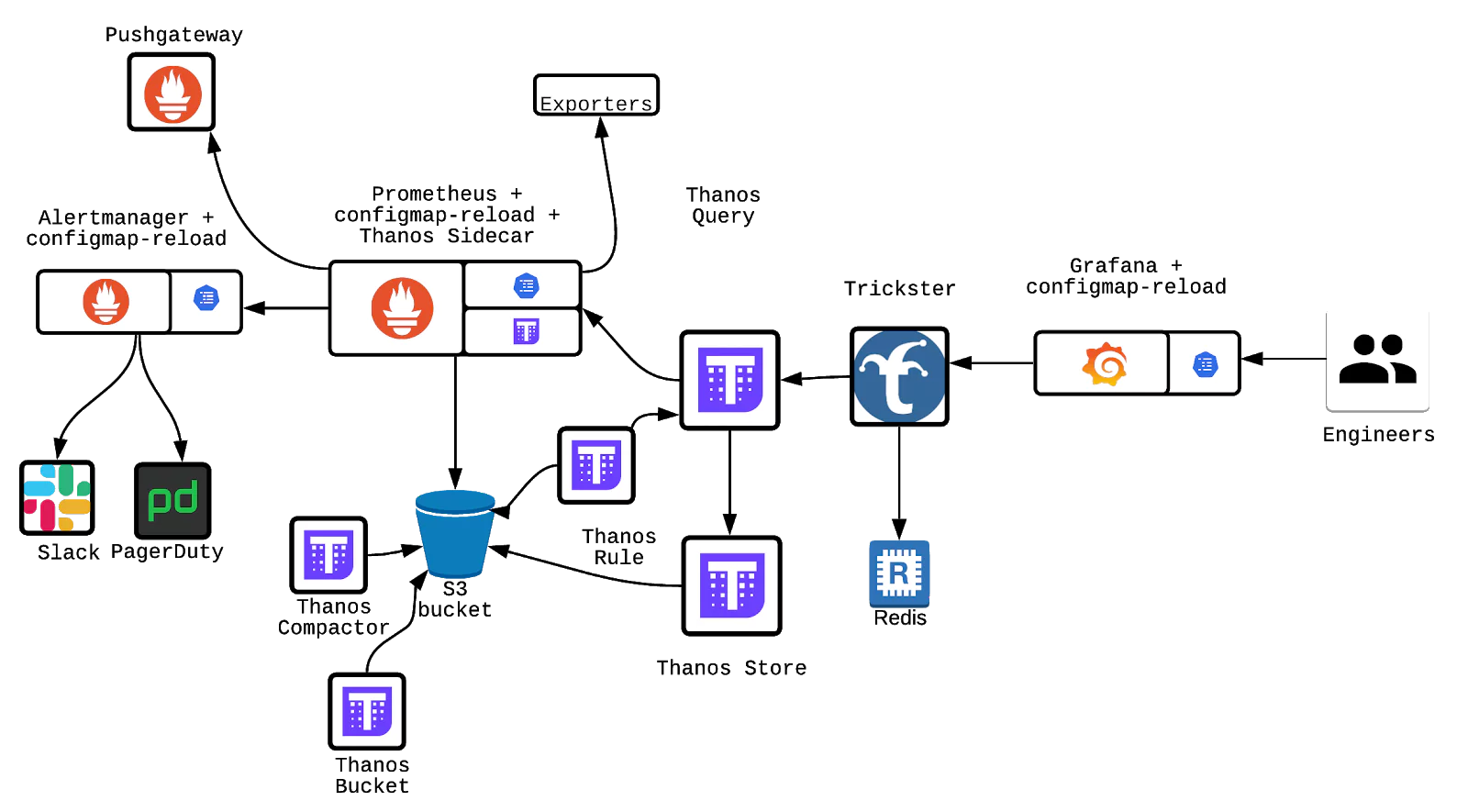

Software Intelligence Platforms

Having a single agent provide a real-time topology of your microservice architecture has no end of benefits. Using a host of built-in tools, a Software Intelligence Platform can easily become the foundation of the smooth delivery of any project utilizing a large/complex microservice architecture. These platforms are designed to automate as much of the observation and analysis process as possible, making everything from initial development to scaling much less stressful.

A great software intelligence platform can:

- Automatically detect components and dependencies.

- Understand which component behaviors are intended and which aren’t wanted.

- Identify failures and their root cause.
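The first and last items in this list can be sketched with a small dependency graph. This is a deliberately naive illustration under stated assumptions, not how any commercial platform works: the `build_dependencies` and `root_causes` helpers and the service names are hypothetical, and "root cause" is simplified to "a failing service with no failing dependencies of its own".

```python
from typing import Dict, Iterable, Set, Tuple


def build_dependencies(calls: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Derive a caller -> callees graph from observed (caller, callee) pairs."""
    graph: Dict[str, Set[str]] = {}
    for caller, callee in calls:
        graph.setdefault(caller, set()).add(callee)
        graph.setdefault(callee, set())
    return graph


def root_causes(graph: Dict[str, Set[str]], failing: Set[str]) -> Set[str]:
    """Failing services whose own dependencies are all healthy."""
    return {svc for svc in failing if not (graph.get(svc, set()) & failing)}


observed_calls = [
    ("gateway", "orders"),
    ("orders", "inventory"),
    ("orders", "payments"),
]
graph = build_dependencies(observed_calls)

# gateway and orders fail only because inventory, further down, is failing.
suspects = root_causes(graph, failing={"gateway", "orders", "inventory"})
```

Here `suspects` contains only `inventory`, since the other two failing services each depend on something that is itself failing.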

Tracking Requests In Complex Environments

Since the first days of software engineering and development, traceability of data has been vital.

Even in monolith architectures, keeping track of the origin points of data, documentation, or code can be a nightmare. In a complex microservice environment composed of potentially hundreds of apps, it can feel impossible.

This is one of the few areas in which a monolith has an operational advantage. When literally every bundle of code is compiled in a single artifact, troubleshooting or request tracking through the lifecycle is more straightforward. Everything is in the same place.

In an environment as complex and multidimensional as a microservices architecture, documentation and code bounce from container to container. Requests travel through a labyrinth of apps. Keeping tabs on all this migration is vital if you don’t want debugging and troubleshooting to be the bulk of your workload.

Thankfully, there are plenty of tools available (many of which are open source) to ensure tracking requests through the entire life-cycle is a breeze.

Jaeger and Zipkin: Traceability For Microservices

When developing microservices it’s likely you’ll be using a stack containing some DevOps tooling. By 2020 it is safe to assume that most projects will at least be utilizing containerization of some description.

Containers and microservices are often spoken of in the same context. There is a good reason for this. Many of the open source traceability tools developed for one also had the other very much in mind. The question of which best suits your needs largely depends on your containerization stack.

If you are leaning into Kubernetes, then Jaeger will be the most functionally compatible. In terms of what it does, Jaeger has features like distributed transaction monitoring and root cause analysis that can be deployed across your entire system. It can scale with your environment and avoids single points of failure by way of supporting a wide variety of storage back ends.

If you’re more Docker-centric, then Zipkin is going to be much easier to deploy. This ease of use is aided by the fact that Zipkin runs as a single process. There are several other differences, but functionally Zipkin fills a similar need to Jaeger. They both allow you to track requests, data, and documentation across an entire life-cycle in a containerized, microservices architecture.
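The core idea behind tracking a request across services is that each service passes a trace identifier on to the next one it calls. The sketch below shows that idea only. It does not use the Jaeger or Zipkin client APIs, and the header names `x-trace-id` and `x-span-id`, the `SPANS` list, and the three services are hypothetical placeholders.

```python
import uuid
from typing import Dict, List

SPANS: List[Dict] = []  # stand-in for the tracing backend


def start_span(service: str, headers: Dict[str, str]) -> Dict:
    """Record a span, reusing the caller's trace ID if one was sent."""
    span = {
        "trace_id": headers.get("x-trace-id") or uuid.uuid4().hex,
        "span_id": uuid.uuid4().hex[:16],
        "parent_id": headers.get("x-span-id"),  # None for the first hop
        "service": service,
    }
    SPANS.append(span)
    return span


def outgoing_headers(span: Dict) -> Dict[str, str]:
    """Headers a service attaches to its downstream calls."""
    return {"x-trace-id": span["trace_id"], "x-span-id": span["span_id"]}


def inventory(headers: Dict[str, str]) -> None:
    start_span("inventory", headers)


def orders(headers: Dict[str, str]) -> None:
    span = start_span("orders", headers)
    inventory(outgoing_headers(span))


def gateway() -> str:
    span = start_span("gateway", {})   # no incoming headers: a new trace
    orders(outgoing_headers(span))
    return span["trace_id"]


trace_id = gateway()
path = [s["service"] for s in SPANS if s["trace_id"] == trace_id]
```

Filtering the recorded spans by `trace_id` reconstructs the path the request took, and the `parent_id` links give the order of the hops.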

Logging Frameworks

The importance of logging cannot be overstated. If you don’t have effective systems for logging errors, changes, and requests, you are asking for nothing short of chaos and anarchy. As you can imagine, in a microservices architecture potentially containing hundreds of apps from which bugs and crashes can originate, a decent logging solution is a high priority.

Effective logging observability within a microservices architecture requires a standardized, system-wide approach to logging. Logging frameworks are a great way to achieve this. Logging is so fundamental that some of the earliest open source tools available were logging frameworks. There are plenty to choose from, and by this point they all have long histories and solid communities for support and updates.

The tool you need really boils down to your individual requirements and the language/framework you’re developing in. If you’re logging in .NET then something like NLog, log4net, or Serilog will suit. For Java your choice may be between Log4j and Logback. There are logging frameworks targeting most programming languages. Regardless of your stack, there’ll be something available.
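Whichever framework you pick, the "standardized, system-wide approach" usually means every service emits the same fields in the same format. As one possible illustration, the sketch below uses Python's standard `logging` module rather than the .NET or Java frameworks named above. The `JsonFormatter` class, the `build_logger` helper, and the field names are assumptions chosen for the example.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render every record as one JSON object with a fixed set of fields."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "service": self.service,
            "message": record.getMessage(),
            # Optional field supplied through logging's `extra` argument.
            "trace_id": getattr(record, "trace_id", None),
        })


def build_logger(service: str, stream) -> logging.Logger:
    """Return a logger that writes structured lines for one service."""
    logger = logging.getLogger(service)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter(service))
    logger.addHandler(handler)
    logger.propagate = False   # avoid duplicate output via the root logger
    return logger


buffer = io.StringIO()         # stands in for stdout or a log shipper
orders_log = build_logger("orders", buffer)
orders_log.info("order placed", extra={"trace_id": "abc123"})
entry = json.loads(buffer.getvalue())
```

If every app in the architecture shares a formatter like this, downstream tooling can parse and filter logs from any service without per-app rules.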

Centralizing Log Storage

Now that your apps have a framework in place to log deployments, requests, and everything else, you need somewhere to keep them until you need them. Usually, this is when something has gone wrong. The last thing you want to be doing on the more stressful days is wading through a few dozen apps-worth of log data.

Like almost every problem on this list, the need for observability in your logging process comes down to the complexity of microservices. In a monolith architecture, logs will be pushed from a few sources at most. In a microservice architecture, potentially hundreds of individual apps are generating log data every second. You need to know not just what’s happened, but where it happened amongst the maze of inter-service noise.

Rather than go through the incredibly time-consuming task of building a stack to monitor all of this, my recommendation is to deploy a log management and analysis tool like Coralogix to provide a centralized location to monitor and analyze relevant log data from across the entirety of your architecture.

When errors arise or services fail, any of the dozens of options available will quickly inform you of both the nature of the problem and its source. Log management tools hold your data in a single location. No more will you have to travel app to app searching for the minor syntax error which brought down your entire system.
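The value of holding logs in a single location is easiest to see with a toy model. The sketch below is not the API of Coralogix or any other log management tool. The `LogStore` class, its methods, and the sample entries are hypothetical, and a real system would add persistence, indexing, and retention.

```python
from collections import Counter
from typing import Dict, List, Optional


class LogStore:
    """Hypothetical in-memory stand-in for a centralized log store."""

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def ingest(self, service: str, level: str, message: str,
               trace_id: Optional[str] = None) -> None:
        """Accept one log entry from any service."""
        self.entries.append({"service": service, "level": level,
                             "message": message, "trace_id": trace_id})

    def search(self, **filters: str) -> List[Dict]:
        """Return entries whose fields match every given filter."""
        return [e for e in self.entries
                if all(e.get(key) == value for key, value in filters.items())]

    def errors_by_service(self) -> Counter:
        """Count ERROR entries per service to show where failures cluster."""
        return Counter(e["service"] for e in self.entries
                       if e["level"] == "ERROR")


store = LogStore()
store.ingest("gateway", "INFO", "request received", trace_id="t1")
store.ingest("orders", "ERROR", "inventory call timed out", trace_id="t1")
store.ingest("inventory", "ERROR", "database unreachable", trace_id="t1")
store.ingest("payments", "INFO", "health check ok")

one_request = store.search(trace_id="t1")
error_counts = store.errors_by_service()
```

A single query by `trace_id` returns the whole story of one request across three services, which is the step that otherwise means opening each app's logs in turn.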

In Short

There are limitless possibilities available for implementing a decent observability strategy when you’re working with microservices. We haven’t even touched upon many cloud-focused solutions, for example, or delved into the realms of web or mobile app-specific tools.

If you’re looking for the short answer of how to go about overcoming microservices issues caused by poor observability, it’s this: find a solution that allows you to track relevant metrics in organized logs so everything is easily traceable.

Of course, this is highly oversimplified, but if you’re looking purely for a nudge in the right direction the above won’t steer you wrong. With so many considerations and solutions available, it can feel overwhelming when weighing up your options. As long as you remember what you set out to do, the journey from requirement to deployment doesn’t have to be an arduous one.

Microservices architectures are highly complex at a topological level. Always keep that in mind when considering your observability solutions. The goal is to enable a valuable analysis of your data by overcoming this innate complexity of microservices. That is what good observability practice brings to the table.