Serverless computing is becoming increasingly popular in software development because it makes development more flexible and lets teams test and run solutions with minimal overhead cost. Vendors like AWS provide various tools that enable businesses to develop and deploy solutions without investing in or setting up hardware infrastructure.

In this post, we’ll cover the different services AWS provides for supporting serverless computing.

AWS Service Descriptions

To break down which service to choose, we will first look at each of these services’ capabilities and tradeoffs. Each of the tools described here works as a serverless computing engine, but they each have different features that will provide advantages and disadvantages depending on your specific use case.

Ideally, you will understand your project’s requirements before choosing a service so you can be sure the service meets your needs. Here is a brief description of each of the services we will compare:

Elastic Compute Cloud (EC2)

EC2 is an implementation of infrastructure-as-a-service (IaaS) that allows users to deploy virtual computing environments or instances. You can create instances using templates of the software configuration called Amazon Machine Images (AMIs).

EC2 is highly customizable, making it a powerful option for experienced users. That flexibility comes with additional complexity: setting up and maintaining EC2 takes more work than the other solutions. AWS uses EC2 to provide both Fargate and Lambda services to AWS users.

Fargate with ECS and EKS

AWS Fargate provides a way to deploy your serverless solution using containers. Fargate is an implementation of container-as-a-service (CaaS). Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS) are other AWS-managed services used to manage containers. In this article, both ECS and EKS are discussed as separate deployment options since they have different features.

Running Fargate on either ECS or EKS allows you to run your application without provisioning, monitoring, or managing the compute infrastructure, as you would have to when using EC2. Applications are packaged as Docker containers that either ECS or EKS can then launch.

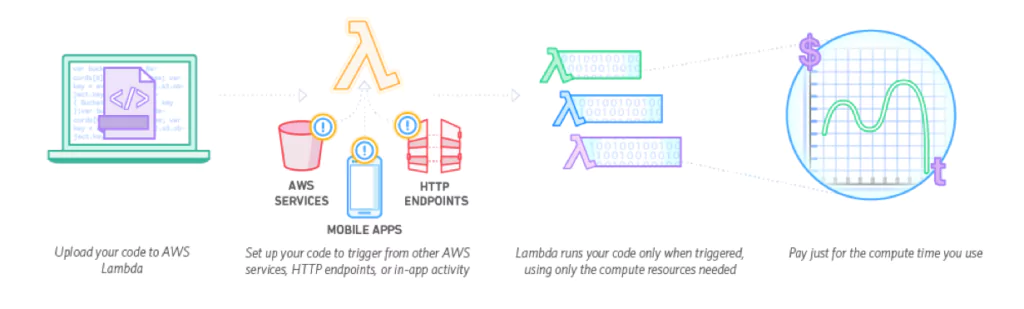

Lambda

Lambda is a compute service that runs your code without requiring you to provision or manage servers. Strictly speaking, it is an implementation of function-as-a-service (FaaS). It is a stateless service that runs on automatically provisioned infrastructure when it is triggered. Many AWS services have a built-in ability to trigger Lambda functions, making Lambda highly versatile in AWS designs.

[table id=65 /]

Scalability and Launch Methodology

Each compute service is able to scale to meet the demands of the workload, though each type will scale using a different method. In the case of Fargate and EC2, the user is required to set up autoscaling, while in Lambda this will occur automatically.

EC2

Users may automatically add or remove EC2 instances by configuring EC2 auto-scaling. There are different auto-scaling types used for EC2, including fleet management, scheduled scaling, dynamic scaling, and predictive scaling.

Fleet management automatically detects EC2 instances that are unhealthy or impaired in some way. AWS automatically terminates the detected instances and replaces them with new ones to ensure consistent behavior of your compute function.

If the load on EC2 is predictable, users can configure scheduled scaling to add or remove EC2 instances ahead of time. Otherwise, typically users will employ dynamic auto-scaling and predictive auto-scaling individually or together. Dynamic auto-scaling uses the demand curve of your application to determine the required provisioning of your instance. Predictive auto-scaling uses machine learning implemented by AWS to schedule EC2 instance scaling based on anticipated load changes.

Fargate with ECS

Fargate runs tasks (which carry out the required compute logic) within a cluster. The ECS scheduler is responsible for ensuring tasks run and gives options for how a scheduler may instantiate the task. Scheduler options include: using a service to run and maintain a user-specified number of tasks simultaneously, using a CloudWatch Events rule to trigger a task periodically, creating a custom scheduler within ECS which can use third-party schedulers, or manually running a task using the RunTask command from the AWS Console or another AWS service.

Using ECS, tasks may run as always-on. Running them under an ECS service also ensures that a task restarts with a cleared memory cache if it fails. While Fargate with ECS cannot be triggered by as many other AWS services as Lambda can, continuously running the task allows you to use library functions to listen for events in other services. For example, a long-running task can receive events from Amazon Kinesis and Amazon SQS.

ECS also has auto-scaling capabilities using the AWS Application Auto Scaling service. ECS publishes metrics to CloudWatch, including average CPU and memory usage. These are then used to scale out services according to settings to help deal with peak demand and low utilization periods. Users may also schedule scaling for known changes in usage.
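To make the scaling settings above more concrete, here is a minimal sketch of what a CPU-based target tracking policy for an ECS service might look like when expressed as request parameters for Application Auto Scaling. The cluster name, service name, policy naming scheme, and cooldown values are assumptions chosen for illustration, not recommendations.

```python
import json


def ecs_cpu_target_tracking_policy(cluster, service, target_cpu_percent):
    """Build request parameters for an Application Auto Scaling policy.

    The policy asks ECS to add or remove tasks so that the service's
    average CPU utilization stays near target_cpu_percent.
    """
    return {
        "PolicyName": f"{service}-cpu-target-tracking",  # hypothetical naming scheme
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": float(target_cpu_percent),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
            },
            "ScaleOutCooldown": 60,   # seconds; assumed value
            "ScaleInCooldown": 300,   # seconds; assumed value
        },
    }


# "demo-cluster" and "demo-service" are placeholder names.
policy = ecs_cpu_target_tracking_policy("demo-cluster", "demo-service", 60)
print(json.dumps(policy, indent=2))
```

If you use boto3, a dictionary like this could be passed to the `put_scaling_policy` call of the `application-autoscaling` client once the service has been registered as a scalable target. Treat the values as a starting point to tune against your own CloudWatch metrics.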

Fargate with EKS

Amazon EKS allows the configuration of two types of auto-scaling on Fargate pods running in the cluster. The EKS cluster can scale with both the Kubernetes Vertical Pod Autoscaler (VPA) and the Kubernetes Horizontal Pod Autoscaler (HPA).

The VPA automatically adjusts the CPU and memory settings for your Fargate pods. These adjustments help improve resource allocation within your EKS cluster and free up CPU and memory for other pods. To use the VPA, users must install the Kubernetes Metrics Server, which tracks resource usage in the cluster. The VPA then uses this information to adjust the pod configuration.

Horizontal scaling adds or removes Fargate pods based on CPU utilization. During setup, users configure a target utilization percentage, and the HPA automatically scales the number of pods to try to hit that target. Using the Kubernetes aggregation layer, users can also plug in third-party tools such as Prometheus to aid in scaling and to provide visibility into usage.
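The HPA's scaling decision can be sketched in a few lines. The Kubernetes documentation describes the core calculation as the current replica count multiplied by the ratio of the observed metric to the target, rounded up, with no change when the ratio is within a small tolerance (0.1 by default). The function below is a simplified illustration of that rule; the function name and the replica bounds are assumptions for the example, and the real controller also handles details such as pod readiness and stabilization windows.

```python
import math


def hpa_desired_replicas(current_replicas, current_utilization, target_utilization,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the Horizontal Pod Autoscaler's replica calculation."""
    ratio = current_utilization / target_utilization
    # Skip scaling when usage is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(hpa_desired_replicas(4, 90, 60))  # usage above target: scale out
print(hpa_desired_replicas(4, 30, 60))  # usage below target: scale in
print(hpa_desired_replicas(4, 63, 60))  # within tolerance: no change
```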

Once tasks are running in pods, functionality within the Fargate task is similar to using ECS. Users may keep at least some pods always on, running functions that track events from other AWS services such as Amazon Kinesis or Amazon SQS. Again, there are fewer options for automatically triggering Fargate functions running in pods than are available for Lambda.

Lambda

Lambda functions are scalable by design. Lambda launches more instances automatically to handle increases in demand. You can limit the number of concurrently running instances of a function if required, from as few as one up to the account limit, which defaults to 1,000 concurrent executions per AWS account in each region. AWS may increase this limit upon request.
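To judge whether a workload is likely to run into the concurrency limit, a common rule of thumb is that concurrency is roughly the request rate multiplied by the average duration of each invocation. The sketch below applies that estimate; the function names are ours, and the traffic figures are made-up examples.

```python
import math


def estimated_concurrency(requests_per_second, avg_duration_seconds):
    """Estimate how many Lambda instances are busy at the same time."""
    return math.ceil(requests_per_second * avg_duration_seconds)


def fits_within_limit(requests_per_second, avg_duration_seconds, limit=1000):
    """Check the estimate against a concurrency limit (1,000 by default)."""
    return estimated_concurrency(requests_per_second, avg_duration_seconds) <= limit


# 200 requests per second, each taking about half a second.
print(estimated_concurrency(200, 0.5), fits_within_limit(200, 0.5))
# 5,000 requests per second at the same duration would need a higher limit.
print(estimated_concurrency(5000, 0.5), fits_within_limit(5000, 0.5))
```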

When first launched, a Lambda instance requires extra time, known as cold-start time, to set up the function’s instance. Once an instance exists, it can handle subsequent invocations more quickly. Idle instances are kept for a limited time, often cited as 15 to 30 minutes, after which they are shut down if not in use.

You can invoke Lambda functions directly using the AWS CLI, the Console, or an AWS SDK, or use one of the many AWS services that can trigger them. These include API Gateway, DynamoDB Streams, S3 event notifications, SQS, and many others. For a complete list, see the AWS website.
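As an illustration of a triggered function, here is a minimal sketch of a Python Lambda handler that processes a batch of SQS messages. SQS delivers messages in a `Records` list, with the message text in each record's `body`. The JSON message format and the `order_id` field are assumptions made up for this example.

```python
import json


def handler(event, context):
    """Entry point that Lambda calls; processes each SQS record in the batch."""
    processed = []
    for record in event.get("Records", []):
        payload = json.loads(record["body"])   # assumes the message body is JSON
        processed.append(payload["order_id"])  # hypothetical field
    return {"processed": processed}


if __name__ == "__main__":
    # A hand-written event that mimics the shape of an SQS trigger.
    sample_event = {
        "Records": [{"messageId": "1", "body": json.dumps({"order_id": "A-100"})}]
    }
    print(handler(sample_event, None))
```

Because the handler is a plain function, you can exercise it locally with a hand-built event before wiring up the real trigger.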

Compute Costs

Compute costs in AWS are typically a driving factor in which service to use for creating functions. In general, there is a break-even point for each type of function where one compute service will be the same cost as another.

This point is based on how many requests your function must handle in a period of time, how long each computation must run for, and the amount of memory required to handle your workload. Below is a breakdown of the pricing types available for the compute service types and when it is ideal to choose one option over another.
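The break-even idea can be shown with simple arithmetic. The sketch below compares an always-on option with a fixed monthly cost against a pay-per-request option. All prices are placeholders invented for the example, not AWS rates, so substitute the figures from your own estimate.

```python
def break_even_requests(fixed_monthly_cost, cost_per_request):
    """Monthly request count at which both options cost the same."""
    if cost_per_request <= 0:
        raise ValueError("cost_per_request must be positive")
    return fixed_monthly_cost / cost_per_request


def cheaper_option(monthly_requests, fixed_monthly_cost, cost_per_request):
    """Name the cheaper option for a given monthly request volume."""
    pay_per_use_cost = monthly_requests * cost_per_request
    return "pay-per-request" if pay_per_use_cost < fixed_monthly_cost else "always-on"


# Placeholder prices: 30 USD per month for an always-on instance,
# 0.000002 USD per request for a pay-per-request function.
print(round(break_even_requests(30.0, 0.000002)))
print(cheaper_option(1_000_000, 30.0, 0.000002))
print(cheaper_option(50_000_000, 30.0, 0.000002))
```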

EC2

When running EC2, the instance requires time to set up resources before use, so the first startup has some latency. Once the instance is running, repeated use avoids that initial latency. With EC2, users pay for computing capacity while the instance is running.

Among other considerations, users should consider the tradeoff between instance startup latency and cost in the system’s design. EC2 is well suited for long-running tasks, and a required long-running task may be less costly running in EC2 than other services.

AWS has four pricing models for the user to choose from. These models are On-Demand, Spot Instances, Reserved Instances, and Dedicated Hosts.

With On-Demand pricing, similar to Lambda and Fargate, you pay for what you use at a rate that depends on the region where your EC2 instance is deployed. You pay either by the hour or by the second, depending on your EC2 instance setup. This pricing model is well suited for users who cannot predict the load on their EC2 instances, who have short, spiky loads, or who are developing a new compute function.

Spot instances work similarly to On-Demand pricing in that you pay for what you use. However, users can set a price limit on what they want to pay to run their EC2 instance. If the compute cost is above the user’s set limit, the instance will not run (or will terminate). This could save users significantly if they do not require real-time processing or if the cost to run instances is the most important development factor.

Reserved instance pricing means the user has reserved capacity on the AWS servers. The cost to run the instances is significantly less than On-Demand pricing, but users should only consider this model if they have predictable or steady loads.

With Dedicated Host pricing, users can request a physical EC2 server that will run only their EC2 instances. This is especially useful if users have strict compliance requirements since it gives users control over the physical system their compute code runs on. Pricing with dedicated hosts can be done On-Demand or as a Reserved Instance.

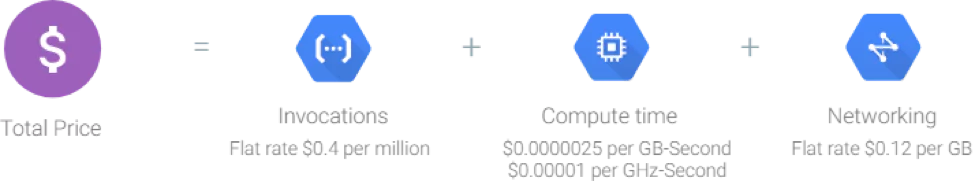

Fargate with ECS and EKS

As with Lambda, there is a tradeoff between a task’s duration and the allocated memory resources. Cost per unit time increases with a higher memory allocation for the task. AWS calculates pricing in the same way for both ECS and EKS clusters running Fargate.

Containers running Fargate tasks are billed based on the number of vCPUs (virtual CPUs) and the amount of memory allocated. Billable runtime starts when the container image pull begins (for example, from Amazon ECR) and ends when the task terminates. AWS rounds task and pod duration up to the nearest second and charges a minimum of 1 minute of runtime for each new task or pod. The cost per vCPU-hour also depends on the region in which the service is running.
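The billing rules above translate into a short calculation. This sketch assumes that durations are rounded up to the next whole second and that the one-minute minimum applies per task. The hourly rates are placeholders for illustration only, since actual rates vary by region.

```python
import math


def fargate_billed_seconds(runtime_seconds):
    """Round the runtime up to a whole second and apply the 1-minute minimum."""
    return max(60, math.ceil(runtime_seconds))


def fargate_task_cost(runtime_seconds, vcpus, memory_gb, vcpu_hour_rate, gb_hour_rate):
    """Estimate the cost of one task from its allocated vCPU and memory."""
    hours = fargate_billed_seconds(runtime_seconds) / 3600
    return hours * (vcpus * vcpu_hour_rate + memory_gb * gb_hour_rate)


# Placeholder rates, not current AWS prices.
VCPU_HOUR_RATE = 0.04
GB_HOUR_RATE = 0.004

print(fargate_billed_seconds(12.3))   # short task: billed for the minimum
print(fargate_billed_seconds(90.2))   # rounded up to the next second
print(fargate_task_cost(3600, 1, 2, VCPU_HOUR_RATE, GB_HOUR_RATE))
```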

Users can also request Spot pricing, which uses the same cost model based on vCPU and duration but at a discounted rate. In exchange, AWS can interrupt Spot tasks when it needs the capacity back, so Spot is best suited to interruption-tolerant workloads. The Spot price is determined by long-term trends in the supply of and demand for spare EC2 capacity.

Users may also choose between pricing models: on-demand pricing, where users pay for run time to the nearest second as the pod uses compute capacity (as with ECS), or savings plans, where users commit to a consistent amount of usage for 1 or 3 years.

Lambda

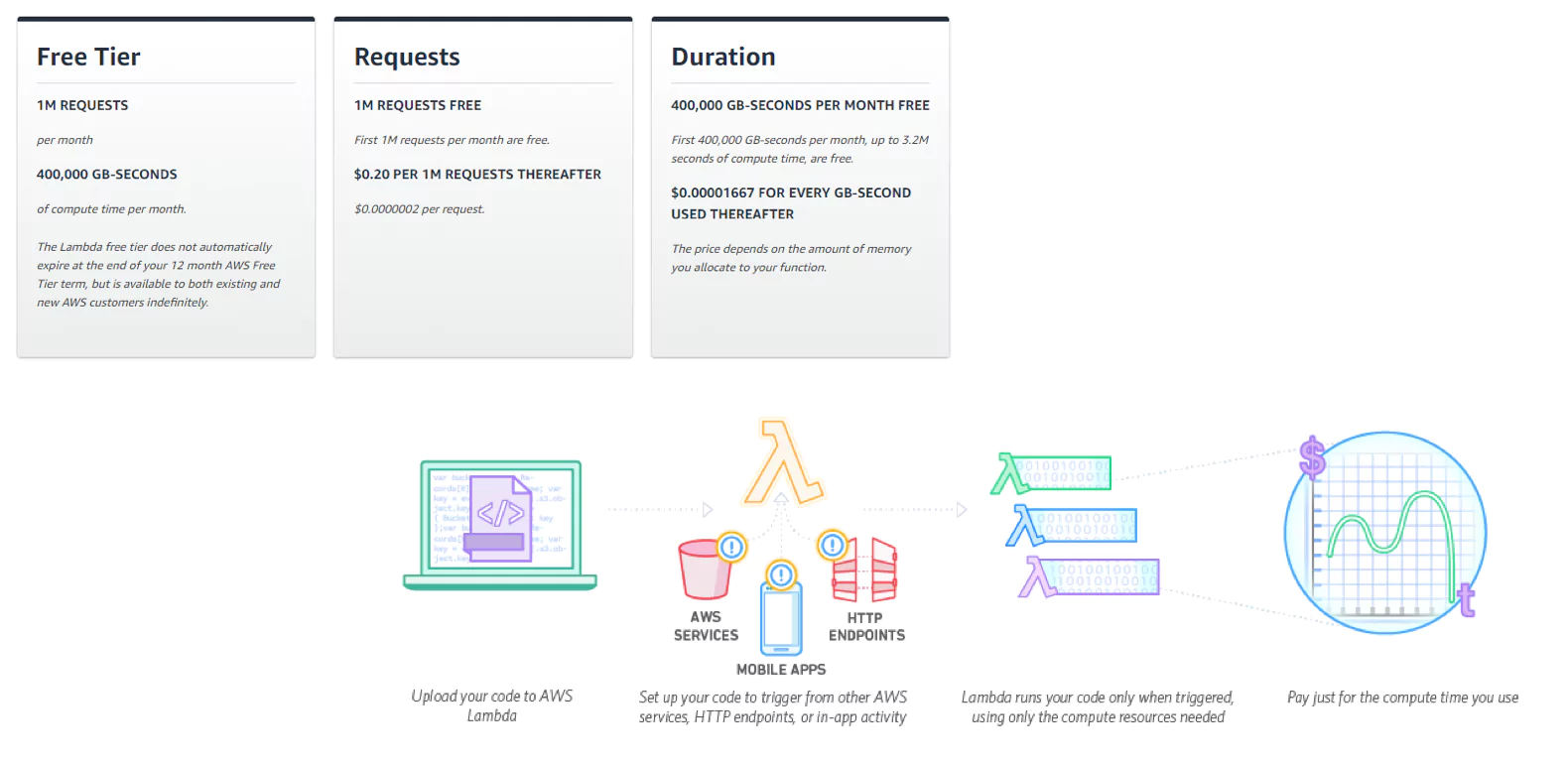

AWS Lambda functions have a limited duration. They can run for a maximum of 900 seconds (15 minutes) before the function times out and shuts down, even if it is in the middle of processing. Depending on what triggers the Lambda, there may be shorter duration limits. If API Gateway triggers the Lambda, the function effectively has about 29 seconds to respond, because that is API Gateway’s default integration timeout.
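One way to cope with the duration limit is to check how much time is left and stop cleanly before the timeout hits. In the Python runtime, the context object passed to the handler exposes `get_remaining_time_in_millis()` for this purpose. The sketch below is one possible pattern; the helper name, the safety margin, and the `FakeContext` class (used only so the example runs outside Lambda) are assumptions for illustration.

```python
def process_until_deadline(items, context, safety_margin_ms=5000):
    """Process items until the remaining time drops below the safety margin.

    Returns the processed results and the items left over, so the caller
    can hand the leftovers to a later invocation.
    """
    done = []
    for item in items:
        if context.get_remaining_time_in_millis() < safety_margin_ms:
            break
        done.append(item.upper())  # stand-in for the real work
    return done, items[len(done):]


class FakeContext:
    """Test double that replays a fixed series of remaining-time readings."""

    def __init__(self, readings_ms):
        self._readings = iter(readings_ms)

    def get_remaining_time_in_millis(self):
        return next(self._readings)


done, remaining = process_until_deadline(["a", "b", "c"], FakeContext([20000, 10000, 3000]))
print(done, remaining)
```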

Lambda bases its pricing on how long a function runs. AWS previously billed in 100ms increments. In December 2020, this changed to billing in 1ms increments.

The Lambda’s memory setting also affects the cost and runtime of the function. The higher the memory, the faster the function may run, but the higher the cost per millisecond. Users must analyze their functions to find the most efficient balance between run time, memory requirements, and cost.
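The balance between memory and run time is easier to see with numbers. The sketch below estimates the cost of one invocation from its duration and memory setting, using per-millisecond billing. The two rates are illustrative placeholders, so check the AWS pricing page for your region. The durations are also made-up measurements for a hypothetical function that runs faster with more memory.

```python
import math


def billed_duration_ms(duration_ms):
    """Round the duration up to the next whole millisecond."""
    return math.ceil(duration_ms)


def lambda_invocation_cost(duration_ms, memory_mb, price_per_gb_second, price_per_request):
    """Estimate the cost of a single invocation."""
    gb_seconds = (memory_mb / 1024) * (billed_duration_ms(duration_ms) / 1000)
    return gb_seconds * price_per_gb_second + price_per_request


# Illustrative rates only.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_REQUEST = 0.0000002

# Hypothetical measurements: doubling memory more than halves the run time.
cost_512 = lambda_invocation_cost(800, 512, PRICE_PER_GB_SECOND, PRICE_PER_REQUEST)
cost_1024 = lambda_invocation_cost(350, 1024, PRICE_PER_GB_SECOND, PRICE_PER_REQUEST)
print(f"512 MB:  {cost_512:.10f} USD per invocation")
print(f"1024 MB: {cost_1024:.10f} USD per invocation")
```

In this made-up case the larger memory setting is both faster and cheaper, which is why it is worth measuring your own functions at several memory sizes rather than assuming the smallest setting costs the least.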

Lambda is a good fit for short-lived, fast tasks but is less suited to long-running tasks. If your Lambda calls another function or API that may take significant time to respond, the Lambda’s duration, and therefore its cost, increases while it waits.

Security

In all cases, security is a shared responsibility between AWS and the user. AWS ensures the security of the infrastructure running in the cloud. You can learn more about how AWS achieves its compliance standards on the AWS compliance programs page.

When you set up any of the compute resources, you must provide permissions to allow the function or container to perform actions on other AWS resources. To do this, you must set up IAM roles assumed by the service while running. Each of the compute options uses IAM. The differences between them are in how the roles are created and maintained.
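Whichever compute option you choose, the role needs a trust policy that names the AWS service allowed to assume it. The sketch below builds that policy document for each of the options discussed in this post. The helper function and the dictionary keys are our own naming, while the service principals are the standard ones for each service.

```python
import json

# Service principals that identify which AWS service may assume the role.
SERVICE_PRINCIPALS = {
    "ec2": "ec2.amazonaws.com",
    "ecs_task": "ecs-tasks.amazonaws.com",
    "eks_fargate_pod": "eks-fargate-pods.amazonaws.com",
    "lambda": "lambda.amazonaws.com",
}


def trust_policy(service_principal):
    """Build an IAM trust policy that lets one AWS service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


print(json.dumps(trust_policy(SERVICE_PRINCIPALS["lambda"]), indent=2))
```

The trust policy only controls who can assume the role. What the role is allowed to do is defined separately in its permissions policies.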

EC2

EC2 computing requires users to configure and maintain security on multiple parts of the service. Users have full root access to virtual instances and are responsible for securing them. Recommended security practices include disabling password-only access to the guest operating system and adding multi-factor authentication (MFA) for access to the instance. Users are also responsible for updating and patching the virtual instance, including security updates.

Amazon maintains a firewall through which all traffic must pass. EC2 users must set explicit permissions to allow traffic through the firewall to the virtual instance. The firewall settings are not accessible from within the virtual instance. Instead, users configure them using their certificate and key, available in the AWS console.

API calls that launch and terminate virtual machines, change firewall permissions, and perform other security functions must be signed with the AWS secret access key belonging to your AWS user. This user must have IAM permissions set to perform these maintenance and setup tasks.

AWS IAM is used within the running virtual instance to grant permission for EC2 to use and control other AWS services from within the instance.

Fargate with ECS

With Fargate running on ECS, the permissions are associated with the container the task runs in. The user can select an IAM role to use as the task role when creating the container. You must create the IAM role before you can complete the creation of the container.

The IAM role is created separately from the Fargate task’s deployment, using either the AWS CLI or the AWS Console. Each container can have its own IAM permissions, restricting service access to what the user requires.
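As an example of restricting access, here is a sketch of a permissions policy for a task role that may only consume messages from a single SQS queue. The queue ARN is a made-up placeholder and the helper name is ours. The listed actions are the ones a typical queue consumer needs, but your task may require a different set.

```python
import json


def queue_consumer_policy(queue_arn):
    """Build a permissions policy limited to reading from one SQS queue."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "sqs:ReceiveMessage",
                    "sqs:DeleteMessage",
                    "sqs:GetQueueAttributes",
                ],
                "Resource": queue_arn,
            }
        ],
    }


# Placeholder ARN for illustration only.
EXAMPLE_QUEUE_ARN = "arn:aws:sqs:us-east-1:123456789012:example-queue"
print(json.dumps(queue_consumer_policy(EXAMPLE_QUEUE_ARN), indent=2))
```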

Fargate with EKS

Amazon EKS supports IAM for authentication and VPC to isolate clusters, running a single control plane for each cluster. In September 2020, AWS implemented the ability to assign EC2 security groups to individual pods running on EKS clusters. Using security groups for pods, the user can run applications with different network security requirements on shared compute nodes. Before this update, users had to run separate nodes for applications with different security requirements.

To run pods on an EKS cluster, users must configure several policies in IAM. The user must configure the pod execution role, which allows the pod to make the AWS API calls it needs to set itself up. For example, this role allows the pod to pull required container images from Amazon Elastic Container Registry (ECR).

Lambda

While you can create a Lambda using the AWS console, it is more common to use a framework such as Serverless to create Lambda services. The Serverless framework enables the setup of IAM roles that each Lambda function or the Lambda service will assume while running. The Serverless framework recreates the IAM role definitions each time you deploy the Lambda. For more information on setting up IAM roles with Serverless, see the Serverless IAM roles guide.

If you are using a different deployment method that does not integrate IAM role creation, you can create IAM roles manually in the AWS Console or with the AWS CLI and link them to an existing Lambda in the Lambda Console.

Summary

With the rising popularity of serverless computing, new tools are coming out all the time to support the organizations that are moving in that direction. Since each tool has different features and its own set of advantages and disadvantages, it’s important to have a clear understanding of the project’s scope before jumping in with the first serverless computing solution you run into.

Regardless of which serverless solution you decide is the best fit for your needs, Coralogix can seamlessly ingest and analyze all of your AWS data (and much, much more) for full observability and the most extensive monitoring and alerting solution in the market.