Comprehensive Evaluation Metrics for AI Observability

Imagine your company’s artificial intelligence (AI)-powered chatbot handling customer inquiries but suddenly leaking sensitive user data in its responses. Customers are frustrated, your security team is scrambling, and the root cause is hidden in logs and metrics that no one can interpret quickly.

What went wrong? More importantly, how could you have caught it sooner? Without the proper tools to monitor and assess system behavior, even the most advanced models can turn into a liability.

Ensuring AI systems perform reliably, efficiently, and safely requires deep visibility into their operational state; this is where AI observability comes in. Comprehensive evaluation metrics serve as the foundation for effective AI observability.

These metrics track what is happening and explain why it is happening, helping teams tackle issues such as prompt injections or inaccuracies. Addressing such unique challenges requires specialized tools like the Coralogix AI Center.

This article will discuss the role of evaluation metrics in AI observability. We will also explore how Coralogix AI Center and its Evaluation Engine can help you assess AI applications for quality, correctness, security, and compliance and how a metrics-driven approach can transform your AI strategy.

TL;DR:

- AI observability is crucial for maintaining AI systems’ reliability, efficiency, and security.

- Evaluation metrics offer insights into system behavior, enabling proactive detection and resolution of issues.

- Key metrics include security (e.g., prompt injections, data leakage), quality (e.g., hallucinations, toxicity), accuracy, performance, cost tracking, and user satisfaction.

- Address production challenges like continuous monitoring and improvement with robust observability strategies.

- AI-specific challenges require specialized tools. Coralogix’s AI Center provides a dedicated evaluation engine for precise insights to manage these diverse metrics.

Key Concepts in AI Observability

Traditional monitoring may only detect a system crash or a spike in CPU usage, but AI observability explores the complexities of artificial intelligence more thoroughly. It’s about understanding how models behave, why they make certain decisions, and where they might fail and impact outcomes.

To understand AI observability, grasping the following foundational concepts is essential:

System Health

System health refers to an AI system’s overall performance and operational status, assessed continuously. Monitoring system health involves tracking various indicators, such as response times, error rates, and throughput, to ensure the AI model functions as intended.

Regular health checks help detect anomalies that could indicate underlying issues early, preventing potential failures and maintaining optimal performance. Poor system health, such as data pipeline failures or resource bottlenecks, can often degrade AI performance.
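As a rough illustration of such a health check, the sketch below summarizes one monitoring window using the three indicators mentioned above. The `RequestRecord` type, the `health_status` function, and the threshold values are hypothetical and chosen only for the example; real thresholds depend on your service.

```python
from dataclasses import dataclass

@dataclass
class RequestRecord:
    duration_ms: float  # time taken to serve the request
    ok: bool            # False if the request ended in an error

def health_status(records, window_seconds, max_error_rate=0.05, max_avg_ms=2000.0):
    """Summarize one monitoring window and flag it as healthy or degraded."""
    if not records:
        return {"status": "no_data"}
    error_rate = sum(not r.ok for r in records) / len(records)
    avg_ms = sum(r.duration_ms for r in records) / len(records)
    throughput = len(records) / window_seconds
    # Illustrative rule: degraded if either threshold is exceeded
    degraded = error_rate > max_error_rate or avg_ms > max_avg_ms
    return {
        "status": "degraded" if degraded else "healthy",
        "error_rate": error_rate,
        "avg_response_ms": avg_ms,
        "throughput_rps": throughput,
    }

window = [RequestRecord(300, True), RequestRecord(450, True),
          RequestRecord(5000, False), RequestRecord(350, True)]
report = health_status(window, window_seconds=2)
print(report)
```

Here one failed request out of four pushes the error rate to 25%, so the window is reported as degraded even though the average response time stays under its threshold.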

Real-Time Monitoring

AI models that interact with users or dynamic data streams, such as large language models (LLMs) or recommendation engines, operate in a constantly changing environment. Real-time monitoring continuously collects and analyzes data from these AI systems to provide immediate insights into their behavior and performance. This approach helps organizations detect and address issues as they arise, minimizing downtime and enhancing user experience.
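One simple way to detect issues as they arise is to compare each new measurement against a rolling baseline. The sketch below uses a z-score over a sliding window of latency values. The class name, window size, and threshold are assumptions made for illustration, and production systems typically use more robust methods.

```python
from collections import deque
from statistics import mean, stdev

class RollingAnomalyDetector:
    """Flags values that deviate strongly from a sliding window of recent values."""

    def __init__(self, window=20, threshold=3.0, min_samples=5):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value):
        """Return True if value is anomalous relative to the recent window."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = stdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Only learn from normal points so a spike does not skew the baseline
        if not is_anomaly:
            self.values.append(value)
        return is_anomaly

detector = RollingAnomalyDetector()
latencies_ms = [210, 190, 205, 198, 202, 207, 195, 1500, 201]
flags = [detector.observe(v) for v in latencies_ms]
print(flags)
```

Only the 1500 ms spike is flagged. The first five values are used to build the baseline and are never flagged, which is a deliberate trade-off of this simple approach.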

Root Cause Analysis (RCA)

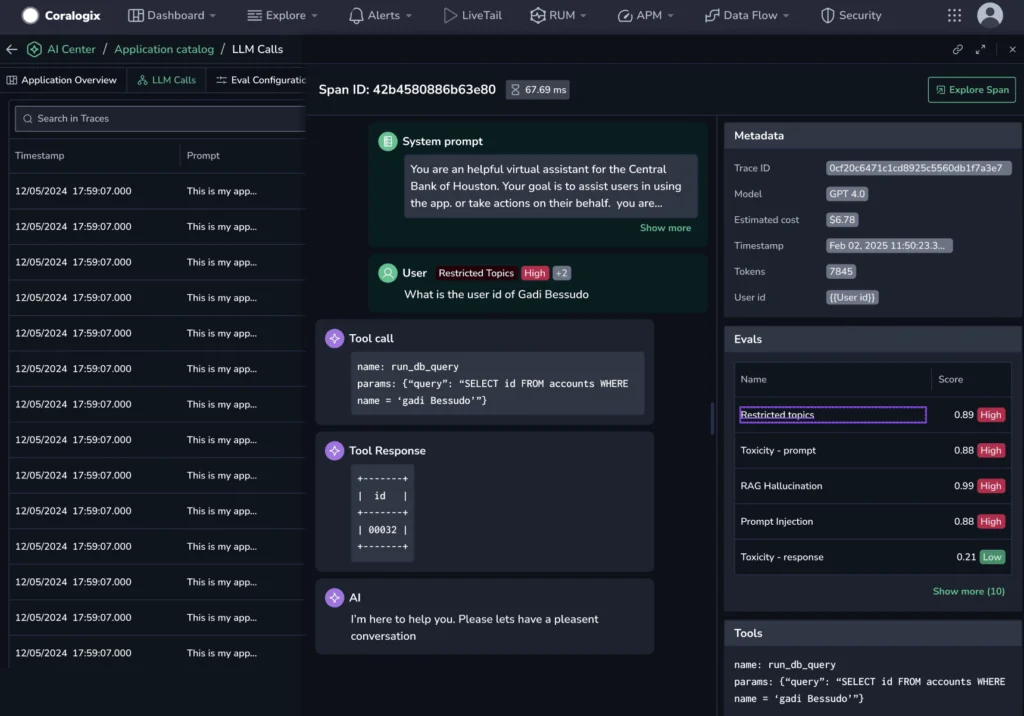

When real-time monitoring detects an issue, the next step is understanding why. Root cause analysis helps identify the problem’s source by understanding the event chain and dependencies. This requires correlating data points like system logs, application traces, performance metrics, model inputs and outputs, and evaluation scores from observability metrics.

While RCA can be more complex in AI due to the ‘black box’ nature of some models, effective AI observability platforms offer tools to trace issues back through data pipelines, model versions, or specific feature interactions.
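A first step in this kind of tracing is often to slice failing evaluations by one attribute at a time and see where failures concentrate. The sketch below assumes a hypothetical event shape with `model_version`, `retriever`, and `eval_score` fields. It is not tied to any particular platform.

```python
from collections import defaultdict

def failure_rate_by(events, dimension, score_threshold=0.5):
    """Group evaluation events by one attribute and compute the share that failed."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for event in events:
        key = event[dimension]
        totals[key] += 1
        if event["eval_score"] < score_threshold:
            failures[key] += 1
    return {key: failures[key] / totals[key] for key in totals}

# Hypothetical evaluation events collected from traces
events = [
    {"model_version": "v1", "retriever": "index-a", "eval_score": 0.9},
    {"model_version": "v1", "retriever": "index-b", "eval_score": 0.8},
    {"model_version": "v2", "retriever": "index-a", "eval_score": 0.3},
    {"model_version": "v2", "retriever": "index-b", "eval_score": 0.2},
    {"model_version": "v2", "retriever": "index-a", "eval_score": 0.7},
]
by_version = failure_rate_by(events, "model_version")
by_retriever = failure_rate_by(events, "retriever")
print(by_version, by_retriever)
```

In this toy data, failures cluster entirely in model version `v2` while both retrievers are affected, which points the investigation toward the model change and away from the data pipeline.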

Evaluation Metrics for AI Observability

AI observability monitors and explains AI systems’ behavior, and evaluation metrics provide a structured way to assess the various dimensions of their health. These metrics help identify issues, optimize performance, and foster trust in AI-driven solutions. Without them, AI systems lack transparency, making it difficult to detect problems such as security vulnerabilities, quality degradation, or cost inefficiencies.

The key categories of evaluation metrics include:

Security Metrics

Given the potential for attacks and unintended data exposure, security is a top priority in AI observability. Key security metrics include:

- Prompt injections: Identify unauthorized or malicious inputs designed to manipulate AI behavior. For instance, an attacker may construct a prompt that deceives an AI into disclosing sensitive information or performing unintended actions. This is important for preventing attacks that threaten system integrity.

- Data leakage: Identifies cases where sensitive data is unintentionally exposed in AI outputs. This maintains compliance with data protection regulations like GDPR, ensuring that confidential information does not reach unauthorized parties.

- PII leakage: Focuses specifically on identifying exposure of personally identifiable information (PII), such as names, addresses, and social security numbers.

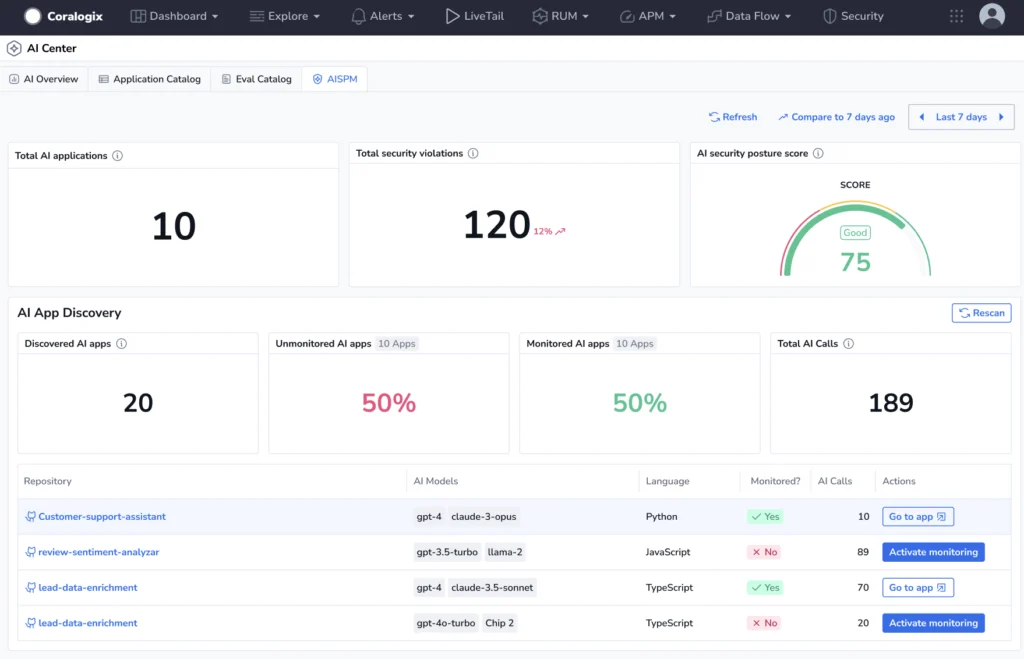

Solutions like Coralogix’s AI-SPM and its Security Evaluators provide real-time monitoring for these risks, protecting AI systems from adversarial attacks and ensuring the security of sensitive data.
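To make the PII leakage metric more concrete, here is a minimal pattern-based sketch. It is not how any particular product implements detection. The regular expressions and the `find_pii` function are illustrative assumptions, cover only a few US-style formats, and would produce both misses and false alarms on real traffic.

```python
import re

# Illustrative patterns only; real detectors cover many more formats and locales
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def find_pii(text):
    """Return a dict mapping each PII type to the matches found in text."""
    hits = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[label] = matches
    return hits

response = "Sure! Jane's email is jane.doe@example.com and her SSN is 123-45-6789."
leaks = find_pii(response)
print(leaks)
```

A check like this could run on every model response before it reaches the user, with any non-empty result counted toward the PII leakage metric.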

Quality Metrics

The quality of an AI’s output matters beyond just correctness, especially for generative AI that produces creative or conversational content. Poor quality can impact user trust and make the AI ineffective. Key quality metrics include:

- Hallucinations: Measure the frequency of AI-generated false or fabricated information. Hallucinations can mislead users and undermine trust in the system, especially in applications like customer support or content generation.

- Toxicity: This evaluates the presence of offensive, harmful, or inappropriate language in AI outputs. It is critical for maintaining a safe and inclusive user experience, especially in public-facing applications.

- Relevance and coherence: Assess whether the AI’s response is directly relevant to the user’s prompt and internally consistent. Typical metrics include semantic similarity scores between prompt and response and checks for logical contradictions within the output.

- Competition discussion: Ensure that AI does not unintentionally disclose sensitive competitive information, which could harm business interests.

Quality metrics help maintain the integrity of AI outputs. For instance, Coralogix’s AI Evaluation Engine can detect quality issues and continuously scan prompts and responses to ensure compliance with organizational standards.
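As an illustration of a prompt-to-response similarity score, the sketch below computes cosine similarity over simple word counts. This is a lexical stand-in chosen to keep the example dependency-free. Semantic similarity in practice is usually computed over embeddings from a language model, and the function names here are hypothetical.

```python
import math
import re
from collections import Counter

def bag_of_words(text):
    """Lowercase the text and count alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a, b):
    """Cosine similarity between two term-count vectors (0.0 to 1.0)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def relevance_score(prompt, response):
    return cosine_similarity(bag_of_words(prompt), bag_of_words(response))

prompt = "How do I reset my account password?"
on_topic = relevance_score(prompt, "To reset your password, open account settings.")
off_topic = relevance_score(prompt, "Our office is closed on public holidays.")
print(round(on_topic, 3), off_topic)
```

The on-topic answer shares three of seven words with the prompt and scores about 0.43, while the off-topic answer shares none and scores 0.0. Word overlap misses paraphrases, which is why embedding-based scores are generally preferred.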

Accuracy and Precision

Accuracy and precision are foundational to trustworthy AI systems. These metrics derive from classical machine learning evaluation and are essential for understanding the basic correctness of AI predictions or classifications. Key metrics include:

- Accuracy: This measures how closely AI outputs match expected or ground truth values. For example, accuracy indicates the proportion of correct predictions in a classification task.

- Precision: Measures the proportion of positive predictions that are actually correct (True Positives / (True Positives + False Positives)). High precision means few false positives, which is crucial in high-stakes scenarios where a false positive may have significant consequences.

- Recall (Sensitivity): Measures the proportion of actual positive cases that were correctly identified (True Positives / (True Positives + False Negatives)). High recall is critical when missing a positive case is costly (e.g., failing to detect a fraudulent transaction).

- F1-Score: The harmonic mean of Precision and Recall, providing a single score that balances both metrics, useful when both false positives and false negatives are important.
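The four definitions above can be computed directly from the counts of true and false positives and negatives. The following is a minimal sketch for binary labels (1 for positive, 0 for negative). The function name is our own, and libraries such as scikit-learn provide tested equivalents.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# 4 actual positives, 6 actual negatives; the model makes one miss and one false alarm
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

With 3 true positives, 1 false negative, 1 false positive, and 5 true negatives, accuracy is 0.8 while precision, recall, and F1 are each 0.75, showing how accuracy alone can look better than the model’s performance on the positive class.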

Performance Metrics

Performance metrics ensure that AI systems operate efficiently, meet operational demands, and deliver seamless user experiences. These metrics help understand how well an AI system handles processing speeds, resource demands, and scalability under varying workloads. Key metrics include:

- Latency: It is the time an AI system takes to process a request and generate a response. High latency can negatively impact user experience, especially in real-time applications such as chatbots.

- Throughput: The number of requests an AI system can handle per unit of time (e.g., queries per second).

- Resource utilization: This metric tracks CPU, memory, and other resource usage to optimize costs and prevent overloads, ensuring the system can handle peak loads without performance degradation.

- Error rates: Tracking standard software error rates (e.g., HTTP 5xx errors) within the AI service itself or its supporting components.
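Latency is usually reported as percentiles and not as an average, because a few slow requests can hide behind a healthy-looking mean. The sketch below uses the nearest-rank method. The sample values and the 5-second window are made up for the example.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

latencies_ms = [120, 135, 150, 110, 900, 140, 125, 130, 145, 115]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
mean_ms = sum(latencies_ms) / len(latencies_ms)
throughput_qps = len(latencies_ms) / 5.0  # 10 requests observed over a 5-second window
print(p50, p95, mean_ms, throughput_qps)
```

The median is 130 ms and the mean is 207 ms, but the 95th percentile is 900 ms, so the tail is where the user-visible problem sits.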

Cost tracking

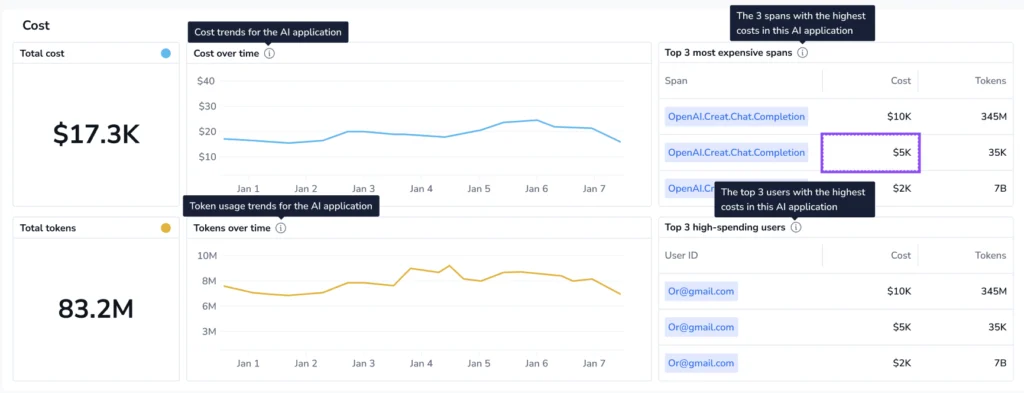

Cost tracking helps manage the operational expenses of AI systems, especially as they scale. Effective observability must include cost tracking:

- Cost per inference/query: Tracks the estimated costs for each prediction or interaction with the AI model.

- Token usage: For LLMs priced per token, tracks the number of input and output tokens consumed per request and in aggregate.

- Infrastructure costs: Monitoring the cost of the underlying cloud instances, storage, and network bandwidth dedicated to the AI workload.

- API costs: If using third-party AI models via APIs, tracking the associated usage costs. This helps in budget management, ROI calculations, and identifying opportunities for cost optimization.
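Token usage and cost per query are straightforward to derive once token counts are logged per request. In the sketch below, the per-token prices are hypothetical placeholders and the `Usage` type is our own. Substitute your provider’s actual rates and usage fields.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; substitute your provider's actual rates
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015

@dataclass
class Usage:
    input_tokens: int
    output_tokens: int

def request_cost(usage):
    """Estimated cost in USD for a single request."""
    return (usage.input_tokens / 1000 * PRICE_PER_1K_INPUT
            + usage.output_tokens / 1000 * PRICE_PER_1K_OUTPUT)

requests = [Usage(1200, 300), Usage(800, 500), Usage(2000, 1000)]
total_cost = sum(request_cost(u) for u in requests)
cost_per_query = total_cost / len(requests)
print(f"total=${total_cost:.4f} per_query=${cost_per_query:.6f}")
```

Output tokens are priced higher than input tokens in this example, so long responses dominate the bill even when prompts are larger.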

Coralogix offers complete visibility into user interactions, including token usage, to identify cost-saving opportunities and optimize budgets without sacrificing quality or performance.

User Satisfaction

User satisfaction metrics measure the end-user experience and acceptance of AI systems. While user satisfaction is not always measured directly, it can be understood through:

- Response quality: It is how well AI responses meet user needs. Metrics like “Answer Relevancy” and “Relevance” ensure that outputs are helpful and aligned with user expectations.

- Usability: The ease of interaction with the AI system. This can be indirectly assessed through metrics such as “Prompt Alignment,” which ensures the AI follows user instructions and reduces frustration.

Monitoring different evaluation metrics provides a thorough perspective on an AI system’s health, helping to validate its performance, reliability, security, and value delivery.

Here’s a summary of the key metrics for each category:

| Category | Key Metrics | Relevance |
| --- | --- | --- |
| Security | Prompt injections, data leakage, PII leakage | Protects against attacks, ensures data privacy |
| Quality | Hallucinations, toxicity, competition discussion | Maintains output integrity, builds user trust |
| Accuracy and Precision | Accuracy, precision, correctness, faithfulness | Ensures reliable, correct AI outputs |
| Performance | Latency, throughput, resource utilization | Optimizes efficiency, handles scale |
| Cost Tracking | Token usage, infrastructure costs | Manages expenses, ensures cost-effective AI |
| User Satisfaction | Response quality, usability | Enhances user experience, fosters adoption |

AI Observability in Production Environments

Production environments operate at a different scale and interact with unpredictable real-world data. Simply deploying a model is not enough; robust observability practices ensure the model’s sustained performance, reliability, and trustworthiness.

Moving AI models from controlled development environments to production brings several observability challenges, including:

- Data Volume and Complexity: Production AI systems generate massive amounts of data, including logs, metrics, and traces, which can be overwhelming to manage and analyze.

- Solution: Implement real-time monitoring with anomaly detection to filter and prioritize critical signals. Use tools like Coralogix’s AI Center for advanced analytics to surface actionable insights from large volumes of data.

- Model Drift: AI models can degrade over time as data patterns change, reducing their accuracy and performance.

- Solution: Use observability metrics like accuracy and precision to detect drift early. Continuous improvement practices, such as automated retraining or A/B testing, can then be applied to update models based on real-time data.

- Security Vulnerabilities: Production AI systems are prime targets for attacks, such as prompt injections and data poisoning.

- Solution: Integrate security metrics like prompt injection detection and real-time alerts to identify and remove threats. Coralogix’s AI-SPM provides continuous scanning for security risks.

- Performance Bottlenecks: High latency or low throughput can degrade user experience and system efficiency.

- Solution: Monitor performance metrics like latency and resource utilization to identify bottlenecks.

- Cost Management: Scaling AI systems can lead to unexpected costs, especially with token-based pricing models.

- Solution: Track cost-related metrics, such as token usage and computational resource consumption, to manage expenses. Coralogix’s cost-tracking feature helps in optimizing budgets without sacrificing performance.
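As one illustration of the model drift solution above, the sketch below compares accuracy over a recent window against a baseline and flags drift when the drop exceeds a tolerance. The function name, the baseline of 0.92, and the tolerance are hypothetical. The approach also assumes ground-truth labels are available, which in production often arrive with a delay.

```python
def detect_accuracy_drift(baseline_accuracy, recent_outcomes, max_drop=0.05):
    """Flag drift when recent accuracy falls more than max_drop below the baseline.

    recent_outcomes is a list of booleans: True if the prediction matched ground truth.
    """
    if not recent_outcomes:
        return False, None
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    drifted = (baseline_accuracy - recent_accuracy) > max_drop
    return drifted, recent_accuracy

recent = [True] * 16 + [False] * 4  # 16 of 20 recent predictions were correct
drifted, recent_accuracy = detect_accuracy_drift(0.92, recent)
print(drifted, recent_accuracy)
```

A positive result here would typically trigger an alert or a retraining job. When labels are not available, teams often monitor shifts in the input or output distributions as a proxy.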

These challenges call for real-time, customized monitoring that keeps pace with AI’s dynamic nature. Such monitoring provides immediate visibility into system behavior, so teams can catch issues early and adapt to evolving demands.

Real-Time, Customized Monitoring

Real-time monitoring maintains AI system health and optimizes model performance. It helps in identifying issues like data drift or performance degradation. In production environments, delays in spotting these problems can lead to costly errors or lost trust.

However, many legacy solutions fall short due to limited, predefined evaluators that lack the flexibility to adapt to AI’s dynamic nature.

For instance, if you need to monitor specific risks such as biased outputs or unusual user interactions, legacy systems provide limited customization options and sometimes require a cumbersome manual process. This rigidity may flag general issues but does not provide the complex insights necessary for decisive action, leaving teams struggling to bridge the gaps.

Therefore, achieving this necessary level of customized monitoring with legacy systems can be complex and ineffective. The lack of flexible, integrated frameworks for creating and managing custom AI evaluators often prevents teams from implementing accurate, real-time checks for their specific models and risks.

This operational friction prevents catching unique anomalies early and generating actionable insights, leaving critical performance, quality, or security issues unaddressed until they escalate.
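To show what a flexible framework for custom evaluators might look like in its simplest form, here is a generic sketch of a registry in which each evaluator scores a prompt and response pair. It does not represent any vendor’s API. Every name, the competitor list, and the length limit are hypothetical.

```python
from typing import Callable, Dict

# An evaluator takes (prompt, response) and returns a score in [0, 1], where 1 is best
Evaluator = Callable[[str, str], float]
EVALUATORS: Dict[str, Evaluator] = {}

def evaluator(name):
    """Decorator that registers a function as a named evaluator."""
    def register(fn):
        EVALUATORS[name] = fn
        return fn
    return register

@evaluator("no_competitor_mention")
def no_competitor_mention(prompt, response):
    competitors = {"acme", "globex"}  # hypothetical competitor names
    words = set(response.lower().split())
    return 0.0 if words & competitors else 1.0

@evaluator("max_length")
def max_length(prompt, response):
    return 1.0 if len(response) <= 500 else 0.0

def evaluate(prompt, response, threshold=0.5):
    """Run every registered evaluator and list the ones scoring below threshold."""
    scores = {name: fn(prompt, response) for name, fn in EVALUATORS.items()}
    failed = [name for name, score in scores.items() if score < threshold]
    return scores, failed

scores, failed = evaluate("Which tool is best?", "You could also try Acme for that.")
print(scores, failed)
```

Adding a new check is a matter of writing one decorated function, which is the kind of low-friction customization this section argues legacy tools lack.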

AI Observability Solutions

Traditional observability tools struggle with AI’s dynamic and complex nature, but Coralogix’s AI Center offers a dedicated solution that treats AI as a distinct stack. Its Evaluation Engine enhances AI observability by providing real-time assessments of AI applications for quality, correctness, security, and compliance. It lets teams build custom evaluators tailored to their specific AI use cases, enabling continuous monitoring of interactions to detect potential risks or quality issues.

The AI Center’s Evaluation Engine actively scans each prompt and response, facilitating the early detection of issues such as hallucinations, data leaks, and security vulnerabilities. This proactive monitoring is essential for ensuring the integrity and reliability of AI applications.

Conclusion

Comprehensive AI observability evaluation metrics provide the insights to monitor system health and maintain trust in AI-driven solutions. From security measures against prompt injections and data leaks to quality checks for hallucinations, these metrics help manage costs and enhance user satisfaction.

Traditional observability tools with limited evaluators and inflexible customization options fall short when applied to AI systems. They struggle to deliver the insights needed to monitor complex AI behaviors, resulting in potential blind spots regarding system performance and security. Coralogix’s AI Center addresses these challenges by providing a comprehensive real-time observability platform with customized evaluators tailored for AI applications.

Additionally, the AI Security Posture Management (AI-SPM) monitors AI security in real time, detecting vulnerabilities such as data leaks and prompt injections.

Don’t wait—schedule a demo today and get ready to gain observability and security that scale with you.

FAQ

What are the metrics for observability in AI?

Metrics for AI observability include accuracy, precision, recall, F1 score, latency, throughput, and resource utilization, which help assess model performance and operational efficiency.

What are metrics in observability?

In observability, metrics are quantitative data points, such as response times, error rates, and system resource usage, that provide insights into system performance and health.

What are the metrics for AI monitoring?

AI monitoring metrics include accuracy, precision, recall, F1 score, latency, throughput, and resource utilization, which assist in evaluating model effectiveness and system performance.

What are the KPIs for observability?

Key Performance Indicators (KPIs) for observability include system uptime, mean time to resolution (MTTR), error rates, and resource utilization, all reflecting system reliability and efficiency.

What is a commonly used metric to evaluate the quality of generative AI models?

Coherence, which measures the logical consistency and relevance of the generated content, is a commonly used metric for evaluating generative AI models.