Fundamentally, some logs will be of intrinsic value to you, and others will be less business-critical. Are you aware of IT cost optimization? Do you know what it costs to handle, analyze, and store each of these types of logs? Should you really take the same approach to mission-critical logs as you do to info or telemetry logs? Differentiating your approach across log types is challenging.

If no two logs are truly the same, then why should you treat them the same?

Slow Query Times Can Increase Costs

In a multi-application architecture, you're going to have a variety of applications with very different behaviors. It's commonplace for some applications to generate infrequent but important logs, whilst another spews out millions of low-priority, noisy debug logs. If you're storing all of your logs on a single disk or in a single database and it nears capacity, query performance will dip.

This becomes dangerous if you're running machine learning algorithms on the "useful logs", because those algorithms rely on a fully functioning database. Unfortunately, telling which logs are useful now, which might be useful in the future, and which never will be is tough. A single application may generate so many different logs that assessing their severity is a challenge in itself, and the difficulty only worsens in a multi-application architecture. Logs are unruly, and visualizing their output to decide what to do with them is a tricky job of its own.

Where do I keep my logs?

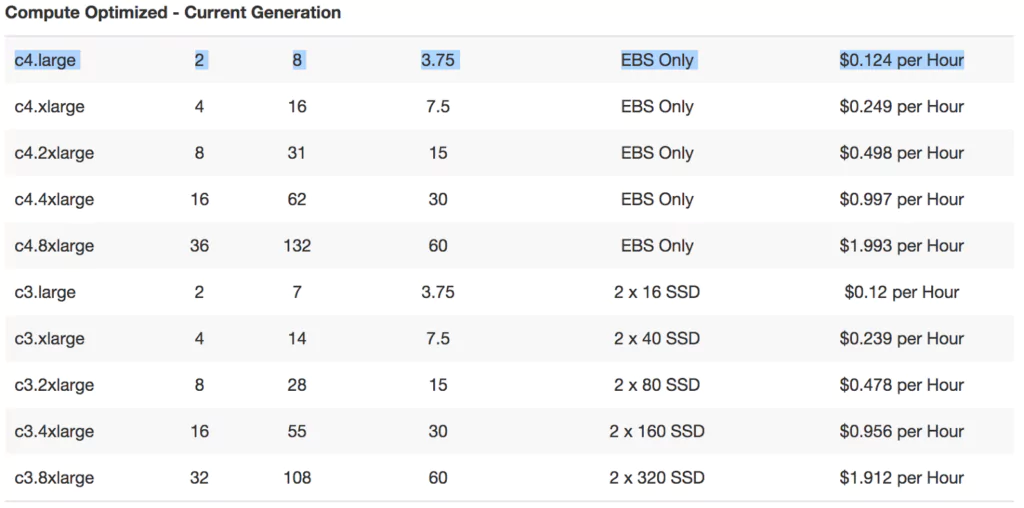

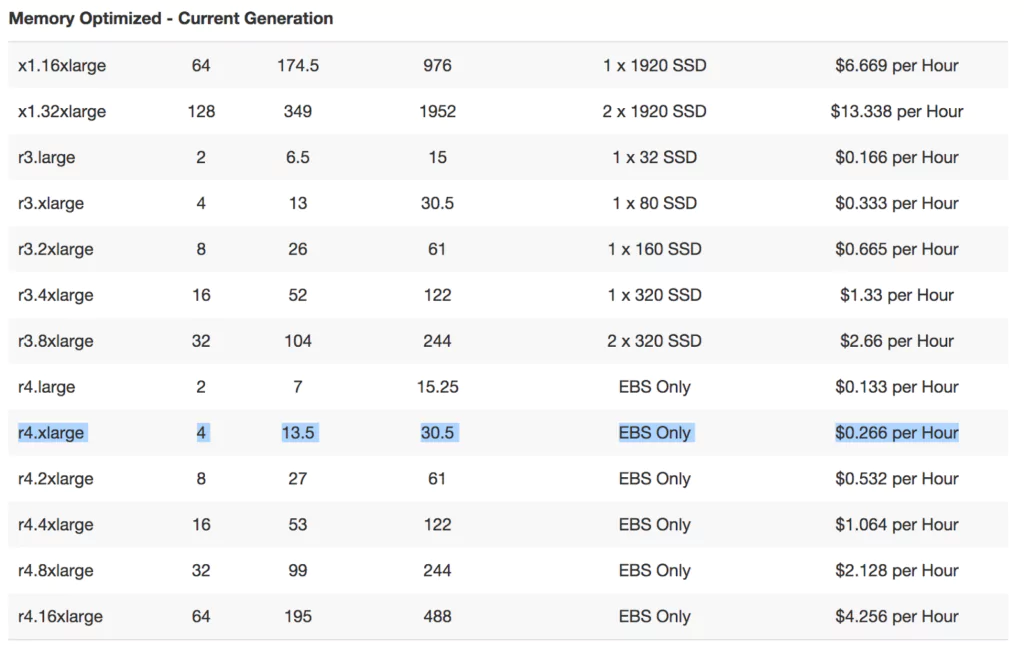

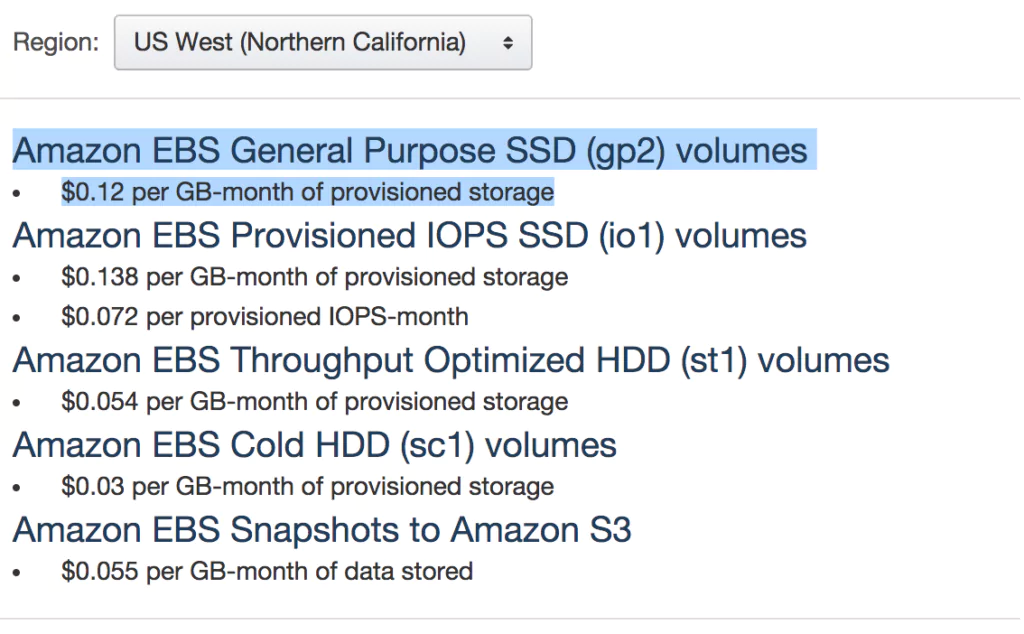

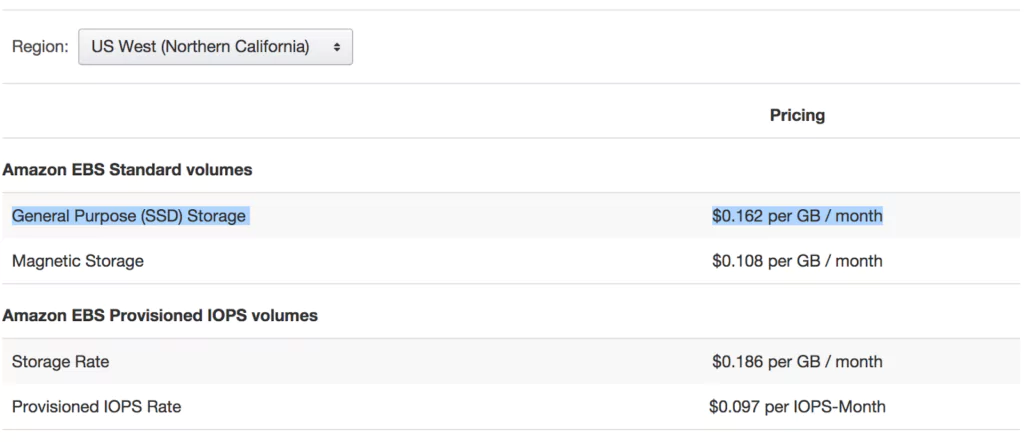

Provisioning log storage isn't easy, and choosing the wrong type can seriously impact your logging cost. You may only read a few log outputs and ignore the rest. If you're running advanced visualizations on your logs, you need storage that supports frequent reads; if you never access a certain type of log, you might consider cold storage. Making these decisions takes engineering time and effort, time you would rather spend on your product. Without clear visibility into, and control over, which logs you index, your storage bill will climb quickly, because you pay the same to index the logs you never look at as you do for the ones you rely on.
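To make the tiering decision concrete, here is a minimal Python sketch that assigns each log type to a storage tier based on how often it is actually read. The tier names (`hot`, `warm`, `cold`), the `choose_tier` function, the read thresholds, and the sample read counts are all hypothetical assumptions for illustration, not figures from any real system or vendor.

```python
from enum import Enum


class StorageTier(Enum):
    """Hypothetical storage tiers, ordered from most to least expensive."""
    HOT = "hot"    # indexed, fast reads: dashboards, alerting, visualizations
    WARM = "warm"  # searchable on demand, slower and cheaper
    COLD = "cold"  # archived, cheapest, read only for audits or investigations


def choose_tier(reads_per_day: float,
                hot_threshold: float = 10.0,
                warm_threshold: float = 0.1) -> StorageTier:
    """Pick a tier from how often a log type is read.

    The default thresholds are illustrative assumptions, not recommendations.
    """
    if reads_per_day >= hot_threshold:
        return StorageTier.HOT
    if reads_per_day >= warm_threshold:
        return StorageTier.WARM
    return StorageTier.COLD


# Hypothetical read statistics per log type (reads per day).
read_stats = {
    "payment-errors": 250.0,
    "api-latency": 12.0,
    "auth-audit": 0.5,
    "debug-trace": 0.0,
}

# Map every log type to the tier its read pattern justifies.
placement = {log_type: choose_tier(reads) for log_type, reads in read_stats.items()}
for log_type, tier in placement.items():
    print(f"{log_type}: {tier.value}")
```

In practice the read statistics would come from your own query history, and the thresholds would be tuned against what each tier actually costs you.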

The white noise of logs

Figuring out which logs are critical involves numerous complexities, yet that challenge pales in comparison to collecting all of these logs and asking a person or a service to make sense of them. Faced with a wilderness of jumbled, incoherent log files that lack context or recency, you're likely to invest considerable time, energy, and money to get anything useful out of them. If you plan to use a machine learning solution to separate critical from less critical logs, expect a long-winded process of trawling through gigabytes (or more) of stale data before it finds anything of use.

How Coralogix Can Help with Your Logging Cost

Coralogix lets you take complete control of your logging solution. With the TCO Optimizer, you can promote and demote your logs, which can save up to two-thirds of your logging bill. In a single view, you can see which of your log outputs consume the most, broken down by severity. You can then route those logs to different processing levels: for example, less important logs can simply be parsed and stored, while the most important logs are processed as normal. This breaks the dichotomy of "drop" or "process" and lets you take real control of your logging cost.
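The promote/demote idea can be illustrated with a small rule table that maps each log, by application and severity, to a processing level. This is a minimal Python sketch under stated assumptions, not the TCO Optimizer's actual configuration or API: the `Level` values, `LogRecord`, `RULES`, `route`, and the example applications (`checkout`, `search`) are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Level(Enum):
    """Hypothetical processing levels, from most to least expensive."""
    FULL = "full"    # parse, index, and make searchable for alerts and dashboards
    STORE = "store"  # parse and archive only, without indexing


@dataclass(frozen=True)
class LogRecord:
    application: str
    severity: str
    message: str


# Ordered (application, severity, level) rules; the first match wins.
# None acts as a wildcard. These rules are illustrative assumptions.
RULES: List[Tuple[Optional[str], Optional[str], Level]] = [
    ("checkout", None, Level.FULL),  # business-critical app: keep everything searchable
    (None, "CRITICAL", Level.FULL),
    (None, "ERROR", Level.FULL),
    (None, "DEBUG", Level.STORE),    # noisy debug output: keep it, but don't index it
]
DEFAULT_LEVEL = Level.STORE          # anything unmatched takes the cheaper path


def route(record: LogRecord) -> Level:
    """Return the processing level of the first rule that matches the record."""
    for app, severity, level in RULES:
        if (app is None or app == record.application) and (
            severity is None or severity == record.severity
        ):
            return level
    return DEFAULT_LEVEL


# Usage: count how many records land at each processing level.
records = [
    LogRecord("checkout", "DEBUG", "cart recalculated"),
    LogRecord("search", "DEBUG", "cache miss"),
    LogRecord("search", "ERROR", "index timeout"),
    LogRecord("search", "INFO", "query served"),
]
counts = Counter(route(r).value for r in records)
print(dict(counts))
```

Because the rules are ordered, a business-critical application can override the severity rules beneath it. Changing `DEFAULT_LEVEL` to `Level.FULL` would turn the policy from opt-in to opt-out: every unmatched log would be fully processed unless a rule demotes it.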