The value of log files goes far beyond their traditional remit of diagnosing and troubleshooting issues reported in production.

They provide a wealth of information about your systems’ health and behavior, helping you spot issues as they emerge. By aggregating and monitoring log data in real time, you can proactively watch your network, servers, user workstations, and applications for signs of trouble.

In this article, we look specifically at Windows event logs (sometimes loosely called system logs), from how they are generated to the insights they can offer, particularly in the all-important security realm.

Logging in Windows

If you’re reading this, your organization likely runs Windows on at least some of its machines. Windows event logs come from the length and breadth of your IT estate, whether that’s employee workstations, web servers running IIS, cluster managers enabling highly available services, Active Directory or Exchange servers, or databases running on SQL Server.

Windows has a built-in tool for viewing log files from the operating system and the applications and services running on it.

Windows Event Viewer is available from the Control Panel’s Administrative Tools section or by running “eventvwr” from the command prompt. From Event Viewer, you can view the log files generated on the current machine and any log files forwarded from other machines on the network.

When you open Event Viewer, choose the log you want to view (such as Application, Security, or System). A list of log entries is displayed together with each entry’s level (Critical, Error, Warning, Information, or Verbose); in the Security log, entries are marked Audit Success or Audit Failure instead.

As you might expect, you can sort and filter the list by parameters such as date, source, and severity level. Selecting a particular log entry displays the details of that entry.
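
Behind the scenes, Event Viewer’s Filter Current Log dialog turns these choices into an XPath query over each event’s System section, and the same query syntax can be passed to `wevtutil qe System /q:"<query>"`. The Python sketch below assembles such a query string. The helper name `build_xpath_filter` and its parameters are our own illustration, not part of any Windows API; the level numbers follow the convention Windows uses (1 = Critical through 5 = Verbose).

```python
# Values Windows records in an event's System/Level field.
LEVELS = {
    "critical": 1,
    "error": 2,
    "warning": 3,
    "information": 4,
    "verbose": 5,
}


def build_xpath_filter(levels=(), provider=None, last_hours=None):
    """Build an XPath event query from level names, an optional provider
    name, and an optional look-back window in hours (hypothetical helper)."""
    clauses = []
    if provider:
        clauses.append(f"Provider[@Name='{provider}']")
    if levels:
        level_terms = " or ".join(f"Level={LEVELS[name]}" for name in levels)
        clauses.append(f"({level_terms})")
    if last_hours is not None:
        # timediff(@SystemTime) gives the event's age in milliseconds.
        window_ms = int(last_hours * 60 * 60 * 1000)
        clauses.append(f"TimeCreated[timediff(@SystemTime) <= {window_ms}]")
    if not clauses:
        return "*"  # no criteria: match every event
    return "*[System[" + " and ".join(clauses) + "]]"


print(build_xpath_filter(["critical", "error"], last_hours=24))
# → *[System[(Level=1 or Level=2) and TimeCreated[timediff(@SystemTime) <= 86400000]]]
```

Building the query in code makes it easy to reuse the same filter across machines or scripts rather than re-clicking it in the dialog each time.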

Audit policies let you control which types of events are logged. If you aim to identify and stop cyber-attacks at the earliest opportunity, it’s essential to apply your chosen policy settings to all machines in your organization, including individual workstations, as hackers often target these.
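
On a single machine, audit policy can be set from an elevated prompt with the built-in `auditpol` tool; across a domain, Group Policy (Advanced Audit Policy Configuration) is the usual way to push the same settings everywhere. The sketch below only generates `auditpol /set` command lines. The choice of subcategories is an illustrative assumption matched to the events discussed later in this article, and the names shown are the English ones.

```python
# Audit subcategories (English names, as used by auditpol) that cover
# activity discussed in this article; an illustrative selection only.
SUBCATEGORIES = [
    "Logon",
    "Account Lockout",
    "User Account Management",
    "Security Group Management",
    "Audit Policy Change",
    "Process Creation",
]


def auditpol_commands(subcategories, success=True, failure=True):
    """Return one auditpol command line per subcategory, enabling or
    disabling success and failure auditing as requested."""
    success_flag = "enable" if success else "disable"
    failure_flag = "enable" if failure else "disable"
    return [
        f'auditpol /set /subcategory:"{name}" '
        f"/success:{success_flag} /failure:{failure_flag}"
        for name in subcategories
    ]


for command in auditpol_commands(SUBCATEGORIES):
    print(command)
```

Running `auditpol /get /category:*` afterwards shows the effective settings, which is a quick way to confirm that a workstation really received the policy.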

Furthermore, if you’re responsible for archiving event logs for audit or regulatory purposes, ensure you check the properties for each log file and configure the log file location, retention period, and overwrite settings.
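
The same log properties can be scripted with `wevtutil sl` (set-log), which is handy when many machines need identical settings. The sketch below maps the three “When maximum event log size is reached” options in Event Viewer to wevtutil’s `/rt` (retention) and `/ab` (auto-backup) switches and builds an argument list; the helper name and mode keys are hypothetical, and the list would be run on Windows from an elevated session, for example with `subprocess.run(args, check=True)`.

```python
# Event Viewer's three "When maximum event log size is reached" options,
# expressed as wevtutil's /rt (retention) and /ab (auto-backup) values.
# wevtutil requires /rt:true whenever /ab:true is used.
OVERWRITE_MODES = {
    "overwrite_as_needed": ("false", "false"),
    "archive_when_full": ("true", "true"),
    "do_not_overwrite": ("true", "false"),
}


def wevtutil_set_log(log_name, max_size_mb, mode, log_path=None):
    """Build a `wevtutil sl` argument list for one log (hypothetical helper)."""
    retain, auto_backup = OVERWRITE_MODES[mode]
    args = [
        "wevtutil", "sl", log_name,
        f"/ms:{max_size_mb * 1024 * 1024}",  # wevtutil expects bytes
        f"/rt:{retain}",
        f"/ab:{auto_backup}",
    ]
    if log_path:
        args.append(f"/lfn:{log_path}")  # new location for the .evtx file
    return args


print(wevtutil_set_log("Security", 1024, "archive_when_full"))
```

“Archive when full” keeps every event locally, but the archived files still need to be moved somewhere safe before the disk fills up.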

Working with Windows event logs

Although Event Viewer gives you access to your log data, it shows entries for one machine at a time, and you need to log into or connect to the machine in question to see them.

However, for all but the smallest operations, regularly logging into or connecting to individual machines to view log files is impractical.

At best, you can use this method to investigate past security incidents or diagnose known issues, but you miss out on the opportunity to use log data to spot early signs of trouble.

If you want to use log data to monitor your systems proactively and detect threats early, you first need to forward your event logs to a central location and then analyze them in real time.

There are various ways to do this, including setting up a Windows Event Forwarding subscription or installing an agent on each device to ship logs to your chosen destination.
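
To make the agent approach concrete, here is a minimal sketch of its shipping half: it groups events into batches and hands each batch, encoded as newline-delimited JSON, to a `send` function. Reading events from the local log is left out, so the sketch assumes events already arrive as Python dicts; the function names, batch size, and collector URL are hypothetical, and a production agent would also need buffering, retries, and authentication.

```python
import json
import urllib.request
from typing import Callable, Iterable, List


def _encode(batch: List[dict]) -> bytes:
    """Serialize a batch as newline-delimited JSON."""
    return "\n".join(json.dumps(e, sort_keys=True) for e in batch).encode("utf-8")


def ship_events(events: Iterable[dict], send: Callable[[bytes], None],
                batch_size: int = 100) -> int:
    """Pass events to `send` in batches; return the number of batches sent."""
    batch: List[dict] = []
    sent = 0
    for event in events:
        batch.append(event)
        if len(batch) >= batch_size:
            send(_encode(batch))
            sent += 1
            batch = []
    if batch:  # flush the final, partly filled batch
        send(_encode(batch))
        sent += 1
    return sent


def http_send(payload: bytes) -> None:
    """POST one batch to a collector. The URL is a placeholder."""
    request = urllib.request.Request(
        "https://logs.example.com/ingest",  # hypothetical endpoint
        data=payload,
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        response.read()
```

Keeping `send` as a parameter separates batching from transport, so the same loop can target an HTTP collector via `http_send`, a file, or an in-memory list for testing.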

Forwarding your logs to a central server also simplifies the retention and backup of log files. This is particularly helpful if regulatory schemes, like HIPAA, require storing log entries for several years after they were generated.

Monitoring events

When using log data proactively, it pays to cast a wide net. The types of events that can signal something unexpected or indicate a situation that deserves close monitoring include:

- Any change in the Windows Firewall configuration. As all planned changes to your setup should be documented, anything unexpected should trigger alarm bells.

- Any change to user groups or accounts, including creating new accounts. Once an attacker has compromised an account, they may try to increase their privileges.

- Successful or failed login attempts and remote desktop connections, particularly if these occur outside business hours or come from unexpected IP addresses or locations.

- Account lockouts. These may indicate a brute-force attempt, so it’s always worth identifying the machine in question and investigating further to establish whether it was just an honest mistake.

- Application allowlisting. Keep an eye out for scripts and processes that don’t usually run on your systems, as attackers may add these to facilitate an attack.

- Changes to file system permissions. Look for changes to root directories or system files that should not routinely be modified.

- Changes to the registry. While you can expect some registry keys, such as those recording recently used files, to change regularly, changes to others, such as those controlling the programs that run at startup, could indicate something more sinister. Similarly, any change to the permissions on the password hash store should be investigated.

- Changes to audit policies. Hackers can ensure future activity stays under the radar by changing what events are logged.

- Clearing event logs. It’s not uncommon for attackers to try to cover their tracks. If logs are being cleared locally, it’s worth finding out why (while breathing a sigh of relief that you had the entries forwarded automatically to a central location and haven’t lost any data).
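
A central pipeline can map several of the activities above to specific event IDs. The sketch below keys a watchlist on (channel, event ID), because IDs are only unique within a provider and log, and treats a successful logon (4624) as interesting only when its logon type is 10 (RemoteInteractive, i.e. Remote Desktop). The flattened dict schema with `Channel`, `EventID`, and `LogonType` keys is an assumption, and the list is a starting point rather than a complete rule set: many of these events only appear once the matching audit policy (and, for registry or file events, an object SACL) is configured.

```python
from typing import Optional

# (channel, event ID) pairs for several activities in the list above.
WATCHLIST = {
    ("Security", 4946): "firewall rule added",
    ("Security", 4947): "firewall rule modified",
    ("Security", 4948): "firewall rule deleted",
    ("Security", 4720): "user account created",
    ("Security", 4728): "member added to global security group",
    ("Security", 4732): "member added to local security group",
    ("Security", 4756): "member added to universal security group",
    ("Security", 4625): "failed logon",
    ("Security", 4740): "account locked out",
    ("Security", 4670): "object permissions changed",
    ("Security", 4657): "registry value modified",
    ("Security", 4719): "system audit policy changed",
    ("Security", 1102): "security log cleared",
    ("System", 104): "event log cleared",
}

# Logon type 10 (RemoteInteractive) marks a Remote Desktop logon.
RDP_LOGON_TYPE = "10"


def classify(event: dict) -> Optional[str]:
    """Return a label if the event is on the watchlist, else None.

    Assumes events are flattened dicts with 'Channel', 'EventID' and, for
    logons, 'LogonType' keys (hypothetical schema)."""
    key = (event.get("Channel"), event.get("EventID"))
    if key == ("Security", 4624):
        # Rendered event XML stores LogonType as text, so compare as strings.
        if str(event.get("LogonType")) == RDP_LOGON_TYPE:
            return "remote desktop logon"
        return None
    return WATCHLIST.get(key)
```

Whether a given label should raise an alert, and how urgently, depends on your environment; a new firewall rule on a domain controller deserves more attention than one on a developer laptop.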

While the above is not an exhaustive list, it demonstrates that you should set your Windows audit policies to log more than just failures.
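
One reason successes matter: a burst of failed logons followed by a successful one from the same address can mean a password guess finally worked, and a failures-only policy would record the guessing but not the moment the attacker got in. The sketch below flags that pattern with a per-IP sliding window. The dict schema (`EventID`, `IpAddress`, and a datetime `TimeCreated`) is assumed, and the threshold of five failures within ten minutes is an arbitrary example to tune for your environment.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def suspicious_successes(events, threshold=5, window=timedelta(minutes=10)):
    """Return successful logons (4624) that follow at least `threshold`
    failed logons (4625) from the same source IP within `window`."""
    recent_failures = defaultdict(deque)  # source IP -> failure timestamps
    hits = []
    for event in sorted(events, key=lambda e: e["TimeCreated"]):
        failures = recent_failures[event.get("IpAddress")]
        now = event["TimeCreated"]
        # Forget failures that have fallen out of the sliding window.
        while failures and now - failures[0] > window:
            failures.popleft()
        if event["EventID"] == 4625:
            failures.append(now)
        elif event["EventID"] == 4624 and len(failures) >= threshold:
            hits.append(event)
    return hits


start = datetime(2024, 1, 1, 3, 0)
sample = [
    {"EventID": 4625, "IpAddress": "203.0.113.7",
     "TimeCreated": start + timedelta(seconds=10 * i)}
    for i in range(6)
]
sample.append({"EventID": 4624, "IpAddress": "203.0.113.7",
               "TimeCreated": start + timedelta(minutes=2)})
print(len(suspicious_successes(sample)))  # → 1
```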

Wrapping up

Windows event log analysis can capture a wide range of activities and provide valuable insights into the health of your system.

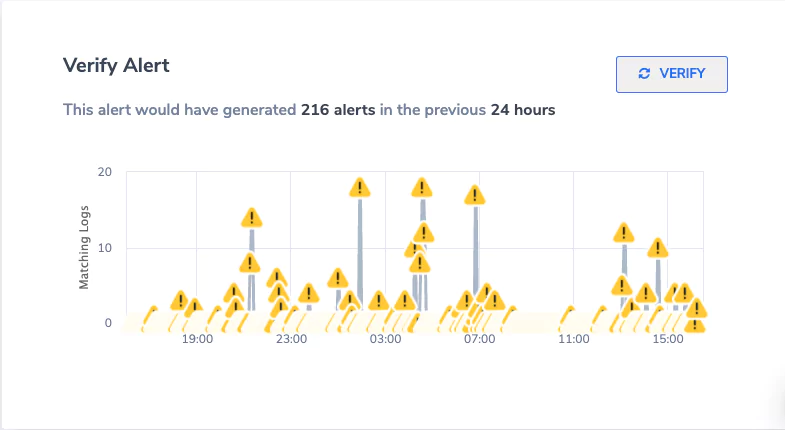

We’ve discussed some events to look out for, many of which you may want to be alerted to automatically. However, cybersecurity is a constantly moving target, with new attack vectors emerging all the time, so you must continuously watch for anything unusual to protect your organization.

By collating your Windows event logs in a central location and applying machine learning, you can offload much of the effort of detecting anomalies. Coralogix uses machine learning to detect unusual behavior while filtering out false positives. Learn more about log analytics with Coralogix, or start shipping your Windows event logs now.