Log Observability and Log Analytics

Logs play a key role in understanding your system’s performance and health. Good logging practice is also vital to power an observability platform across your system. Log monitoring software generally handles the collection and analysis of logs and other system metrics. Log analysis means deriving insights from those logs, which then feed into observability.

Observability, as we’ve said before, is really the gold standard for knowing everything about your system. In this article, we’re going to examine how to work with logs from collection through to analysis, as well as some ways to get the most out of your logs.

Working with logs

To carry out a log analysis, you first need to generate the logs, collect them, and then store them. These steps are vital for log analysis and observability.

Log generation

Nearly all applications and components in your system will generate logs by default. For the most part, it’s simply a matter of enabling logs and knowing where the application, platform, or network deposits them. Some providers, such as AWS with CloudWatch, offer freemium logging as standard, where you pay for more granular data.

Log collection

Collecting logs for analysis is the next logical step, once you’ve got your applications producing logs. You might think about writing a workflow to draw all of these logs into one place, but really, it’s much simpler to let an application do it for you. Coralogix has native integrations with numerous AWS services, GCP, Azure, Kubernetes, Java, Logstash, firewalls, and much more. These integrations allow Coralogix to collect the logs for you automatically.

Log storage and retention

If you’re implementing a logging solution from scratch, it’s difficult to know exactly which logs you want to keep and for how long. The longer you store log data for, the higher the cost. Retention lengths are often determined by compliance requirements, as well as external factors such as cyber threats.

Given the length of time hackers reportedly stay in a system before detection, longer log retention is becoming increasingly common. Fortunately, Coralogix has an ML-powered cost-optimization tool to ensure that you aren’t paying more for your logs than you need to.

Querying logs

Now that you’ve got the logs, querying them is the next step in your analysis. Querying logs is the least sophisticated way of analyzing log data, but sometimes it’s exactly what you need to find what you’re looking for.

Querying logs with syntax

Log queries are traditionally written in a query syntax that returns the set of log entries matching the terms of your query. While this is relatively simple, it can become tricky. Logs, for example, can come in a structured or unstructured format. Different applications may also present logs in different ways (AWS, Azure, and Google all have different logging guidelines), which makes a broader cross-system search more difficult.
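To make the structured versus unstructured problem concrete, here is a minimal Python sketch that maps both kinds of log line onto one common shape before querying. The field names (`time`, `severity`, `app`, `msg`) and the plain-text layout are assumptions made up for illustration, not the format of any particular provider.

```python
import json
import re

# Hypothetical plain-text layout: "<timestamp> <LEVEL> <service>: <message>"
TEXT_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<service>[\w-]+):\s+(?P<message>.*)$"
)


def normalize(line):
    """Return a dict with timestamp, level, service, and message, or None."""
    line = line.strip()
    if line.startswith("{"):
        # Structured (JSON) log line; the key names are assumed for this sketch.
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            return None
        return {
            "timestamp": record.get("time"),
            "level": str(record.get("severity", "")).upper(),
            "service": record.get("app"),
            "message": record.get("msg"),
        }
    # Unstructured line: fall back to the regular expression.
    match = TEXT_PATTERN.match(line)
    return match.groupdict() if match else None


def query(lines, level):
    """Return every normalized record whose level matches."""
    records = (normalize(line) for line in lines)
    return [r for r in records if r and r["level"] == level]


sample = [
    '{"time": "2024-01-01T10:00:00Z", "severity": "error", "app": "checkout", "msg": "payment declined"}',
    "2024-01-01T10:00:05Z ERROR auth-api: token expired",
    "2024-01-01T10:00:07Z INFO auth-api: login ok",
]
errors = query(sample, "ERROR")
```

Once every line is in the same shape, a single query works across both sources. Every new log format needs its own mapping, though, which is where hand-rolled approaches start to get tricky.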

Querying logs for observability

Coralogix has several capabilities that make querying logs an enabler for observability. Log Query allows for all log data under management to be queried in a single, uniform way. This means you could cross-compare logs from different applications and platforms in one query.

You can also query logs in Coralogix using SQL, which enables more complex syntax than you’ll find in visualization tools like Kibana. You can then visualize the results of these advanced queries in Kibana, getting you on your way to observability.
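As a rough illustration of what SQL brings to log querying, the sketch below loads a few made-up log rows into an in-memory SQLite database and counts errors per source. The table name, columns, and data are hypothetical, and this is not Coralogix’s SQL interface, just the general idea of comparing sources in one query.

```python
import sqlite3

# In-memory database standing in for a log store (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE logs (ts TEXT, source TEXT, level TEXT, message TEXT)")
conn.executemany(
    "INSERT INTO logs VALUES (?, ?, ?, ?)",
    [
        ("2024-01-01T10:00:00Z", "aws", "ERROR", "timeout calling payments"),
        ("2024-01-01T10:00:02Z", "kubernetes", "ERROR", "pod restarted"),
        ("2024-01-01T10:00:03Z", "aws", "INFO", "request served"),
        ("2024-01-01T10:00:09Z", "aws", "ERROR", "timeout calling payments"),
    ],
)

# One query compares error counts across different platforms.
rows = conn.execute(
    """
    SELECT source, COUNT(*) AS errors
    FROM logs
    WHERE level = 'ERROR'
    GROUP BY source
    ORDER BY errors DESC, source
    """
).fetchall()
print(rows)
```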

Aggregating logs

Log aggregation is an important part of log analysis and observability. It allows trends to be identified, which is pivotal to building an understanding of your system’s performance. Traditionally, this might be done in a single database but, as mentioned above, the lack of standardization between applications makes this a difficult target for log analysis.

From aggregation to insight

Aggregating logs is a powerful way of getting rid of the “noise” that a complex system creates. From known errors to new ones caused by your latest release, your log repository is a busy place. Manually digging through gigabytes of logs to isolate the relevant data, looking for a trend, will not get you far.

Loggregation automates log clustering using machine learning, grouping together noisy dumps of data into useful insights. You can customize the templates Loggregation uses to tailor your insights and supercharge your observability platform.
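To give a feel for what template-based clustering means, here is a deliberately simple Python sketch that masks the variable parts of a message (numbers and long hexadecimal identifiers) and groups messages by the resulting template. This is a regex stand-in chosen for illustration, not the machine learning Loggregation uses, and the masking rules and sample messages are assumptions.

```python
import re
from collections import defaultdict

# Order matters: mask long hex identifiers before plain numbers.
MASKS = [
    (re.compile(r"\b[0-9a-f]{8,}\b"), "<ID>"),
    (re.compile(r"\b\d+(?:\.\d+)*\b"), "<NUM>"),
]


def to_template(message):
    """Replace variable tokens with placeholders to expose the message template."""
    for pattern, placeholder in MASKS:
        message = pattern.sub(placeholder, message)
    return message


def cluster(messages):
    """Group raw messages under their shared template."""
    groups = defaultdict(list)
    for message in messages:
        groups[to_template(message)].append(message)
    return dict(groups)


messages = [
    "user 1042 logged in from 10.0.0.7",
    "user 77 logged in from 10.0.0.9",
    "request 9f8e7d6c5b4a failed after 3 retries",
    "request 0a1b2c3d4e5f failed after 5 retries",
]
clusters = cluster(messages)
for template, members in clusters.items():
    print(len(members), template)
```

Four noisy lines collapse into two templates, which is the effect you want at scale: fewer distinct things to look at, each with a count attached.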

Log trends

Once your logs have been aggregated and clustered, you’re ready to spot the trends. Log trends are the peak of log analysis and a key part of observability.

Log trends use aggregated log data to help you identify bottlenecks, performance problems, or even cyber threats, based on data gathered over time.
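A trend is, at its simplest, a count over time. The sketch below buckets error records by minute so that a rise or fall becomes visible. The `(timestamp, level)` record shape and the sample data are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime


def errors_per_minute(records):
    """Count ERROR records per minute bucket, returned in time order."""
    counts = Counter()
    for timestamp, level in records:
        if level != "ERROR":
            continue
        # Truncate the timestamp to the start of its minute.
        bucket = datetime.fromisoformat(timestamp).replace(second=0, microsecond=0)
        counts[bucket] += 1
    return sorted(counts.items())


records = [
    ("2024-01-01T10:00:05", "ERROR"),
    ("2024-01-01T10:00:40", "ERROR"),
    ("2024-01-01T10:01:10", "INFO"),
    ("2024-01-01T10:02:30", "ERROR"),
]
trend = errors_per_minute(records)
for bucket, count in trend:
    print(bucket.isoformat(), count)
```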

Visualizing trends for observability

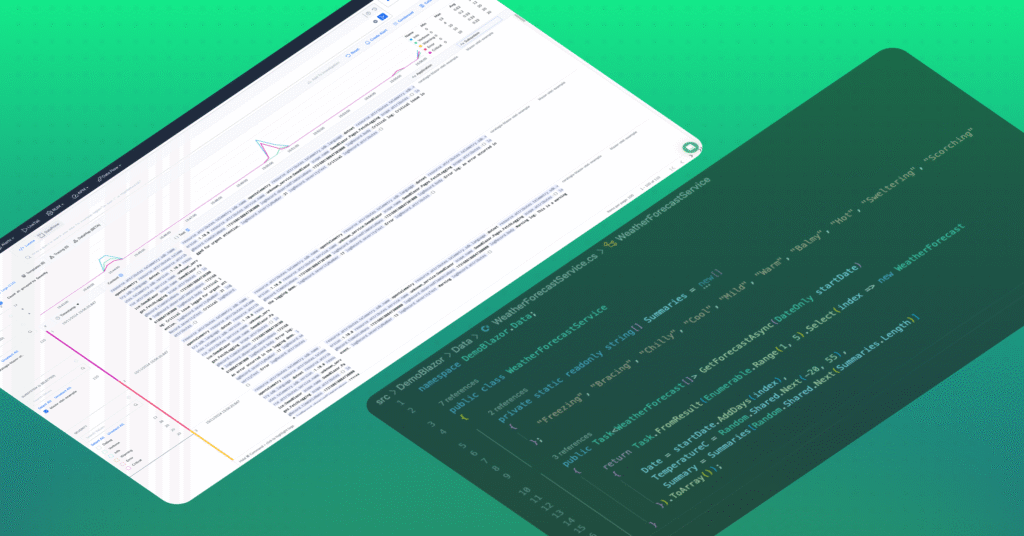

Observability gives you the true view of every aspect of your system and infrastructure, at any given time. This might be your firewall, your AWS infrastructure, or even metrics from your marketing system – all can be correlated to help understand where things are going right or wrong.

Coralogix not only aggregates and clusters this data for you but also gives you the option to view real-time data in a native Kibana view. There are numerous other plugins available for tools such as Tableau or Grafana, so your team can view and manipulate the data in the tools they’re already comfortable with.

After all, it’s easier to spot a spike on a graph than it is to see it in a few hundred rows of logs.

Identifying anomalies with log observability

With all of the above in place, you’re in a great position to carry out log analysis. However, it’s important to know how to use this capability to spot anomalies quickly. Once again, technology is here to help in some of the most critical areas prone to failures.

Imperceptible anomalies

Even with all your logs aggregated and nicely visualized on a Grafana dashboard, you might struggle to spot small deviations from your system’s baseline performance. That’s where machine learning tools can come to the rescue. For example, a rise in error volume is a key indicator that you’re having performance or authentication issues somewhere. Error Volume Anomaly and Flow Anomaly are two machine learning tools that formulate a baseline of your system and help identify when there’s a deviation from the norm.
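The idea of a baseline and a deviation from it can be sketched with basic statistics. The example below flags an hourly error count that sits more than three standard deviations above the historical mean. This is a plain z-score check used for illustration, not how Error Volume Anomaly or Flow Anomaly work internally, and the threshold and sample counts are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, current, threshold=3.0):
    """Flag `current` if it is more than `threshold` standard deviations above the mean."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase is a deviation.
        return current > baseline
    return (current - baseline) / spread > threshold


# Hypothetical error counts for the previous eight hours.
hourly_errors = [12, 15, 11, 14, 13, 12, 16, 14]

print(is_anomalous(hourly_errors, 15))
print(is_anomalous(hourly_errors, 40))
```

A fixed threshold like this ignores seasonality (busy Mondays, quiet nights), which is one reason learned baselines tend to do better on real traffic.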

Bouncing back from releases

When customer-facing platforms undergo hundreds of micro-releases per day, keeping track of their impact on your system is important. It makes rolling back to a stable point so much easier and helps you quantify the effect of a change on your system. Benchmark Reports and Version Tags allow you to trace every change to your system, the overall effect, and visualize the outcomes. Pretty cool, eh?
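If each log record carries a version tag, quantifying a release can be as simple as comparing error rates between versions. The sketch below assumes a hypothetical `(version, level)` record shape and made-up data. It is not the implementation behind Benchmark Reports or Version Tags.

```python
from collections import defaultdict


def error_rate_by_version(records):
    """Map each version tag to its share of ERROR records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for version, level in records:
        totals[version] += 1
        if level == "ERROR":
            errors[version] += 1
    return {version: errors[version] / totals[version] for version in totals}


records = [
    ("v1.4.0", "INFO"), ("v1.4.0", "INFO"), ("v1.4.0", "ERROR"), ("v1.4.0", "INFO"),
    ("v1.4.1", "ERROR"), ("v1.4.1", "INFO"), ("v1.4.1", "ERROR"), ("v1.4.1", "INFO"),
]
rates = error_rate_by_version(records)
for version, rate in rates.items():
    print(version, rate)
```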

Don’t Do It Yourself

As you might have detected throughout this article, there is a lot of “heavy lifting” that goes into standing up log analytics for observability. It’s challenging to gather all of your logs and then collate, store, aggregate, and analyze them all by yourself, or even with a team of devs. On top of that, you have to maintain logging best practices.

Do the smart thing. Coralogix is the enterprise-grade observability and log analytics platform, built to scale for you. With machine learning and years of experience built in, you won’t find a better companion to get you to total system observability.