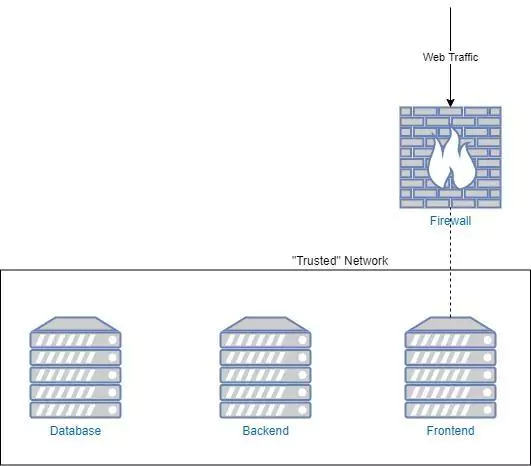

The widespread adoption of cloud infrastructure has proven to be highly beneficial, but it has also introduced new challenges and added costs, especially when it comes to security.

As organizations migrate to the cloud, they relinquish access to their servers and all information that flows between them and the outside world. This data is fundamental to both security and observability.

Cloud vendors such as AWS are attempting to compensate for this undesirable side effect by creating a selection of services which grant the user access to different parts of the metadata. Unfortunately, the disparate nature of these services only creates another problem. How do you bring it all together?

The Coralogix Cloud Security solution enables organizations to quickly centralize and improve their security posture, detect threats, and continuously analyze digital forensics without the complexity, long implementation cycles, and high costs of other solutions.

The Security Traffic Analyzer Can Show You Your Metadata

Using the Coralogix Security Traffic Analyzer (STA), you gain access to the tools that you need to analyze, monitor and alert on your data, on demand.

Here’s a list of data types that are available for AWS users:

[table id=43 /]

Well… That doesn’t look very promising, right? This is exactly the reason why we developed the Security Traffic Analyzer (STA).

What can the Security Traffic Analyzer do for you?

Simple Installation

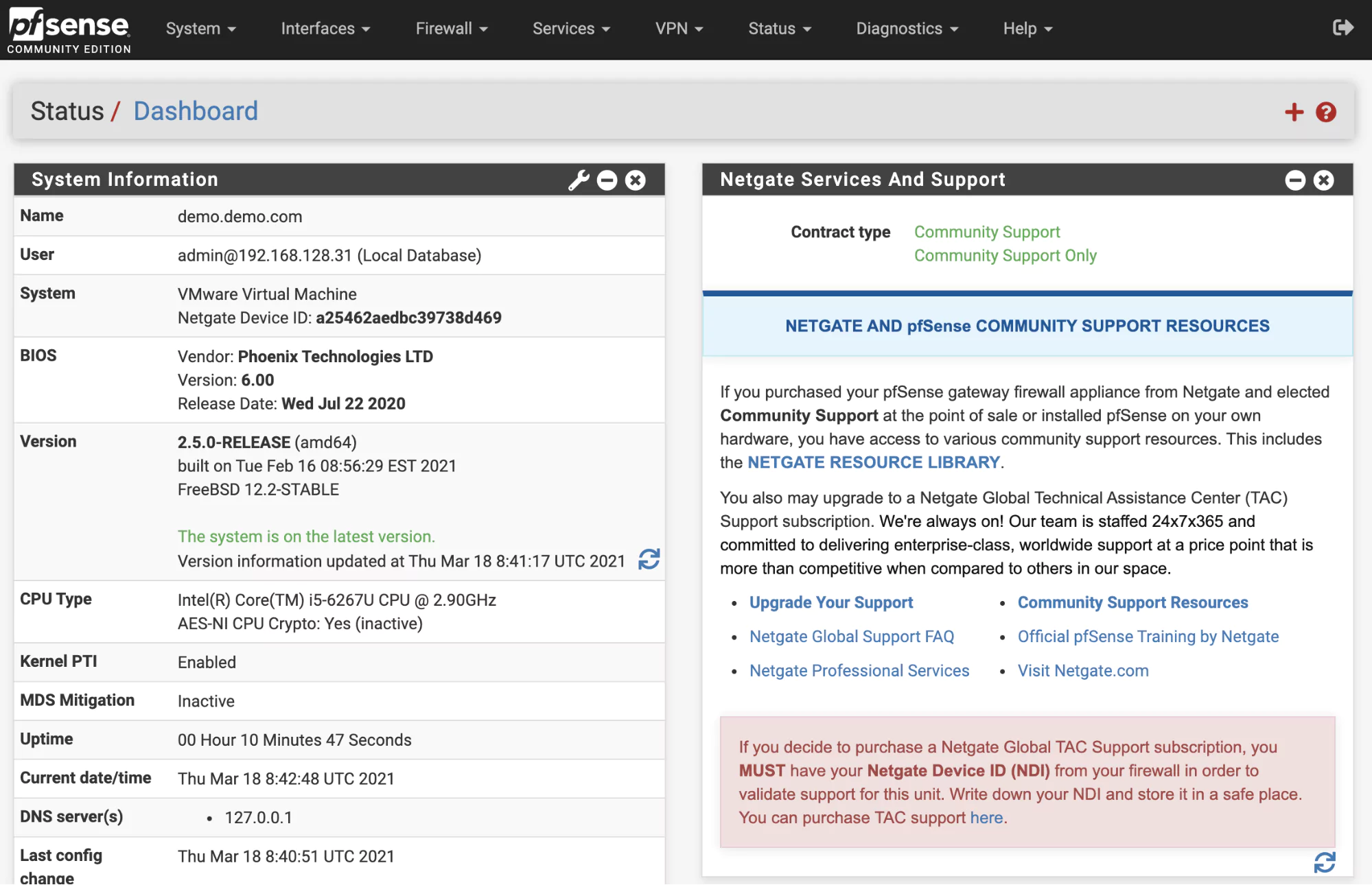

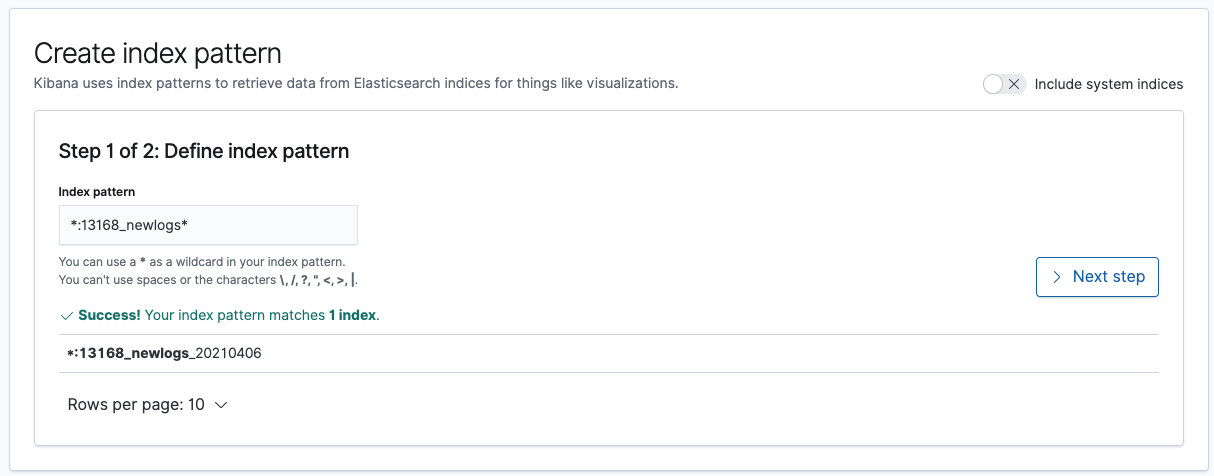

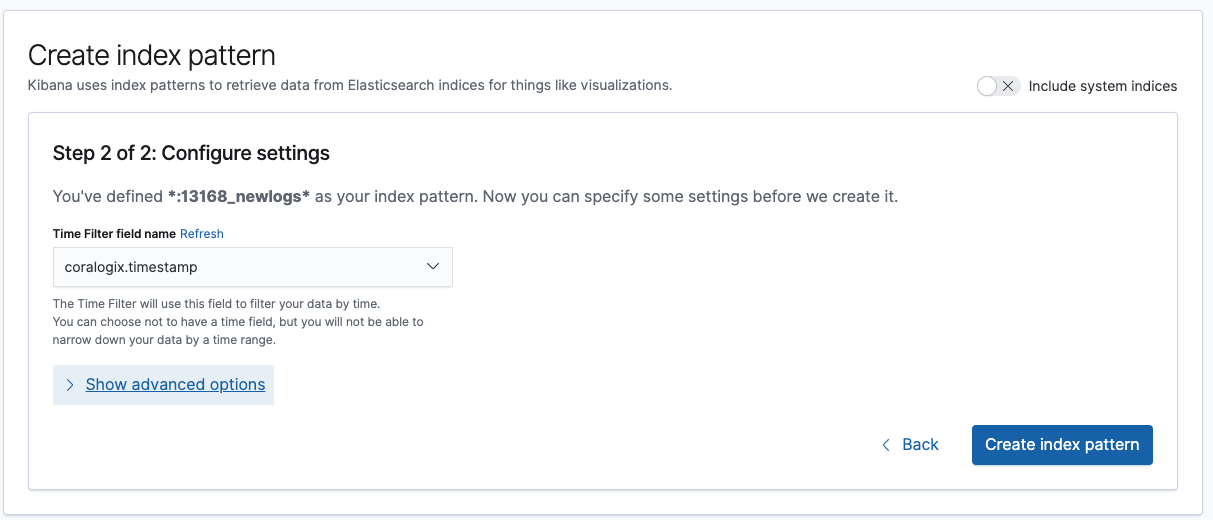

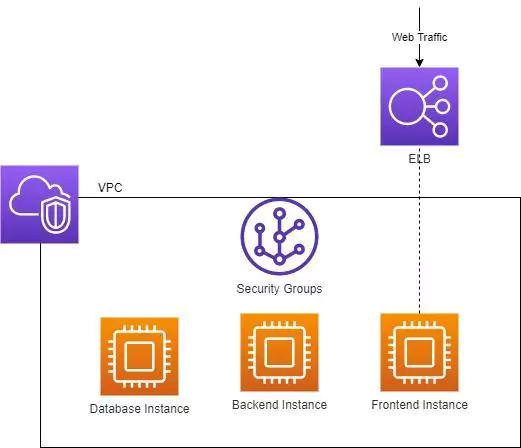

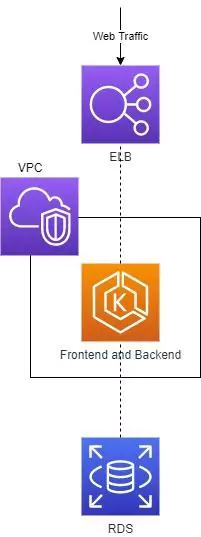

When you install the STA, you get an AWS instance and several other related resources.

Mirror Your Existing VPC Traffic

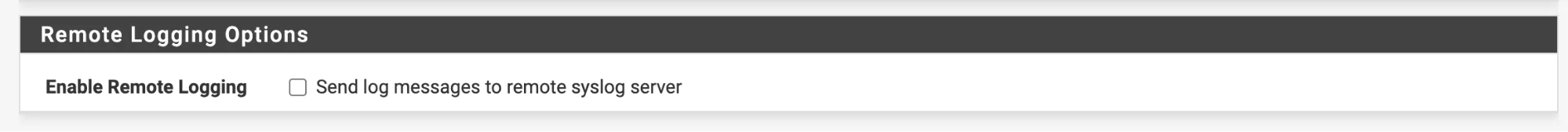

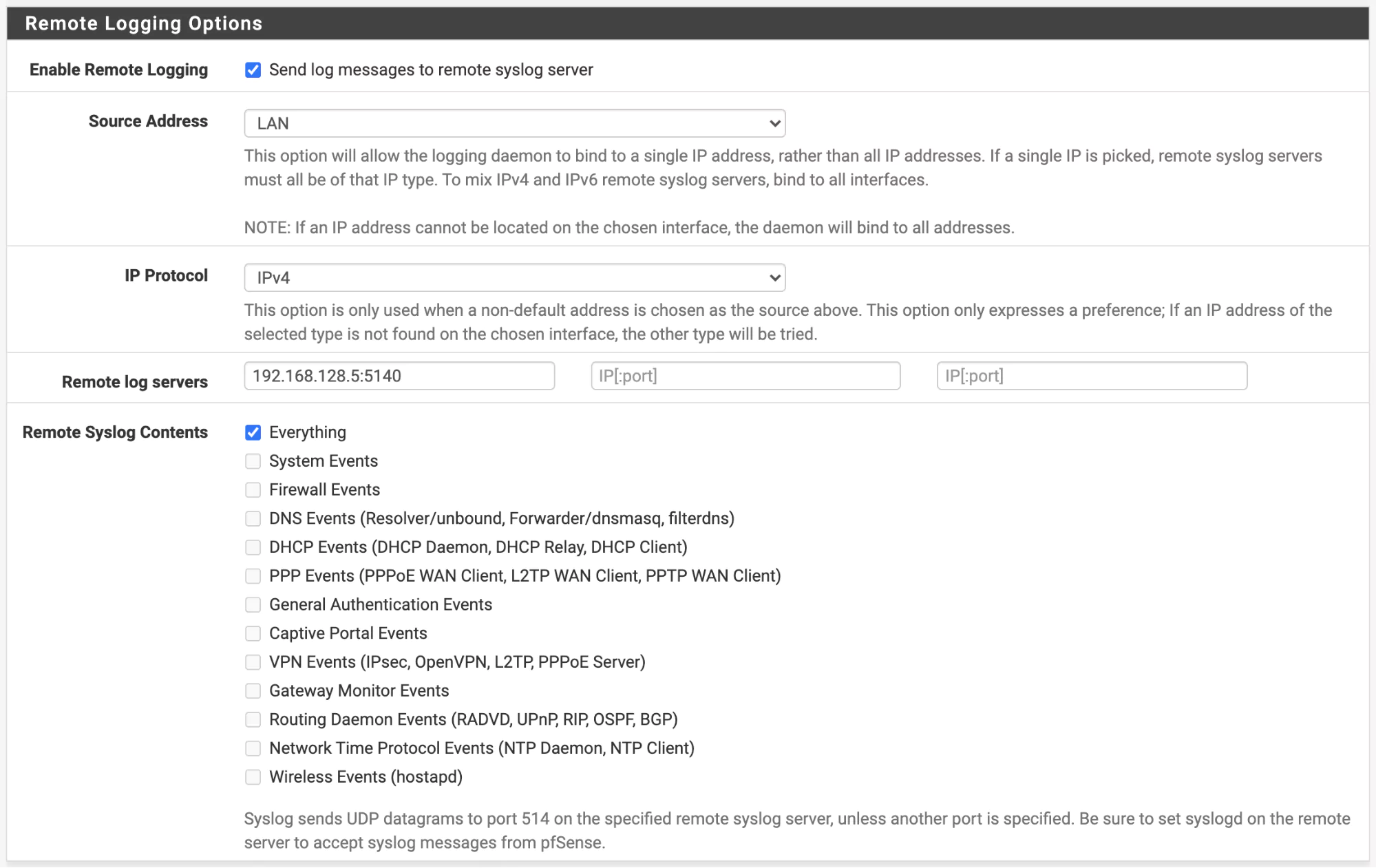

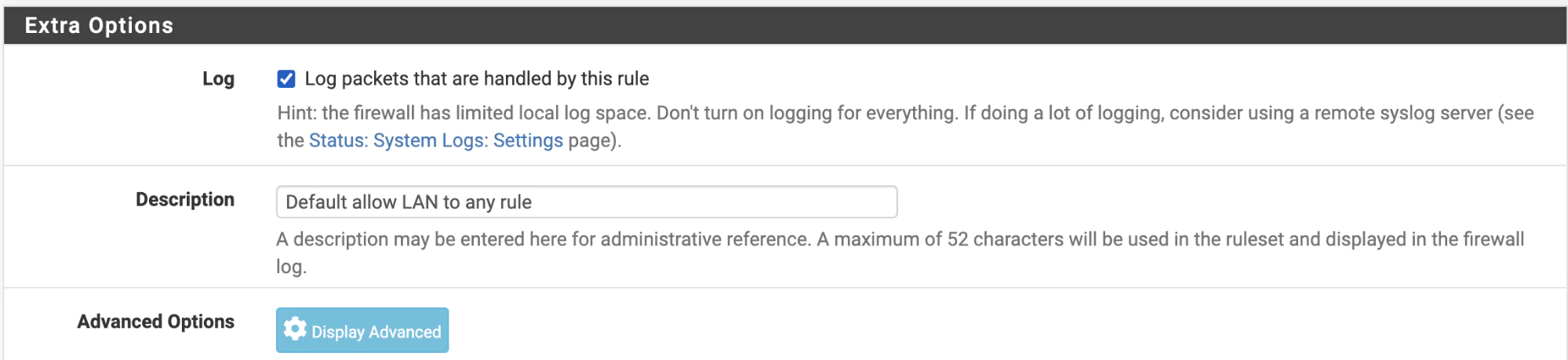

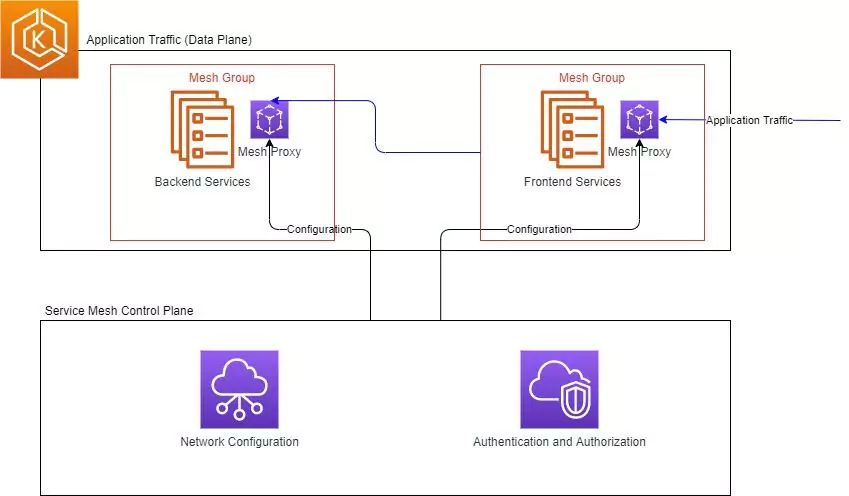

You can mirror your server traffic to the STA using VPC Traffic Mirroring. The STA automatically captures, analyzes, and optionally stores that traffic while creating meaningful logs in your Coralogix account, which you can then use to build dashboards and alerts. To make this even easier, we created the VPC Traffic Mirroring Configuration Automation handler, which automatically updates your mirroring configuration based on instance tags and tag values in your AWS account. This allows you to define your VPC traffic mirroring configuration declaratively.
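To make the idea of a declarative, tag-driven mirroring configuration concrete, here is a minimal sketch in Python. It is not the handler's actual code, which this article does not show. The `Instance` type, the `mirror` tag key, and both function names are hypothetical, and the sketch only computes which network interfaces should be mirrored rather than calling any AWS API.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical, simplified view of an EC2 instance."""
    instance_id: str
    eni_id: str                      # network interface that would be mirrored
    tags: dict = field(default_factory=dict)


def desired_mirror_sources(instances, tag_key, tag_values):
    """Return the ENI IDs of instances whose tag_key has one of tag_values."""
    return {i.eni_id for i in instances if i.tags.get(tag_key) in tag_values}


def plan_changes(desired, current):
    """Compare the desired set of mirrored ENIs with the current one."""
    return {
        "create": sorted(desired - current),   # sessions to add
        "delete": sorted(current - desired),   # sessions to remove
    }


# Example: only instances tagged mirror=yes should be mirrored (assumed tag).
instances = [
    Instance("i-1", "eni-1", {"mirror": "yes"}),
    Instance("i-2", "eni-2", {"mirror": "no"}),
    Instance("i-3", "eni-3"),
]
desired = desired_mirror_sources(instances, "mirror", {"yes"})
plan = plan_changes(desired, current={"eni-2"})
print(plan)  # {'create': ['eni-1'], 'delete': ['eni-2']}
```

The point of the declarative style is that you only state which tags mark an instance for mirroring. The difference between the desired and current state is derived, so retagging an instance is enough to change what gets mirrored.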

Machine Learning-Powered Analysis

The STA employs ML-powered algorithms that alert you to potential threats. You retain full control: you can tune or disable any of these alerts and easily create new ones of any type.

Automatically Enrich Your Logs

The STA automatically enriches the data passing through it, such as domain names, certificate names, and much more, using data from several other sources. This allows you to create more meaningful alerts and reduce false positives without increasing false negatives.
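As a rough illustration of why enrichment helps with alert quality, the sketch below attaches extra context to an event and then alerts on that context. It is an assumption-laden example, not the STA's implementation: the field names (`domain`, `domain_info`, `age_days`), the lookup table, and the 30-day threshold are all hypothetical.

```python
def enrich_event(event, domain_intel):
    """Return a copy of event with domain context attached when available."""
    enriched = dict(event)
    info = domain_intel.get(event.get("domain", "").lower())
    if info is not None:
        enriched["domain_info"] = info
    return enriched


def should_alert(event, max_age_days=30):
    """Alert only on domains known to be newly registered (assumed rule)."""
    info = event.get("domain_info")
    return info is not None and info.get("age_days", max_age_days) < max_age_days


# Hypothetical intelligence source keyed by lowercase domain name.
domain_intel = {"example.test": {"age_days": 3}}

event = enrich_event({"domain": "Example.TEST", "src_ip": "10.0.0.5"}, domain_intel)
print(should_alert(event))  # True: the domain is only 3 days old
```

Without the added `domain_info`, a rule could only match on the raw domain string. With it, the rule can target a property such as domain age, which is how enrichment can narrow an alert without dropping the events that matter.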

What are the primary benefits of the Coralogix STA?

Ingest Traffic From Any Source

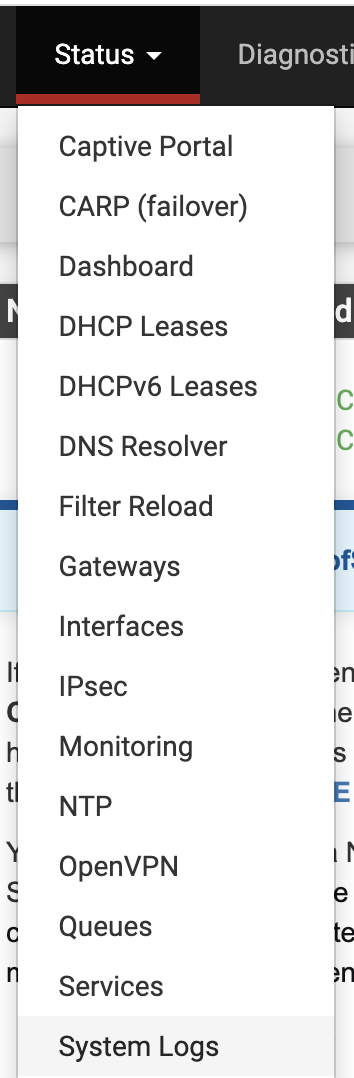

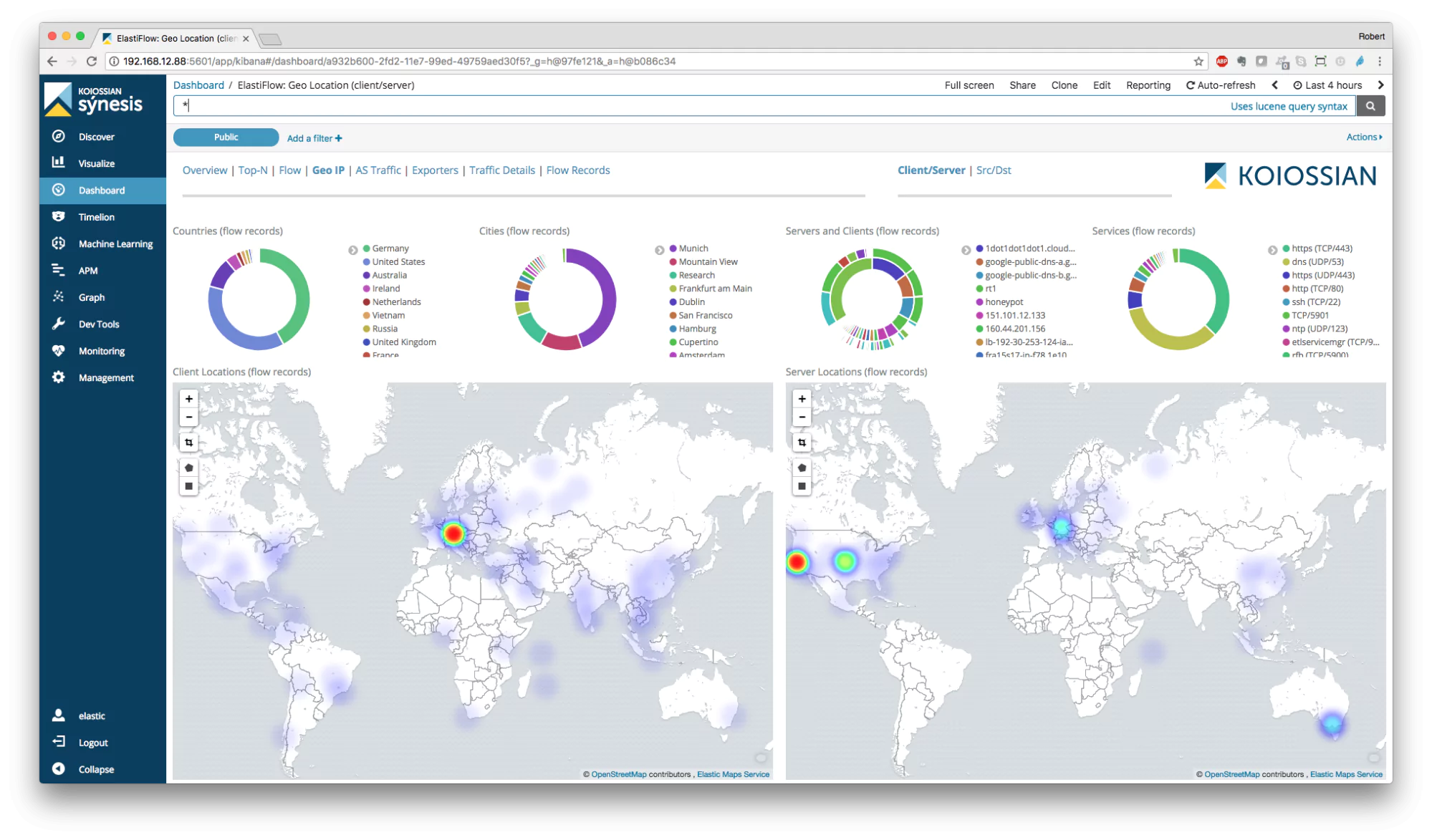

Connect any source of information to complete your security observability, including audit logs, CloudTrail, GuardDuty, or any other source. Monitor your security data in one of 100+ pre-built dashboards or easily build your own using our variety of visualization tools and APIs.

Customizable Alerts & Visualizations

The Coralogix Cloud Security solution comes with a predefined set of alerts, dashboards and Suricata rules. Unlike many other solutions on the market today, you maintain the ability to change any or all of them to tailor them to your organization’s needs.

One of the most painful issues that deters people from using an IDS solution is its notoriously high false-positive rate, but Coralogix makes this kind of issue easy to solve. Dynamic ML-powered alerts, dashboards, and Suricata rules are just a matter of 2-3 clicks.

Automated Incident Response

Although Coralogix focuses on detection rather than prevention, it is still possible to achieve both detection and better prevention by integrating Coralogix with any orchestration platform such as Cortex XSOAR and others.

Optimized Storage Costs

Security logs need to be correlated with packet data to provide the context needed for in-depth investigations. Setting up, processing, and storing packet data can be laborious and cost-prohibitive.

With the Coralogix Optimizer, you can reduce storage costs by up to 70% without sacrificing full security coverage and real-time monitoring. This new model enables you to get all of the benefits of an ML-powered logging solution at only a third of the cost and with more real-time analysis and alerting capabilities than before.

How Does the Coralogix STA Compare to AWS Services?

Here’s a full comparison between the STA and all the other methods discussed in this article:

[table id=44 /]

(1) Will be added soon in upcoming versions

As you can see, the STA is already the most effective solution for regaining control of and access to your metadata. In upcoming versions, we’ll also improve the level of network visibility by further enriching the data collected, allowing you to create even more fine-grained alerting rules.