Hybrid cloud architectures provide the flexibility to utilize both public and cloud environments in the same infrastructure. This enables scalability and power that is easy and cost-effective to leverage. However, an ecosystem containing components with dependencies layered across multiple clouds has its own unique challenges.

Adopting a hybrid log monitoring strategy doesn’t mean you need to start from scratch, but it does require a shift in focus and some additional considerations. You don’t need to reinvent the wheel as much as realign it.

In this article, we’ll take a look at what to consider when building a CDN monitoring stack or solution for a hybrid cloud environment.

Hybrid Problems Need Hybrid Solutions

Modern architectures are complex and fluid with rapid deployments and continuous integration of new components. This makes system management an arduous task, especially if your engineers and admins can’t rely on an efficient monitoring stack. Moving to a hybrid cloud architecture without overhauling your monitoring tools will only complicate this further, making the process disjointed and stressful.

Fortunately, there are many tools available for creating a monitoring stack that provides visibility in a hybrid cloud environment. With the right solutions implemented, you can unlock the astounding potential of infrastructures based in multiple cloud environments.

Mirroring Cloud Traffic On-Premise

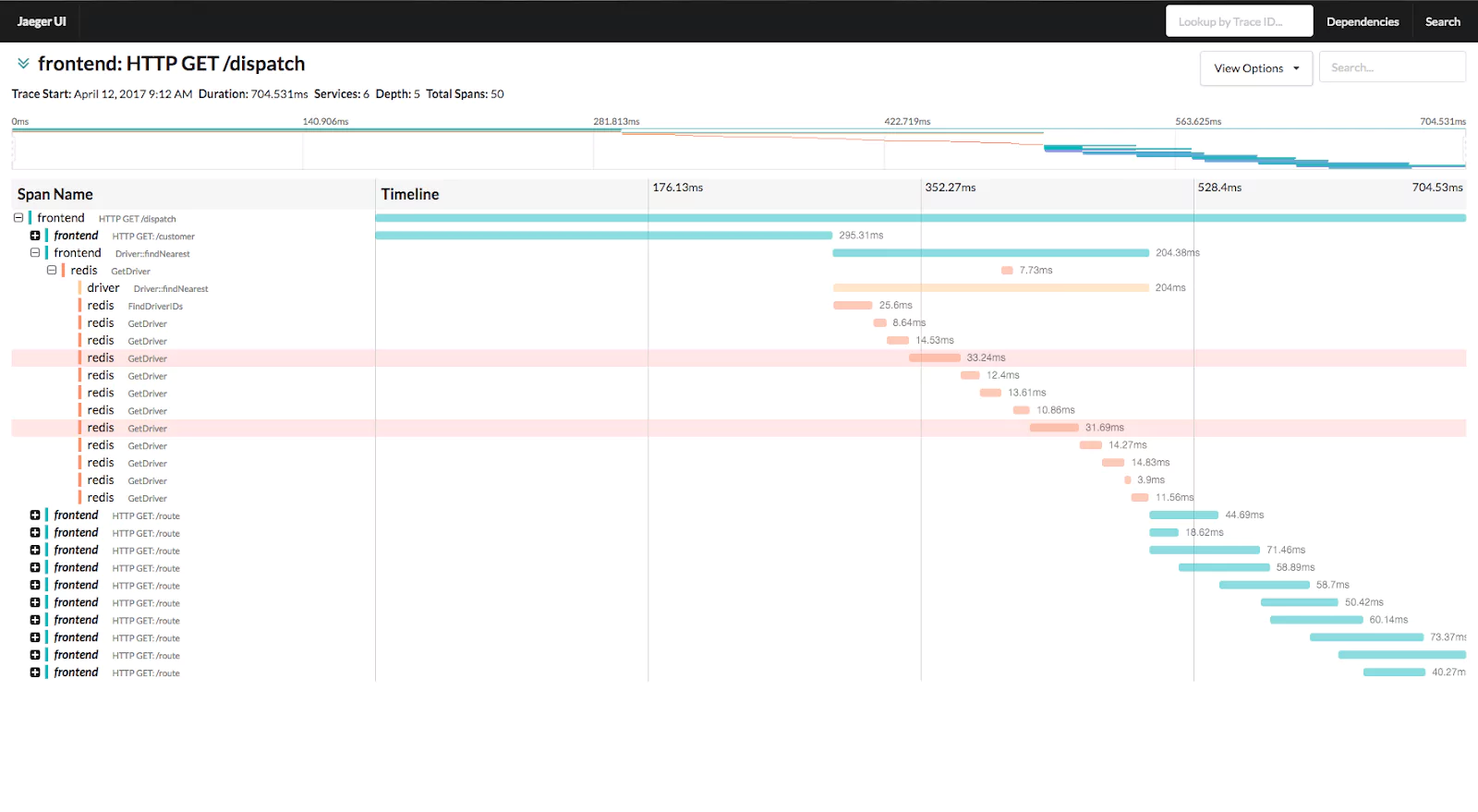

When implementing your hybrid monitoring stack, covering blind spots is a top priority. This is true for any visibility-focused engineering, but blind spots are especially problematic in distributed systems. It’s difficult to trace and isolate root causes of performance issues with data flowing across multiple environments. Doubly so if some of those environments are dark to your central monitoring stack.

One way to overcome this is to mirror all traffic to/between external clouds to your on-premise environment. Using a vTAP (short for virtual tap), capture and copy data flowing between cloud components and feed the ‘mirrored’ data into your on-premise monitoring stack.

Traffic mirroring with implemented vTAP software solutions ensures that all system and network traffic is visible, regardless of origin or destination. The ‘big 3’ public cloud providers (AWS, Azure, Google Cloud) offer features that enable mirroring at a packet level, and there are many 3rd party and open source vTAP solutions readily available on the market.

Packet-Level Monitoring for Visibility at the Point of Data Transmission

As mentioned, the features and tools offered by the top cloud providers allow traffic mirroring down to the packet level. This is very deliberate on their part. Monitoring traffic and data at a packet level is vital for any effective visibility solution in a hybrid environment.

In a hybrid environment, data travels back and forth between public and on-premise regions of your architecture regularly. This can make tracing, logging, and (most importantly) finding the origin points of errors a challenge. Monitoring your architecture at a packet level makes tracing the journey of your data a lot easier.

For example, monitoring at the packet level picks up on failed cyclic redundancy checks and checksums on data traveling between public and on-premise components. Compromised data is filtered upon arrival. What’s more, automated alerts when packet loss spikes allow your engineers to isolate and fix the offending component before the problem potentially spirals into a system-wide outage.

Verifying data integrity and authenticity in real-time quickly identifies faulty components or vulnerabilities by implementing data visibility at the point of transmission. There are much higher levels of data transmission in hybrid environments. As such, any effective monitoring solution must ensure that data isn’t invisible while in transit.

Overcoming the Topology Gulf

Monitoring data in motion is key, and full visibility of where that data is traveling from and to is just as important. A topology of your hybrid architecture is far more critical than it is in a wholly on-premise infrastructure (where it is already indispensable). Without an established map of components in the ecosystem, your monitoring stack will struggle to add value.

Creating and maintaining an up-to-date topology of a hybrid-architecture is a unique challenge. Many legacy monitoring tools lack scope beyond on-premise infrastructure, and most cloud-native tools offer visibility only within their hosted service. Full end-to-end discovery must overcome the gap between on-premise and public monitoring capabilities.

On the surface, it requires a lot of code change and manual reconfigurations to integrate the two. Fortunately, there are ways to mitigate this, and they can be implemented from the early conceptual stages of your hybrid-cloud transformation.

Hybrid Monitoring by Design

Implementing a hybrid monitoring solution post-design phase is an arduous process. It’s difficult to achieve end-to-end visibility if the components of your architecture are deployed and in use.

One of the advantages of having components in the public cloud is the flexibility afforded by access to an ever-growing library of components and services. However, utilizing this flexibility means your infrastructure is almost constantly changing, making both discovery and mapping troublesome. Tackling this in the design stage ensures that leveraging the flexibility of your hybrid architecture doesn’t disrupt the efficacy of your monitoring stack.

By addressing real-time topology and discovery in the design stage, your hybrid-architecture and all associated operational tooling will be built to scale in a complimentary manner. Designing your hybrid-architecture with automated end-to-end component/environment discovery as part of a centralized monitoring solution, as an example, keeps all components in your infrastructure visible regardless of how large and complex your hybrid environment grows.

Avoiding Strategy Silos

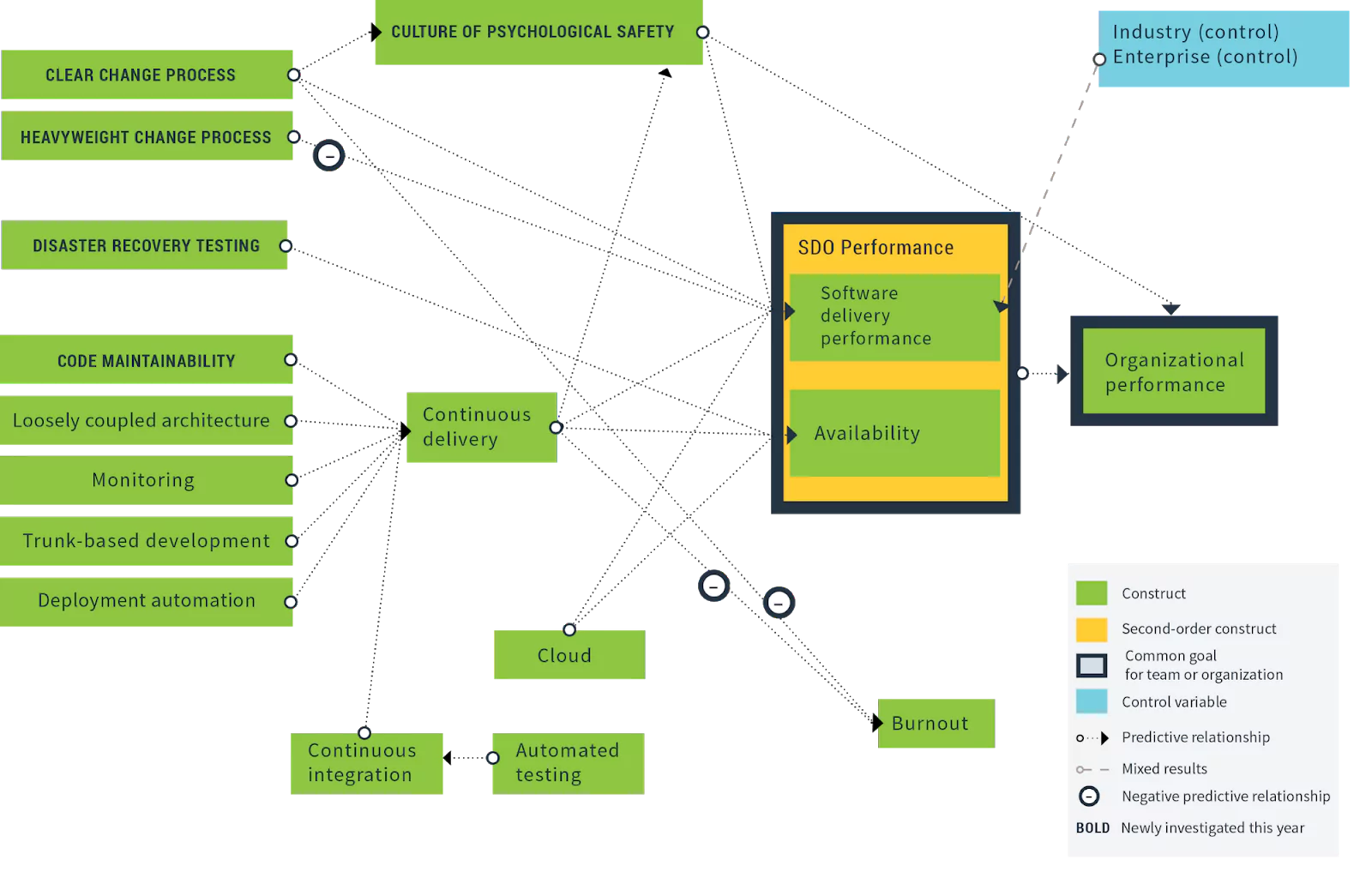

Addressing monitoring at the design stage ensures that your stack can scale with your infrastructure with minimal manual reconfiguration. It also helps avoid another common obstacle when monitoring hybrid-cloud environments, that of siloed monitoring strategies.

Having a clearly established, centralized monitoring strategy keeps you from approaching monitoring on an environment-by-environment basis. Why should you avoid an environment-by-environment approach? Because it quickly leads to siloed monitoring stacks, and separate strategies for your on-premise and publicly hosted systems.

While the processes differ and tools vary, the underpinning methodology behind how you approach monitoring both your on-premise and public components should be the same. You and your team should have a clearly defined monitoring strategy to which anything implemented adheres to and contributes towards. Using different strategies for different environments quickly leads to fragmented processes, poor component integration, and ineffective architecture-wide monitoring.

Native Tools in a Hybrid Architecture

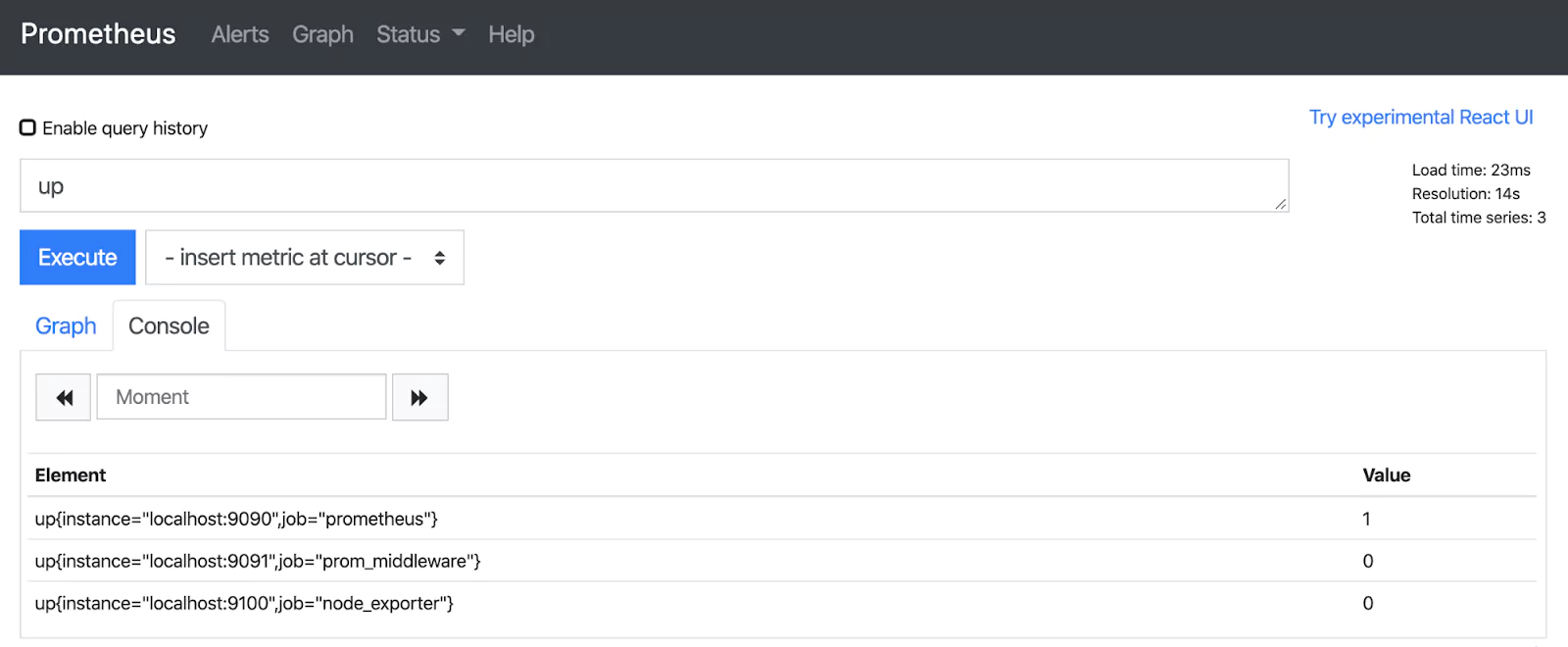

AWS, Azure, and Google all offer native monitoring solutions — AWS Cloudwatch, Google Stackdriver, and Azure Monitor. Each of these tools enables access to operational data, observability, and monitoring in their respective environments. Full end-to-end visibility would be impossible without them. While they are a necessary part of any hybrid-monitoring stack, they can also lead to vendor reliance and the aforementioned siloed strategies we are trying to avoid.

In a hybrid cloud environment, these tools should be part of your centralized monitoring stack, but they should not define it. Native tools are great at metrics collection in their hosted environments. What they lack in a hybrid context, however, is the ability to provide insight across the entire infrastructure.

Relying solely on native tools won’t provide comprehensive, end-to-end visibility. They can only provide an insight into the public portions of your hybrid-architecture. What you should aim for is interoperability with these components. Effective hybrid monitoring taps into these components to use them as valuable data sources in conjunction with the centralized stack.

Defining ‘Normal’

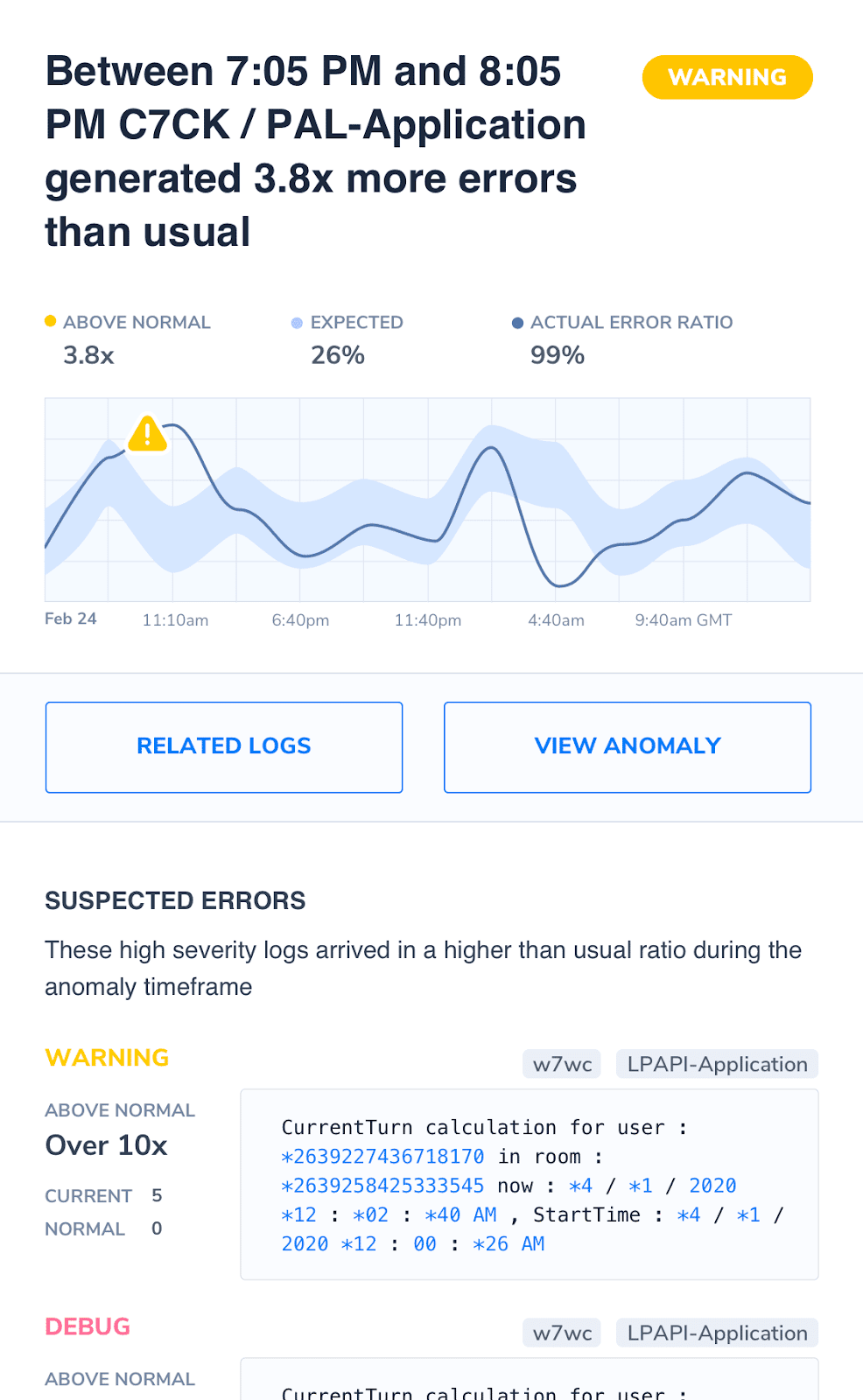

What ‘normal’ looks like in your hybrid environment will be unique to your architecture. It can only be established when analyzing the infrastructure as a whole. While the visibility of your public cloud components is vital, it is only by analyzing your architecture as a whole that you can define what shape ‘normal’ takes.

Without understanding and defining ‘normal’ operational parameters it is incredibly difficult to detect anomalies or trace problems to their root cause. Creating a centralized monitoring stack that sits across both your on-premise and public cloud environments enables you to embed this definition into your systems.

Once your system is aware of what ‘normal’ looks like as operational data, processes can be put in place to solidify the efficiency of your stack across the architecture. This can be achieved in many ways, from automating anomaly detection to setting up automated alerts.

You Can’t Monitor What You Can’t See

These are just a few of the considerations you should take when it comes to monitoring a hybrid-cloud architecture. The exact challenges you face will be unique to your architecture.

If there’s one principle to remember at all times, it’s this: you can’t monitor what you can’t see.

When things start to become overcomplicated, return to this principle. No matter how complex your system is, this will always be true. Visibility is the goal when creating a monitoring stack for hybrid cloud architecture, the same as it is with any other.