Cybersecurity is all about staying one step ahead of potential threats. With 1,802 data compromises impacting over 422 million individuals in the United States in 2022, threat intelligence feeds are a key aspect of cybersecurity today.

These data streams offer real-time insights into possible security risks, allowing organizations to react quickly and precisely against cyber threats. However, leveraging threat intelligence feeds can be complicated.

This article explains what threat intelligence feeds are and why they’re important, describes the different types of threat intelligence, and shows how organizations use feeds to protect against cyber attacks.

Coralogix security offers a seamless and robust way to enrich your log data and more easily protect against a wide array of cyber threats.

What is a threat intelligence feed?

A threat intelligence feed is a comprehensive flow of data that sheds light on potential and existing cyber threats. It encompasses information about various hostile activities, including malware, zero-day attacks and botnets.

Security researchers curate these feeds, gathering data from diverse private and public sources, scrutinizing the information, and compiling lists of potential malicious actions. These feeds are not just a critical tool for organizations, but an essential part of modern security infrastructure.

Threat intelligence feeds assist organizations in identifying patterns related to threats and in modifying their security policies to match. They minimize the time spent gathering security data, provide ongoing insights into cyber threats, and supply prompt and accurate information to security teams.

By seamlessly integrating threat intelligence feeds into their existing security structure, organizations can preemptively tackle security threats before they evolve into significant issues.

Why are threat intelligence feeds important?

Threat intelligence feeds are pivotal in contemporary cybersecurity efforts. Let’s break down their significance:

- Real-time awareness: Threat intelligence feeds provide instantaneous updates on emerging threats. This timely intel equips security teams to act fast, curbing the potential fallout of an attack.

- Bolstered security measures: Gaining insights into the characteristics and behaviors of threats lets organizations adjust their security protocols. Threat intelligence feeds are the key to optimizing these measures for specific threats.

- Informed strategic choices: By offering essential insights, threat intelligence feeds help organizations make informed decisions about security investments, policies, and strategies. They guide organizations to focus on the most pressing vulnerabilities and threats, ensuring the optimal allocation of resources.

- Synergy with existing tools: Threat intelligence feeds can mesh with existing security technologies, prolonging their effectiveness and enhancing their ROI. This synergy is part of a broader strategy where observability and security work together to provide comprehensive protection.

- Anticipatory response: Supplying real-time threat data, these feeds allow security teams to nip threats in the bud before they balloon into significant issues. This foresight can translate into substantial cost savings by preventing major data breaches and reducing recovery costs.

- Industry-specific insights: Threat intelligence feeds can cater to specific industries, delivering unique insights pertinent to certain business domains. This specialized information can be invaluable in guarding against threats that loom larger in specific sectors.

Threat intelligence feeds are more than a mere information repository; they are a tactical asset that amplifies an organization’s prowess in threat detection, analysis, and response. By capitalizing on threat intelligence feeds, organizations can fortify their security stance, consistently staying a stride ahead of potential cyber dangers.

Types of threat intelligence

Organizations must understand the various kinds of threat intelligence so they can choose the feeds that suit their unique needs and objectives. Here’s a look at seven key types of threat intelligence:

- Tactical threat intelligence: Focusing on imminent threats, this type delivers detailed insights about specific indicators of compromise (IoCs). Common among security analysts and frontline defenders, tactical intelligence speeds up incident response. It includes IP addresses, domain names and malware hashes.

- Operational threat intelligence: This type is concerned with understanding attackers’ tactics, techniques, and procedures (TTPs). By offering insights into how attackers function, their incentives, and the tools they employ, operational intelligence lets security teams foresee possible attack approaches and shape their defenses accordingly.

- Strategic threat intelligence: Providing a wide-angle view of the threat environment, strategic intelligence concentrates on extended trends and burgeoning risks. It guides executives and decision-makers in comprehending the overarching cybersecurity scenario, aiding in informed strategic choices. This analysis often includes geopolitical factors, industry dynamics and regulatory shifts.

- Technical threat intelligence: Technical intelligence focuses on the minute details of threats, such as malware signatures, vulnerabilities, and attack paths. IT professionals utilize this intelligence to grasp the technical facets of threats and formulate particular counteractions, employing various cybersecurity tools to safeguard their businesses.

- Industry-specific threat intelligence: Some threat intelligence feeds are tailored to particular sectors such as finance, healthcare, or vital infrastructure. They yield insights into threats especially relevant to a defined sector, enabling organizations to concentrate on risks most applicable to their industry. This customized intelligence can be priceless in safeguarding against targeted onslaughts.

- Local threat intelligence: This type involves gathering and scrutinizing data from an organization’s own environment. By analyzing local logs, security events, and alerts, organizations can build a tailored view of the threats specific to their setup. It assists in pinpointing and thwarting threats that bear direct relevance to the organization.

- Open source threat intelligence: Open source intelligence (OSINT) collects data from publicly accessible sources like websites, social media platforms, and online forums. Though potentially rich in information, it can lead to redundancy or cluttered data, demanding careful handling to maintain relevance and precision.

Organizations can cherry-pick the feeds that harmonize with their security requirements, industry niche, and objectives.
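To make the tactical indicators mentioned above (IP addresses, domain names and malware hashes) more concrete, here is a minimal Python sketch that sorts a raw indicator string into a coarse type. The function name `classify_indicator` and the simplified domain pattern are assumptions made for illustration; real feeds usually label indicator types explicitly, and production validation is stricter.

```python
import ipaddress
import re

# Hex digest lengths for common hash algorithms (MD5, SHA-1, SHA-256).
HASH_LENGTHS = {32: "md5", 40: "sha1", 64: "sha256"}
HEX_RE = re.compile(r"^[0-9a-fA-F]+$")
# Deliberately simple domain pattern; real-world validation is stricter.
DOMAIN_RE = re.compile(
    r"^(?=.{1,253}$)([a-z0-9-]{1,63}\.)+[a-z]{2,63}$", re.IGNORECASE
)


def classify_indicator(value: str) -> str:
    """Return 'ip', 'md5', 'sha1', 'sha256', 'domain', or 'unknown'."""
    value = value.strip()
    # IPv4 and IPv6 addresses are both accepted by the standard library parser.
    try:
        ipaddress.ip_address(value)
        return "ip"
    except ValueError:
        pass
    # A pure hex string of a known digest length is treated as a file hash.
    if HEX_RE.match(value) and len(value) in HASH_LENGTHS:
        return HASH_LENGTHS[len(value)]
    if DOMAIN_RE.match(value):
        return "domain"
    return "unknown"
```

Typing indicators up front like this lets each kind be routed to the matching check, for example IPs against network logs and hashes against file scans.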

How do threat intelligence feeds work?

Threat intelligence feeds are more than just lists of threats; they are dynamic and complex systems that require careful management and integration. Here’s how they work:

- Collection and normalization: Threat intelligence feeds gather data from a diverse array of sources, including public repositories, commercial suppliers, and in-house data pools. The raw data, once gathered, undergoes normalization to a uniform format, priming it for subsequent analysis.

- Enrichment and analysis: Enrichment adds context to the data, like linking IP addresses with identified malicious undertakings. The enhanced data is then scrutinized to detect patterns, trends, and interconnections, thereby exposing novel threats and laying bare the strategies of the attackers.

- Integration and dissemination: After analysis, the intelligence must be woven into the organization’s existing security framework so that its various security tools can access it. It is then disseminated to relevant stakeholders, ensuring a timely response to emerging threats.

- Feedback and customization: A feedback loop allows continuous improvement, while customization enables organizations to focus on specific threats or industries. These processes ensure that the intelligence remains relevant, accurate, and valuable to the organization’s unique needs, aligning with a unified threat intelligence approach.

- Compliance and reporting: Threat intelligence feeds also support regulatory compliance by furnishing comprehensive reports on threats and on the organization’s overall security posture, helping it meet regulatory mandates concerning cybersecurity.
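The collection, normalization and enrichment steps above can be sketched in a few lines of Python. This is an illustrative sketch only: the two feed formats, the `Indicator` fields, and the `src_ip` and `threat` log keys are all hypothetical and do not describe any particular vendor's schema or implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    value: str         # the IoC itself, e.g. an IP address or domain
    kind: str          # "ip", "domain", ...
    source: str        # which feed reported it
    tags: tuple = ()   # context such as "botnet" or "phishing"


def normalize_feed_a(rows):
    """Hypothetical feed A: dicts like {"ip": "...", "category": "..."}."""
    return [
        Indicator(r["ip"].strip(), "ip", "feed_a", (r["category"].lower(),))
        for r in rows
    ]


def normalize_feed_b(lines):
    """Hypothetical feed B: CSV-like lines of the form 'type,value,label'."""
    out = []
    for line in lines:
        kind, value, label = (part.strip() for part in line.split(","))
        out.append(Indicator(value.lower(), kind.lower(), "feed_b", (label.lower(),)))
    return out


def merge(indicators):
    """Deduplicate by (kind, value), combining the sources and tags seen."""
    merged = {}
    for ind in indicators:
        entry = merged.setdefault(
            (ind.kind, ind.value), {"sources": set(), "tags": set()}
        )
        entry["sources"].add(ind.source)
        entry["tags"].update(ind.tags)
    return merged


def enrich_log(record, merged):
    """Return a copy of a log record, with threat context if its IP is known."""
    hit = merged.get(("ip", record.get("src_ip")))
    enriched = dict(record)
    if hit:
        enriched["threat"] = {
            "sources": sorted(hit["sources"]),
            "tags": sorted(hit["tags"]),
        }
    return enriched


if __name__ == "__main__":
    feed_a = [{"ip": "203.0.113.7", "category": "Botnet"}]
    feed_b = ["ip, 203.0.113.7, scanner", "domain, Bad.Example.com, phishing"]
    intel = merge(normalize_feed_a(feed_a) + normalize_feed_b(feed_b))
    print(enrich_log({"src_ip": "203.0.113.7", "msg": "login failed"}, intel))
```

In this sketch, an IP reported by both feeds collapses into a single entry that keeps both sources and both tags, so a matching log record carries the combined context. Keying the merged data by `(kind, value)` keeps each lookup a single dictionary access.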

Threat intelligence with Coralogix

Threat intelligence feeds are a cornerstone in cybersecurity, offering real-time insights and actionable data to combat evolving cyber threats. They enable organizations to proactively enhance security measures, ensuring robust protection against potential risks.

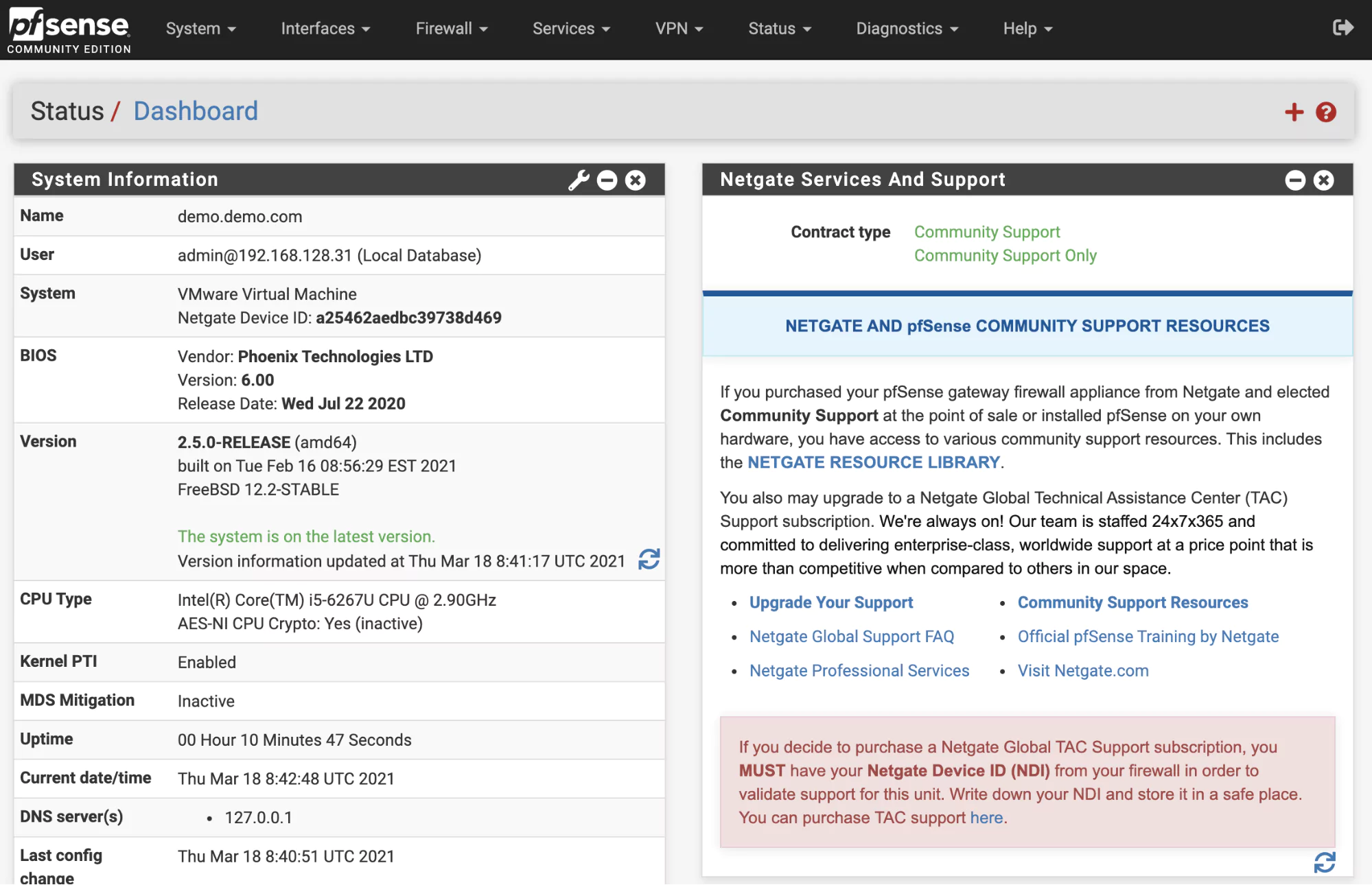

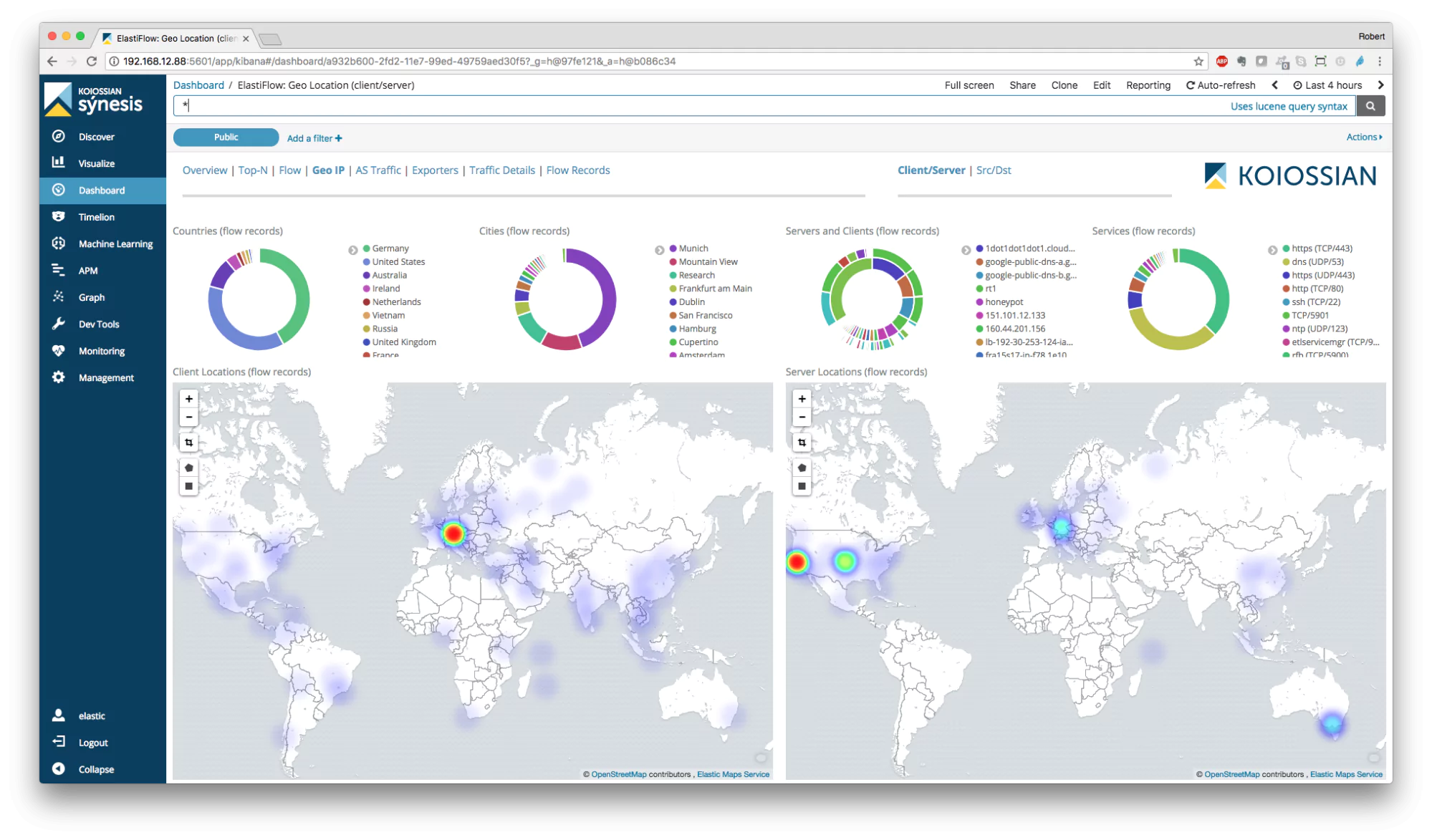

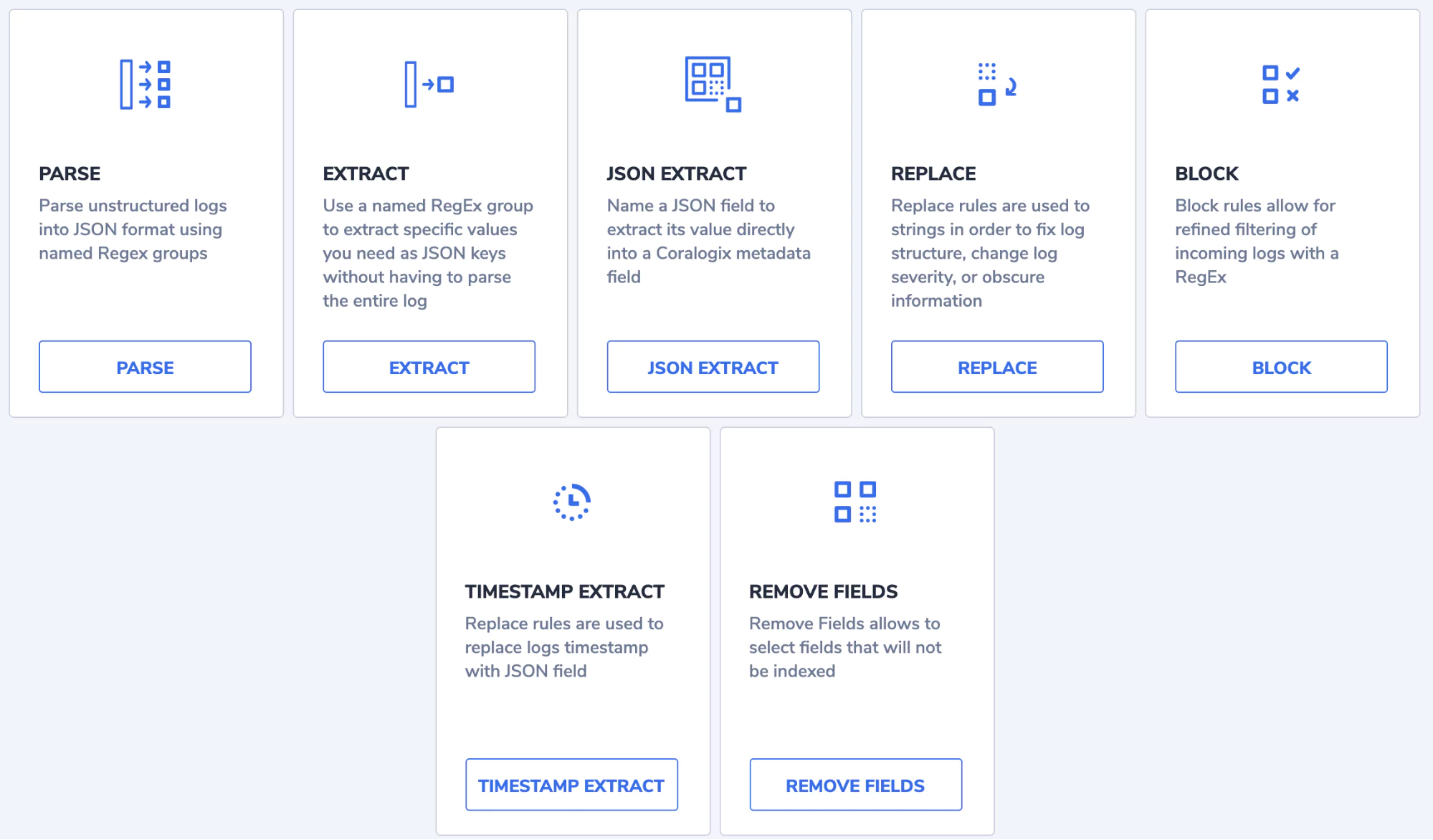

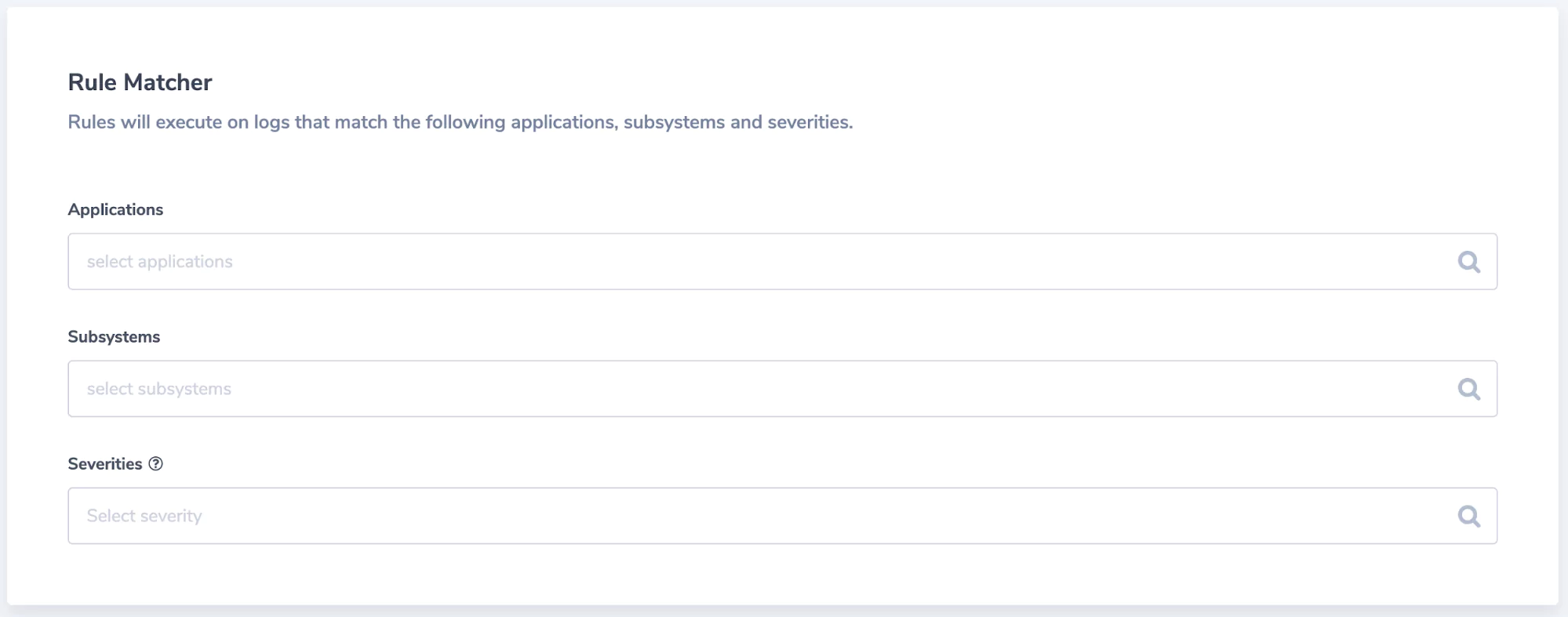

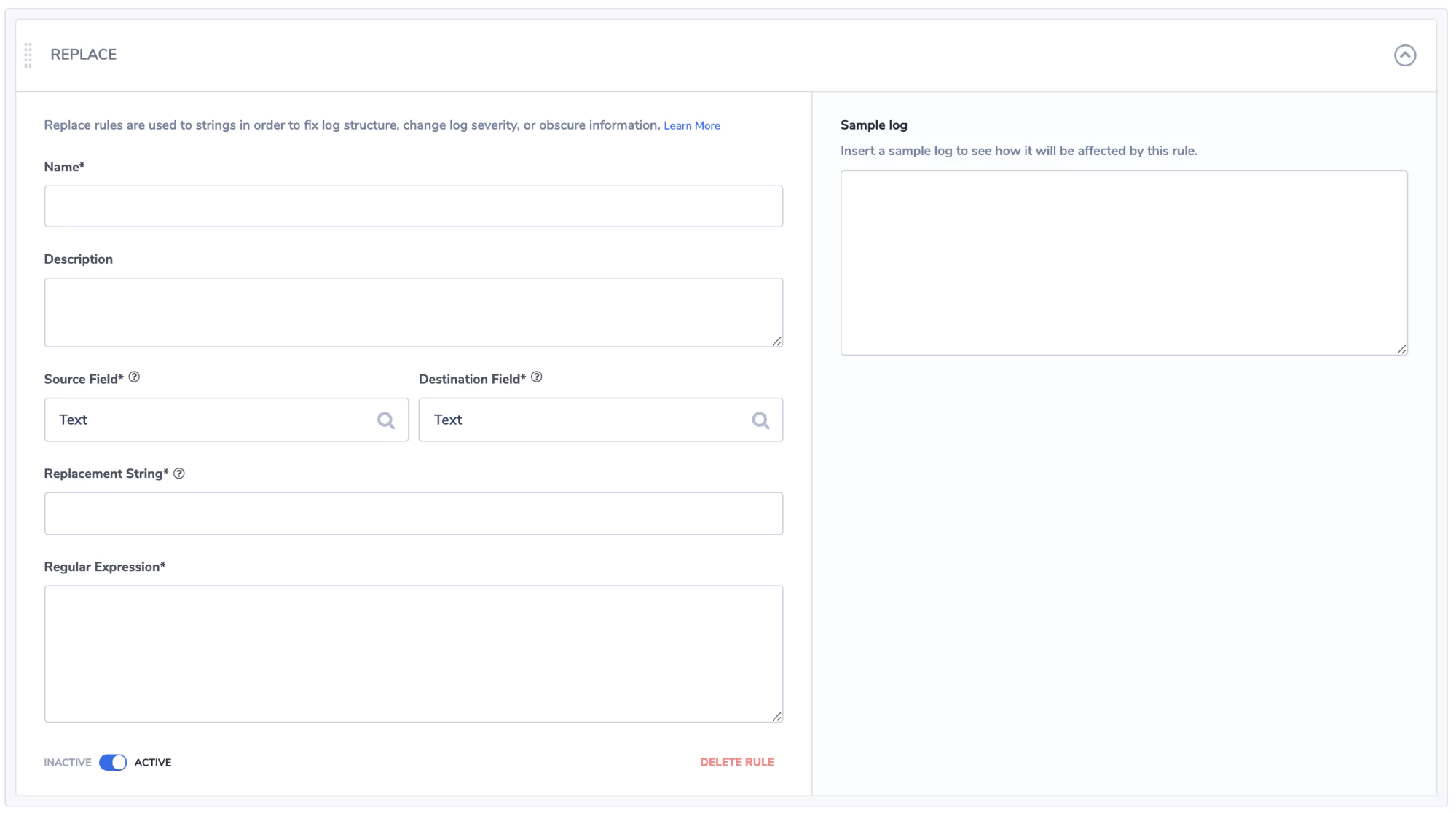

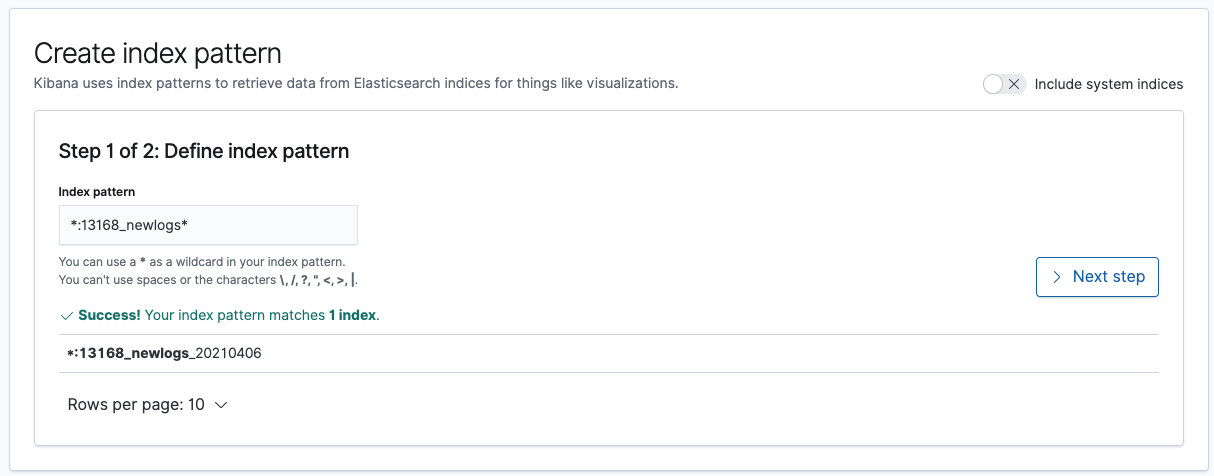

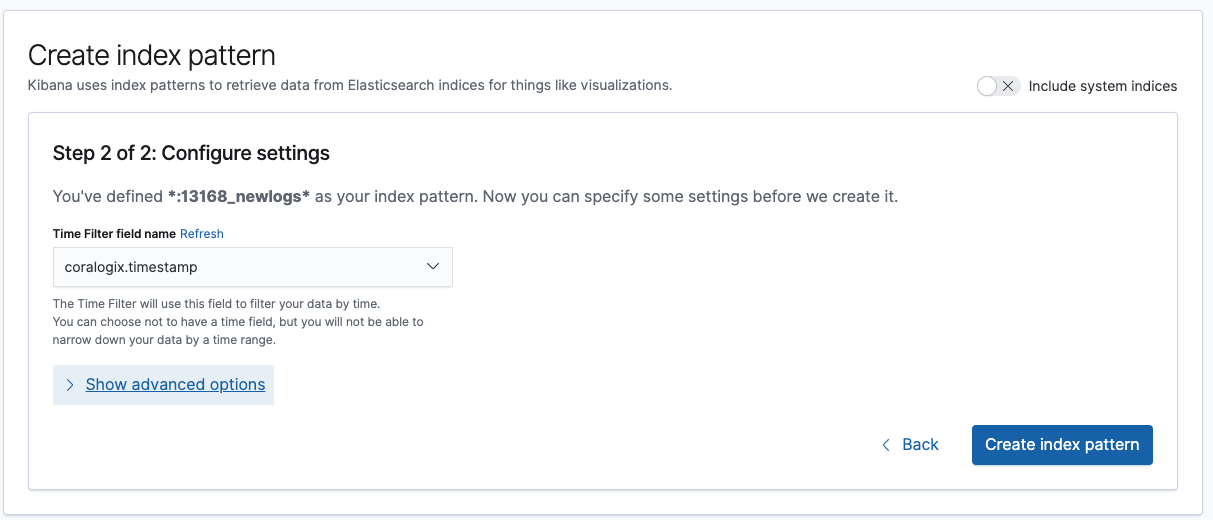

Coralogix’s Unified Threat Intelligence elevates this process by offering seamless integration with top threat intelligence feeds, curated by Coralogix security experts. Without any need for complex configurations or API integrations, Coralogix can automatically enrich log data with malicious indicators in real time, facilitating efficient threat detection and alerting.

The enriched logs are stored in your own remote storage, allowing you to query them directly from Coralogix with infinite retention and even research the data with external tools. Explore Coralogix security and discover how the platform can enhance your organization’s security posture and keep you one step ahead of potential threats.