SaaS Observability is a busy, competitive marketplace. Alas, it is also a very homogeneous industry. Vendors implement the features that have worked well for their competition, and genuine innovation is rare. At Coralogix, we have no shortage of innovation, so here are four features of Coralogix that nobody else in the observability world has.

1. Customer Support Is VERY Fast

Customer support is the difference between some interesting features and an amazing product. Every observability vendor has some form of customer support, but none of them are even close to our response time.

At Coralogix, our median response time to customer queries is 19 seconds, and we resolve issues in under 40 minutes on average.

This is fine for now – but how will Coralogix scale this support?

We already have! Coralogix has over 2,000 customers, all of whom are getting the same level of customer support because we don’t tier our service. 1 gigabyte or 100 terabytes – everyone gets the same fantastic standard of service. Don’t believe me? Sign up for a trial account and test our service!

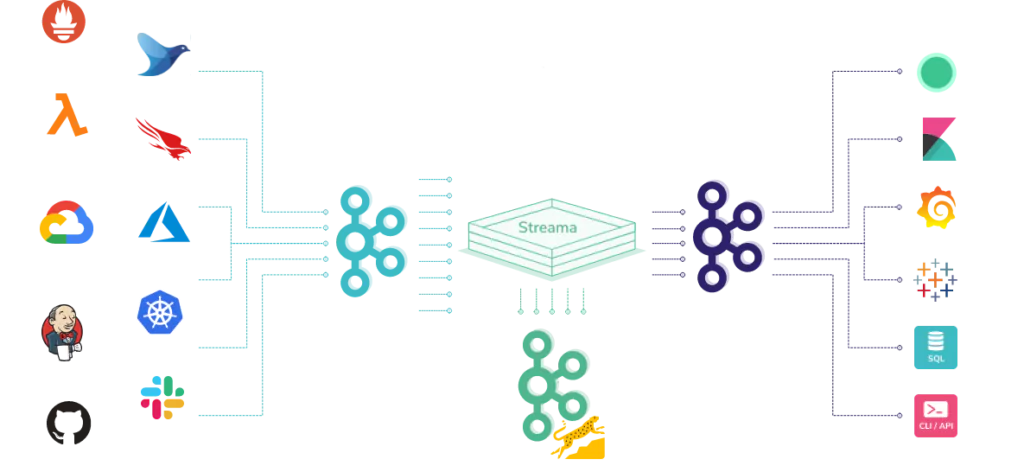

2. Coralogix is Built Differently

The typical flow for data ingestion follows a set of steps:

- Data is initially stored and indexed.

- Indexed data then triggers a series of events downstream, such as dashboard updates and alarms.

- Finally, cost optimization decisions and data transformations are made.

This flow adds latency and overhead, which slows down alarms, log ingestion, dashboard updates, and more. It limits the decision-making capabilities of the platform. It’s impossible to skip indexing and go straight to archiving because every process depends on indexed data. At Coralogix, we saw that this wouldn’t work and endeavored to build our platform differently.

So how does Coralogix do it?

Coralogix leverages the Streama© architecture. Streama focuses on processing the data first and delaying storage and indexing until all the important decisions have been made.

It is a side-effect-free architecture, meaning it is entirely horizontally scalable and adapts beautifully to meet huge daily demands. Because decisions are made before any data is stored or indexed, the Coralogix platform avoids indexing data that never needed it, which makes it far more efficient.
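To make the "process first, store later" idea concrete, here is a minimal sketch in Python. It is not the Streama implementation; the `route` rules, the `Sinks` type, and the event fields (`severity`, `message`) are all hypothetical names chosen for illustration. The point is only that alerting and routing decisions are made while the event is in flight, and that nothing is indexed unless the routing decision asks for it.

```python
from typing import NamedTuple


class Sinks(NamedTuple):
    """Where events end up after in-stream decisions (hypothetical)."""
    indexed: list
    archived: list
    alerts: list


def route(event: dict) -> str:
    """Hypothetical routing rule: choose a destination before any storage."""
    if event["severity"] in ("error", "critical"):
        return "index"    # worth paying for fast search
    if event["severity"] == "debug":
        return "drop"     # never stored at all
    return "archive"      # cheap storage, skips indexing entirely


def process(events: list) -> Sinks:
    """Pure function: same input always yields the same output."""
    indexed, archived, alerts = [], [], []
    for event in events:
        # Alerting happens in-stream and does not wait for an index.
        if event["severity"] == "critical":
            alerts.append(event["message"])
        decision = route(event)
        if decision == "index":
            indexed.append(event)
        elif decision == "archive":
            archived.append(event)
    return Sinks(indexed, archived, alerts)
```

Because `process` depends only on its input, many copies of it can run in parallel on different slices of the stream, which is the property that makes a side-effect-free design straightforward to scale horizontally.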

3. Archiving and Remote Query

Many observability providers allow customers to archive their data in low-cost storage. With most providers, data is compressed and written to low-cost storage, like Amazon S3, and customers must rehydrate it before they can access it again.

There are some key issues with this approach:

- Archived data is far less discoverable.

- Historical data may be held hostage by a SaaS provider using proprietary compression.

- Customers now have to pay again for a massive volume of data in hot storage.

So how does it work at Coralogix?

At Coralogix, we do not require data to be rehydrated before it can be queried. Instead, archives can be queried directly. Our remote query engine is fast: up to 5x faster than Athena and capable of processing terabytes of data in seconds.

With support for schema-on-read and schema-on-write, Coralogix Remote Query is much more than a simple archiving solution. It’s an entire data analytics platform capable of processing Lucene, SQL, and DataPrime queries.

Does Remote Query save customers money?

In summary, yes. Customers are migrating to Coralogix daily, and they constantly report cost savings. One of the most interesting behaviors in new customers is their willingness to hold less data in “frequent search.” This means customers are paying for less data in hot storage because that data is still easily and instantly accessible in the archive.

This behavior shift and our TCO Optimizer regularly drive cost savings of between 40% and 70%. Speaking of…

4. The Most Advanced Cost Optimization on the Market

Most observability providers have a tiered solution, especially regarding cost optimization. Spending enough money unlocks certain features, like tiered storage. Our competitors need to gatekeep their cost optimization features because their platforms are not architected to make decisions this early in the data pipeline. This means they can only afford to optimize their biggest customers.

Coralogix is Perfect for the Cost Optimization Challenge

Our Streama© architecture means we can make very early decisions, long before storage and indexing. This allows us to make cost-optimization decisions for all of our customers. Whether it’s our Frequent Search, Monitoring, or Compliance use case, Coralogix and our unique architecture regularly drive down customer costs.

More than this, we also have features that allow our customers to transform their data on the fly. This allows them to keep only the information they need and drop everything they don’t. For example, Logs2Metrics allows our customers to transform their expensive logs into optimized metrics that can be retained for far longer at a fraction of the cost.
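As a rough illustration of why a log-to-metric transformation is cheaper to retain, the sketch below collapses individual log lines into per-minute counts. This is not how Logs2Metrics is implemented; the function name `logs_to_metrics`, the log fields (`timestamp`, `service`, `severity`), and the use of integer Unix timestamps are assumptions made for the example.

```python
from collections import Counter


def logs_to_metrics(logs: list, bucket_seconds: int = 60) -> dict:
    """Count log lines per (time bucket, service, severity).

    Assumes each log is a dict with an integer Unix `timestamp`
    plus `service` and `severity` fields (hypothetical schema).
    """
    counts = Counter()
    for log in logs:
        # Round the timestamp down to the start of its bucket.
        bucket = log["timestamp"] - log["timestamp"] % bucket_seconds
        counts[(bucket, log["service"], log["severity"])] += 1
    return dict(counts)
```

However many log lines arrive in a given minute, each combination of service and severity produces a single data point, so the stored volume grows with the number of distinct series rather than with raw log traffic.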

Coralogix is Different in All the Best Ways

Coralogix is more than just a full-stack observability platform with some interesting tools. It’s a revolutionary product that will scale to meet customer demands, every time. Our features, coupled with unprecedented customer support and incredible cost optimization, make us one of the few observability providers that will help you to grow, help you to optimize, and save you money in the process.