Salesforce was the first of many SaaS-based companies to succeed and see massive growth. Since Salesforce started out in 1999, Software-as-a-Service (SaaS) tools have taken the IT sector and, well, the world, by storm. For one, they mitigate bloatware by moving applications from the client’s computer to the cloud. Plus, the sheer ease of use of cloud-based, plug-and-play software solutions has transformed all sorts of sectors.

Given the SaaS paradigm’s success in everything from analytics to software development itself, it’s natural to ask whether its Midas touch could improve the current state of data observability tools.

Heroku and the Rise of SaaS

Let’s start with a system that we’ve previously talked about, Heroku. Heroku is one of the most popular platforms for deploying cloud-based apps.

Using a Platform-as-a-Service approach, Heroku lets developers deploy apps in managed containers with maximum flexibility. Instead of hosting apps on traditional servers, Heroku provides something called dynos.

Dynos are like cradles for applications. They utilize the power of containerization to provide a flexible architecture that takes the hassle of on-premises configuration away from the developer. (We’ve previously talked about the merits of SaaS vs Hosted solutions.)

Heroku’s dynos make scalability effortless. If developers want to scale their app horizontally, they can simply add more dynos. Vertical scaling can be achieved by upgrading dyno types, a process Heroku facilitates through its intuitive dashboard and CLI.

Heroku can even take scaling issues off the developer’s hands completely with its auto-scaling feature. This means that software companies can focus on their mission, providing high-quality software at scale without worrying about the ‘how’ of scalability or configuration.

Systems like Heroku give us a tantalizing glimpse of the power and convenience a SaaS approach can bring to DevOps. The hassle of resource management, configuration, and deployment is abstracted away, allowing developers to focus solely on coding.

SaaS is making steady inroads into DevOps. For example, Coralogix (which integrates with Heroku and is also available as a Heroku add-on) operates with a SaaS approach, allowing users to analyze logs without worrying about configuration details.

Not So SaaS-y Tooling

It might seem that nothing is stopping SaaS from being applied to all aspects of observability tooling. After all, Coralogix already offers a SaaS log analytics solution, so why not just make all logging as SaaS-y as possible?

Log collection is the fly in this particular ointment. Logging data is often stored in a variety of formats, reflecting the fact that logs may originate from very different systems. For example, a Linux server will probably store logs as text data while Kubernetes can use a structured logging format or store the logs as JSON.
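To make the format problem concrete, here is a minimal Python sketch that maps a syslog-style text line and a JSON log line onto one common record shape. The regex, the `normalize` function, and the JSON field names (`log` and `time`, modeled on Docker’s json-file log output) are illustrative assumptions, not any particular tool’s schema.

```python
import json
import re
from typing import Any

# Illustrative pattern for a classic syslog-style line, e.g.
# "Mar  3 14:02:11 web-01 sshd[812]: Accepted publickey for deploy"
SYSLOG_RE = re.compile(
    r"^(?P<timestamp>\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) (?P<process>[^:\[]+)(?:\[(?P<pid>\d+)\])?: (?P<message>.*)$"
)


def normalize(line: str) -> dict[str, Any]:
    """Map a text or JSON log line onto one common record shape."""
    stripped = line.strip()
    if stripped.startswith("{"):
        # Structured JSON line; field names are assumed (Docker json-file style)
        record = json.loads(stripped)
        return {
            "timestamp": record.get("time"),
            "source": "json",
            "message": str(record.get("log", "")).rstrip("\n"),
        }
    match = SYSLOG_RE.match(stripped)
    if match:
        return {
            "timestamp": match.group("timestamp"),
            "source": "syslog",
            "message": match.group("message"),
        }
    # Anything else is kept as an unparsed message
    return {"timestamp": None, "source": "raw", "message": stripped}
```

Every additional source format means another branch like these, which is part of why collection tends to stay close to the systems producing the logs.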

Because every system has its own logging format, organizations tend to collect their logs on-premises, and that is a big roadblock to the smooth uptake of SaaS. In reality, the variety of systems, along with the option to build your own, is symptomatic of a slower move toward observability in the enterprise. However, this range of options doesn’t mean that log analysis is limited to on-prem systems.

The upshot is that many organizations are missing out on SaaS observability tooling. Why is this the case, when SaaS tools and platforms are so widespread? The perceived complexity of handling so many formats, combined with concerns about security in the cloud, may well play a role.

Moving to Cloud-Based Log Storage with Amazon S3

To pave the way to Software as a Service log collection, we need to stop storing logs on-prem and move them to the cloud. Cloud computing is the keystone of SaaS. Applications can be hosted on centralized computing resources and piped to thousands of clients.

AWS lets you store logs in the cloud with Amazon S3, short for Simple Storage Service. As the name implies, S3 is designed to let you store and access data quickly and easily, with data kept as objects inside containers called buckets.

Pushing Logs to S3 with Logstash and FluentD

If your logs don’t already live in AWS, output plugins let you push existing log records to S3. Two of the most popular log collectors are FluentD and Logstash, so we’ll look at those here. (Coralogix integrates with both FluentD and Logstash.)

FluentD Plugin

FluentD offers an output plugin called out_s3 (part of the fluent-plugin-s3 package) that lets users write pre-existing log records to an S3 bucket. out_s3 has several cool features.

For one, it splits files by the time at which log events were created. This means the S3 file structure reflects the original time ordering of log records, not just when they were uploaded to the bucket.
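The idea of slicing by event time rather than upload time can be sketched in a few lines of Python. This is not out_s3’s code: the `hourly_keys` helper and the `logs/<YYYYmmddHH>_0.gz` key layout are hypothetical, loosely modeled on the plugin’s time-slice placeholders, and timestamps are treated as UTC.

```python
from collections import defaultdict
from datetime import datetime, timezone


def hourly_keys(events, prefix="logs/"):
    """Group (epoch_seconds, message) pairs into hourly S3 object keys.

    The slice comes from each event's own timestamp (in UTC), not from
    when the batch is uploaded.
    """
    batches = defaultdict(list)
    for ts, message in events:
        time_slice = datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y%m%d%H")
        # Hypothetical layout: <prefix><time slice>_<index>.gz, one index per slice
        batches[f"{prefix}{time_slice}_0.gz"].append(message)
    return dict(batches)
```

Events that arrive out of order still end up in the slice for the hour in which they were created.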

The same plugin package can also incorporate metadata into log records. When records are read back from S3 with its companion input plugin, in_s3, and the add_object_metadata option is enabled, each record carries the name of its S3 bucket along with its object key. Downstream systems like Coralogix can then use this info to pinpoint where each log record came from.

At this point, I should mention something that could catch new users out. FluentD’s plugin automatically creates files on an hourly basis. This can mean that when you first upload log records, a new file isn’t created immediately, as it would be with most systems.

While you can’t rely on new files being created immediately, you can make them appear more or less frequently by changing the timekey parameter in the plugin’s buffer configuration.
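The delay follows from how time-keyed buffering works in Fluentd: events are held in a chunk covering one `timekey` window, and the chunk is only written once the window has closed and a further `timekey_wait` has passed. `timekey` and `timekey_wait` are real Fluentd buffer parameters; the `chunk_window` helper below is a hypothetical sketch that assumes UTC-aligned windows.

```python
def chunk_window(event_ts: int, timekey: int, timekey_wait: int) -> tuple[int, int, int]:
    """Return (chunk_start, chunk_end, earliest_flush) for an event time.

    Events are grouped into [start, start + timekey) windows; a window is
    only written out once it has closed and timekey_wait seconds have passed.
    """
    start = event_ts - (event_ts % timekey)  # align down to the window boundary
    end = start + timekey
    return start, end, end + timekey_wait
```

With `timekey=3600` and `timekey_wait=600`, an event stamped 22:13:20 UTC sits in the 22:00 to 23:00 chunk and cannot appear in S3 before 23:10. Shrinking `timekey` to 600 seconds moves the chunk boundary to 22:20, so with the same wait the file can appear from 22:30.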

Logstash Plugin

Logstash’s S3 output plugin is open source and released under the Apache 2.0 license, a permissive license that lets you use, modify, and redistribute it freely as long as you follow its notice and attribution conditions. It uploads batches of Logstash events in the form of temporary files, which by default are stored in a logstash folder inside the operating system’s temporary directory.

If you don’t like the default save location, Logstash gives you a temporary_directory option that lets you stipulate a preferred save location.
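A rough Python analogue of that batching behavior is below. Only the `temporary_directory` name comes from the plugin; the `write_batch` function, the file naming, and the `logstash` subfolder used as a fallback (meant to echo the plugin’s default) are assumptions for illustration, and the actual S3 upload is left out.

```python
import os
import tempfile
from typing import Iterable, Optional


def write_batch(events: Iterable[str], temporary_directory: Optional[str] = None) -> str:
    """Write one batch of events to a temp file and return its path.

    With no temporary_directory, fall back to a "logstash" folder under the
    OS temp dir; otherwise use the directory the caller specified.
    """
    base = temporary_directory or os.path.join(tempfile.gettempdir(), "logstash")
    os.makedirs(base, exist_ok=True)
    fd, path = tempfile.mkstemp(prefix="batch-", suffix=".log", dir=base)
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        for event in events:
            handle.write(event + "\n")
    # A real uploader would now PUT this file to S3 and delete it afterwards
    return path
```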

Securing Your Logs

Logs often contain sensitive information, so anyone taking the S3 log storage route needs to make sure their S3 buckets are secure. Amazon S3 default encryption ensures that new log file objects are encrypted by default.
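As a sketch, default encryption can be turned on with boto3’s `put_bucket_encryption` call. The helper names are hypothetical; the request shape (a `ServerSideEncryptionConfiguration` with an `ApplyServerSideEncryptionByDefault` rule using `AES256` or `aws:kms`) follows the S3 API. boto3 is imported inside the function so the config builder can be tried without AWS credentials.

```python
from typing import Optional


def default_encryption_config(kms_key_id: Optional[str] = None) -> dict:
    """Build the ServerSideEncryptionConfiguration for PutBucketEncryption.

    Uses SSE-S3 (AES256) unless a KMS key is supplied, in which case SSE-KMS.
    """
    if kms_key_id:
        rule = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id}
    else:
        rule = {"SSEAlgorithm": "AES256"}
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": rule}]}


def enable_default_encryption(bucket: str, kms_key_id: Optional[str] = None) -> None:
    """Turn on default encryption for a bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the config builder works without it

    boto3.client("s3").put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=default_encryption_config(kms_key_id),
    )
```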

If you’ve already got some logs in an S3 bucket and they aren’t yet encrypted, don’t worry. S3 has a couple of tools that let you encrypt existing objects quickly and easily.

Encryption through Batch Operations

One tool is S3 Batch Operations, S3’s mechanism for performing operations on billions of objects at a time. Simply give S3 Batch Operations a list of the log files you want to encrypt and the operation to run, and the service performs it on every object in the list.

Encryption can be achieved with the Copy operation: each unencrypted object is copied onto itself, at the same bucket and key, with encryption turned on.
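Batch Operations jobs read their object list from a manifest, and one supported format is a CSV file with the bucket name and object key on each row, with keys URL-encoded. A minimal Python sketch for generating one is below; `batch_manifest` is a hypothetical helper, and creating the job itself (with the Copy operation and encryption settings) is not shown.

```python
import csv
import io
from urllib.parse import quote


def batch_manifest(bucket: str, keys: list[str]) -> str:
    """Build a CSV manifest (bucket,key per row) for an S3 Batch Operations job.

    Object keys are URL-encoded; "/" is left as-is by quote()'s default.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for key in keys:
        writer.writerow([bucket, quote(key)])
    return buffer.getvalue()
```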

Encryption through Copy Object API

An alternative tool is the Copy Object API. It works by copying a single object back to itself with server-side encryption (SSE) enabled, and it can be run using the AWS CLI.
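In boto3, the same in-place copy maps onto `copy_object`. The sketch below assumes SSE-S3 (`AES256`) and keeps the object’s existing metadata with `MetadataDirective="COPY"`; the helper names are hypothetical. A single CopyObject request handles objects up to 5 GB, so larger files need a multipart copy.

```python
def in_place_encrypt_kwargs(bucket: str, key: str) -> dict:
    """Arguments for a boto3 copy_object call that rewrites an object onto itself.

    Changing the encryption makes the self-copy legal, and
    MetadataDirective="COPY" keeps the existing user metadata.
    """
    return {
        "Bucket": bucket,
        "Key": key,
        "CopySource": {"Bucket": bucket, "Key": key},
        "ServerSideEncryption": "AES256",
        "MetadataDirective": "COPY",
    }


def encrypt_in_place(bucket: str, key: str) -> None:
    """Re-encrypt one object in place (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the kwargs builder works without it

    boto3.client("s3").copy_object(**in_place_encrypt_kwargs(bucket, key))
```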

Although Copy Object is a powerful tool, it’s not without risks. You’re effectively replacing your existing log files with encrypted versions, so make sure all the requisite information and metadata is preserved by the copy.

For example, if you use the AWS CLI to copy log files larger than its multipart_threshold value, the copy is done in parts and the metadata isn’t copied by default. In this case, you need to specify the metadata you want with the --metadata parameter.
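When the copy goes through the AWS CLI, a sketch of building the command might look like this. `aws s3 cp`, `--sse`, and `--metadata` are real CLI options, and 8 MB is the CLI’s default `multipart_threshold`; the `cp_encrypt_command` helper is hypothetical and assumes metadata values contain no commas or equals signs. Newer CLI releases may handle metadata on multipart copies differently, so check the docs for your version.

```python
MULTIPART_THRESHOLD = 8 * 1024 * 1024  # AWS CLI default multipart_threshold (8 MB)


def cp_encrypt_command(bucket: str, key: str, size: int, metadata: dict[str, str]) -> list[str]:
    """Build an `aws s3 cp` argv that re-encrypts an object in place.

    Above the multipart threshold, the metadata is passed explicitly with
    --metadata (shorthand key=value,key=value) instead of relying on the copy.
    """
    uri = f"s3://{bucket}/{key}"
    command = ["aws", "s3", "cp", uri, uri, "--sse", "AES256"]
    if size > MULTIPART_THRESHOLD and metadata:
        pairs = ",".join(f"{k}={v}" for k, v in sorted(metadata.items()))
        command += ["--metadata", pairs]
    return command
```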

Integrating S3 Buckets with Coralogix

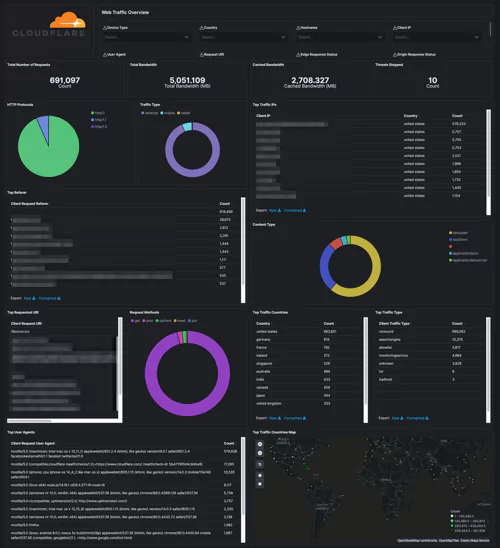

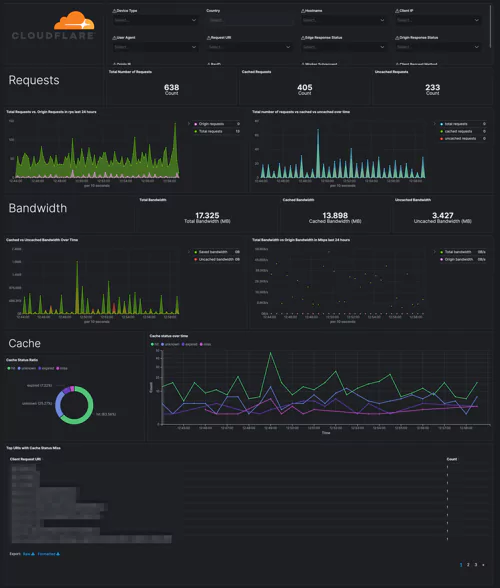

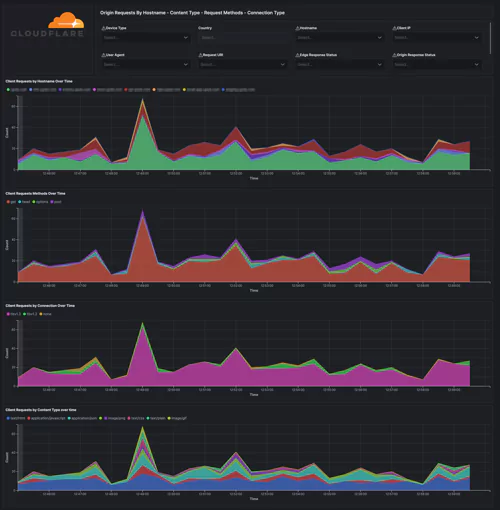

Hooray! Your logs are now firmly in the cloud with S3. Now, all you need to do is analyze them. Coralogix can help you do this with the S3 to Coralogix Lambda.

This is an AWS Lambda function that sends log data from your S3 bucket to Coralogix, where the full power of machine learning can be applied to uncover insights. To use it, you need to define five parameters.

S3BucketName specifies the name of the S3 bucket storing the logs you want to send.

ApplicationName is a mandatory metadata field that is sent with each log and helps to classify it.

CoralogixRegion is the region in which your Coralogix account is located. CoralogixRegion can be Europe, US or India, depending on whether your Coralogix URL ends with .com, .us or .in.

PrivateKey is your Coralogix private key, which you can find in your Coralogix account under Settings -> Send your logs, in the upper left corner.

SubsystemName is a mandatory metadata field that is sent with each log and helps to classify it.
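A minimal sketch of collecting and sanity-checking these five parameters in Python follows. The field names mirror the parameter names above (hence the non-Pythonic capitalization); the region strings and the URL-suffix lookup encode the mapping described for CoralogixRegion, but the exact values the Lambda accepts are an assumption, so check its documentation.

```python
from dataclasses import dataclass

# Assumed region values, keyed by the ending of your Coralogix URL
REGION_BY_SUFFIX = {".com": "Europe", ".us": "US", ".in": "India"}


def region_from_url(url: str) -> str:
    """Pick the CoralogixRegion value from an account URL's host suffix."""
    host = url.split("://", 1)[-1].split("/", 1)[0]
    for suffix, region in REGION_BY_SUFFIX.items():
        if host.endswith(suffix):
            return region
    raise ValueError(f"unrecognised Coralogix URL: {url}")


@dataclass
class LambdaParameters:
    """The five parameters the S3 to Coralogix Lambda needs."""
    S3BucketName: str
    ApplicationName: str
    CoralogixRegion: str
    PrivateKey: str
    SubsystemName: str

    def validate(self) -> None:
        """Reject unknown regions and empty mandatory fields."""
        if self.CoralogixRegion not in REGION_BY_SUFFIX.values():
            raise ValueError(f"unknown region: {self.CoralogixRegion}")
        for name in ("S3BucketName", "ApplicationName", "PrivateKey", "SubsystemName"):
            if not getattr(self, name).strip():
                raise ValueError(f"{name} must not be empty")
```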

The S3 to Coralogix Lambda can be integrated with AWS’s automation tooling through the AWS Serverless Application Model (SAM). SAM is an AWS framework for building serverless applications that provides shorthand syntax for declaring resources such as functions and APIs.
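If you deploy the Lambda’s SAM template yourself, the five parameters can be passed on the command line. This sketch only builds the argument list for `sam deploy`; `--stack-name`, `--resolve-s3`, `--capabilities`, and `--parameter-overrides` are real SAM CLI options, while the helper, the stack name, and having the template checked out locally are assumptions.

```python
def sam_deploy_command(params: dict[str, str], stack_name: str) -> list[str]:
    """Build a `sam deploy` argv that passes each template parameter as Key=Value.

    Assumes it is run from the directory holding the Lambda's SAM template and
    that parameter values contain no spaces.
    """
    overrides = [f"{key}={value}" for key, value in params.items()]
    return [
        "sam", "deploy",
        "--stack-name", stack_name,
        "--resolve-s3",  # let SAM create a bucket for packaged artifacts
        "--capabilities", "CAPABILITY_IAM",  # assumed: the template creates an IAM role
        "--parameter-overrides", *overrides,
    ]
```

Once the values are filled in, the list can be handed to `subprocess.run(command, check=True)`.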

The code for the Lambda is also available at the S3 to Coralogix Lambda GitHub. As with Logstash’s S3 plugin, it’s open source under the Apache 2.0 License, so you can use, modify, and redistribute it freely as long as you follow the license’s notice and attribution conditions.

To Conclude

Software as a Service is a paradigm that is transforming every part of the IT sector, including DevOps. It replaces difficult-to-configure on-premises architecture with uniform and consistent services that remove scalability from the list of an end user’s concerns.

Unfortunately, SaaS observability tooling is still behind the curve, largely because organizations are still maintaining a plethora of systems (and therefore a variety of formats) on-prem.

Storing your logs in S3 lets you bring the power and convenience of SaaS to log collection. Once your logs are in S3, you can leverage Coralogix’s machine learning analytics to extract insights and predict trends.