Google is known for giving developers difficult and quirky brain teasers during the hiring process. They’re specifically designed to filter out all but the top 1% of coders with the ability to solve complex programming problems.

In the 2013 film The Internship, Owen Wilson and Vince Vaughn pit their wits against Google’s hiring manager for a chance to win one of Google’s coveted internships. They had to work out how to escape from a blender if they’d been shrunk down to the size of flies and put inside. To this, Owen Wilson responded, “physics scares me.” He was not what you might call a 10x dev.

The Mythical 10x Dev

Techopedia defines a 10x dev as “an individual who is thought to be as productive as 10 others in his or her field. The 10x developer would produce 10 times the outcomes of other colleagues, in a production, engineering or software design environment.” It’s hardly surprising that every company wants to attract and hire these programmers.

A development team made of these mythical coding unicorns should be able to outperform average development teams by an order of magnitude, potentially leading to massive profits. There’s just one problem with building a business plan around this: it’s completely unreasonable. In practice, the productivity of a software team depends far more on effective practices and culture than on any individual’s abilities. Let’s explore this a bit.

Tar Pits of Code

IBM manager Fred Brooks painted a nightmare picture of how software development could go wrong in his essay The Tar Pit. Tar pits around the world contain the bodies of mammoths and sabre-toothed tigers who blundered in, only to find the tar was a death trap; the more fiercely they struggled to escape, the deeper they sank.

Brooks’ analogy was as pointed as it was chilling. An unavoidable by-product of large software projects is system complexity. He theorized that such projects are like tar pits, and that programmers working on them are in constant danger of becoming trapped. It doesn’t matter how quick they are at solving problems; the rot eventually sets in and development slows to a snail’s pace.

A Tale of Two Companies

About a year ago, I was entrenched in one of Brooks’ tar pits. It was a small software company that had built its codebase on a sturdy – if a little old-fashioned – Java tech stack. On the business side they had some respectable clients who paid them a lot of money. Not only were they keeping the lights on, there was cold beer in the fridge. Unfortunately, this picture of paradise crumbled as soon as anyone tried to do any actual coding.

There was no documentation (the resident 10x devs hadn’t written any), and unit test coverage for the entire codebase was less than 5% (because those same 10x devs didn’t know how to do TDD). The codebase was a monolithic application, so to test any change – even one line of code – you had to build the entire application and deploy it.
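To make that cost concrete, here is a minimal sketch of the kind of unit test that was missing. It is written in Python rather than the company’s Java purely for brevity, and `apply_discount` is a hypothetical function invented for illustration, not anything from that codebase. The point is only that a one-line change to a small, pure function can be checked in milliseconds, with no build and no deploy.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to whole cents.

    Hypothetical example function; any small piece of business logic would do.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


def test_apply_discount() -> None:
    # Each assertion pins down one behaviour, so a one-line change
    # that breaks it is caught immediately on the developer's machine.
    assert apply_discount(1000, 25) == 750
    assert apply_discount(999, 10) == 899  # 899.1 cents rounds down
    assert apply_discount(500, 0) == 500


if __name__ == "__main__":
    test_apply_discount()
    print("all checks passed")
```

Running the file directly, or pointing a test runner such as pytest at it, gives the fast feedback loop that a build-and-deploy cycle on a shared server cannot.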

To top it off there was only one development server. Whenever anyone wanted to deploy a code change, for any reason, they had to holler “deploy to dev” to the entire room and wait for the entire room to give the ok (who said coding wasn’t a spectator sport?).

As a result, productivity was low, very low. Developers with master’s degrees in computer science were writing ten lines of code a day. The rest of their time was spent reading code or reading the server logs. When I was at that company, I couldn’t program my way out of a wet paper bag.

In contrast to this previous experience, I’m now working at a start-up which markets healthcare apps. I’m working entirely remotely, becoming familiar with an entirely new system on the job. The only other guy I talk to is my boss, and communication channels are limited to Slack and Zoom. And I’m doing fine.

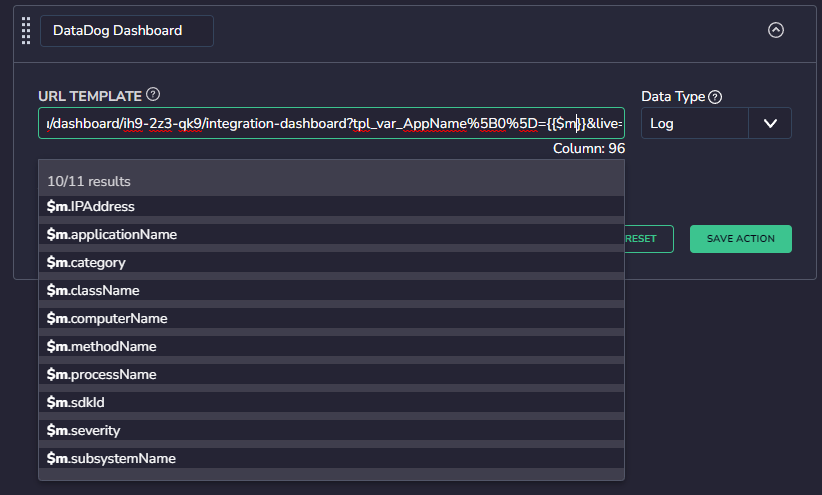

There’s no documentation yet, but that doesn’t bother me. Firebase enables me to deploy my code with a single command, while Node lets me fire it up on my laptop. Chrome DevTools gives me real-time feedback on what my system is doing, meaning I’ve got observability in spades.

What it Takes to Maintain Speed of Software Development

For me, the moral of the previous tale is that tooling and processes matter. Developers often think that their expert knowledge enables them to make even the most cumbersome tools pay dividends. The 2019 State of DevOps report busts this myth, showing that user-friendly tools and processes are just as important for pro devs as they are for end users.

Tooling Up

The fastest software teams use off-the-shelf systems that can be easily learned and require little customization. Developers in these teams can take their toolchain for granted and focus all their efforts on what Martin Fowler called “strategic” software. This is code that enhances the product and makes money for the company.

The explosion in serverless computing, containerisation, and automated pipelines has provided a plethora of commercially available tools for painless feature integration and deployment. State of DevOps findings show 92% of the highest performing software teams use automated build tools. In 2020, there is a wealth of CI/CD pipeline solutions, the most popular being Jenkins, Azure DevOps Services and CircleCI.

Accessible Knowledge Bases

The State of DevOps report also found that easy internal and external search improved productivity. Internal search covers documentation, ticketing systems and easily navigable company codebases. External search includes Google and go-to sites like Stack Overflow. Good documentation and efficient project management frameworks, mediated by tools like Confluence and Jira, increase developer productivity by over 70%.

Trust and Respect

A final point is psychological safety. Developers solve problems most effectively when they are working with people they can trust and respect. Like Thor and his hammer, a dev with the right tools can move the world.