What is real-time data?

Real-time data is information that is collected, processed immediately, and then delivered to users so they can make informed decisions in the moment. Health and fitness wearables such as Fitbits are a prime example, monitoring stats such as heart rate and step count in real time. These readings let both users and health professionals spot existing or potential risks without delay.

In today’s digital age, data is the lifeblood of any business, and real-time data provides firms with granular visibility and insight into factors such as cost inefficiencies, performance levels, and customer habits.

What’s not real-time data?

Data cannot be classed as real-time if, once it has been gathered, it is deliberately held back before it is used.

Examples of data that fall outside the real-time umbrella include emails and posts in a discussion forum. These are not time-bound, so rapid responses are rare, and full resolutions can take hours or even days.

Why is real-time data collection necessary for financial security?

Financial companies are tasked with protecting private and sensitive financial data for individuals and businesses alike.

Finance is one of the most targeted industries, with 350,000 sensitive files exposed, on average, in each individual cyber-attack. And this figure does not account for other threats to financial data systems, such as fraud and bank account theft.

Robust cyber and anti-fraud controls are paramount to help bolster financial security at banks, insurance companies, and other financial institutions. This is where real-time processing helps organizations obtain the business intelligence they need to react to security threats.

From credit risk assessments to detecting abnormal spending patterns and preventing data manipulation, real-time analytics allow firms to make quick data-driven decisions to ensure security defenses remain watertight.

Five benefits of real-time financial data

Implementing real-time financial data analytics doesn’t happen overnight. It takes time, effort, and patience. However, it can help financial institutions evolve across many facets of their operations, including combating fraud, enhancing forecasting accuracy, and building stronger client relationships.

Below we’ve listed five key advantages that real-time financial data can bring to organizations.

1. Improve accuracy and forecasting

To develop strategies for the future, financial companies need up-to-date views of significant figures to better understand their current state of affairs and their position in the market.

Making decisions based on last quarter’s numbers is quickly becoming outdated, because stale figures make it difficult to anticipate shifts in the market, manage costs, and plan resources.

By leveraging current data, firms can produce more timely and precise forecasts.

Data and numbers are constantly moving, and they quickly become outdated. No company can expect to get its projections 100% correct all of the time, as external forces that nobody sees coming (such as the COVID-19 pandemic) can upend expectations.

However, real-time data gives firms a reliable starting point, so they are better equipped to make accurate decisions about where to allocate funds, cut spending, and maximize ROI (return on investment).

2. Enhance business performance

Real-time data allows businesses to evaluate their organizational efficiency, improve workflows, and iron out issues at any given moment. In other words, a clear and current overview of the business lets them take a proactive approach: companies can seize opportunities or recognize problems as (or even before) they arise.

Empowering employees with real-time insights means they can drill down into customer behaviors, financial histories, and spending patterns to deliver a personalized service.

A well-oiled finance machine goes well beyond anticipating customer demands and preferences, however. Financial businesses rely on efficient IT infrastructures to keep track of organizational assets and prioritize security. Real-time analytics helps firms establish a common operational picture that reduces downtime and improves the bottom line.

3. Upgrade strategic decisions

Traditionally, the decision-making process has lagged behind the actual information stored in financial data systems. Real-time data enriches this process by letting businesses form purposeful judgments based on the most current information possible.

Employees can make decisions confidently, particularly when looking ahead and matching what they know about the business with emerging industry trends and challenges in the wider market.

What does data tell a business? What does it highlight? Firms need reliable, up-to-the-minute answers so they can head in the right direction. Reading real-time figures alongside historical data bridges the gap between the two and gives a deeper understanding that informs better outcomes.

4. Utilize up-to-date reporting

Real-time reporting saves time for everyone. Businesses can give their clients access to the most up-to-date financial data at any time, which, in turn, builds greater trust and transparency.

Live reporting also means that businesses can wave goodbye to the monotony of manual work and rigid deadlines for running off reports. Real-time reporting automates data collection, freeing staff to work on more pressing tasks or issues.

Not only can organizations review data at the click of a button, but they also benefit from consistent, accessible, and accurate data.

5. Detect fraud faster

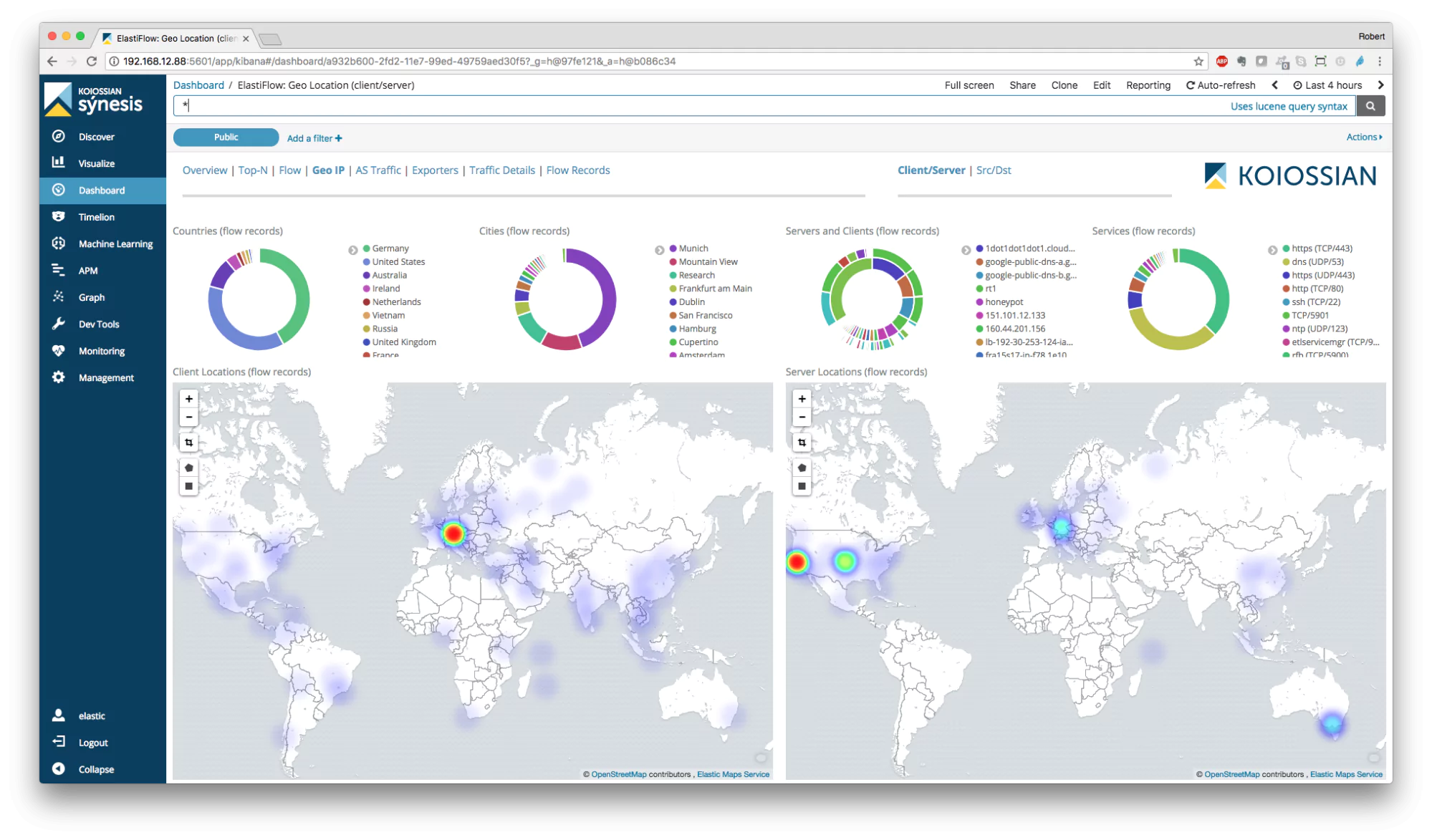

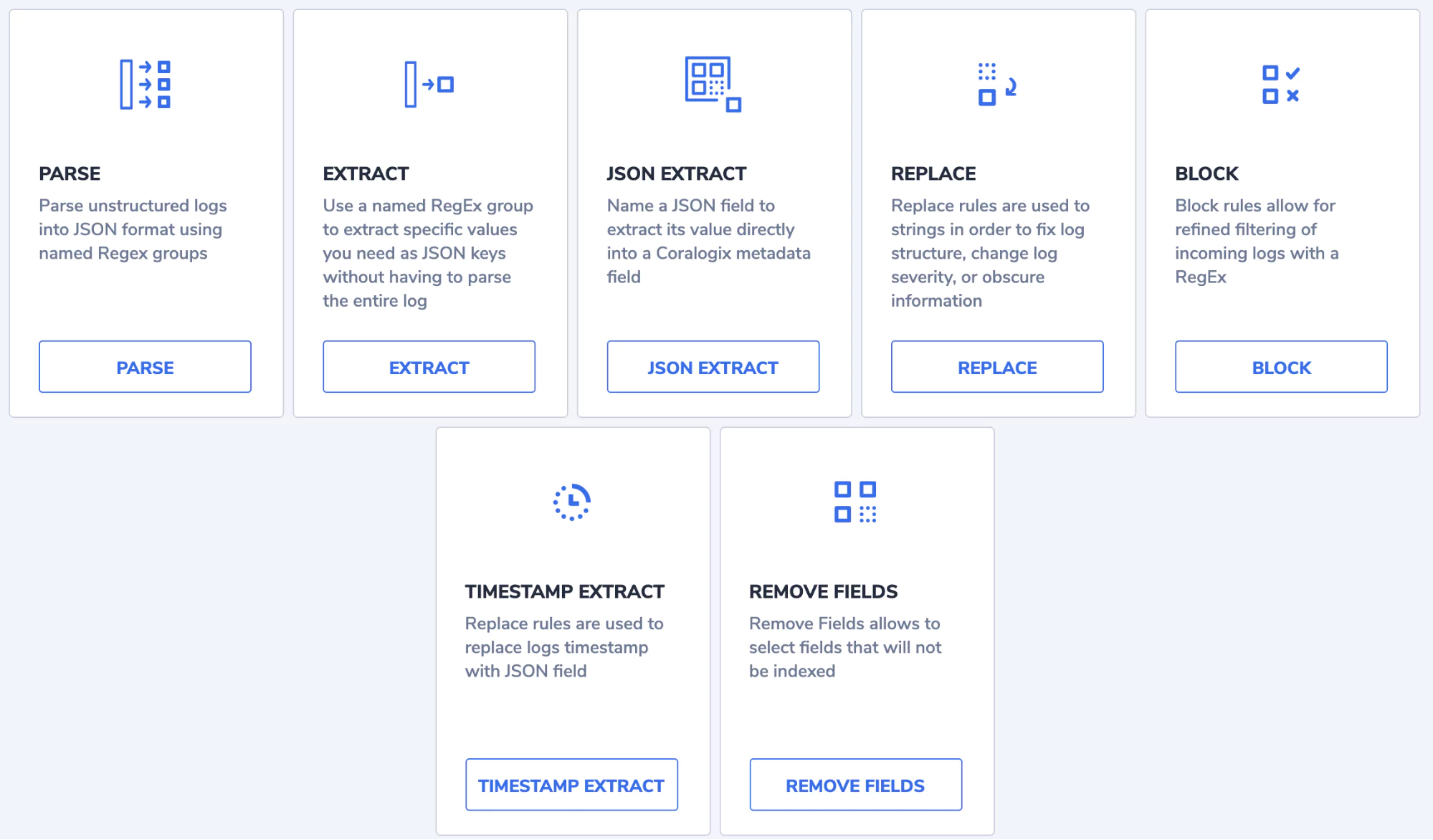

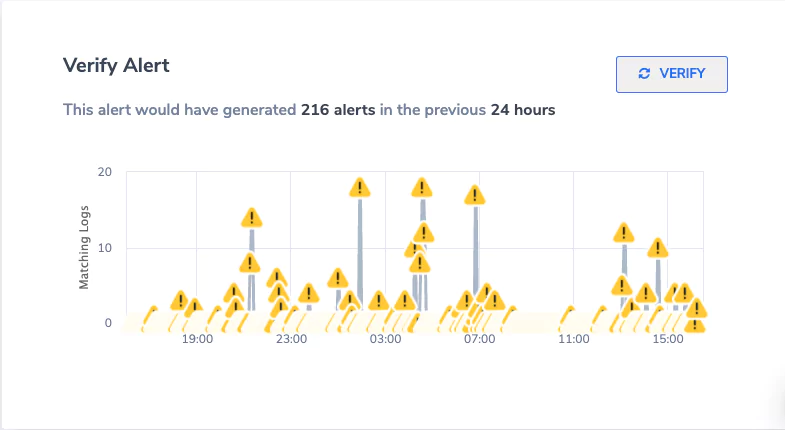

Real-time data insights help firms get on top of fraudulent scams and transactions before it’s too late. Fraud attacks are becoming increasingly sophisticated and prevalent, so financial companies need to act on data as it is synthesized in real time.

By using data as soon as it’s produced, from a customer’s demographics and purchase history to device intelligence linked with transactional data, finance teams can assess potential fraud more accurately.
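To make “detecting abnormal spending patterns” concrete, here is a minimal sketch of one simple streaming check: each new transaction is compared against that customer’s recent amounts and flagged if it sits more than a few standard deviations from the recent mean. This is a hypothetical illustration, not how any particular bank scores fraud; the `SpendingMonitor` class, the window of 20 transactions, the threshold of 3 standard deviations, the minimum history of 5, and the 1.0 floor on the deviation are all assumptions chosen for readability.

```python
from collections import defaultdict, deque
from statistics import mean, pstdev


class SpendingMonitor:
    """Hypothetical sketch: flag transactions far from a customer's recent spending.

    Window size, threshold and minimum history are illustrative assumptions,
    not values taken from any real fraud system.
    """

    def __init__(self, window=20, threshold=3.0, min_history=5):
        self.threshold = threshold
        self.min_history = min_history
        # One bounded history of recent amounts per customer; old amounts drop off.
        self.history = defaultdict(lambda: deque(maxlen=window))

    def check(self, customer_id, amount):
        """Return True if this transaction looks abnormal, then record it."""
        recent = self.history[customer_id]
        suspicious = False
        if len(recent) >= self.min_history:
            mu = mean(recent)
            # Avoid dividing by zero on a perfectly flat history;
            # the 1.0 floor is an arbitrary assumption.
            sigma = pstdev(recent) or 1.0
            suspicious = abs(amount - mu) / sigma > self.threshold
        recent.append(amount)
        return suspicious


monitor = SpendingMonitor()
for amt in [42.0, 38.5, 45.0, 40.0, 41.5, 39.0]:
    monitor.check("cust-1", amt)  # builds up normal history, none flagged
print(monitor.check("cust-1", 2500.0))  # far outside recent spending
```

Because each transaction is scored the moment it arrives, using only a small rolling window, the check can run inline as payments stream in. Real systems combine many more signals (device, location, merchant), which is exactly the linking of intelligence described above.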

Trends to watch in real-time financial data

Banks will focus on monetizing real-time payments

Customers want everything at speed but without a drop-off in efficiency. This is why real-time payments are one of the fastest-moving developments in the financial industry.

One emerging channel is Request to Pay (RTP). With this method, payments can be fast-tracked, removing the need for customers to enter their credit or debit card details every time they shop online.

RTP is essentially a messaging service that lets payees request payment for a bill rather than send an invoice. If the payer approves the request, a real-time credit to the payee is initiated. Customers can view their balance in real time and avoid surprise transactions or unwanted overdraft charges.
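The request-then-approve flow can be sketched as a tiny state machine. This is a simplified, hypothetical model of the steps described above, not the message format or rules of any real Request to Pay scheme: the `PaymentRequest` and `Account` classes, their fields, and the rule that refuses a payment rather than overdraw the payer are assumptions. Amounts are held in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    DECLINED = "declined"


@dataclass
class Account:
    owner: str
    balance_cents: int


@dataclass
class PaymentRequest:
    """Hypothetical: a payee's request for payment, sent instead of an invoice."""
    payee: Account
    payer: Account
    amount_cents: int
    reference: str
    status: Status = Status.PENDING

    def approve(self):
        """Payer accepts: funds move at once, so both balances update immediately."""
        if self.status is not Status.PENDING:
            raise ValueError("request has already been answered")
        if self.payer.balance_cents < self.amount_cents:
            # Assumed rule: refuse rather than push the payer into an overdraft.
            raise ValueError("insufficient funds")
        self.payer.balance_cents -= self.amount_cents
        self.payee.balance_cents += self.amount_cents
        self.status = Status.APPROVED

    def decline(self):
        """Payer refuses: no money moves."""
        if self.status is not Status.PENDING:
            raise ValueError("request has already been answered")
        self.status = Status.DECLINED


utility = Account("Energy Co", 0)
customer = Account("Alex", 12_000)
bill = PaymentRequest(payee=utility, payer=customer, amount_cents=8_500,
                      reference="March energy bill")
bill.approve()
print(customer.balance_cents, utility.balance_cents)
```

The key design point mirrors the description: nothing moves until the payer explicitly approves, and approval credits the payee in the same step, so the payer’s balance is never out of date.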

Instant cross-border payments

Belgium-headquartered payments solution provider SWIFT helps banks meet global demand for instant and frictionless cross-border payments. One of the main issues with cross-border payments has been long processing times, as a single payment often passes through more than one bank.

SWIFT GPI enables banks to provide end-to-end payment tracking to their customers. More than 1,000 banks have joined the service to set a standard of speed, tracking, and transparency that matches the trouble-free experience businesses and consumers enjoy when making domestic real-time payments.

Final thoughts

In the past decade, the financial industry has progressed rapidly. Companies that neglect the opportunity to implement real-time analytics will miss out on making calculated business decisions, reducing complexity, and managing risk more effectively.

As John Mitchell, CEO of next-generation payments software technology provider Episode Six, points out: “Data in and of itself is not necessarily the king. Rather, it is what organizations can do with the knowledge and insight the data provides that makes it key.”