Why do organizations invest in data observability?

Because it adds value. Sometimes we forget this when we’re building our observability solutions. We get so excited about what we’re tracking that we can lose sight of why we’re tracking it.

Technical metrics reveal how systems react to change. What they don’t give is a picture of how change impacts the broader business goals. The importance of qualitative data in business observability is often overlooked.

Data-driven insights that include only technical or quantitative modeling miss the big picture. Pulling data from holistic sources unlocks the full power of business observability.

Including holistic data collectors in your observability stack grants visibility not just into what’s happening, but why it happened, and the impact it has on your business outside of your systems.

What are Holistic Data Collectors?

Holistic data collectors draw from unusual or uncommon sources. They log information that wouldn’t usually be tracked, enabling businesses to get a holistic view of their systems. A holistic view means observability of all interconnected components.

A data strategy that includes the collection of holistic data empowers effective business observability. By logging these hidden pockets of data, much clearer insight can be generated, and data-backed strategic decisions become much better informed.

The list of data sources that can be included is potentially limitless. With the proper application of collectors, any communication platform or user service can become a source of data and insight. Holistic data collectors exist for code repositories such as GitHub, collaboration tools like Teams or Slack, and even marketing data aggregators such as Tableau.

When and How to Use Holistic Data Collection

With some creative thinking and technical know-how, almost anything can be a source of holistic data. Common business tools, software, and platforms can be mined for useful data and analysis.

Below are some examples that illustrate the usefulness of this approach to data-driven insight.

Microsoft Teams

Microsoft Teams has become a vital communication channel for the modern enterprise. As one of the most popular internal communication platforms on the market, Teams can be an invaluable source of holistic data from within your workforce.

Integrating webhooks into Teams enables you to monitor and track specific activity. A webhook is a simple HTTP callback, usually carrying a JSON payload. Webhooks are one of the simplest ways to connect your systems to an externally hosted channel such as Teams.

Pushing holistic, qualitative Teams data to a third party platform using webhooks enables correlation of non-numeric insight with finance and marketing figures and system metrics.
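As a minimal sketch of this idea, the snippet below flattens a webhook-style JSON body into a single log record that a log analytics platform could ingest. The payload shape and field names (`channel`, `from`, `text`) are assumptions made for illustration, not the actual Teams webhook schema, and `normalize_chat_event` is a hypothetical helper.

```python
import json
from datetime import datetime, timezone


def normalize_chat_event(raw_body: str, source: str = "teams") -> dict:
    """Turn a webhook JSON body into a flat log record.

    The field names read here are hypothetical; adapt them to the
    payload your webhook actually delivers.
    """
    payload = json.loads(raw_body)
    return {
        "source": source,
        "received_at": datetime.now(timezone.utc).isoformat(),
        "channel": payload.get("channel", "unknown"),
        "author": payload.get("from", {}).get("name", "unknown"),
        "text": payload.get("text", "").strip(),
    }


# Example body, invented for illustration.
example_body = json.dumps({
    "channel": "it-incidents",
    "from": {"name": "A. Engineer"},
    "text": "  Checkout API is timing out again  ",
})
record = normalize_chat_event(example_body)
print(json.dumps(record, indent=2))
```

Keeping the record flat and tagging it with a `source` field is one way to make chat messages easy to correlate with finance, marketing, and system metrics later on.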

As Teams is most often used internally, this is an invaluable asset for understanding how changes to your organization are reflected in the morale of your staff. Many developers use Teams to discuss issues and outages. Having visibility of your IT team’s responses identifies which tasks take up most of their time and what knowledge blind spots exist in their collective technical skillset.

PagerDuty

PagerDuty is transforming the way IT teams deal with incidents. Integrating holistic data collectors greatly expands the level of insight gained from this powerful tool.

Highly specific criteria on alerts and push notifications enable effective prioritizing of support. IT teams can manage large sets of alerts without risking an overwhelming number of notifications.

As with Teams, webhooks are one of the most common and simplest methods of collecting holistic data from PagerDuty. By collecting enriched event data around incidents, outages and the way your IT teams respond to them can be analyzed in the context of the wider business organization.
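One way to analyze how teams respond is to derive response times from collected incident events. The sketch below assumes each event has already been reduced to a hypothetical record with `incident_id`, `event`, and `at` fields; these names are illustrative and are not PagerDuty's webhook schema.

```python
from datetime import datetime


def response_times(events: list[dict]) -> dict[str, dict[str, float]]:
    """Return minutes-to-acknowledge and minutes-to-resolve per incident."""
    by_incident: dict[str, dict[str, datetime]] = {}
    for e in events:
        stamps = by_incident.setdefault(e["incident_id"], {})
        at = datetime.fromisoformat(e["at"])
        # Keep the earliest timestamp seen for each event type.
        if e["event"] not in stamps or at < stamps[e["event"]]:
            stamps[e["event"]] = at

    result: dict[str, dict[str, float]] = {}
    for incident_id, stamps in by_incident.items():
        if "triggered" not in stamps:
            continue  # cannot measure without a start time
        start = stamps["triggered"]
        metrics: dict[str, float] = {}
        for name, key in (("acknowledged", "minutes_to_ack"),
                          ("resolved", "minutes_to_resolve")):
            if name in stamps:
                metrics[key] = (stamps[name] - start).total_seconds() / 60
        result[incident_id] = metrics
    return result


# Hypothetical events, invented for illustration.
events = [
    {"incident_id": "INC-1", "event": "triggered", "at": "2024-03-01T09:00:00"},
    {"incident_id": "INC-1", "event": "acknowledged", "at": "2024-03-01T09:04:00"},
    {"incident_id": "INC-1", "event": "resolved", "at": "2024-03-01T09:49:00"},
    {"incident_id": "INC-2", "event": "triggered", "at": "2024-03-01T10:00:00"},
    {"incident_id": "INC-2", "event": "acknowledged", "at": "2024-03-01T10:15:00"},
]
times = response_times(events)
print(times)
```

Metrics like these can then sit alongside business data, so a slow response can be read against what else was happening in the organization at the time.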

GitHub

Scraping GitHub provides great insight into the performance of your dev teams. What’s not as widely known is the business insight that can be gained by correlating GitHub commits on repositories.

A commit is a recorded change to the code in a repository. Each commit comes with a commit message and an entry in the log. Keeping GitHub commits under the eye of your monitoring stack reveals a fascinating hidden pocket of data that could change the way your teams approach outages and troubleshooting.

Outages occur for many reasons. Some require more work and code changes than others. Bad or lazy coding creates a lot of outages. Tracking and logging GitHub commits will reveal both the kinds of outages and the specific chunks of code that take up the most time for your engineers.
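To illustrate, the sketch below counts how often each file is touched by fix-related commits. It assumes commits have already been collected into simple records with `message` and `files` fields, and that fix work can be recognized by keywords in the commit message. Both the record shape and the keyword list are assumptions for illustration.

```python
from collections import Counter

# Assumed convention: fix-related commits mention one of these words.
FIX_KEYWORDS = ("fix", "hotfix", "outage", "revert")


def hotspot_files(commits: list[dict], keywords=FIX_KEYWORDS) -> list[tuple[str, int]]:
    """Rank files by the number of fix-related commits that touched them."""
    counts: Counter = Counter()
    for commit in commits:
        message = commit["message"].lower()
        # Substring matching is deliberately crude; it will also match
        # words such as "prefix", so treat the result as a rough signal.
        if any(word in message for word in keywords):
            counts.update(set(commit["files"]))
    return counts.most_common()


# Hypothetical commit records, invented for illustration.
commits = [
    {"message": "Fix timeout in checkout", "files": ["checkout/api.py", "checkout/db.py"]},
    {"message": "Add dark mode", "files": ["ui/theme.css"]},
    {"message": "Hotfix: outage caused by retry loop", "files": ["checkout/api.py"]},
    {"message": "Revert cache change", "files": ["cache/store.py"]},
]
hotspots = hotspot_files(commits)
print(hotspots)
```

Files that keep appearing at the top of this ranking are candidates for the chunks of code that take up the most engineering time.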

GitOps

Monitoring GitOps declarations and version edits pinpoints not only where weaknesses exist at an architectural level, but when they were implemented and whether the problem is isolated or part of a wider trend.

Tableau

Tableau is an invaluable source of marketing data. Integrating Tableau with your log analytics platform opens up insight that neither provides alone.

Digital marketing is an essential business aspect of modern enterprises. An effective digital marketing presence is key to success, and Tableau is the go-to tool for many organizations.

On its own, Tableau is useful for market strategy. It’s when Tableau data is included as part of a wider, holistic picture that organizational intelligence and insight can be gained from it. Scraping Tableau for data such as page bounce rates and read time allows you to see how technical measurements correlate with your marketing metrics.

Silo-Free Reporting

Say you’ve experienced a sudden dip in online sales despite an advertising drive. Your first reaction could be to blame the marketing material.

By including Tableau data in your analytics and reporting you can see that the advertising campaign was successful. Your website had a spike in visitors. This spike in traffic led to lag and a poor customer experience, visible due to an equally large spike in bounce rate.

Scraping Tableau as a source of holistic data reveals that the sales dip was down to issues with your systems. Your strategy can then be to improve your systems so they can keep up with your successful marketing and large digital presence.
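The reasoning in this scenario can be sketched as a simple correlation check between marketing and system series. The hourly figures below are invented for illustration, and `pearson` is a small helper written here rather than part of any Tableau or Coralogix API.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Hypothetical hourly data: marketing metrics next to a system metric.
visitors = [1000, 1200, 5000, 5200, 1100]
latency_ms = [200, 220, 900, 950, 210]
bounce_rate = [0.30, 0.31, 0.70, 0.72, 0.30]

print("visitors vs latency:", round(pearson(visitors, latency_ms), 3))
print("latency vs bounce rate:", round(pearson(latency_ms, bounce_rate), 3))
```

A strong positive correlation between traffic, latency, and bounce rate would support the reading that the campaign worked and the systems struggled. Correlation alone does not prove the cause, so it is best treated as a prompt for closer investigation.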

Jira

Integrating your analytics and monitoring platform with Jira can yield powerful results, both in alerting capabilities and collecting insight-generating data.

Using webhooks, your integrated platform can create Jira tickets based on predefined criteria. These criteria can be defined based on data from other sources in your ecosystem, as the issue is being raised and pushed from a third party platform.
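As a rough sketch of what "predefined criteria" might look like in practice, the snippet below builds a ticket payload only when an alert crosses a threshold. The alert fields, the `OPS` project key, and the 5% threshold are hypothetical. The payload follows the general shape of a Jira REST API v2 create-issue request as we understand it, so check it against the Jira documentation for your version before sending anything.

```python
import json
from typing import Optional


def build_ticket(alert: dict, project_key: str = "OPS",
                 error_rate_threshold: float = 0.05) -> Optional[dict]:
    """Return a create-issue payload if the alert meets the criteria, else None."""
    if alert["error_rate"] < error_rate_threshold:
        return None  # below threshold: no ticket raised
    return {
        "fields": {
            "project": {"key": project_key},
            "summary": f"[{alert['service']}] error rate {alert['error_rate']:.0%}",
            "description": (
                "Raised automatically by the monitoring platform. "
                f"Error rate {alert['error_rate']:.1%} over {alert['window']}."
            ),
            "issuetype": {"name": "Bug"},
        }
    }


# Hypothetical alert, invented for illustration.
alert = {"service": "checkout", "error_rate": 0.12, "window": "the last 15 minutes"}
ticket = build_ticket(alert)
print(json.dumps(ticket, indent=2))
```

The payload is built but deliberately not sent here. In a real integration it would be posted to your Jira instance with appropriate authentication.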

Automating this process enables your IT team to respond both efficiently and quickly. It allows them to concentrate on resolving issues without getting bogged down in logging and pushing the alerts manually.

By having tickets raised by an ML-powered platform with observability over your whole infrastructure, your engineers won’t be blindsided by errors occurring in areas they may not have predicted.

Use Case: Measuring the Business Impact of Third Party Outages with Holistic Collectors

Third party outages are one of the most common reasons for lost productivity and revenue. Many enterprises rely on third party software, systems, or services to deliver their own. Reliance on one or more third parties is the norm for many sectors and industries.

A third party will inevitably experience an outage at some point. There’s no way to avoid this. Even the most reliable provider can fall victim to unforeseen circumstances such as power cuts or emergency server maintenance from time to time.

Third party outages can have huge ramifications. These services are often business-critical, and the financial costs of even brief outages can reach far past the hundred thousand dollar mark.

Lost revenue is one way to measure the impact of such an outage. While financial data helps you understand the initial impact of unforeseen downtime, on its own it doesn’t provide full visibility of long-term consequences or the reaction of the business and consumers.

Collecting holistic data alongside the usual logging metrics helps to fill in the blanks. It allows a business to answer questions like:

- Has this affected our public reputation?

- Are users speaking positively about our response?

- Did we respond faster or slower to this outage than usual?

- Was our response effective at returning us to business as usual (BAU) as soon as possible?

- Is this affecting the morale of our staff?

- How much work is this making for our IT team?

- Has web traffic taken a hit?

This leaves any business or organization in a much better position to control and mitigate the long-term impact of a third party outage.
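Answering questions like those above usually starts with placing events from different collectors on one timeline. The sketch below is a minimal illustration that assumes every collector emits records with hypothetical `source`, `at`, and `note` fields. The sample events are invented.

```python
from datetime import datetime, timedelta


def outage_timeline(events: list[dict], start: datetime, end: datetime,
                    padding_minutes: int = 30) -> list[dict]:
    """Return events near the outage window, in chronological order."""
    window_start = start - timedelta(minutes=padding_minutes)
    window_end = end + timedelta(minutes=padding_minutes)
    in_window = [
        e for e in events
        if window_start <= datetime.fromisoformat(e["at"]) <= window_end
    ]
    return sorted(in_window, key=lambda e: datetime.fromisoformat(e["at"]))


# Hypothetical events from several collectors.
events = [
    {"source": "tableau", "at": "2024-03-01T08:00:00", "note": "traffic normal"},
    {"source": "teams", "at": "2024-03-01T09:06:00", "note": "is the payment provider down?"},
    {"source": "pagerduty", "at": "2024-03-01T09:00:00", "note": "incident triggered"},
    {"source": "github", "at": "2024-03-01T09:40:00", "note": "commit: add fallback provider"},
    {"source": "tableau", "at": "2024-03-01T10:10:00", "note": "bounce rate recovering"},
]
timeline = outage_timeline(
    events,
    start=datetime.fromisoformat("2024-03-01T09:00:00"),
    end=datetime.fromisoformat("2024-03-01T09:49:00"),
)
for e in timeline:
    print(e["at"], e["source"], e["note"])
```

Padding the window on both sides keeps the lead-up to the outage and its aftermath in view, which is where reputational and morale signals tend to show up.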

A study by Deloitte found that third party mismanagement can directly cost businesses as much as $48m. This is before indirect losses are factored in. An outage could have minor immediate financial ramifications but harm long-term prospects through reputational damage. It would be almost impossible to gain the insight to prevent this using financial or systems metrics alone.

A Complete Holistic Data Solution

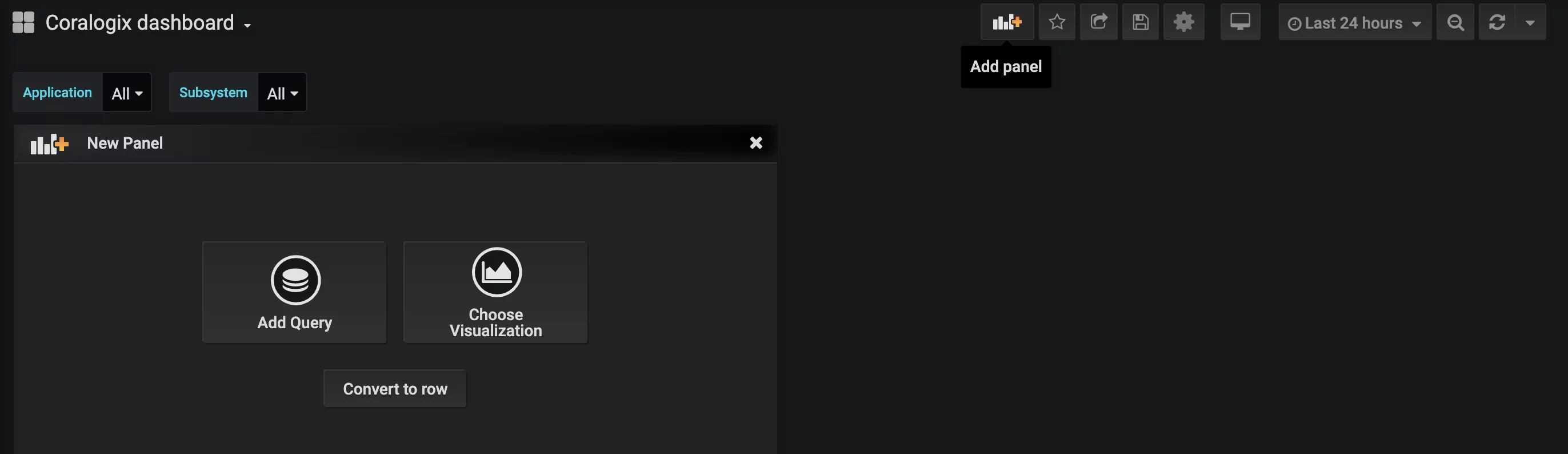

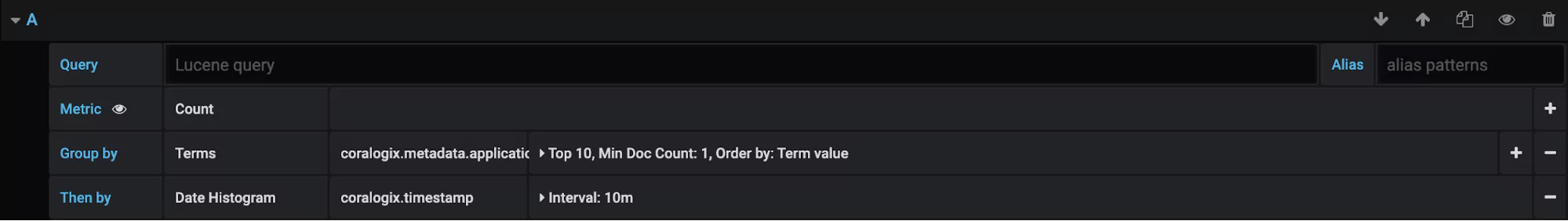

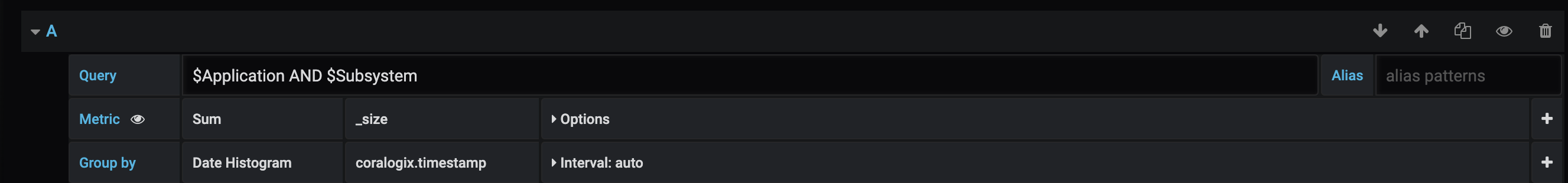

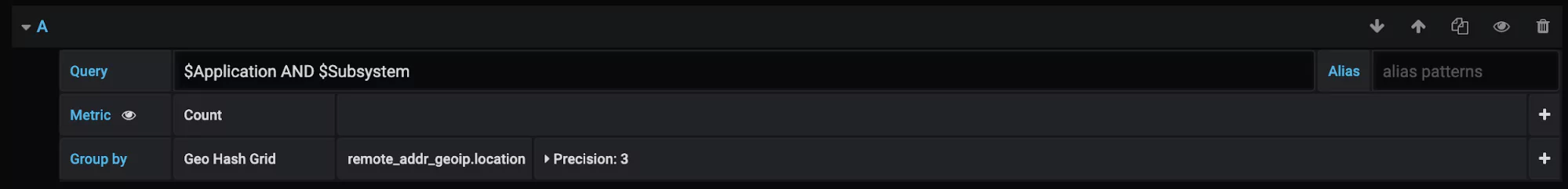

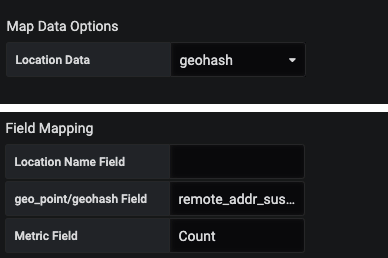

The Coralogix observability platform is a monitoring solution that enables holistic data collection from sources such as Teams, GitHub, PagerDuty, Slack, Tableau, and many others.

Collecting and logging data from multiple sources, both traditional and unorthodox, can be difficult to manage. Business intelligence and organizational insight are difficult to gain if information is stored and reported from dozens of sources in as many formats.

Coralogix’s observability platform provides a holistic, organized view of your systems in a single pane. Using machine learning, our platform creates a comprehensive picture of your technical estate that comes with actionable business context. For visibility and insight that extends beyond the purely technical, the Coralogix platform is the solution your business needs.