Like cloud-native and DevOps, full-stack observability is one of those software development terms that can sound like an empty buzzword. Look past the jargon, and you’ll find considerable value to be unlocked from building observability into each layer of your software stack.

Before we get into the details of observability, let’s take a moment to discuss the context. Over the last two decades, software development and architecture trends have moved away from single-stack, monolithic designs toward distributed, containerized deployments that can leverage the benefits of cloud-hosted, serverless infrastructure.

This provides a range of benefits, but it also creates a more complex landscape to maintain and manage: software breaks down into smaller, independent services that deploy to a mix of virtual machines and containers hosted both on-site and in the cloud, with additional layers of software required to manage automatic scaling and updates to each service, as well as connectivity between services.

At the same time, the industry has seen a shift from the traditional linear build-test-deploy model to a more iterative methodology that blurs the boundaries between software development and operations. This DevOps approach has two main elements.

First, developers have more visibility into, and responsibility for, their code’s performance once released. Second, operations teams are getting involved in the earlier stages of development: defining infrastructure with code, building in shorter feedback loops, and working with developers to instrument code so that it can output signals about how it’s behaving once released.

With richer insights into a system’s performance, developers can investigate issues more efficiently, make better coding decisions, and deploy changes faster.

Observability ties closely into the DevOps philosophy: it plays a central role in providing the insights that inform developers’ decisions, and it depends on addressing, earlier in the development process, matters traditionally owned by ops teams.

What is full-stack observability?

Unlike monitoring, observability is not something you do. Instead, it’s a quality or property of a software system. A system is observable if you can ask questions about the data it emits to gain insight into how it behaves. Whereas monitoring focuses on a predetermined set of questions (such as how many orders were completed or how many login attempts failed), with an observable system you don’t need to define the questions in advance.

Instead, observability means that enough data is collected upfront to let you investigate failures and understand how your software behaves in production, without having to add extra instrumentation to your code and reproduce the issue.

Once you have built an observable system, you can use the data emitted to monitor the current state and investigate unusual behaviors when they occur. Because the data was already collected, it’s possible to look into what was happening in the lead-up to the issue.

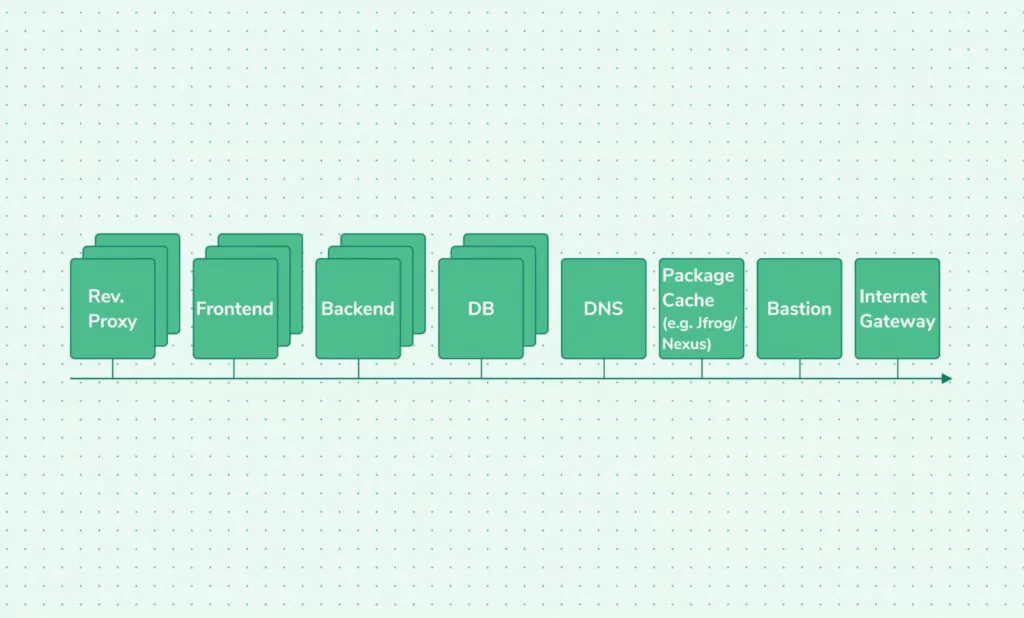

Full-stack observability refers to observability implemented at every layer of the technology stack, from the containerized infrastructure on which your code is running and the communications between the individual services that make up the system, to the backend database, application logic, and web server that exposes the system to your users.

With full-stack observability, IT teams gain insight into the entire functioning of these complex, distributed systems. Because they can search, analyze, and correlate data from across the entire software stack, they can better understand the relationships and dependencies between the various components. This allows them to maintain systems more effectively, identify and investigate issues quickly, and provide valuable feedback on how the software is used.

So how do you build an observable system? The answer is by instrumenting your code to emit signals and collecting that telemetry centrally, so that you can ask questions about how the system is behaving in production and why. The types of telemetry can be broken down into what is known as the “four pillars of observability”: metrics, logs, traces, and security data.

Each pillar provides part of the picture, as we’ll discuss in more detail below. Ensuring these types of data are emitted and collating that information into a single observability platform makes it possible to observe how your software behaves and gain insights into its internal workings.

Deriving value from metrics

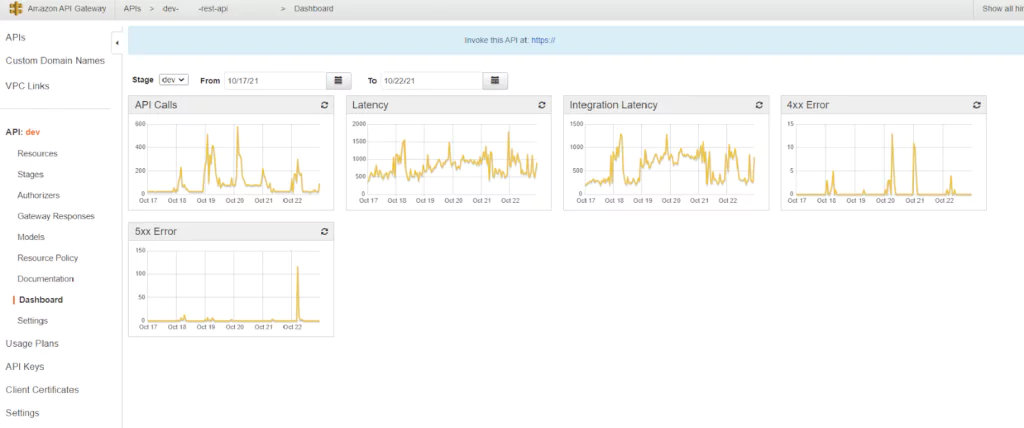

The first of our four pillars is metrics. These are time series of numbers derived from the system’s behavior. Examples of metrics include the average, minimum, and maximum time taken to respond to requests in the last hour or day, the available memory, or the number of active sessions at a given point in time.

The value of metrics is in indicating your system’s health. You can observe trends and identify any significant changes by plotting metric values over time. For this reason, metrics play a central role in monitoring tools, including those measuring system health (such as disk space, memory, and CPU availability) and those which track application performance (using values such as completed transactions and active users).

While metrics must be derived from raw data, the metrics you want to observe don’t necessarily have to be determined in advance. Part of the art of building an observable system is ensuring that a broad range of data is captured so that you can derive insights from it later; this can include calculating new metrics from the available data.
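As a minimal sketch of deriving a metric after the fact, the Python snippet below groups raw request records into fixed time windows and calculates the minimum, average, and maximum response time for each one. The record layout, the sample values, and the `response_time_metrics` helper are all assumptions made for illustration rather than part of any particular monitoring tool.

```python
from statistics import mean

# Hypothetical raw data: (timestamp in seconds, response time in milliseconds).
raw_requests = [
    (0, 120.0),
    (30, 80.0),
    (65, 200.0),
    (90, 100.0),
    (130, 50.0),
]


def response_time_metrics(records, window_seconds=60):
    """Group raw records into fixed windows and derive summary metrics per window."""
    windows = {}
    for timestamp, duration_ms in records:
        # Round the timestamp down to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        windows.setdefault(window_start, []).append(duration_ms)
    return {
        start: {
            "min": min(values),
            "avg": mean(values),
            "max": max(values),
            "count": len(values),
        }
        for start, values in sorted(windows.items())
    }


metrics = response_time_metrics(raw_requests)
for window_start, values in metrics.items():
    print(window_start, values)
```

Because the raw records are retained, the same data could later be regrouped with a different `window_seconds` value or summarized with a different statistic without changing the instrumented code.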

Gaining specific insights with logs

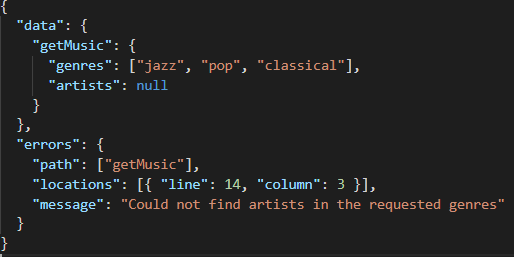

The next source of telemetry is logs. Logs are time-stamped messages produced by software that record what happened at a given point. Log entries might record a request made to a service, the response served, an error or warning triggered, or an unexpected failure. Logs can be produced from every level of the software stack, including operating systems, container runtimes, service meshes, databases, and application code.

Most software (including IaaS, PaaS, CaaS, SaaS, firewalls, load balancers, reverse proxies, data stores, and streaming platforms) can be configured to emit logs, and any software developed in-house will typically have logging added during development. What causes a log entry to be emitted and the details it includes depend on how the software has been instrumented. This means that the exact format of the log messages and the information they contain will vary across your software stack.

In most cases, log messages are classified using logging levels, which control the amount of information that is output to logs. Enabling a more detailed logging level such as “debug” or “verbose” will generate far more log entries, whereas limiting logging to “warning” or “error” means you’ll only get logs when something goes wrong. If log messages are in a structured format, they can more easily be searched and queried, whereas unstructured logs must be parsed before you can manipulate them programmatically.
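To illustrate both points, here is a small sketch using Python’s standard `logging` module. The `JsonFormatter` class, the `checkout` logger name, and the messages are hypothetical; the in-memory stream simply stands in for a log file or shipper.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object (one per line)."""

    def format(self, record):
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


stream = io.StringIO()  # stands in for a log file or log shipper
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")  # hypothetical service name
logger.addHandler(handler)
logger.propagate = False          # keep the example's output in our stream only
logger.setLevel(logging.WARNING)  # only WARNING and above are emitted

logger.debug("cart contents loaded")      # suppressed by the logging level
logger.warning("payment retry required")  # emitted
logger.error("payment failed")            # emitted

# Structured entries can be queried directly, with no text parsing required.
entries = [json.loads(line) for line in stream.getvalue().splitlines()]
errors = [entry for entry in entries if entry["level"] == "ERROR"]
print(errors)
```

Lowering the level to `logging.DEBUG` would cause the first message to be emitted as well, at the cost of a much larger log volume.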

The low-level contextual information in logs makes them helpful for investigating specific issues and failures. For example, you can use logs to determine which requests were made before a database query ran out of memory or which user accounts accessed a particular file in the last week.

Taken in aggregate, logs can also be analyzed to extrapolate trends and detect anomalies, both historical and (assuming the logs are processed quickly enough) in real time. However, checking the logs from each service in a distributed system is rarely practical. To leverage the benefits of logs, you need to collate them from various sources in a central location so they can be parsed and analyzed in bulk.
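The sketch below shows the idea on a very small scale: log entries from two services are merged into a single timeline and then analyzed together. The service names, fields, and messages are invented for the example, and a real platform would ingest the entries over the network rather than from an in-memory dictionary.

```python
import heapq
from collections import Counter

# Hypothetical, already-parsed log entries per service, each sorted by timestamp.
service_logs = {
    "api-gateway": [
        {"ts": 1, "level": "INFO", "msg": "request received"},
        {"ts": 4, "level": "ERROR", "msg": "upstream timeout"},
    ],
    "orders": [
        {"ts": 2, "level": "INFO", "msg": "order created"},
        {"ts": 3, "level": "ERROR", "msg": "database connection lost"},
        {"ts": 5, "level": "ERROR", "msg": "database connection lost"},
    ],
}


def collate(logs_by_service):
    """Merge per-service log streams into one timeline ordered by timestamp."""
    tagged = (
        # Tag every entry with its source so the origin survives the merge.
        [dict(entry, service=name) for entry in entries]
        for name, entries in logs_by_service.items()
    )
    return list(heapq.merge(*tagged, key=lambda entry: entry["ts"]))


timeline = collate(service_logs)

# With everything in one place, bulk analysis becomes a simple aggregation.
errors_per_service = Counter(
    entry["service"] for entry in timeline if entry["level"] == "ERROR"
)
print(errors_per_service.most_common())
```

`heapq.merge` assumes each input stream is already sorted, which is usually true of a single service’s log but would need to be checked if entries can arrive out of order.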

Using traces to add context

While metrics provide a high-level indication of your system’s health and logs provide specific details about what was happening at a given time, traces supply the context. Distributed tracing records the chain of events involved in servicing a particular request. This is especially relevant in microservices, where a request triggered by a user or external API call can result in dozens of child requests to different services to formulate the response.

A trace identifies all the child calls related to the initiating request, the order in which they occurred, and the time spent on each one. This makes it much easier to understand how different types of requests flow through a system, so that you can work out where you need to focus your attention and drill down into more detail. For example, if you’re trying to locate the source of a performance degradation, traces will help you identify where the most time is being spent on a request so that you can investigate the relevant service in more detail.

Implementing distributed tracing requires code to be instrumented so that trace identifiers are propagated to each child request (each unit of work is known as a span), and the details of each span are forwarded to a database for retrieval and analysis.
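As a rough, single-process illustration of that propagation, the sketch below passes a trace ID and parent span ID to each child call and records every finished span in a list that stands in for the span database. The `Span` class, the `run_span` and `call_service` helpers, and the service names are all hypothetical; in a real system the identifiers would travel in request headers and a tracing library would handle the bookkeeping.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Span:
    trace_id: str                    # shared by every span in the same request
    name: str
    parent_id: Optional[str] = None  # None marks the root span
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    start: float = 0.0
    end: float = 0.0

    @property
    def duration(self) -> float:
        return self.end - self.start


collected: List[Span] = []  # stand-in for the span database


def run_span(name, trace_id, parent_id, work):
    """Time a unit of work and record it as a span once it finishes."""
    span = Span(trace_id=trace_id, name=name, parent_id=parent_id)
    span.start = time.perf_counter()
    try:
        return work(span)
    finally:
        span.end = time.perf_counter()
        collected.append(span)


def call_service(name, parent, seconds):
    # Propagate the trace ID and the caller's span ID to the child request.
    run_span(name, parent.trace_id, parent.span_id, lambda _span: time.sleep(seconds))


def handle_checkout(span):
    call_service("inventory-service", span, 0.01)
    call_service("payment-service", span, 0.03)


trace_id = uuid.uuid4().hex
run_span("checkout", trace_id, None, handle_checkout)

root = next(s for s in collected if s.parent_id is None)
children = [s for s in collected if s.parent_id == root.span_id]
slowest = max(children, key=lambda s: s.duration)
print(f"slowest child of {root.name}: {slowest.name}")
```

Because every span carries the same trace ID and a pointer to its parent, the full call tree can be reconstructed later and the slowest branch identified without inspecting each service separately.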

Adding security data to the picture

The final element of the observability puzzle is security data. Whereas the first three pillars represent specific types of telemetry, security data refers to a range of data, including network traffic, firewall logs, audit logs, security-related metrics, and information about potential threats and attacks from security monitoring platforms. As a result, security data is both broader than the first three pillars, because it spans several types of telemetry, and narrower, because it is defined by a single concern.

Security data merits inclusion as a pillar in its own right because of the crucial importance of defending against cybersecurity attacks for today’s enterprises. In the same way that the importance of building security into software has been highlighted by the term DevSecOps, including security as a pillar in its own right serves to highlight the role that observability plays in improving software security and the value to be had from bringing all available data into a single platform.

As with metrics, logs, and traces, security data comes from multiple sources. One of the side effects of the trend towards more distributed systems is an increase in the potential attack surface. With application logic and data spread across multiple platforms, the network connections between individual containers and servers and across public and private clouds have become another target for cybercriminals. Collating traffic data from various sources makes it possible to analyze that data more effectively to detect potential threats and investigate issues efficiently.

Using an observability platform

While these four types of telemetry provide valuable data, using each in isolation will not deliver the full benefits of observability. To efficiently answer questions about how your system is performing, you need to bring the data together into a single platform that allows you to make connections between data points and understand the complete picture. This is how an observability platform adds value.
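One way to picture “making connections between data points” is joining different telemetry types on a shared identifier. The sketch below assumes that spans and log entries both carry a `trace_id` field, which is a common convention but not something every system provides; the records and the `explain_slow_traces` helper are invented for illustration.

```python
from collections import defaultdict

# Hypothetical telemetry that has already been collected in one place.
spans = [
    {"trace_id": "t1", "service": "checkout", "duration_ms": 950},
    {"trace_id": "t1", "service": "payments", "duration_ms": 900},
    {"trace_id": "t2", "service": "checkout", "duration_ms": 40},
]
logs = [
    {"trace_id": "t1", "service": "payments", "level": "ERROR",
     "msg": "card processor timeout"},
    {"trace_id": "t2", "service": "checkout", "level": "INFO",
     "msg": "order confirmed"},
]


def correlate(spans, logs):
    """Group spans and log entries by the trace they belong to."""
    by_trace = defaultdict(lambda: {"spans": [], "logs": []})
    for span in spans:
        by_trace[span["trace_id"]]["spans"].append(span)
    for entry in logs:
        by_trace[entry["trace_id"]]["logs"].append(entry)
    return dict(by_trace)


def explain_slow_traces(spans, logs, threshold_ms=500):
    """For each slow trace, return the error messages logged during it."""
    explanations = {}
    for trace_id, data in correlate(spans, logs).items():
        slowest = max((s["duration_ms"] for s in data["spans"]), default=0)
        if slowest >= threshold_ms:
            explanations[trace_id] = [
                entry["msg"] for entry in data["logs"] if entry["level"] == "ERROR"
            ]
    return explanations


slow = explain_slow_traces(spans, logs)
print(slow)
```

Neither data set answers the question on its own: the spans show which request was slow, and the logs show what went wrong while it was being served.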

Full-stack observability platforms provide a single source of truth for the state of your system. Rather than logging in to each component of a distributed system to retrieve logs and traces, view metrics, or examine network packets, all the information you need is available from a single location. This saves time and provides you with better context when investigating an issue so that you can get to the source of the problem more quickly.

Armed with a comprehensive picture of how the system behaves at all layers of the software stack, operations teams, software developers, and security specialists can all benefit from these insights. Full-stack observability makes it easier for these teams to detect and troubleshoot production issues and to monitor the impact of changes as they are deployed.

Better visibility of the system’s behavior also reduces the risk associated with trialing and adopting new technologies and platforms, enabling enterprises to move fast without compromising performance, reliability, or security. Finally, having a shared perspective helps to break down silos and encourages the cross-team collaboration that’s essential to a DevSecOps approach.