Scrum metrics and data observability are essential indicators of your team’s progress. In an agile team, they help you understand the pace and progress of every sprint and ascertain whether you’re on track for timely delivery.

Although scrum metrics are essential, they are only one facet of the delivery process. They tell you whether you’re on track, but how do you ensure that there are no roadblocks during development?

That’s precisely where observability helps. Observability gives you a granular overview of your application. It monitors and records performance logs continuously, helping you isolate and fix issues before scrum metrics are affected. Using observability makes your scrum team more efficient — let’s see how.

Scrum Metrics: The Current Issues & How Observability Helps

Problem #1: Finding Defects in Distributed Systems

Imagine a scenario where you’ve just pushed new code into production and see an error. If it’s a single application, you only have to check the logs to pinpoint exactly where the issue lies. However, when you add distributed systems and cloud services to the mix, the cause of the defect can range from a server outage to a cloud service being down.

Cue the brain-racking deep dives into logs and traces on multiple servers, with everyone from developers to DevOps engineers running their own tests and mock runs to figure out the what and where.

This wastes time because inspecting each source individually is like hoping to hit the jackpot: you’re lucky if one of you finds the cause early on, and otherwise you might be clueless for days. The longer this goes on, the more your scrum metrics suffer, which brings more pressure from clients and product managers.

How observability fixes it:

With observability, you do not need to comb through individual logs and traces — you can track your applications and view real-time data in a centralized dashboard.

Finding where the problem lies becomes as simple as following a system trace to see which request is breaking. Since observability tools are configured across your entire system, starting that process takes only a few clicks. Further, application observability metrics help you understand your system uptime, response time, number of requests per second, and how much processing power or memory an application uses, which helps you find the problem quickly.

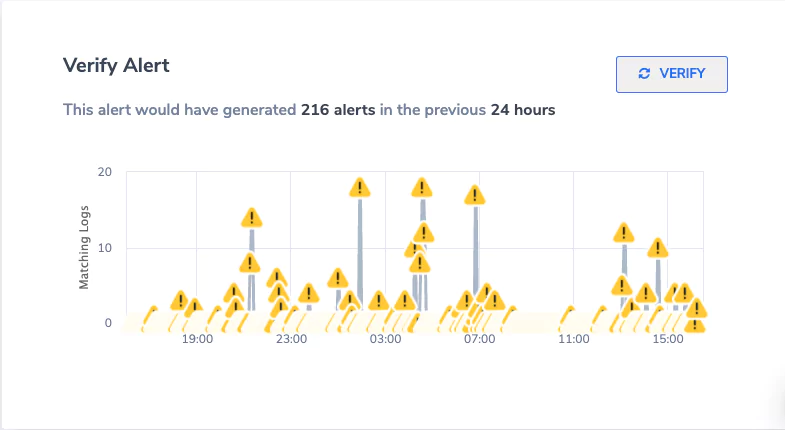

Thus, you mitigate downtime risks and can even solve issues proactively through triggered alerts.
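To make these metrics concrete, here is a minimal Python sketch that derives requests per second, average response time, and error rate per service from request logs, then flags services that cross an alert threshold. The `RequestLog` fields, the `summarize` and `services_to_alert` helpers, and the 5% threshold are illustrative assumptions, not the API of any particular observability tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestLog:
    # Hypothetical log record; real platforms expose richer fields.
    timestamp: float    # seconds since some reference point
    service: str
    duration_ms: float
    status: int         # HTTP status code


def summarize(logs: list[RequestLog]) -> dict[str, dict[str, float]]:
    """Group logs by service and compute simple health metrics."""
    by_service: dict[str, list[RequestLog]] = {}
    for entry in logs:
        by_service.setdefault(entry.service, []).append(entry)

    summary: dict[str, dict[str, float]] = {}
    for service, entries in by_service.items():
        times = [e.timestamp for e in entries]
        window = max(times) - min(times)
        server_errors = sum(1 for e in entries if e.status >= 500)
        summary[service] = {
            # With a zero-length window, fall back to the raw request count.
            "requests_per_second": (
                len(entries) / window if window > 0 else float(len(entries))
            ),
            "avg_response_ms": mean(e.duration_ms for e in entries),
            "error_rate": server_errors / len(entries),
        }
    return summary


def services_to_alert(
    summary: dict[str, dict[str, float]], max_error_rate: float = 0.05
) -> list[str]:
    """Return services whose error rate exceeds the (assumed) threshold."""
    return sorted(
        name for name, m in summary.items() if m["error_rate"] > max_error_rate
    )
```

Fed with logs from several services, `services_to_alert(summarize(logs))` would return only the services whose share of 5xx responses exceeds the threshold, which is the kind of signal a triggered alert acts on.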

Problem #2: Hierarchy & Information Sharing

Working in teams is more than distributing tasks and ensuring their timely completion. Information sharing and prompt communication across the ladder are critical to reducing the mean response time to threats. However, if your team prefers individual-level monitoring and problem-solving, they may not readily share or access information as and when required.

This could create a siloed workplace where different teams use different analytics and monitoring tools. With each tool serving a single purpose, no unified metric data is available, and information sharing is limited.

How observability fixes it:

Observability introduces centralized dashboards that enable teams to work on issues collaboratively. You can access pre-formatted, pre-grouped, and segregated logs and traces that indicate defects. A centralized view of these logs simplifies data sharing and coordination within the team, fostering problem-solving through quick communication and teamwork.

Log management tools such as Coralogix’s full-stack observability platform can generate intelligent reports that help you improve scrum metrics and non-scrum KPIs. Standardizing log formats and traces helps ease the defect and threat-finding process. And your teams can directly access metrics that showcase application health across the organization without compromising on the security of your data.

Let’s look at standard scrum metrics and how observability helps them.

Scrum Metrics & How Observability Improves Them

Sprint Burndown

Sprint burndown is one of the most common scrum metrics. It gives information about the tasks completed and tasks remaining. This helps identify whether the team is on track for each sprint.
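As a rough illustration of what a burndown chart plots, the Python sketch below compares the ideal remaining work with the actual remaining work for each day of a sprint. The function names and the use of story points are assumptions made for this example; teams may track hours or task counts instead.

```python
def ideal_burndown(total_points: float, sprint_days: int) -> list[float]:
    """Ideal remaining work from day 0 through the last sprint day."""
    return [
        total_points - total_points * day / sprint_days
        for day in range(sprint_days + 1)
    ]


def actual_burndown(total_points: float, completed_per_day: list[float]) -> list[float]:
    """Remaining work after each day, given the points completed that day."""
    remaining = [total_points]
    for done in completed_per_day:
        remaining.append(remaining[-1] - done)
    return remaining


def days_behind_schedule(ideal: list[float], actual: list[float]) -> list[int]:
    """Day indexes where more work remains than the ideal line allows."""
    return [
        day
        for day, (planned, real) in enumerate(zip(ideal, actual))
        if real > planned
    ]
```

For a hypothetical 40-point, 4-day sprint, `days_behind_schedule(ideal_burndown(40, 4), actual_burndown(40, [5, 10, 15]))` flags the days on which the team had more work left than planned.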

As the sprints go on and the scheduled production dates draw close, the code gets more complicated and harder to maintain. More importantly, it becomes harder to understand for those who were not involved in developing a given part.

Observability enables fixing the issues early on. With observability, you get a centralized, real-time logging and tracing system that can predictively analyze and group errors, defects, or vulnerabilities. Metrics allow you to monitor your applications in real time and get a holistic view of system performance.

Thus, with significant defects caught beforehand, the effect on your sprint burndown graph is minimal. The result is a more balanced sprint burndown graph that shows the exact work done, including fixing defects.

Team Satisfaction

Observability enables easy collaboration and information sharing, and gives an overview of how the system performs in real time. A comprehensive, centralized observability platform allows developers to analyze logs quickly, fix defects easily, and skip the headache of monitoring applications by hand. They can then focus on the job they signed up for: development.

Software Quality

Not all metrics in scrum are easy to measure, and software quality is one of the hardest. The definition is subjective; the closest measurable proxy is the escaped defects metric. That’s perhaps why not everyone tracks it, but at the end of the day, a software engineering team’s goal is to build high-quality software.

The quicker you find and squash code bugs and vulnerability threats, the easier it gets to improve overall code quality. You’ll have more time to enhance rather than fix and focus more on writing “good code” instead of “code that works.”

Escaped Defects

Have you ever deployed code that works flawlessly in pre-production but breaks immediately in production? Don’t worry — we’ve all been there!

That’s precisely why the escaped defects metric is a core scrum metric. It counts the defects that slip past testing and surface only after release, which gives you a good overview of your software’s performance in production.

Implementing observability can directly improve this metric. A good log management and analytics platform like Coralogix can help you identify most bugs proactively through real-time reporting and alerting systems. This reduces the number of defects you may have missed, thus reducing the escaped defects metric.

You benefit from improved system performance and a reduced overall cost and technical debt.
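One simple way to track escaped defects is to tag each defect with the environment where it was found and count those first seen in production. The sketch below assumes a hypothetical `Defect` record and environment labels; it is not tied to any specific issue tracker or to Coralogix.

```python
from dataclasses import dataclass


@dataclass
class Defect:
    # Hypothetical record; adapt the fields to your issue tracker.
    defect_id: str
    found_in: str  # assumed labels: "dev", "staging", or "production"


def escaped_defects(defects: list[Defect]) -> list[Defect]:
    """Defects that were first discovered in production."""
    return [d for d in defects if d.found_in == "production"]


def escape_rate(defects: list[Defect]) -> float:
    """Share of all defects that escaped to production (0.0 if there are none)."""
    if not defects:
        return 0.0
    return len(escaped_defects(defects)) / len(defects)
```

Tracking `escape_rate` per sprint or per release shows whether earlier detection is actually keeping defects out of production.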

Defect Density

Defect density goes hand-in-hand with escaped defects, especially for larger projects. It measures the number of defects relative to the size of the project.

You could measure this for a class, a package, or a set of classes or packages in a deployment. Observability helps here as well. Since you can monitor and generate centralized logs, you can analyze defect density and dive deeper into the “why.” Also, using application metrics, you can see how each application performs on its own and how efficiently your system works when they are integrated.

Typically, this metric is used to study irregular defects and answer questions like “Are some parts of the code particularly defective?” or “Are some areas out of analysis coverage?” With observability, you can also answer questions like “What’s causing so many defects in these areas?” because defect density and observability complement each other.
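As a worked example, the sketch below computes defect density per package and ranks packages from most to least defective. Measuring size in thousands of lines of code (KLOC) is a common convention assumed here, and the function names and input format are hypothetical.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC), an assumed size unit."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def rank_by_density(packages: dict[str, tuple[int, int]]) -> list[tuple[str, float]]:
    """Rank packages by density; values are (defect_count, lines_of_code)."""
    densities = {
        name: defect_density(defects, loc)
        for name, (defects, loc) in packages.items()
    }
    # Highest density first, so the most defect-prone areas lead the list.
    return sorted(densities.items(), key=lambda item: item[1], reverse=True)
```

The packages at the top of the ranking are the ones where centralized logs and traces are most worth examining to find out why defects cluster there.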

Use Observability To Enhance Scrum Metrics

Monitoring scrum KPIs can help developers make better-informed decisions. But these metrics can be hard to track when developing and deploying modern distributed systems and microservices. Often, scrum metrics suffer because of preventable bugs and coordination issues across teams.

Introducing full observability to your stack can revamp the complete development process, significantly improving many crucial scrum metrics. You get a clear understanding of your application health at all times and reduce costs while boosting team morale. If you’re ready to harness the power of observability, contact Coralogix today!