
This week, the world stopped for a few hours as Google users experienced an outage on a massive scale. The outage affected all services that require Google account authentication, including Google Cloud Platform (Cloud Console, Cloud Storage, BigQuery, etc.), Google Workspace (Gmail, Calendar, Docs, Drive, etc.) and Google Classroom.

With so many platforms affected, this outage was never going to pass unnoticed. In fact, users were reporting it before it even appeared on Google’s Cloud Status Dashboard. The Wall Street Journal went so far as to call it the modern “snow day,” with schools cancelling classes for the day because so many students are learning remotely.

This isn’t the first time software we rely on has gone down (and it certainly won’t be the last), so let’s quickly review the lessons we can all learn from this most recent Google outage.

We’ll start with Google’s incident report, which is, in a word, lacking. It doesn’t contain enough detail to really understand the sequence of events that led to this issue, and the information it does include is questionable at best.

First, the report is inconsistent about when the incident occurred. The Preliminary Incident Statement says the incident started at 03:45 PST, lasted 50 minutes, and ended at 04:35 PST. But the incident summary lists the start time as 04:07 PST and the end time as 06:23 PST, which puts the total incident time at 2 hours and 16 minutes.

On top of that, the first entry in the report wasn’t added until 05:34:

Google Cloud services are experiencing issues and we have an other update at 5:30 PST

And the following entry at 06:23 claimed “as of 04:32 PST the affected system was restored and all services recovered shortly afterwards.”

In other words, according to Google’s own timeline, the incident was first reported almost 2 hours after it began and about an hour after it had already been resolved. Even then, the update said nothing about what had happened.
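You can check the timeline arithmetic by computing the gaps between the timestamps in the report. The Python sketch below does this. It assumes all times fall on the same day and are in PST, and `minutes_between` is a hypothetical helper written for this illustration.

```python
from datetime import datetime


def minutes_between(start: str, end: str) -> int:
    """Whole minutes between two same-day HH:MM timestamps (assumed PST)."""
    fmt = "%H:%M"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds() // 60)


# Timestamps quoted from Google's incident report.
statement_duration = minutes_between("03:45", "04:35")  # Preliminary Incident Statement
summary_duration = minutes_between("04:07", "06:23")    # incident summary
first_update_delay = minutes_between("03:45", "05:34")  # reported start -> first entry
after_restoration = minutes_between("04:32", "05:34")   # "restored" -> first entry

print(statement_duration, summary_duration, first_update_delay, after_restoration)
```

The two reported durations (50 versus 136 minutes) disagree. The first public entry came more than an hour after the system was reportedly restored.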

This raises a ton of questions about Google’s incident response. What is causing these inconsistencies? Is incident response and reporting a manual process? Was the initial incident time altered to avoid exceeding a one-hour SLA? And, as a side note, are the grammatical errors in the original statement a result of the pressure on whoever was updating the report?

At 12:17 PST (8 and a half hours after the incident reportedly began), Google published the Preliminary Incident Statement, which included the following information:

The root cause was an issue in our automated quota management system which reduced capacity for Google’s central identity management system, causing it to return errors globally.

It may be unreasonable to expect a corporation as big and powerful as Google to provide transparency into its error resolution process, but we should all take notice and learn as much as we can from this incident.

The first lesson is more of a warning, and it underscores the importance of the lessons that follow. Back in 2013, VentureBeat reported that a 5-minute outage cost Google more than half a million dollars in revenue. As in this most recent outage, all of Google’s services were affected, including YouTube, Drive, and Gmail.

In the past 7 years, Google’s reported quarterly revenue has more than tripled, growing by about 226% (from $14.1 billion in Q2 2013 to $46.02 billion in Q3 2020). If the revenue lost per minute of downtime has grown proportionately, this most recent outage could have cost Google roughly $47 million. That figure uses a conservative $350,000 per minute over the 2 hours and 16 minutes given in the incident summary.

The bottom line is that we need to do everything we can to avoid prolonged outages. If Google had resolved this issue within 5 minutes, its revenue loss would have been less than $2 million.
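You can reproduce the back-of-the-envelope estimate above with a few lines of Python. The sketch uses only the figures quoted in this post. It makes the same assumption as the text: revenue lost per minute of downtime scales linearly with quarterly revenue. The constant and function names are illustrative.

```python
# Back-of-the-envelope downtime cost, using only the figures quoted in this post.
# Assumption (same as the text): revenue lost per minute scales linearly with revenue.

REVENUE_Q2_2013 = 14.1e9    # USD, Google quarterly revenue, Q2 2013
REVENUE_Q3_2020 = 46.02e9   # USD, Google quarterly revenue, Q3 2020
COST_PER_MINUTE = 350_000   # USD, the conservative per-minute estimate used above

growth_ratio = REVENUE_Q3_2020 / REVENUE_Q2_2013  # roughly 3.26x
percent_increase = (growth_ratio - 1) * 100       # roughly 226%

# 2013 lower bound: "more than half a million dollars" for a 5-minute outage.
cost_per_minute_2013 = 500_000 / 5
scaled_lower_bound = cost_per_minute_2013 * growth_ratio  # roughly $326,000 per minute


def outage_cost(minutes: int, cost_per_minute: float = COST_PER_MINUTE) -> float:
    """Estimated revenue lost during an outage of the given length."""
    return minutes * cost_per_minute


print(f"Revenue growth: {growth_ratio:.2f}x (+{percent_increase:.0f}%)")
print(f"Scaled 2013 lower bound: ${scaled_lower_bound:,.0f} per minute")
print(f"136-minute outage (2 h 16 min): ${outage_cost(136):,.0f}")
print(f"5-minute outage: ${outage_cost(5):,.0f}")
```

Scaling the 2013 lower bound by the revenue growth gives about $326,000 per minute. Because the 2013 loss was *more than* half a million dollars, $350,000 per minute is in line with that floor.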

We don’t know what happened behind the scenes at Google during this latest outage. What we do know is that we need to find out about any critical errors in our systems before our customers do.

Part of this comes from automated alerting, but that alone isn’t enough. Our alerting should be dynamic and tuned to reduce noise as much as possible. If our alerts contain too many false positives (i.e., if our teams are constantly bombarded by meaningless noise), real issues are likely to slip through the cracks.
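One common way to make alerting dynamic is to compare each new measurement against a rolling baseline instead of a fixed threshold, and to require a breach to persist before anyone gets paged. The Python sketch below illustrates that idea. Everything in it is an illustrative assumption, not how Google or any particular product implements alerting: the class name `DynamicThresholdAlert`, the per-interval error counts, the 3-sigma band, and the three-interval persistence rule.

```python
from collections import deque
from statistics import mean, pstdev


class DynamicThresholdAlert:
    """Hypothetical sketch: flag sustained error spikes relative to a rolling baseline."""

    def __init__(self, window: int = 30, k: float = 3.0,
                 min_consecutive: int = 3, min_history: int = 5):
        self.history = deque(maxlen=window)  # recent "normal" per-interval error counts
        self.k = k                           # band width in standard deviations
        self.min_consecutive = min_consecutive
        self.min_history = min_history
        self.breaches = 0                    # current run of consecutive breaches

    def observe(self, errors: int) -> bool:
        """Record one interval's error count; return True only when an alert should fire."""
        if len(self.history) < self.min_history:
            self.history.append(errors)      # still building a baseline
            return False
        baseline = mean(self.history)
        spread = max(pstdev(self.history), 1.0)  # floor avoids a zero-width band on flat data
        if errors > baseline + self.k * spread:
            # Anomalous samples stay out of the baseline so a spike can't mask itself.
            self.breaches += 1
        else:
            self.breaches = 0
            self.history.append(errors)
        # Fire exactly once per sustained spike; single-interval blips never fire.
        return self.breaches == self.min_consecutive
```

A one-off blip resets as soon as the next interval looks normal. A sustained spike fires once on its third consecutive breach and stays quiet after that. The trade-off is that keeping anomalies out of the baseline means a permanent level shift would need a manual reset.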

Google listed the root cause in this incident as a problem with their automated quota management system. Even without a deeper understanding of how the system works, I’m sure we can all still identify with the issue of exceeded data quotas.

We can all do better when it comes to understanding and handling our system data.

Log data, for example, is most commonly used to identify and resolve system or application errors, but most tools for managing and analyzing it don’t provide the insights we need, when we need them.

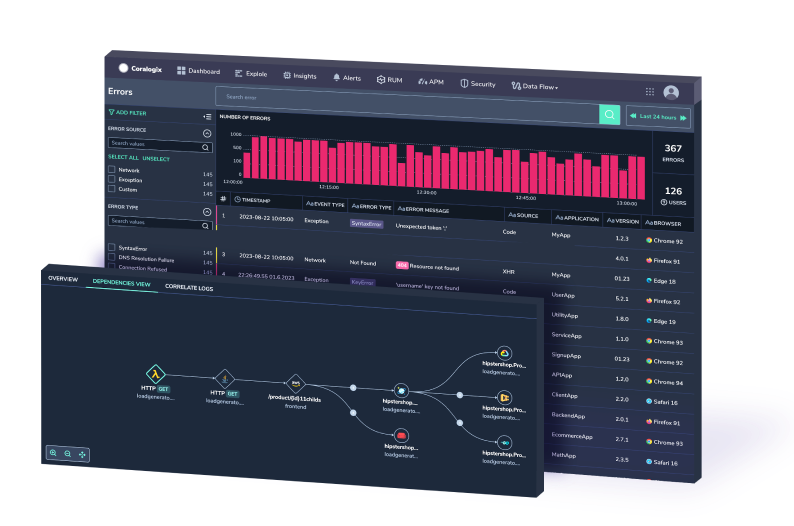

Unlike other logging solutions, Coralogix is a modern observability platform that analyzes log data in real time, before it’s indexed. This allows teams to gain deeper insights into their application and system health immediately.